In the race toward large models, technology giants are training ever larger models while academia looks for ways to optimize them. Recently, methods for making better use of limited computing power have reached a new level.

Large language models (LLMs) have revolutionized the field of natural language processing (NLP), demonstrating remarkable abilities such as emergence and grokking. However, building a model with a degree of general capability requires billions of parameters, which greatly raises the threshold for NLP research. Tuning an LLM usually requires expensive GPU resources, such as a machine with 8×80GB GPUs, which makes it difficult for small laboratories and companies to participate in research in this field.

Recently, researchers have been studying parameter-efficient fine-tuning (PEFT) techniques, such as LoRA and Prefix-tuning, which offer a way to tune LLMs with limited resources. However, these methods do not provide a practical solution for full-parameter fine-tuning, which is recognized as a more powerful approach than parameter-efficient fine-tuning.

In the paper "Full Parameter Fine-tuning for Large Language Models with Limited Resources" submitted by Qiu Xipeng's team at Fudan University last week, the researchers proposed a new optimizer, LOw-Memory Optimization (LOMO).

By integrating LOMO with existing memory-saving techniques, the new approach reduces memory usage to 10.8% of that of the standard approach (the DeepSpeed solution). As a result, it enables full-parameter fine-tuning of a 65B model on a single machine with 8×RTX 3090 GPUs, each with 24GB of memory.

Paper link: https://arxiv.org/abs/2306.09782

In this work, the authors analyzed four components of memory usage in LLM training (activations, optimizer states, gradient tensors, and parameters) and optimized the training process in three respects.

The new technique brings memory usage down to that of the parameters plus the activations and the largest single gradient tensor. This pushes the memory usage of full-parameter fine-tuning to the extreme, making it roughly equivalent to that of inference, since the memory footprint of a forward-plus-backward pass cannot be lower than that of the forward pass alone. It is worth noting that saving memory with LOMO does not alter the fine-tuning process, because the parameter update is still equivalent to SGD.

The study evaluated the memory usage and throughput of LOMO and showed that, with LOMO, researchers can train a 65B-parameter model on 8 RTX 3090 GPUs. Furthermore, to verify the performance of LOMO on downstream tasks, the authors applied it to tune all parameters of LLMs on the SuperGLUE collection of datasets. The results demonstrate the effectiveness of LOMO for optimizing LLMs with billions of parameters.

The method section of the paper introduces LOMO (LOw-Memory Optimization) in detail. A gradient tensor holds the gradient of a parameter tensor and has the same size as that parameter, so storing gradients incurs a large memory overhead. Existing deep learning frameworks such as PyTorch store gradient tensors for all parameters. There are two reasons for storing them: computing the optimizer state and normalizing the gradients.

Since this study adopts SGD as the optimizer, there is no gradient-dependent optimizer state, and the authors propose alternatives to gradient normalization.

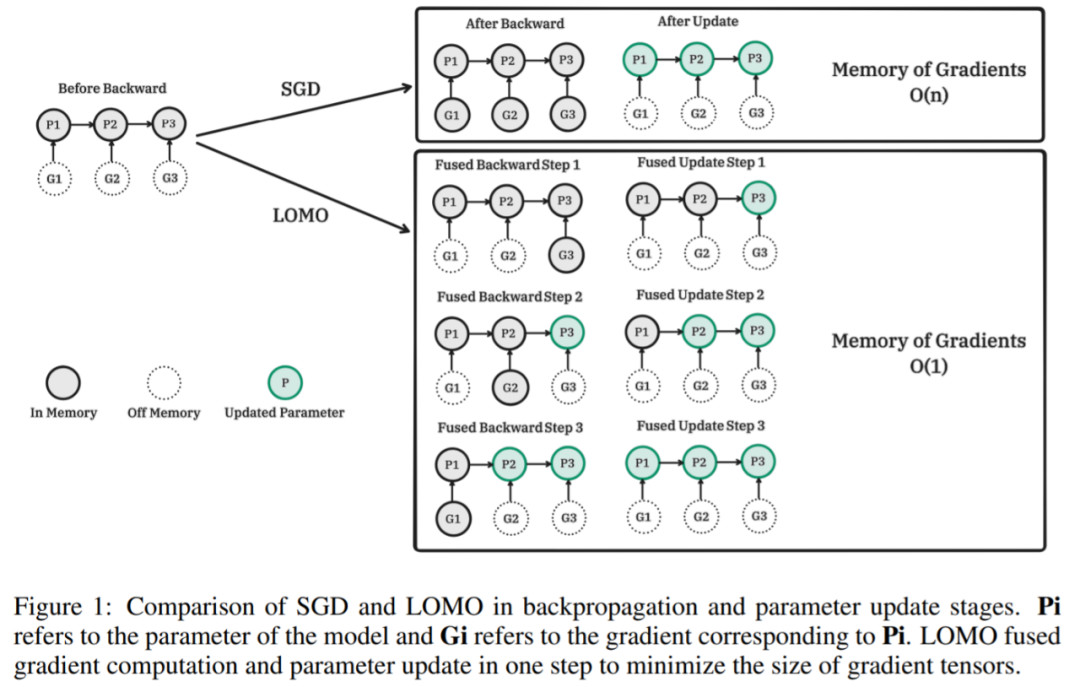

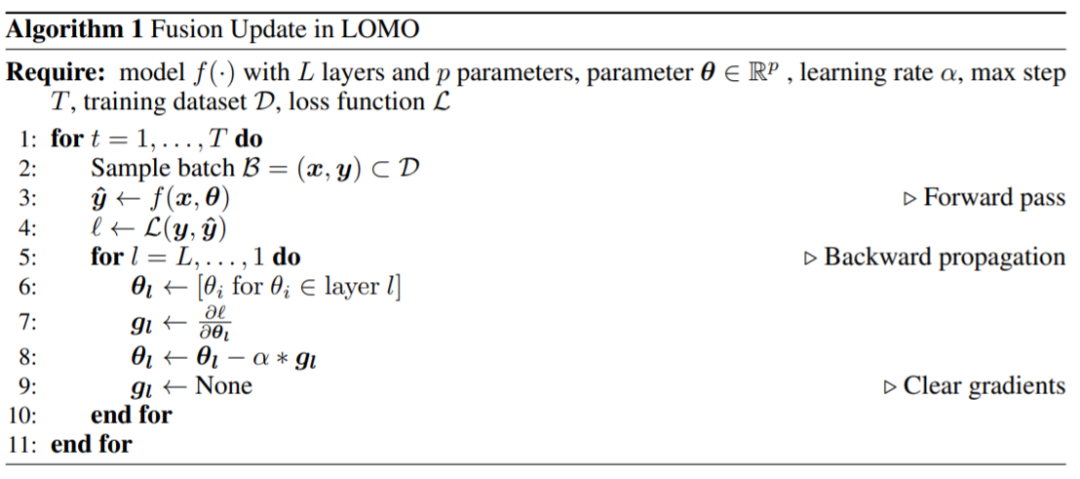

They proposed LOMO, as shown in Algorithm 1, which fuses gradient calculation and parameter update in one step, thus avoiding the storage of gradient tensors.

The figure compares SGD and LOMO in the backpropagation and parameter update stages. Pi is a model parameter, and Gi is the gradient corresponding to Pi. LOMO fuses gradient calculation and parameter update into a single step so that as few gradient tensors as possible are held in memory.
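To make the difference concrete, here is a minimal pure-Python sketch on a toy scalar chain `a_i = w_i * a_{i-1}` with a squared-error loss. The model, the function names, and the loss are illustrative assumptions and do not come from the paper. It shows that updating each weight as soon as its gradient is known yields the same result as ordinary SGD, provided the backward signal is propagated with the old weight before that weight is overwritten.

```python
def forward(weights, x):
    """Return activations [a_0, ..., a_n] for the chain a_i = w_i * a_{i-1}."""
    acts = [x]
    for w in weights:
        acts.append(w * acts[-1])
    return acts


def two_step_sgd(weights, x, target, lr):
    """Ordinary SGD: keep every gradient, then update all weights."""
    acts = forward(weights, x)
    delta = acts[-1] - target            # dL/da_n for L = 0.5 * (a_n - target)^2
    grads = [0.0] * len(weights)
    for i in reversed(range(len(weights))):
        grads[i] = delta * acts[i]       # gradient of the loss w.r.t. weights[i]
        delta = delta * weights[i]       # backward signal for the previous layer
    new_weights = [w - lr * g for w, g in zip(weights, grads)]
    return new_weights, len(grads)       # all gradients were held at once


def fused_update(weights, x, target, lr):
    """Fused variant: apply each gradient immediately and discard it."""
    weights = list(weights)              # do not modify the caller's list
    acts = forward(weights, x)
    delta = acts[-1] - target
    for i in reversed(range(len(weights))):
        grad = delta * acts[i]           # the only gradient alive right now
        delta = delta * weights[i]       # use the OLD weight before overwriting it
        weights[i] -= lr * grad          # update in place; grad is then dropped
    return weights, 1                    # at most one gradient was held


w0 = [0.5, -1.2, 0.8]
sgd_weights, sgd_held = two_step_sgd(w0, x=2.0, target=1.0, lr=0.1)
fused_weights, fused_held = fused_update(w0, x=2.0, target=1.0, lr=0.1)
print(sgd_weights == fused_weights, sgd_held, fused_held)
```

In this toy setting the number of gradients held at once drops from one per parameter to one in total, which is the saving the article describes.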

Pseudocode for the LOMO algorithm:

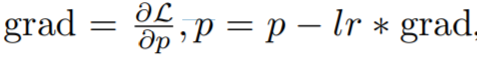

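Vanilla gradient descent can be written as $\mathrm{grad} = \frac{\partial \mathcal{L}}{\partial p}$, $p = p - lr \cdot \mathrm{grad}$, which is a two-step process: first calculate the gradient, then update the parameters. The fused version is $p = p - lr \cdot \frac{\partial \mathcal{L}}{\partial p}$.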

The key idea of this research is to update each parameter as soon as its gradient is calculated, so that the gradient tensor does not have to be kept in memory. This can be achieved by injecting hook functions into backpropagation. PyTorch provides APIs for injecting hook functions, but exact immediate updates cannot be implemented with the current API. Instead, the study keeps the gradient of at most one parameter in memory and updates the parameters one by one as backpropagation proceeds. This reduces the memory used by gradients from the gradients of all parameters to the gradient of a single parameter.
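The hook mechanism can be sketched in PyTorch roughly as follows. This is an illustrative sketch, not the authors' implementation: the helper name `attach_fused_update` and the toy model are hypothetical, and it assumes plain SGD with no gradient normalization or mixed precision. A hook registered with `Tensor.register_hook` fires just before that parameter's own gradient is written to `.grad`, so each hook applies and frees the gradient left behind by the previously finished parameter.

```python
import copy

import torch
from torch import nn


def attach_fused_update(model: nn.Module, lr: float):
    """Register hooks that apply SGD updates during backward (hypothetical helper).

    Returns a `flush` function that must be called once after `backward()`
    to apply the gradient of the last parameter reached.
    """
    params = [p for p in model.parameters() if p.requires_grad]

    def flush():
        # Apply and free every gradient that is currently stored.
        with torch.no_grad():
            for p in params:
                if p.grad is not None:
                    p.add_(p.grad, alpha=-lr)  # p <- p - lr * grad
                    p.grad = None              # release the gradient tensor

    def hook(grad):
        # Runs before this parameter's own gradient is stored in .grad, so
        # only gradients of previously finished parameters are flushed here.
        flush()
        return grad

    for p in params:
        p.register_hook(hook)
    return flush


torch.manual_seed(0)
model = nn.Sequential(nn.Linear(4, 8), nn.Tanh(), nn.Linear(8, 1))
reference = copy.deepcopy(model)  # updated with ordinary SGD for comparison
x, y = torch.randn(16, 4), torch.randn(16, 1)

# Fused path: parameters are updated while backward() is still running.
flush = attach_fused_update(model, lr=0.1)
nn.functional.mse_loss(model(x), y).backward()
flush()  # apply the last remaining gradient

# Reference path: backward first, then a separate optimizer step.
opt = torch.optim.SGD(reference.parameters(), lr=0.1)
nn.functional.mse_loss(reference(x), y).backward()
opt.step()
```

After one step, `model` and `reference` should hold matching weights, while the fused path never keeps more than one stored `.grad` tensor at a time.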

Most of the LOMO memory usage is consistent with the memory usage of parameter-efficient fine-tuning methods, indicating that combining LOMO with these methods results in only a slight increase in gradient memory usage. This allows more parameters to be tuned for the PEFT method.

In the experiments, the researchers evaluated the proposed method in three respects: memory usage, throughput, and downstream performance. Unless otherwise stated, all experiments used LLaMA models ranging from 7B to 65B.

Memory usage

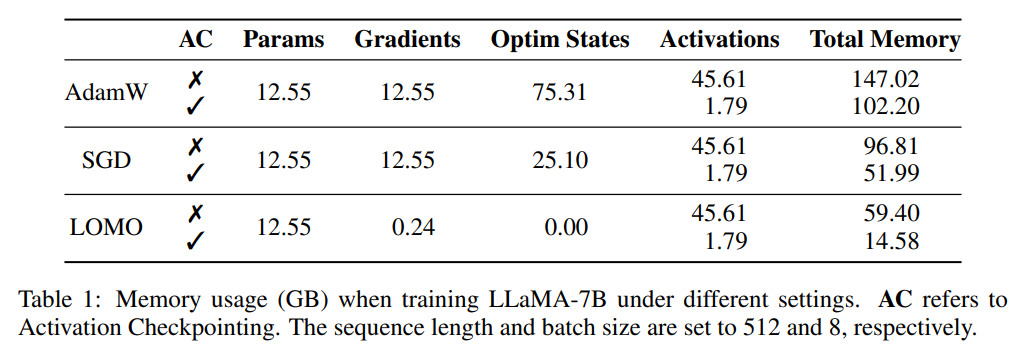

The researchers first analyzed the memory usage of model states and activations. As shown in Table 1, when training the LLaMA-7B model, switching from the AdamW optimizer to LOMO reduces memory usage significantly, from 102.20GB to 14.58GB; compared with SGD, memory usage drops from 51.99GB to 14.58GB. The reduction comes primarily from the lower memory requirements for gradients and optimizer states. As a result, during training the memory is mostly occupied by the parameters, which is comparable to the memory usage during inference.

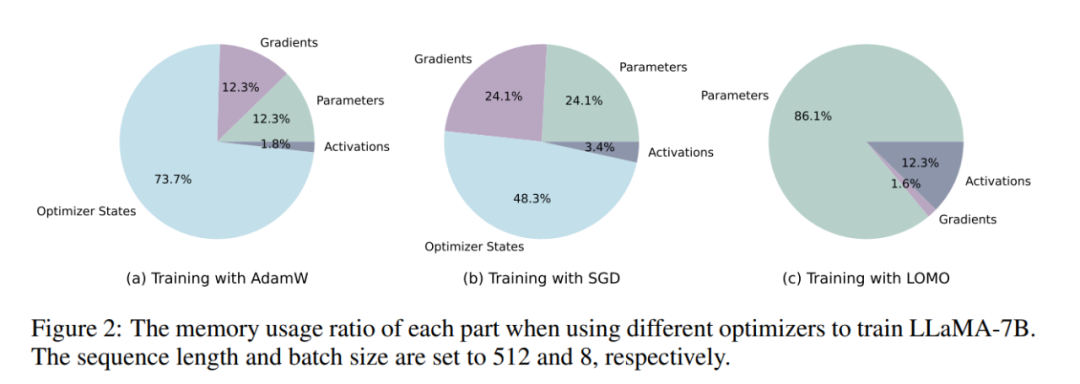

As shown in Figure 2, when the AdamW optimizer is used to train LLaMA-7B, a considerable proportion of memory (73.7%) is allocated to the optimizer state. Replacing AdamW with SGD reduces the share of memory occupied by the optimizer state, thereby lowering GPU memory usage (from 102.20GB to 51.99GB). With LOMO, the parameter update and the backward pass are merged into a single step, further eliminating the memory required for the optimizer state.
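The reported numbers can be sanity-checked with a back-of-the-envelope estimate. The sketch below rests on assumptions the article does not state: a LLaMA-7B parameter count of about 6.74 billion, 16-bit weights, an AdamW state of 12 bytes per parameter (full-precision weight copy, momentum, and variance), an SGD state of 4 bytes per parameter (full-precision weight copy only), and "GB" meaning 2^30 bytes. Under these assumptions the estimate lines up with the 73.7% share and with the gap between the AdamW and SGD totals.

```python
GIB = 2 ** 30
N_PARAMS = 6_738_415_616  # assumed LLaMA-7B parameter count


def gib(bytes_per_param: float) -> float:
    """Memory in GiB for a tensor set using `bytes_per_param` per parameter."""
    return N_PARAMS * bytes_per_param / GIB


params_fp16 = gib(2)    # assumed 16-bit model weights
adamw_state = gib(12)   # assumed fp32 weight copy + momentum + variance
sgd_state = gib(4)      # assumed fp32 weight copy only

# Totals quoted in the article for LLaMA-7B.
ADAMW_TOTAL, SGD_TOTAL = 102.20, 51.99

adamw_share = adamw_state / ADAMW_TOTAL     # article reports 73.7%
estimated_gap = adamw_state - sgd_state     # dropping momentum and variance
reported_gap = ADAMW_TOTAL - SGD_TOTAL

print(f"weights: {params_fp16:.2f} GiB")
print(f"AdamW state share: {adamw_share:.1%}")
print(f"estimated gap: {estimated_gap:.2f} GiB, reported gap: {reported_gap:.2f} GB")
```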

Throughput

The researchers compared the throughput of LOMO, AdamW, and SGD. Experiments were conducted on a server equipped with 8 RTX 3090 GPUs.

For the 7B model, the throughput of LOMO shows a significant advantage, exceeding AdamW and SGD by about 11 times. This significant improvement can be attributed to LOMO's ability to train the 7B model on a single GPU, which reduces inter-GPU communication overhead. The slightly higher throughput of SGD compared to AdamW can be attributed to the fact that SGD excludes the calculation of momentum and variance.

The 13B model cannot be trained with AdamW on the available 8 RTX 3090 GPUs because of memory limitations. In this setting, model parallelism becomes necessary for LOMO, yet LOMO still outperforms SGD in throughput. This advantage is attributed to LOMO's memory efficiency: only two GPUs are required to train the model with the same settings, which reduces communication costs and improves throughput. In addition, SGD ran out of memory (OOM) on 8 RTX 3090 GPUs when training the 30B model, while LOMO performed well with only 4 GPUs.

Finally, the researchers successfully trained the 65B model on 8 RTX 3090 GPUs, achieving a throughput of 4.93 TGS (tokens per GPU per second). With this server configuration and LOMO, training the model on 1000 samples, each containing 512 tokens, takes approximately 3.6 hours.
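The 3.6-hour figure follows directly from the throughput, reading TGS as tokens processed per GPU per second. A small check, with an illustrative function name that is not from the paper:

```python
def training_hours(num_samples: int, tokens_per_sample: int,
                   tgs: float, num_gpus: int) -> float:
    """Estimate wall-clock hours from throughput in tokens per GPU per second."""
    total_tokens = num_samples * tokens_per_sample
    tokens_per_second = tgs * num_gpus   # aggregate throughput of the server
    return total_tokens / tokens_per_second / 3600


hours = training_hours(num_samples=1000, tokens_per_sample=512,
                       tgs=4.93, num_gpus=8)
print(f"{hours:.1f} hours")  # prints "3.6 hours"
```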

Downstream Performance

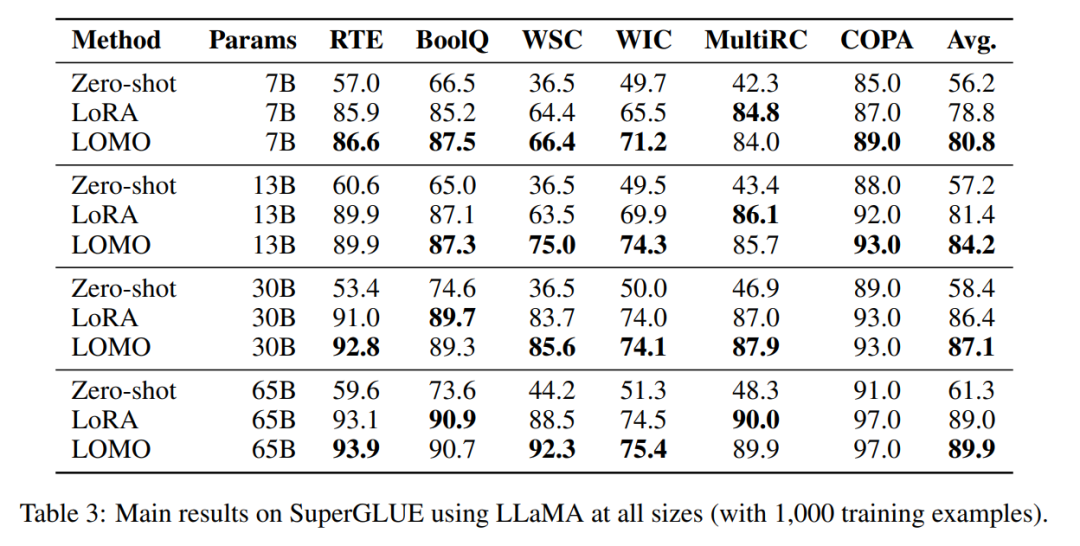

To evaluate the effectiveness of LOMO in fine-tuning large language models, the researchers conducted an extensive series of experiments. They compared LOMO with two other methods: zero-shot, which requires no fine-tuning, and LoRA, a popular parameter-efficient fine-tuning technique.

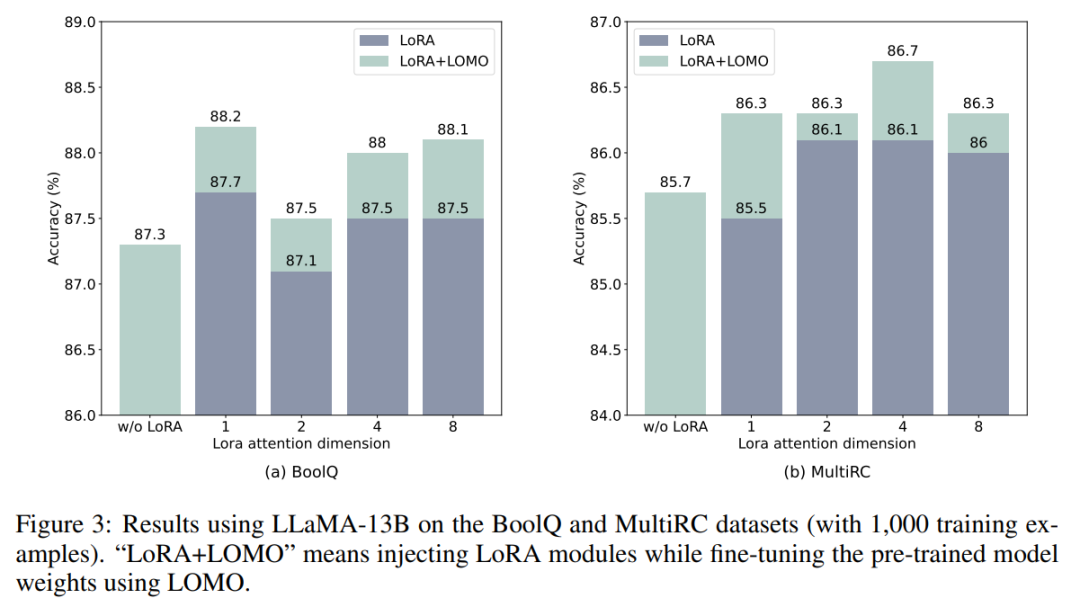

LOMO and LoRA are essentially independent of each other. To verify this statement, the researchers conducted experiments on the BoolQ and MultiRC datasets using LLaMA-13B. The results are shown in Figure 3.

They found that LOMO consistently improved on the performance of LoRA, no matter how high LoRA's own results were. This shows that the fine-tuning approaches taken by LOMO and LoRA are complementary. Specifically, LOMO fine-tunes the weights of the pre-trained model, while LoRA tunes additional modules. LOMO therefore does not hurt the performance of LoRA; instead, it helps tune the model better for downstream tasks.

See the original paper for more details.
