What is a web crawler

Technical SEO can be difficult to understand, but the more you know about it, the better you can optimize your website and reach a larger audience. One tool that plays an important role in SEO is the web crawler.

A web crawler (also known as a web spider) is a robot that searches and indexes content on the Internet. Essentially, web crawlers are responsible for understanding the content on a web page in order to retrieve it when a query is made.

You may be wondering, "Who runs these web crawlers?"

Typically, web crawlers are operated by search engines, each with its own algorithms. Those algorithms tell the crawler how to find relevant information in response to search queries.

A web spider will search (crawl) and categorize all web pages on the Internet that it can find and is told to index. So, if you don't want your page to be found on search engines, you can tell web crawlers not to crawl your page.

To do this, upload a robots.txt file to the root of your website. Essentially, the robots.txt file tells search engine crawlers which pages on your website they may crawl.

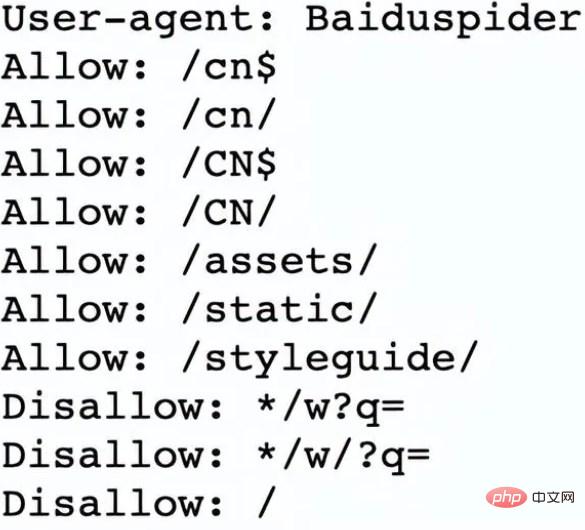

For example, let’s look at Nike.com/robots.txt

Nike uses its robots.txt file to determine which links within its website will be crawled and indexed.

In this section of the file, it specifies that:

The web crawler Baiduspider is allowed to crawl the first seven links

The web crawler Baiduspider is disallowed from crawling the remaining three links

This is beneficial to Nike because some of the company's pages are not meant to appear in search, and the disallowed links do not affect the optimized pages that help it rank in search engines.
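To make the allow/disallow idea concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content, the paths, and the `example.com` URLs are invented for illustration and are not Nike's actual rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for this example
# (this is NOT the real Nike file).
ROBOTS_TXT = """\
User-agent: Baiduspider
Allow: /cn/
Allow: /cn/launch/
Disallow: /checkout/
Disallow: /member/

User-agent: *
Disallow: /checkout/
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Ask whether a given crawler may fetch a given URL.
allowed = parser.can_fetch("Baiduspider", "https://example.com/cn/launch/")
blocked = parser.can_fetch("Baiduspider", "https://example.com/checkout/cart")
print(allowed, blocked)
```

A polite crawler would run a check like `can_fetch` before requesting each URL and skip any URL for which it returns `False`.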

So now we know what web crawlers are, but how do they get their job done? Next, let’s review how web crawlers work.

Web crawlers work by discovering URLs and viewing and classifying web pages. In the process, they find hyperlinks to other web pages and add them to the list of pages to crawl next. Web crawlers are smart and can determine the importance of each web page.
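The discover-and-queue loop described above can be sketched roughly as follows. This is a simplified illustration, not how any particular search engine implements it: the `PAGES` dictionary is a hypothetical in-memory stand-in for the web, so the example runs without network access, and `LinkExtractor` and `crawl` are names made up for this sketch.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical pages standing in for the real web.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a> <a href="/about">About</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


def crawl(seed, fetch):
    """Visit pages breadth-first, queueing each newly discovered link once."""
    frontier = deque([seed])  # URLs waiting to be crawled
    seen = {seed}             # URLs already queued, to avoid repeats
    order = []                # URLs in the order they were crawled
    while frontier:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:      # page could not be fetched
            continue
        order.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return order


visited = crawl("https://example.com/", PAGES.get)
print(visited)
```

In a real crawler, `fetch` would make an HTTP request; passing it in as a parameter keeps the queueing logic separate from the networking.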

Search engine web crawlers will most likely not crawl the entire Internet. Instead, they determine the importance of each web page based on factors including how many other pages link to it, page views, and even brand authority. From this, web crawlers decide which pages to crawl, the order in which to crawl them, and how often to crawl them for updates.
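As a rough illustration of prioritizing by importance, the sketch below orders pages by a single signal: how many other pages link to them. Real search engines combine many signals and do not publish their exact formulas, so this is only an assumption-laden toy. The `LINK_GRAPH` data and the function names are hypothetical.

```python
import heapq
from collections import Counter

# Hypothetical link graph: each page maps to the pages it links to.
LINK_GRAPH = {
    "/": ["/products", "/blog", "/about"],
    "/blog": ["/products", "/blog/post-1"],
    "/blog/post-1": ["/products", "/about"],
    "/about": ["/"],
    "/products": [],
}


def inbound_counts(graph):
    """Count how many other pages link to each page."""
    counts = Counter({page: 0 for page in graph})
    for source, targets in graph.items():
        for target in targets:
            if target != source:  # ignore self-links
                counts[target] += 1
    return counts


def crawl_order(graph):
    """Return pages from most to least linked-to, with ties broken by URL."""
    counts = inbound_counts(graph)
    # heapq is a min-heap, so negate the count to pop the largest first.
    heap = [(-count, page) for page, count in counts.items()]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]


order = crawl_order(LINK_GRAPH)
print(order)
```

The same priority could also drive how often a page is revisited, for example by scheduling heavily linked pages for more frequent recrawls.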

For example, if changes are made to an existing web page, the web crawler will record them and update the index. Or, if you have a new web page, you can ask search engines to crawl your site.

When a web crawler is on your page, it looks at the copy and meta tags, stores that information, and indexes it for search engines to rank for keywords.
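A minimal sketch of that extraction step, using only Python's standard `html.parser`, might look like this. The `PageSummary` class, the `summarize` helper, and the sample HTML are all hypothetical, and a production indexer would store far more than a title, meta tags, and a word list.

```python
from html.parser import HTMLParser


class PageSummary(HTMLParser):
    """Collects the title, named meta tags, and visible words of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self.words = []
        self._in_title = False
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            name, content = attrs.get("name"), attrs.get("content")
            if name and content:
                self.meta[name.lower()] = content
        elif tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif not self._skip_depth:
            self.words.extend(data.lower().split())


SAMPLE_HTML = """
<html><head>
<title>Trail Running Shoes</title>
<meta name="description" content="Lightweight shoes for trail running.">
<meta name="robots" content="index, follow">
</head><body>
<h1>Trail Running Shoes</h1>
<p>Grip and comfort for long runs.</p>
<script>var ignored = true;</script>
</body></html>
"""


def summarize(html):
    """Return the pieces of a page that a simple indexer might store."""
    parser = PageSummary()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "meta": parser.meta, "words": parser.words}


record = summarize(SAMPLE_HTML)
print(record["title"], record["meta"])
```

The stored words are what a search engine would later match against keywords, while the meta tags supply the description and crawler directives for the page.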

Before the entire process begins, web crawlers look at your robots.txt file to see which pages they may crawl, which is why that file is so important for technical SEO.

Ultimately, when a web crawler crawls your page, it determines whether your page will appear on the search results page for a query. It's important to note that some web crawlers may behave differently than others. For example, some crawlers may use different factors when deciding which pages are most important to crawl.

Now that we understand how web crawlers work, we’ll discuss why they should crawl your website.


