How to use Scrapy to crawl Douban books and their ratings and comments?

People increasingly rely on the Internet for information, and for book lovers Douban Books has become an indispensable platform. It provides a wealth of book ratings and reviews that help readers understand a book more fully. Collecting this information by hand, however, is slow and tedious. This is where a crawler built with Scrapy can help.

Scrapy is an open source web crawler framework written in Python that helps us extract data from websites efficiently. This article walks through the steps of using Scrapy to crawl Douban books together with their ratings and review counts.

Step 1: Install Scrapy

First, install Scrapy on your computer. If you already have pip (the Python package manager), enter the following command in a terminal or command line:

pip install scrapy

This installs Scrapy on your computer. If the installation reports an error or warning, follow the message it prints to resolve the problem.

Step 2: Create a new Scrapy project

Next, we need to enter the following command in the terminal or command line to create a new Scrapy project:

scrapy startproject douban

This command creates a folder named douban in the current directory, which contains Scrapy's basic files and directory structure.

Step 3: Write a crawler program

In Scrapy, we write a spider to tell the framework how to extract data from a website. Create a new file named douban_spider.py in the project's spiders directory (douban/douban/spiders/) and write the following code:

import scrapy


class DoubanSpider(scrapy.Spider):
    name = 'douban'
    allowed_domains = ['book.douban.com']
    start_urls = ['https://book.douban.com/top250']

    def parse(self, response):
        selector = scrapy.Selector(response)
        # Each book on the page is one <tr class="item"> row.
        # These XPath expressions depend on the page markup; verify them against the live page.
        books = selector.xpath('//tr[@class="item"]')
        for book in books:
            title = book.xpath('td[2]/div[1]/a/@title').extract_first()
            author = book.xpath('td[2]/div[1]/span[1]/text()').extract_first()
            score = book.xpath('td[2]/div[2]/span[@class="rating_nums"]/text()').extract_first()
            comment_count = book.xpath('td[2]/div[2]/span[@class="pl"]/text()').extract_first()
            # extract_first() returns None when nothing matches, so guard before cleaning.
            if comment_count is not None:
                # Remove the surrounding parentheses and whitespace.
                comment_count = comment_count.strip('() \n\t')
            yield {'title': title, 'author': author, 'score': score, 'comment_count': comment_count}

The above code does two things:

- Extracts the title, author, rating, and number of reviews of each book on the Douban Books Top 250 page listed in start_urls.

- Returns the extracted data in the form of dictionaries.
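The review count arrives as raw text rather than a number. As a minimal sketch, assuming the text looks something like "(123456人评价)" with optional surrounding whitespace (the sample string and the helper name parse_comment_count are hypothetical), the digits can be pulled out with a regular expression:

```python
import re


def parse_comment_count(raw):
    """Return the first run of digits in the scraped text as an int, or None."""
    if raw is None:  # the XPath matched nothing
        return None
    match = re.search(r"\d+", raw)
    return int(match.group()) if match else None


# Hypothetical sample of what the XPath might return.
print(parse_comment_count("(\n    123456人评价\n  )"))  # 123456
print(parse_comment_count(None))                        # None
```

Converting to an integer at this point makes later sorting and filtering by review count straightforward.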

In this program, we first define a DoubanSpider class and specify the spider's name, the domain it is allowed to access, and its starting URL. In the parse method, we wrap the response in a scrapy.Selector object and use XPath expressions to pull out the relevant information about each book.
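The spider above requests only the single URL in start_urls. A minimal sketch of listing further pages, assuming the Top 250 list is split into pages of 25 books selected by a start query parameter (this URL scheme is an assumption, not something the spider code confirms, so check it in a browser first):

```python
# Assumption: each page shows 25 books and is addressed by ?start=<offset>.
BASE_URL = "https://book.douban.com/top250"
PAGE_SIZE = 25  # assumed number of books per page
TOTAL = 250     # size of the list


def build_start_urls(base_url=BASE_URL, page_size=PAGE_SIZE, total=TOTAL):
    """Return one URL per page of the list."""
    return [f"{base_url}?start={offset}" for offset in range(0, total, page_size)]


start_urls = build_start_urls()
print(len(start_urls))
print(start_urls[1])
```

If the assumption holds, the resulting list could be assigned to the spider's start_urls attribute so that parse runs once per page.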

After obtaining the data, we use the yield keyword to return it as a dictionary. The yield keyword turns parse into a generator, so it hands back one item at a time instead of building the whole result first. Scrapy consumes these items as they are produced, which keeps crawling efficient.
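The generator behaviour can be seen without Scrapy at all. The following plain-Python sketch uses hypothetical sample rows in place of scraped data:

```python
def parse_rows(rows):
    """Yield one dictionary per row instead of building a full list first."""
    for title, score in rows:
        yield {"title": title, "score": score}


# Hypothetical sample data standing in for scraped table rows.
rows = [("Book A", "9.1"), ("Book B", "8.7")]

items = parse_rows(rows)  # nothing has run yet; this is a generator object
first = next(items)       # runs the body up to the first yield
print(first)
remaining = list(items)   # consumes the rest
print(remaining)
```

Scrapy iterates over the generator returned by parse in the same way, passing each yielded dictionary on to its output handling.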

Step 4: Run the crawler program

After writing the spider, run the following command in a terminal or command line, from inside the project directory, to start it:

scrapy crawl douban -o result.json

This command starts the spider named douban and writes the crawled data to the file result.json in JSON format.
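Once the crawl has finished, the output can be inspected with the standard json module. The sketch below uses a hypothetical two-record excerpt in place of the real file, with the same field names the spider yields:

```python
import json

# Hypothetical excerpt of result.json; the real file is produced by the crawl.
sample = '''[
  {"title": "Book A", "author": "Author A", "score": "8.7", "comment_count": "1200"},
  {"title": "Book B", "author": "Author B", "score": "9.1", "comment_count": "3400"}
]'''

books = json.loads(sample)
# For the real file:
#     with open("result.json", encoding="utf-8") as f:
#         books = json.load(f)

# Scores are scraped as strings, so convert them before sorting.
ranked = sorted(books, key=lambda b: float(b["score"]), reverse=True)
for book in ranked:
    print(book["title"], book["score"])
```

In recent versions of Scrapy the -o option appends to an existing file, which can leave result.json unparseable after a second run; deleting the file first, or using -O to overwrite it, avoids this.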

With these four steps, we can crawl Douban books along with their ratings and review counts. To make the crawler more efficient and stable, further adjustments are usually needed, for example setting a delay between requests and coping with the site's anti-crawling measures.
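As a starting point for such adjustments, the project's settings.py can slow the crawler down and identify it. The setting names below are standard Scrapy settings, but the values are illustrative assumptions rather than recommendations:

```python
# settings.py (fragment): values are illustrative assumptions.
ROBOTSTXT_OBEY = True               # respect the site's robots.txt
DOWNLOAD_DELAY = 2                  # seconds to wait between requests to the same site
AUTOTHROTTLE_ENABLED = True         # adapt the delay to server response times
CONCURRENT_REQUESTS_PER_DOMAIN = 1  # one request at a time per domain
USER_AGENT = "Mozilla/5.0 (compatible; douban-tutorial-bot)"  # hypothetical value
FEED_EXPORT_ENCODING = "utf-8"      # write Chinese text as-is instead of escaped code points
```

A longer delay and a single concurrent request make the crawl slower but place less load on the site.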

In short, using Scrapy to crawl Douban books and their ratings and review counts is a relatively simple and interesting task. If you are interested in data crawling and Python programming, try crawling data from other websites to build on these skills.

The above is the detailed content of How to use Scrapy to crawl Douban books and their ratings and comments?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1382

1382

52

52

Scrapy implements crawling and analysis of WeChat public account articles

Jun 22, 2023 am 09:41 AM

Scrapy implements crawling and analysis of WeChat public account articles

Jun 22, 2023 am 09:41 AM

Scrapy implements article crawling and analysis of WeChat public accounts. WeChat is a popular social media application in recent years, and the public accounts operated in it also play a very important role. As we all know, WeChat public accounts are an ocean of information and knowledge, because each public account can publish articles, graphic messages and other information. This information can be widely used in many fields, such as media reports, academic research, etc. So, this article will introduce how to use the Scrapy framework to crawl and analyze WeChat public account articles. Scr

Metadata scraping using the New York Times API

Sep 02, 2023 pm 10:13 PM

Metadata scraping using the New York Times API

Sep 02, 2023 pm 10:13 PM

Introduction Last week, I wrote an introduction about scraping web pages to collect metadata, and mentioned that it was impossible to scrape the New York Times website. The New York Times paywall blocks your attempts to collect basic metadata. But there is a way to solve this problem using New York Times API. Recently I started building a community website on the Yii platform, which I will publish in a future tutorial. I want to be able to easily add links that are relevant to the content on my site. While people can easily paste URLs into forms, providing title and source information is time-consuming. So in today's tutorial I'm going to extend the scraping code I recently wrote to leverage the New York Times API to collect headlines when adding a New York Times link. Remember, I'm involved

Scrapy asynchronous loading implementation method based on Ajax

Jun 22, 2023 pm 11:09 PM

Scrapy asynchronous loading implementation method based on Ajax

Jun 22, 2023 pm 11:09 PM

Scrapy is an open source Python crawler framework that can quickly and efficiently obtain data from websites. However, many websites use Ajax asynchronous loading technology, making it impossible for Scrapy to obtain data directly. This article will introduce the Scrapy implementation method based on Ajax asynchronous loading. 1. Ajax asynchronous loading principle Ajax asynchronous loading: In the traditional page loading method, after the browser sends a request to the server, it must wait for the server to return a response and load the entire page before proceeding to the next step.

How to set English mode on Douban app How to set English mode on Douban app

Mar 12, 2024 pm 02:46 PM

How to set English mode on Douban app How to set English mode on Douban app

Mar 12, 2024 pm 02:46 PM

How to set English mode on Douban app? Douban app is a software that allows you to view reviews of various resources. This software has many functions. When users use this software for the first time, they need to log in, and the default language on this software is For Chinese mode, some users like to use English mode, but they don’t know how to set the English mode on this software. The editor below has compiled the method of setting the English mode for your reference. How to set the English mode on the Douban app: 1. Open the "Douban" app on your phone; 2. Click "My"; 3. Select "Settings" in the upper right corner.

Scrapy case analysis: How to crawl company information on LinkedIn

Jun 23, 2023 am 10:04 AM

Scrapy case analysis: How to crawl company information on LinkedIn

Jun 23, 2023 am 10:04 AM

Scrapy is a Python-based crawler framework that can quickly and easily obtain relevant information on the Internet. In this article, we will use a Scrapy case to analyze in detail how to crawl company information on LinkedIn. Determine the target URL First, we need to make it clear that our target is the company information on LinkedIn. Therefore, we need to find the URL of the LinkedIn company information page. Open the LinkedIn website, enter the company name in the search box, and

How to crawl and process data by calling API interface in PHP project?

Sep 05, 2023 am 08:41 AM

How to crawl and process data by calling API interface in PHP project?

Sep 05, 2023 am 08:41 AM

How to crawl and process data by calling API interface in PHP project? 1. Introduction In PHP projects, we often need to crawl data from other websites and process these data. Many websites provide API interfaces, and we can obtain data by calling these interfaces. This article will introduce how to use PHP to call the API interface to crawl and process data. 2. Obtain the URL and parameters of the API interface. Before starting, we need to obtain the URL of the target API interface and the required parameters.

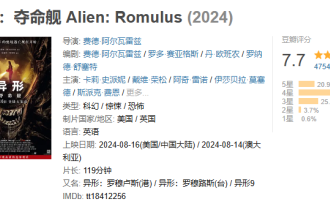

The space thriller movie 'Alien' scored 7.7 on Douban, and the box office exceeded 100 million the day after its release.

Aug 17, 2024 pm 10:50 PM

The space thriller movie 'Alien' scored 7.7 on Douban, and the box office exceeded 100 million the day after its release.

Aug 17, 2024 pm 10:50 PM

According to news from this website on August 17, the space thriller "Alien: The Last Ship" by 20th Century Pictures was released in mainland China yesterday (August 16). The Douban score was announced today as 7.7. According to real-time data from Beacon Professional Edition, as of 20:5 on August 17, the film’s box office has exceeded 100 million. The distribution of ratings on this site is as follows: 5 stars account for 20.9% 4 stars account for 49.5% 3 stars account for 25.4% 2 stars account for 3.7% 1 stars account for 0.6% "Alien: Death Ship" is produced by 20th Century Pictures , Ridley Scott, the director of "Blade Runner" and "Prometheus", serves as the producer, directed by Fede Alvare, written by Fede Alvare and Rodo Seiagues, and Card Leigh Spaeny, Isabella Merced, Aileen Wu, Spike Fey

Scrapy optimization tips: How to reduce crawling of duplicate URLs and improve efficiency

Jun 22, 2023 pm 01:57 PM

Scrapy optimization tips: How to reduce crawling of duplicate URLs and improve efficiency

Jun 22, 2023 pm 01:57 PM

Scrapy is a powerful Python crawler framework that can be used to obtain large amounts of data from the Internet. However, when developing Scrapy, we often encounter the problem of crawling duplicate URLs, which wastes a lot of time and resources and affects efficiency. This article will introduce some Scrapy optimization techniques to reduce the crawling of duplicate URLs and improve the efficiency of Scrapy crawlers. 1. Use the start_urls and allowed_domains attributes in the Scrapy crawler to