
Practical application of Scrapy in Twitter data crawling and analysis


Scrapy is a Python-based web crawling framework that can quickly extract data from websites and provides simple APIs and tools for processing the extracted data. In this article, we walk through a practical example of using Scrapy to crawl and analyze Twitter data.

Twitter is a social media platform with a huge number of users and a wealth of data. Researchers, social media analysts, and data scientists can mine this data for interesting insights. However, the Twitter API limits how much data can be retrieved, and Scrapy can work around some of these limits by requesting pages the way a browser does, which can yield larger amounts of Twitter data.

First, we need to create a Twitter developer account and apply for an API key and an access token. Next, we add these credentials to the project's settings.py file. Scrapy does not use these setting names on its own; they are custom settings that our spider code can read, e.g. with `self.settings.get('TWITTER_CONSUMER_KEY')`, when it calls the Twitter API:

```python
# settings.py
TWITTER_CONSUMER_KEY = 'your_consumer_key'
TWITTER_CONSUMER_SECRET = 'your_consumer_secret'
TWITTER_ACCESS_TOKEN = 'your_access_token'
TWITTER_ACCESS_TOKEN_SECRET = 'your_access_token_secret'
```
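Committing real keys to settings.py makes them easy to leak. As an alternative, here is a minimal sketch, assuming you export the same four names as environment variables (a convention chosen here, not something Scrapy requires), of a helper that settings.py could call instead of hard-coding the values. `load_twitter_credentials` is hypothetical:

```python
import os
from typing import Mapping

# The four custom setting names used above; Scrapy itself does not read them.
CREDENTIAL_NAMES = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_TOKEN_SECRET",
)


def load_twitter_credentials(environ: Mapping[str, str] = os.environ) -> dict:
    """Hypothetical helper: read the four credentials from the environment.

    Raises RuntimeError naming every missing or empty variable, so a
    misconfigured crawl fails at startup instead of partway through.
    """
    missing = [name for name in CREDENTIAL_NAMES if not environ.get(name)]
    if missing:
        raise RuntimeError("missing credentials: " + ", ".join(missing))
    return {name: environ[name] for name in CREDENTIAL_NAMES}
```

In settings.py one could then write `credentials = load_twitter_credentials()` followed by `TWITTER_CONSUMER_KEY = credentials['TWITTER_CONSUMER_KEY']` and so on for the other three names.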

Next, we define a Scrapy spider to crawl Twitter data. First, we declare a Scrapy Item that lists the fields to be extracted:

```python
import scrapy


class TweetItem(scrapy.Item):
    # One scraped tweet
    text = scrapy.Field()
    created_at = scrapy.Field()
    user_screen_name = scrapy.Field()
```

In the spider, we set the keyword and the time range to query in the start URL, for example:

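```python
import scrapy

# TweetItem is the item class defined above (e.g. in the project's items.py).


class TwitterSpider(scrapy.Spider):
    name = 'twitter'
    allowed_domains = ['twitter.com']
    # "keyword" is a placeholder; since:/until: restrict the date range.
    start_urls = ['https://twitter.com/search?f=tweets&q=keyword%20since%3A2021-01-01%20until%3A2021-12-31&src=typd']

    def parse(self, response):
        # These CSS classes match Twitter's legacy server-rendered markup; the
        # current site renders tweets with JavaScript, so they may match nothing.
        for tweet in response.css('.tweet'):
            item = TweetItem()
            # .get(default='') avoids calling .strip() on None when a selector misses
            item['text'] = tweet.css('.tweet-text::text').get(default='').strip()
            item['created_at'] = tweet.css('._timestamp::text').get()
            item['user_screen_name'] = tweet.css('.username b::text').get(default='').strip()
            yield item
```

In this example spider, CSS selectors extract tweets matching the placeholder query "keyword" posted between January 1, 2021 and December 31, 2021. Only the first page of search results is parsed, because the spider does not follow pagination. Each tweet is stored in a TweetItem object and handed to the Scrapy engine with a yield statement.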
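Rather than hand-encoding the query string in the start URL, the keyword and date range can be assembled with the standard library. A minimal sketch; `build_search_url` is a hypothetical helper, and the `f` and `src` parameters are simply copied from the start URL above, with no guarantee that twitter.com still honours them:

```python
from datetime import date
from urllib.parse import quote, urlencode


def build_search_url(keyword: str, since: date, until: date) -> str:
    """Build a Twitter search URL for `keyword` restricted by since:/until:."""
    query = f"{keyword} since:{since.isoformat()} until:{until.isoformat()}"
    params = {"f": "tweets", "q": query, "src": "typd"}
    # quote (instead of urlencode's default quote_plus) encodes spaces as %20,
    # matching the hand-written URL in the spider.
    return "https://twitter.com/search?" + urlencode(params, quote_via=quote)
```

The spider could then set `start_urls = [build_search_url('keyword', date(2021, 1, 1), date(2021, 12, 31))]`, which reproduces the original URL and also percent-encodes characters such as `#` in hashtag searches.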

When we run the spider (for example with `scrapy crawl twitter -o tweets.json`), Scrapy requests the search page, parses the returned HTML, and exports each TweetItem to the output file. This approach scrapes the web page rather than calling the Twitter API. Scrapy itself does not include analysis tools, but the exported data can be analyzed with standard Python tools and libraries, for example:

```python
import json
import re
from collections import Counter
from datetime import datetime, timedelta

HASHTAG_RE = re.compile(r'#\w+')


class TwitterAnalyzer:
    def __init__(self, data=None):
        self.data = data or []  # avoid a shared mutable default argument
        self.texts = [d['text'] for d in self.data]
        # Assumes Twitter API-style timestamps, e.g. "Wed Oct 10 20:19:24 +0000 2018"
        self.dates = [datetime.strptime(d['created_at'], '%a %b %d %H:%M:%S %z %Y').date()
                      for d in self.data]

    def get_top_hashtags(self, n=5):
        # Flatten the per-tweet match lists; lower-case so #Python and #python count together
        hashtags = Counter(tag.lower() for t in self.texts for tag in HASHTAG_RE.findall(t))
        return hashtags.most_common(n)

    def get_top_users(self, n=5):
        users = Counter(d['user_screen_name'] for d in self.data)
        return users.most_common(n)

    def get_dates_histogram(self, step='day'):
        if step == 'day':
            return Counter(self.dates)
        if step == 'week':
            # Bucket each date under the Monday that starts its week
            return Counter(d - timedelta(days=d.weekday()) for d in self.dates)
        raise ValueError(f"unsupported step: {step!r}")


# Items exported with: scrapy crawl twitter -o tweets.json
with open('tweets.json', encoding='utf-8') as f:
    data = json.load(f)

analyzer = TwitterAnalyzer(data)
print(analyzer.get_top_hashtags())
print(analyzer.get_top_users())
print(analyzer.get_dates_histogram('day'))
```

In this sample code, we define a TwitterAnalyzer class that takes the scraped TweetItem data and helps us extract information and insights from it. Its methods return the most frequently used hashtags, the most active users, and the number of tweets per day or per week, which shows how activity changes over time.
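The analyzer above trusts every record: one item with a missing field or an unexpected timestamp raises an exception and stops the whole analysis. Because a changed page layout can leave fields empty, and because `created_at` is assumed to follow the Twitter API format (e.g. `Wed Oct 10 20:19:24 +0000 2018`), it can help to clean the exported items first. A minimal sketch; `clean_tweets`, `parse_created_at`, and the choice of required fields are hypothetical, not part of Scrapy:

```python
from datetime import date, datetime
from typing import Optional

REQUIRED_FIELDS = ("text", "created_at", "user_screen_name")
# Twitter API style, e.g. "Wed Oct 10 20:19:24 +0000 2018".
# %a and %b only match English day/month names under the default C locale.
API_FORMAT = "%a %b %d %H:%M:%S %z %Y"


def parse_created_at(value) -> Optional[date]:
    """Return the date of an API-style timestamp, or None if it does not parse."""
    try:
        return datetime.strptime(value.strip(), API_FORMAT).date()
    except (AttributeError, ValueError):
        # AttributeError: value is not a string (e.g. None)
        return None


def clean_tweets(records):
    """Keep records whose required fields are non-empty strings and whose
    created_at parses; all other records are dropped."""
    cleaned = []
    for record in records:
        if not all(isinstance(record.get(f), str) and record[f].strip()
                   for f in REQUIRED_FIELDS):
            continue
        if parse_created_at(record["created_at"]) is None:
            continue
        cleaned.append(record)
    return cleaned
```

Passing `clean_tweets(data)` instead of `data` to `TwitterAnalyzer` trades silently skipping bad items for not crashing on them; counting the dropped records is a cheap way to notice when the selectors stop matching.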

In short, Scrapy is an effective tool for collecting data from websites such as Twitter, which we can then explore with data mining and analysis techniques to uncover interesting information and insights. Whether you are an academic researcher, a social media analyst, or a data science enthusiast, Scrapy is a tool worth trying.

The above is the detailed content of Practical application of Scrapy in Twitter data crawling and analysis. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

What are the blockchain data analysis tools?

Feb 21, 2025 pm 10:24 PM

What are the blockchain data analysis tools?

Feb 21, 2025 pm 10:24 PM

The rapid development of blockchain technology has brought about the need for reliable and efficient analytical tools. These tools are essential to extract valuable insights from blockchain transactions in order to better understand and capitalize on their potential. This article will explore some of the leading blockchain data analysis tools on the market, including their capabilities, advantages and limitations. By understanding these tools, users can gain the necessary insights to maximize the possibilities of blockchain technology.

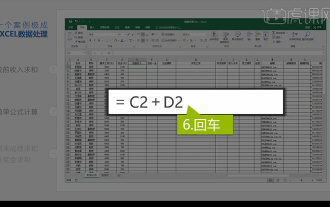

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

1. In this lesson, we will explain integrated Excel data analysis. We will complete it through a case. Open the course material and click on cell E2 to enter the formula. 2. We then select cell E53 to calculate all the following data. 3. Then we click on cell F2, and then we enter the formula to calculate it. Similarly, dragging down can calculate the value we want. 4. We select cell G2, click the Data tab, click Data Validation, select and confirm. 5. Let’s use the same method to automatically fill in the cells below that need to be calculated. 6. Next, we calculate the actual wages and select cell H2 to enter the formula. 7. Then we click on the value drop-down menu to click on other numbers.

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

Recommended: 1. Business Data Analysis Forum; 2. National People’s Congress Economic Forum - Econometrics and Statistics Area; 3. China Statistics Forum; 4. Data Mining Learning and Exchange Forum; 5. Data Analysis Forum; 6. Website Data Analysis; 7. Data analysis; 8. Data Mining Research Institute; 9. S-PLUS, R Statistics Forum.

Where is the official entrance to DeepSeek? Latest visit guide in 2025

Feb 19, 2025 pm 05:03 PM

Where is the official entrance to DeepSeek? Latest visit guide in 2025

Feb 19, 2025 pm 05:03 PM

DeepSeek, a comprehensive search engine that provides a wide range of results from academic databases, news websites and social media. Visit DeepSeek's official website https://www.deepseek.com/, register an account and log in, and then you can start searching. Use specific keywords, precise phrases, or advanced search options to narrow your search and get the most relevant results.

Golang application examples in data analysis and visualization

Jun 04, 2024 pm 12:10 PM

Golang application examples in data analysis and visualization

Jun 04, 2024 pm 12:10 PM

Go is widely used for data analysis and visualization. Examples include: Infrastructure Monitoring: Building monitoring applications using Go with Telegraf and Prometheus. Machine Learning: Build and train models using Go and TensorFlow or PyTorch. Data visualization: Create interactive charts using Plotly and Go-echarts libraries.

Bitget Exchange official website login latest entrance

Feb 18, 2025 pm 02:54 PM

Bitget Exchange official website login latest entrance

Feb 18, 2025 pm 02:54 PM

The Bitget Exchange offers a variety of login methods, including email, mobile phone number and social media accounts. This article details the latest entrances and steps for each login method, including accessing the official website, selecting the login method, entering the login credentials, and completing the login. Users should pay attention to using the official website when logging in and properly keep the login credentials.

How much is the price of MRI coins? The latest price trend of MRI coin

Mar 03, 2025 pm 11:48 PM

How much is the price of MRI coins? The latest price trend of MRI coin

Mar 03, 2025 pm 11:48 PM

This cryptocurrency does not really have monetary value, and its value depends entirely on community support. Investors must carefully investigate before investing, because it lacks practical uses and attractive token economic models. Since the token was issued last month, investors can currently only purchase through decentralized exchanges. The real-time price of MRI coin is $0.000045≈¥0.00033MRI coin historical price As of 13:51 on February 24, 2025, the price of MRI coin is $0.000045. The following figure shows the price trend of the token from February 2022 to June 2024. MRI Coin Investment Risk Assessment Currently, MRI Coin has not been listed on any exchange and its price has been reset to zero and cannot be purchased again. Even if the project

gateio official website entrance

Mar 05, 2025 pm 08:09 PM

gateio official website entrance

Mar 05, 2025 pm 08:09 PM

The official Gate.io website is accessible through the official application. Fake websites may contain misspelled, design differences, or suspicious security certificates. Protections include avoiding clicking on suspicious links, using two-factor authentication, and reporting fraudulent activity to the official team. Frequently asked questions cover registration, transactions, withdrawals, customer service and fees, while security measures include cold storage, multi-signatures, and KYC compliance. Users should be aware of common fraudulent means of impersonating employees, giving tokens, or asking for personal information.