Scrapy optimization tips: How to reduce crawling of duplicate URLs and improve efficiency

Scrapy is a powerful Python crawling framework for collecting large amounts of data from the web. A common problem when developing Scrapy crawlers is that the same URL gets crawled more than once, which wastes time, bandwidth, and storage. This article introduces several techniques for reducing duplicate crawling and improving crawler efficiency.

1. Use the start_urls and allowed_domains attributes

In a Scrapy spider, the start_urls attribute lists the URLs where crawling begins, and the allowed_domains attribute lists the domains the spider is permitted to crawl. When allowed_domains is set, Scrapy's offsite filtering drops requests to any other domain before they are downloaded. Neither attribute removes duplicates by itself, but together they keep the crawl within its intended scope, so time and resources are not spent on URLs that do not need to be crawled.
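To make the scoping concrete, here is a minimal stdlib-only sketch of the kind of host check that allowed_domains implies. It is not Scrapy's own implementation: is_allowed is a hypothetical helper, and the example.com URLs are placeholders. In a real spider, start_urls and allowed_domains would be class attributes on a scrapy.Spider subclass.

```python
from urllib.parse import urlparse

# Hypothetical values for illustration; in a spider these would be the
# start_urls and allowed_domains class attributes.
start_urls = ["https://example.com/catalog"]
allowed_domains = ["example.com"]


def is_allowed(url, domains):
    """Return True if the URL's host is one of the domains or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in domains)


# Links a page might contain; only in-scope ones should be followed.
candidates = [
    "https://example.com/catalog?page=2",
    "https://shop.example.com/item/1",
    "https://ads.example.net/track",
]
in_scope = [u for u in candidates if is_allowed(u, allowed_domains)]
```

Note that the check matches whole host labels, so a host such as notexample.com is not treated as part of example.com.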

2. Use Scrapy-Redis to implement distributed crawling

When a large number of URLs must be crawled, a single machine becomes a bottleneck, and distributed crawling is worth considering. Scrapy-Redis is an extension for Scrapy that stores the request queue and the set of seen requests in Redis, so several crawler processes can share the work without fetching the same URL twice. The REDIS_HOST and REDIS_PORT parameters in settings.py specify the address and port of the Redis server that Scrapy-Redis connects to; the Scrapy-Redis scheduler and duplicate filter must also be enabled in the same file.
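A settings.py fragment for such a setup might look like the sketch below. The host and port values are assumptions for a local Redis instance, and SCHEDULER_PERSIST is optional; check the Scrapy-Redis documentation for the version you use before relying on these names.

```python
# settings.py (fragment): a sketch of a Scrapy-Redis configuration.

# Use the Redis-backed scheduler so all workers pull from one shared queue.
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Store request fingerprints in Redis so workers share one "seen" set.
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Keep the queue and the fingerprint set between runs instead of clearing them.
SCHEDULER_PERSIST = True

# Assumed connection details; adjust to your Redis server.
REDIS_HOST = "localhost"
REDIS_PORT = 6379
```

With these settings, every crawler process that points at the same Redis server takes requests from the same queue and consults the same duplicate record.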

3. Use incremental crawling technology

A crawler that runs on a schedule tends to revisit URLs it already fetched in earlier runs, which wastes time and resources. Incremental crawling reduces this repetition. The basic idea is to record every crawled URL in persistent storage and, on the next run, check each candidate URL against that record: if the URL has already been crawled, skip it; otherwise, crawl it and add it to the record.
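The record-and-check idea can be sketched with a small SQLite-backed store. This is one possible approach, not a Scrapy feature: SeenUrlStore, urls_to_crawl, and the seen_urls.db file name are all hypothetical names chosen for illustration.

```python
import sqlite3


class SeenUrlStore:
    """Persist crawled URLs in SQLite so later runs can skip them."""

    def __init__(self, path="seen_urls.db"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS seen (url TEXT PRIMARY KEY)"
        )

    def is_seen(self, url):
        row = self.conn.execute(
            "SELECT 1 FROM seen WHERE url = ?", (url,)
        ).fetchone()
        return row is not None

    def mark_seen(self, url):
        # INSERT OR IGNORE keeps the call idempotent for repeated URLs.
        self.conn.execute("INSERT OR IGNORE INTO seen (url) VALUES (?)", (url,))
        self.conn.commit()

    def close(self):
        self.conn.close()


def urls_to_crawl(candidates, store):
    """Yield only the candidates that no earlier run has recorded."""
    for url in candidates:
        if not store.is_seen(url):
            yield url
```

In a spider, one would typically call mark_seen only after a page has been parsed successfully, so that failed pages are retried on the next run.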

4. Use middleware to filter duplicate URLs

Besides incremental crawling, duplicate URLs can be filtered in middleware. Scrapy middleware is a set of hooks that process requests and responses as they pass through the engine, and a custom middleware can implement URL deduplication. A common approach is to record crawled URLs in a Redis set and check each outgoing request against that set to decide whether the URL has already been crawled.
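A downloader middleware along these lines might look like the following sketch. The class name RedisDedupMiddleware and the key crawler:seen_urls are hypothetical, the Redis client is assumed to be a redis-py style object exposing sadd, and the ImportError fallback exists only so the sketch can be exercised without Scrapy installed.

```python
try:
    from scrapy.exceptions import IgnoreRequest
except ImportError:
    # Fallback so the sketch can be exercised without Scrapy installed.
    class IgnoreRequest(Exception):
        pass


class RedisDedupMiddleware:
    """Downloader middleware that drops requests whose URL is already in a Redis set."""

    def __init__(self, client, key="crawler:seen_urls"):
        self.client = client  # any object with a redis-py style sadd(name, value)
        self.key = key

    @classmethod
    def from_crawler(cls, crawler):
        import redis  # imported lazily; requires the redis-py package

        client = redis.Redis(
            host=crawler.settings.get("REDIS_HOST", "localhost"),
            port=crawler.settings.getint("REDIS_PORT", 6379),
        )
        return cls(client)

    def process_request(self, request, spider):
        # Let through requests that explicitly opt out of filtering (e.g. retries).
        if getattr(request, "dont_filter", False):
            return None
        # SADD returns 1 when the member is new and 0 when it already existed,
        # so the check and the insert happen in a single Redis command.
        if self.client.sadd(self.key, request.url) == 0:
            raise IgnoreRequest(f"duplicate URL: {request.url}")
        return None
```

To use it, the class would be registered in the DOWNLOADER_MIDDLEWARES setting under whatever module path your project uses. One design trade-off to be aware of: the URL is recorded when the request is sent, not when it succeeds, so a failed download is still treated as seen unless the request is reissued with dont_filter set.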

5. Use DupeFilter to filter duplicate URLs

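Scrapy also ships with a built-in duplicate filter, configured through the DUPEFILTER_CLASS setting and enabled by default (the default class is RFPDupeFilter). Rather than hashing the raw URL string, it computes a fingerprint for each request from the request method, the canonicalized URL, and the body, and keeps the set of fingerprints it has seen. The scheduler drops any request whose fingerprint is already in that set, unless the request was created with dont_filter=True. By default the fingerprints are held in memory; when JOBDIR is set, they are also written to disk so that a paused crawl can resume without refetching. The built-in filter needs no Redis server, which makes it a lightweight way to filter duplicate URLs within a single crawler process.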
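The fingerprint idea can be illustrated with a simplified, stdlib-only sketch. It is not Scrapy's implementation: canonicalize, fingerprint, and InMemoryDupeFilter are hypothetical names, and the canonicalization here only sorts query parameters and drops the fragment.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonicalize(url):
    """Sort query parameters and drop the fragment so equivalent URLs match."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, query, ""))


def fingerprint(method, url, body=b""):
    """Hash the parts of a request that decide whether two requests are the same."""
    h = hashlib.sha1()
    h.update(method.upper().encode())
    h.update(canonicalize(url).encode())
    h.update(body)
    return h.hexdigest()


class InMemoryDupeFilter:
    """Remember fingerprints in a set and report whether a request was seen before."""

    def __init__(self):
        self.fingerprints = set()

    def request_seen(self, method, url, body=b""):
        fp = fingerprint(method, url, body)
        if fp in self.fingerprints:
            return True
        self.fingerprints.add(fp)
        return False
```

Because the URL is canonicalized before hashing, two URLs that differ only in query parameter order or in their fragment are treated as the same request, while a GET and a POST to the same URL are not.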

Summary:

Crawling duplicate URLs is a common problem in Scrapy development, and several techniques can reduce it and improve crawler efficiency. This article covered five of them: using the start_urls and allowed_domains attributes, using Scrapy-Redis for distributed crawling, using incremental crawling, filtering duplicate URLs with custom middleware, and filtering duplicate URLs with the built-in DupeFilter. Choose the methods that fit your own crawl.


Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

What is the difference between html and url

Mar 06, 2024 pm 03:06 PM

What is the difference between html and url

Mar 06, 2024 pm 03:06 PM

Differences: 1. Different definitions, url is a uniform resource locator, and html is a hypertext markup language; 2. There can be many urls in an html, but only one html page can exist in a url; 3. html refers to is a web page, and url refers to the website address.

Discussion on Golang's gc optimization strategy

Mar 06, 2024 pm 02:39 PM

Discussion on Golang's gc optimization strategy

Mar 06, 2024 pm 02:39 PM

Golang's garbage collection (GC) has always been a hot topic among developers. As a fast programming language, Golang's built-in garbage collector can manage memory very well, but as the size of the program increases, some performance problems sometimes occur. This article will explore Golang’s GC optimization strategies and provide some specific code examples. Garbage collection in Golang Golang's garbage collector is based on concurrent mark-sweep (concurrentmark-s

In-depth interpretation: Why is Laravel as slow as a snail?

Mar 07, 2024 am 09:54 AM

In-depth interpretation: Why is Laravel as slow as a snail?

Mar 07, 2024 am 09:54 AM

Laravel is a popular PHP development framework, but it is sometimes criticized for being as slow as a snail. What exactly causes Laravel's unsatisfactory speed? This article will provide an in-depth explanation of the reasons why Laravel is as slow as a snail from multiple aspects, and combine it with specific code examples to help readers gain a deeper understanding of this problem. 1. ORM query performance issues In Laravel, ORM (Object Relational Mapping) is a very powerful feature that allows

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed!

Mar 06, 2024 pm 02:33 PM

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed!

Mar 06, 2024 pm 02:33 PM

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed! Laravel, as a popular PHP framework, provides developers with rich functions and a convenient development experience. However, as the size of the project increases and the number of visits increases, we may face the challenge of performance bottlenecks. This article will delve into Laravel performance optimization techniques to help developers discover and solve potential performance problems. 1. Database query optimization using Eloquent delayed loading When using Eloquent to query the database, avoid

C++ program optimization: time complexity reduction techniques

Jun 01, 2024 am 11:19 AM

C++ program optimization: time complexity reduction techniques

Jun 01, 2024 am 11:19 AM

Time complexity measures the execution time of an algorithm relative to the size of the input. Tips for reducing the time complexity of C++ programs include: choosing appropriate containers (such as vector, list) to optimize data storage and management. Utilize efficient algorithms such as quick sort to reduce computation time. Eliminate multiple operations to reduce double counting. Use conditional branches to avoid unnecessary calculations. Optimize linear search by using faster algorithms such as binary search.

Laravel performance bottleneck revealed: optimization solution revealed!

Mar 07, 2024 pm 01:30 PM

Laravel performance bottleneck revealed: optimization solution revealed!

Mar 07, 2024 pm 01:30 PM

Laravel performance bottleneck revealed: optimization solution revealed! With the development of Internet technology, the performance optimization of websites and applications has become increasingly important. As a popular PHP framework, Laravel may face performance bottlenecks during the development process. This article will explore the performance problems that Laravel applications may encounter, and provide some optimization solutions and specific code examples so that developers can better solve these problems. 1. Database query optimization Database query is one of the common performance bottlenecks in Web applications. exist

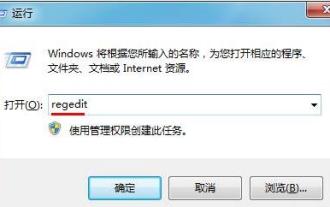

How to optimize the startup items of WIN7 system

Mar 26, 2024 pm 06:20 PM

How to optimize the startup items of WIN7 system

Mar 26, 2024 pm 06:20 PM

1. Press the key combination (win key + R) on the desktop to open the run window, then enter [regedit] and press Enter to confirm. 2. After opening the Registry Editor, we click to expand [HKEY_CURRENT_USERSoftwareMicrosoftWindowsCurrentVersionExplorer], and then see if there is a Serialize item in the directory. If not, we can right-click Explorer, create a new item, and name it Serialize. 3. Then click Serialize, then right-click the blank space in the right pane, create a new DWORD (32) bit value, and name it Star

Vivox100s parameter configuration revealed: How to optimize processor performance?

Mar 24, 2024 am 10:27 AM

Vivox100s parameter configuration revealed: How to optimize processor performance?

Mar 24, 2024 am 10:27 AM

Vivox100s parameter configuration revealed: How to optimize processor performance? In today's era of rapid technological development, smartphones have become an indispensable part of our daily lives. As an important part of a smartphone, the performance optimization of the processor is directly related to the user experience of the mobile phone. As a high-profile smartphone, Vivox100s's parameter configuration has attracted much attention, especially the optimization of processor performance has attracted much attention from users. As the "brain" of the mobile phone, the processor directly affects the running speed of the mobile phone.