The power of Scrapy: How to recognize and process verification codes?

Scrapy is a powerful Python framework for crawling website data. Problems start when the site we want to crawl presents a verification code (CAPTCHA). CAPTCHAs exist to keep automated crawlers from abusing a website, so they tend to be complex and hard to solve programmatically. In this post, we'll cover how to recognize and process CAPTCHAs inside the Scrapy framework so that a crawler can get past them.

What is a verification code?

A CAPTCHA is a test used to prove that the user is a real human being and not a machine. It is usually an obfuscated text string or a distorted image, and the user must manually enter or select what is displayed. CAPTCHAs are designed to stop automated bots and scripts and so protect websites from malicious attacks and abuse.

There are usually three types of CAPTCHAs:

- Text CAPTCHA: The user is required to enter a displayed string of text to prove they are human and not a bot.

- Number verification code: The user is required to enter the displayed number in the input box.

- Image verification code: The user is required to enter the characters or numbers shown in an image. This is usually the most difficult type to crack because the characters or numbers in the image can be distorted, misplaced, or overlaid with other visual noise.

Why do you need to process verification codes?

Crawlers usually run automatically and at large scale, so they are easily identified as bots and blocked from the site's data. CAPTCHAs were introduced for exactly this purpose. Once a request reaches the verification code stage, the Scrapy crawler stalls because the site expects user input, so it cannot continue crawling, and both the efficiency and the completeness of the crawl suffer.

Therefore, we need a way to handle the verification code so that our crawler can automatically pass and continue its task. Usually we use third-party tools or APIs to complete the recognition of verification codes. These tools and APIs use machine learning and image processing algorithms to recognize images and characters, and return the results to our program.

How to handle verification codes in Scrapy?

Open Scrapy's settings.py file and register the custom middleware in the DOWNLOADER_MIDDLEWARES setting:

    DOWNLOADER_MIDDLEWARES = {
        # Scrapy's built-in downloader middlewares are already enabled through
        # DOWNLOADER_MIDDLEWARES_BASE, and the old scrapy.contrib.* paths no
        # longer exist in current releases, so only the custom entry is needed.
        'tutorial.middlewares.CaptchaMiddleware': 999,
    }

In this example, we use CaptchaMiddleware to handle the verification code. CaptchaMiddleware is a custom middleware class that intercepts each download request, calls the recognition API when a verification code is needed, fills the code into the request, and then lets the request continue.
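
The number 999 decides where the middleware sits in the chain: Scrapy runs process_request() hooks in increasing priority order and process_response() hooks in decreasing order. The sketch below only simulates that ordering with plain Python. The helper names `request_order` and `response_order` are hypothetical, and the priorities given to the two built-in names are illustrative values for the demo.

```python
# Illustrative priorities; the two built-in entries are demo values only.
MIDDLEWARES = {
    "RetryMiddleware": 550,
    "CookiesMiddleware": 700,
    "CaptchaMiddleware": 999,
}


def request_order(middlewares):
    """Names in the order their process_request() hooks would run."""
    return [name for name, _ in sorted(middlewares.items(), key=lambda kv: kv[1])]


def response_order(middlewares):
    """Names in the order their process_response() hooks would run."""
    return list(reversed(request_order(middlewares)))


if __name__ == "__main__":
    print("request: ", request_order(MIDDLEWARES))
    print("response:", response_order(MIDDLEWARES))
```

With a priority of 999, CaptchaMiddleware is the last hook to see an outgoing request and the first to see the response.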

Code example:

    # CaptchaClient is not part of Scrapy. It stands for the client of whichever
    # recognition service you use and is assumed to expose solve(url) and
    # check(url, code).
    class CaptchaMiddleware:

        def __init__(self):
            self.client = CaptchaClient()
            self.max_attempts = 5

        def process_request(self, request, spider):
            if 'captcha' in request.meta:
                # A retried request already carries a freshly solved code
                captcha = request.meta.pop('captcha')
            else:
                # No code yet, so ask the recognition service for one
                captcha = self.get_captcha(request.url, logger=spider.logger)
            if captcha:
                # Add the code to the request headers in place
                request.headers['Captcha-Code'] = captcha
                request.headers['Captcha-Type'] = 'math'
                spider.logger.debug(f'has captcha: {captcha}')
            # Returning None lets Scrapy keep processing this request;
            # returning the request itself would reschedule it over and over
            return None

        def process_response(self, request, response, spider):
            # Requests sent without a code need no verification or retry
            if 'Captcha-Code' not in request.headers:
                return response
            # Stop retrying once the attempt limit is reached
            retry_times = request.meta.get('retry_times', 0)
            if retry_times >= self.max_attempts:
                return response
            # Header values are stored as bytes, so decode before checking
            code = request.headers['Captcha-Code'].decode()
            if not self.client.check(request.url, code):
                # Verification failed: retry with a new code
                spider.logger.warning(f'Captcha check fail: {request.url}')
                # Copy meta so that existing keys survive the retry
                meta = dict(request.meta)
                meta['captcha'] = self.get_captcha(request.url, logger=spider.logger)
                meta['retry_times'] = retry_times + 1
                return request.replace(meta=meta, dont_filter=True)
            # Verification succeeded: pass the response on
            spider.logger.debug(f'Captcha check success: {request.url}')
            return response

        def get_captcha(self, url, logger=None):
            captcha = self.client.solve(url)
            if captcha:
                if logger:
                    logger.debug(f'get captcha [0:4]: {captcha[0:4]}')
                return captcha
            return None

In this middleware, the CaptchaClient object acts as the captcha-solving backend, and more than one such backend can be used.
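
One way to combine several backends is to try them in order until one returns a code. The following is a minimal sketch under stated assumptions: `FallbackCaptchaClient` and the three stand-in solver functions are hypothetical names, each solver is assumed to take the page URL and return the recognized code or None, and no real recognition service is called.

```python
from typing import Callable, Iterable, Optional

# Assumed solver shape: takes the page URL, returns the code or None on failure.
Solver = Callable[[str], Optional[str]]


class FallbackCaptchaClient:
    """Hypothetical client that tries several solvers in order."""

    def __init__(self, solvers: Iterable[Solver]):
        self.solvers = list(solvers)

    def solve(self, url: str) -> Optional[str]:
        for solver in self.solvers:
            try:
                code = solver(url)
            except Exception:
                # A failing backend should not stop the remaining ones
                continue
            if code:
                return code
        return None


def broken_solver(url: str) -> Optional[str]:
    """Stand-in for a backend that is unavailable."""
    raise RuntimeError("service unavailable")


def empty_solver(url: str) -> Optional[str]:
    """Stand-in for a backend that cannot read the image."""
    return None


def fixed_solver(url: str) -> Optional[str]:
    """Stand-in for a backend that succeeds."""
    return "7G4K"


client = FallbackCaptchaClient([broken_solver, empty_solver, fixed_solver])

if __name__ == "__main__":
    print(client.solve("https://example.com/login"))
```

Because the class keeps the same solve(url) method name, it could stand in wherever the middleware expects a single client.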

Notes

When implementing this middleware, please pay attention to the following points:

- Recognizing and processing verification codes requires third-party tools or APIs. We need to make sure we hold valid licenses and use the tools according to the provider's requirements.

- After adding such middleware, the request process will become more complex, and developers need to test and debug carefully to ensure that the program can work properly.
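
For the testing point in particular, a test double can replace the recognition service so that the retry logic is exercised offline. This is a simplified stand-in for the solve, check, retry cycle and not the middleware itself: `FakeCaptchaClient` and `solve_with_retries` are hypothetical names, and the solve(url) and check(url, code) methods mirror the ones the middleware is assumed to call.

```python
class FakeCaptchaClient:
    """Test double: check() rejects the first `failures` codes, then accepts."""

    def __init__(self, failures):
        self.failures = failures
        self.solve_calls = 0

    def solve(self, url):
        # Return a distinct code per call so tests can tell attempts apart
        self.solve_calls += 1
        return f"code-{self.solve_calls}"

    def check(self, url, code):
        if self.failures > 0:
            self.failures -= 1
            return False
        return True


def solve_with_retries(client, url, max_attempts=5):
    """Return an accepted code, or None after max_attempts rejected codes."""
    for _ in range(max_attempts):
        code = client.solve(url)
        if code and client.check(url, code):
            return code
    return None


if __name__ == "__main__":
    fake = FakeCaptchaClient(failures=2)
    print(solve_with_retries(fake, "https://example.com/login"), fake.solve_calls)
```

Counting the calls on the fake makes it easy to assert that the crawler stops asking for new codes once the attempt limit is reached.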

Conclusion

By combining the Scrapy framework with a middleware for verification code recognition and processing, we can get past the verification code defense and crawl the target website effectively. This approach usually saves time and effort compared with entering verification codes manually, and it is more efficient. However, be sure to read and comply with the license agreements and requirements of third-party tools and APIs before using them.

The above is the detailed content of The power of Scrapy: How to recognize and process verification codes?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

The operation process of WIN10 service host occupying too much CPU

Mar 27, 2024 pm 02:41 PM

The operation process of WIN10 service host occupying too much CPU

Mar 27, 2024 pm 02:41 PM

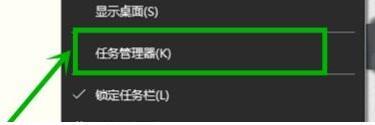

1. First, we right-click the blank space of the taskbar and select the [Task Manager] option, or right-click the start logo, and then select the [Task Manager] option. 2. In the opened Task Manager interface, we click the [Services] tab on the far right. 3. In the opened [Service] tab, click the [Open Service] option below. 4. In the [Services] window that opens, right-click the [InternetConnectionSharing(ICS)] service, and then select the [Properties] option. 5. In the properties window that opens, change [Open with] to [Disabled], click [Apply] and then click [OK]. 6. Click the start logo, then click the shutdown button, select [Restart], and complete the computer restart.

What should I do if Google Chrome does not display the verification code image? Chrome browser does not display the verification code?

Mar 13, 2024 pm 08:55 PM

What should I do if Google Chrome does not display the verification code image? Chrome browser does not display the verification code?

Mar 13, 2024 pm 08:55 PM

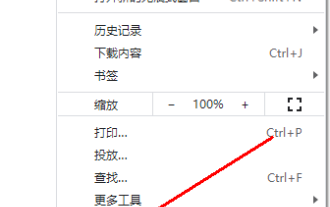

What should I do if Google Chrome does not display the verification code image? Sometimes you need a verification code to log in to a web page using Google Chrome. Some users find that Google Chrome cannot display the content of the image properly when using image verification codes. What should be done? The editor below will introduce how to deal with the Google Chrome verification code not being displayed. I hope it will be helpful to everyone! Method introduction: 1. Enter the software, click the "More" button in the upper right corner, and select "Settings" in the option list below to enter. 2. After entering the new interface, click the "Privacy Settings and Security" option on the left. 3. Then click "Website Settings" on the right

Can virtual numbers receive verification codes?

Jan 02, 2024 am 10:22 AM

Can virtual numbers receive verification codes?

Jan 02, 2024 am 10:22 AM

The virtual number can receive the verification code. As long as the mobile phone number filled in during registration complies with the regulations and the mobile phone number can be connected normally, you can receive the SMS verification code. However, you need to be careful when using virtual mobile phone numbers. Some websites do not support virtual mobile phone number registration, so you need to choose a regular virtual mobile phone number service provider.

A quick guide to CSV file manipulation

Dec 26, 2023 pm 02:23 PM

A quick guide to CSV file manipulation

Dec 26, 2023 pm 02:23 PM

Quickly learn how to open and process CSV format files. With the continuous development of data analysis and processing, CSV format has become one of the widely used file formats. A CSV file is a simple and easy-to-read text file with different data fields separated by commas. Whether in academic research, business analysis or data processing, we often encounter situations where we need to open and process CSV files. The following guide will show you how to quickly learn to open and process CSV format files. Step 1: Understand the CSV file format First,

Learn how to handle special characters and convert single quotes in PHP

Mar 27, 2024 pm 12:39 PM

Learn how to handle special characters and convert single quotes in PHP

Mar 27, 2024 pm 12:39 PM

In the process of PHP development, dealing with special characters is a common problem, especially in string processing, special characters are often escaped. Among them, converting special characters into single quotes is a relatively common requirement, because in PHP, single quotes are a common way to wrap strings. In this article, we will explain how to handle special character conversion single quotes in PHP and provide specific code examples. In PHP, special characters include but are not limited to single quotes ('), double quotes ("), backslash (), etc. In strings

How to handle XML and JSON data formats in C# development

Oct 09, 2023 pm 06:15 PM

How to handle XML and JSON data formats in C# development

Oct 09, 2023 pm 06:15 PM

How to handle XML and JSON data formats in C# development requires specific code examples. In modern software development, XML and JSON are two widely used data formats. XML (Extensible Markup Language) is a markup language used to store and transmit data, while JSON (JavaScript Object Notation) is a lightweight data exchange format. In C# development, we often need to process and operate XML and JSON data. This article will focus on how to use C# to process these two data formats, and attach

How to solve the problem after the upgrade from win7 to win10 fails?

Dec 26, 2023 pm 07:49 PM

How to solve the problem after the upgrade from win7 to win10 fails?

Dec 26, 2023 pm 07:49 PM

If the operating system we use is win7, some friends may fail to upgrade from win7 to win10 when upgrading. The editor thinks we can try upgrading again to see if it can solve the problem. Let’s take a look at what the editor did for details~ What to do if win7 fails to upgrade to win10. Method 1: 1. It is recommended to download a driver first to evaluate whether your computer can be upgraded to Win10. 2. Then use the driver test after upgrading. Check if there are any driver abnormalities, and then fix them with one click. Method 2: 1. Delete all files under C:\Windows\SoftwareDistribution\Download. 2.win+R run "wuauclt.e

How to use JavaScript to implement verification code function?

Oct 19, 2023 am 10:46 AM

How to use JavaScript to implement verification code function?

Oct 19, 2023 am 10:46 AM

How to use JavaScript to implement the verification code function? With the development of the Internet, verification codes have become one of the indispensable security mechanisms in websites and applications. Verification code (VerificationCode) is a technology used to determine whether the user is a human rather than a machine. With CAPTCHAs, websites and applications can prevent spam submissions, malicious attacks, bot crawlers, and more. This article will introduce how to use JavaScript to implement the verification code function and provide specific code