Seamless integration and data analysis of Spring Boot and Elastic Stack

As data volumes grow, so does the demand for data analysis. During development, we often need to collect the logs an application generates in one place, then analyze and visualize them. The Elastic Stack was built for this kind of problem. Spring Boot is a framework for quickly building enterprise-grade applications, and integrating it with the Elastic Stack has become a popular choice among developers.

This article introduces how to integrate Spring Boot with the Elastic Stack, and how to use the Elastic Stack to analyze and visualize the logs generated by business systems.

1. Integration method of Spring Boot and Elastic Stack

In Spring Boot, we can use a logging framework such as Log4j2 or Logback to collect and record application log data. In the setup described here, these logs reach the Elastic Stack through Logstash, so we need to configure a pipeline between the Spring Boot application and Logstash to transmit the data.

The following is a basic configuration example combining Spring Boot and Elastic Stack:

- Configure logstash:

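    input {
      tcp {
        port => 5000
        codec => json_lines
      }
    }

    output {
      elasticsearch {
        hosts => "localhost:9200"
        index => "logs-%{+YYYY.MM.dd}"
      }
    }

Here, Logstash listens on port 5000, receives log data from the Spring Boot application as newline-delimited JSON, and stores it in a daily logs-YYYY.MM.dd index in Elasticsearch. The json_lines codec is used rather than json because the Logback appender configured below sends one JSON event per line over a continuous TCP stream.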
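To make the daily index naming concrete, here is a minimal Java sketch that mirrors how the `logs-%{+YYYY.MM.dd}` pattern resolves for a given event. The class `LogIndexName` and its method are hypothetical helpers written for illustration, not part of Logstash. It relies on the fact that Logstash formats the date from the event's `@timestamp` in UTC.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

/** Illustrative helper (not part of Logstash): mirrors the daily index naming. */
final class LogIndexName {

    // Logstash resolves %{+YYYY.MM.dd} from the event's @timestamp in UTC,
    // so the formatter is pinned to UTC rather than the local time zone.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("uuuu.MM.dd").withZone(ZoneOffset.UTC);

    /** Returns the index name an event with this timestamp would be written to. */
    static String indexFor(Instant eventTimestamp) {
        return "logs-" + DAY.format(eventTimestamp);
    }

    public static void main(String[] args) {
        // Two events one second apart can land in different daily indices.
        System.out.println(indexFor(Instant.parse("2024-01-09T23:59:59Z")));
        System.out.println(indexFor(Instant.parse("2024-01-10T00:00:00Z")));
    }
}
```

Because the date comes from UTC, an application running in another time zone may see events from the same local day split across two indices. A wildcard pattern such as `logs-*` covers all of them when querying.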

- Introduce logback into the Spring Boot application to configure log output:

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration>

      <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
          <pattern>%d{ISO8601} [%thread] %-5level %logger{36} - %msg%n</pattern>
        </encoder>
      </appender>

      <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>localhost:5000</destination>
        <encoder class="net.logstash.logback.encoder.LogstashEncoder" />
      </appender>

      <root level="info">
        <appender-ref ref="STDOUT" />
        <appender-ref ref="LOGSTASH" />
      </root>

    </configuration>

In this Logback configuration file, we configure two appenders: STDOUT and LOGSTASH. STDOUT writes log output to the console, while LOGSTASH sends it as JSON to port 5000, the port we defined in the Logstash configuration. The LOGSTASH appender and its encoder come from the logstash-logback-encoder library, which must be added to the project's dependencies.

Through the above configuration, we can send the logs generated by the Spring Boot application to the Elastic Stack for storage and analysis.
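To show what travels over the TCP connection, the following Java sketch imitates the appender's job using only the standard library: it builds one JSON event and writes it, newline-terminated, to the Logstash port. The class `JsonLineLogSender` is a hypothetical stand-in for illustration, and the field names only approximate the defaults that LogstashEncoder emits. In a real application the Logback appender does this work, and application code simply logs as usual.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.time.Instant;

/** Hypothetical stand-in for the LOGSTASH appender, for illustration only. */
final class JsonLineLogSender {

    /** Builds one JSON event; field names approximate LogstashEncoder's defaults. */
    static String toJsonLine(Instant timestamp, String level, String logger, String message) {
        return "{\"@timestamp\":\"" + timestamp + "\","
                + "\"level\":\"" + escape(level) + "\","
                + "\"logger_name\":\"" + escape(logger) + "\","
                + "\"message\":\"" + escape(message) + "\"}";
    }

    /** Minimal JSON string escaping: backslash, quote, and control characters. */
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '\\': sb.append("\\\\"); break;
                case '"':  sb.append("\\\""); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.toString();
    }

    /** Sends one event, terminated by a newline, to the Logstash TCP input. */
    static void send(String host, int port, String jsonLine) throws IOException {
        try (Socket socket = new Socket(host, port);
             OutputStream out = socket.getOutputStream()) {
            // The trailing newline marks the end of the event on the stream.
            out.write((jsonLine + "\n").getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }
}
```

With Logstash running as configured above, a call such as `JsonLineLogSender.send("localhost", 5000, JsonLineLogSender.toJsonLine(Instant.now(), "INFO", "com.example.Demo", "started"))` should produce one document in the current day's index. The logger name here is made up for the example.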

2. Data analysis and visual display

After storing log data in Elasticsearch, we can use Kibana to query, analyze and visually display the data.

- Querying and analyzing log data

In Kibana, we can query and analyze log data through search queries and the Discover view. Search queries offer richer syntax and support operations such as filtering, sorting, and aggregation. Discover, on the other hand, is geared toward browsing documents and applying simple filters.
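The same kind of aggregation can also be run against Elasticsearch directly. The sketch below builds a search request that counts log events per level since a given time, using Java's built-in HTTP client (Java 11 or later). Several things here are assumptions rather than facts from the setup above: the class `LogLevelCounts` is hypothetical, the base URL is whatever your cluster exposes, and the field `level.keyword` assumes the index uses Elasticsearch's default dynamic mapping for string fields.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Illustrative sketch: count log events per level via the _search API. */
final class LogLevelCounts {

    /**
     * Builds the query body: keep events at or after `since`, return no hits
     * (size 0), and bucket the matching events by log level.
     * `since` is inserted as-is, so it must be a trusted value such as "now-1h".
     */
    static String queryBody(String since) {
        return "{"
                + "\"size\":0,"
                + "\"query\":{\"range\":{\"@timestamp\":{\"gte\":\"" + since + "\"}}},"
                + "\"aggs\":{\"by_level\":{\"terms\":{\"field\":\"level.keyword\"}}}"
                + "}";
    }

    /** Builds a POST to the search endpoint covering every daily logs-* index. */
    static HttpRequest searchRequest(String baseUrl, String since) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/logs-*/_search"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(queryBody(since)))
                .build();
    }

    /** Sends the request and returns the raw JSON response body. */
    static String run(String baseUrl, String since) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        return client.send(searchRequest(baseUrl, since),
                HttpResponse.BodyHandlers.ofString()).body();
    }
}
```

Calling `LogLevelCounts.run("http://localhost:9200", "now-1h")` against a running cluster should return a response whose `aggregations.by_level.buckets` array holds one entry per level. A secured cluster would additionally need authentication, which this sketch leaves out.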

- Visual display of log data

In addition to querying and analyzing log data, Kibana provides tools such as Dashboard, Visualization, and Canvas for displaying data visually.

Dashboard provides a way to combine multiple visualizations to build customized dashboards. Visualization allows us to display data through charts, tables, etc. Finally, Canvas provides a more flexible way to create more dynamic and interactive visualizations.

Through the above data analysis and visual display tools, we can convert the log data generated by the application into more valuable information, providing more support for the optimization and improvement of business systems.

Conclusion

This article introduced how to integrate Spring Boot with the Elastic Stack, and how to use the Elastic Stack to analyze and visualize the logs generated by business systems. In modern application development, data analysis and visualization have become indispensable, and the Elastic Stack provides an efficient, flexible, and scalable set of tools for both.

The above is the detailed content of Seamless integration and data analysis of Spring Boot and Elastic Stack. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Pandas is a powerful data analysis tool that can easily read and process various types of data files. Among them, CSV files are one of the most common and commonly used data file formats. This article will introduce how to use Pandas to read CSV files and perform data analysis, and provide specific code examples. 1. Import the necessary libraries First, we need to import the Pandas library and other related libraries that may be needed, as shown below: importpandasaspd 2. Read the CSV file using Pan

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Common data analysis methods: 1. Comparative analysis method; 2. Structural analysis method; 3. Cross analysis method; 4. Trend analysis method; 5. Cause and effect analysis method; 6. Association analysis method; 7. Cluster analysis method; 8 , Principal component analysis method; 9. Scatter analysis method; 10. Matrix analysis method. Detailed introduction: 1. Comparative analysis method: Comparative analysis of two or more data to find the differences and patterns; 2. Structural analysis method: A method of comparative analysis between each part of the whole and the whole. ; 3. Cross analysis method, etc.

11 basic distributions that data scientists use 95% of the time

Dec 15, 2023 am 08:21 AM

11 basic distributions that data scientists use 95% of the time

Dec 15, 2023 am 08:21 AM

Following the last inventory of "11 Basic Charts Data Scientists Use 95% of the Time", today we will bring you 11 basic distributions that data scientists use 95% of the time. Mastering these distributions helps us understand the nature of the data more deeply and make more accurate inferences and predictions during data analysis and decision-making. 1. Normal Distribution Normal Distribution, also known as Gaussian Distribution, is a continuous probability distribution. It has a symmetrical bell-shaped curve with the mean (μ) as the center and the standard deviation (σ) as the width. The normal distribution has important application value in many fields such as statistics, probability theory, and engineering.

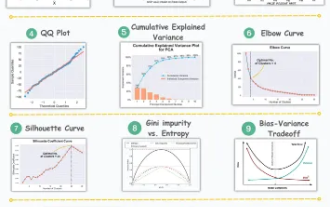

11 Advanced Visualizations for Data Analysis and Machine Learning

Oct 25, 2023 am 08:13 AM

11 Advanced Visualizations for Data Analysis and Machine Learning

Oct 25, 2023 am 08:13 AM

Visualization is a powerful tool for communicating complex data patterns and relationships in an intuitive and understandable way. They play a vital role in data analysis, providing insights that are often difficult to discern from raw data or traditional numerical representations. Visualization is crucial for understanding complex data patterns and relationships, and we will introduce the 11 most important and must-know charts that help reveal the information in the data and make complex data more understandable and meaningful. 1. KSPlotKSPlot is used to evaluate distribution differences. The core idea is to measure the maximum distance between the cumulative distribution functions (CDF) of two distributions. The smaller the maximum distance, the more likely they belong to the same distribution. Therefore, it is mainly interpreted as a "system" for determining distribution differences.

Machine learning and data analysis using Go language

Nov 30, 2023 am 08:44 AM

Machine learning and data analysis using Go language

Nov 30, 2023 am 08:44 AM

In today's intelligent society, machine learning and data analysis are indispensable tools that can help people better understand and utilize large amounts of data. In these fields, Go language has also become a programming language that has attracted much attention. Its speed and efficiency make it the choice of many programmers. This article introduces how to use Go language for machine learning and data analysis. 1. The ecosystem of machine learning Go language is not as rich as Python and R. However, as more and more people start to use it, some machine learning libraries and frameworks

How to use ECharts and php interfaces to implement data analysis and prediction of statistical charts

Dec 17, 2023 am 10:26 AM

How to use ECharts and php interfaces to implement data analysis and prediction of statistical charts

Dec 17, 2023 am 10:26 AM

How to use ECharts and PHP interfaces to implement data analysis and prediction of statistical charts. Data analysis and prediction play an important role in various fields. They can help us understand the trends and patterns of data and provide references for future decisions. ECharts is an open source data visualization library that provides rich and flexible chart components that can dynamically load and process data by using the PHP interface. This article will introduce the implementation method of statistical chart data analysis and prediction based on ECharts and php interface, and provide

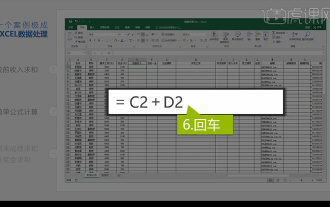

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

1. In this lesson, we will explain integrated Excel data analysis. We will complete it through a case. Open the course material and click on cell E2 to enter the formula. 2. We then select cell E53 to calculate all the following data. 3. Then we click on cell F2, and then we enter the formula to calculate it. Similarly, dragging down can calculate the value we want. 4. We select cell G2, click the Data tab, click Data Validation, select and confirm. 5. Let’s use the same method to automatically fill in the cells below that need to be calculated. 6. Next, we calculate the actual wages and select cell H2 to enter the formula. 7. Then we click on the value drop-down menu to click on other numbers.

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

Recommended: 1. Business Data Analysis Forum; 2. National People’s Congress Economic Forum - Econometrics and Statistics Area; 3. China Statistics Forum; 4. Data Mining Learning and Exchange Forum; 5. Data Analysis Forum; 6. Website Data Analysis; 7. Data analysis; 8. Data Mining Research Institute; 9. S-PLUS, R Statistics Forum.