How does Scrapy implement Docker containerization and deployment?


As modern web applications grow in number and complexity, web crawlers have become an important tool for data acquisition and analysis. Scrapy, one of the most popular Python crawler frameworks, offers powerful features and an easy-to-use API that help developers crawl and process web page data quickly. For large-scale crawling tasks, however, a single Scrapy instance is easily limited by the hardware it runs on, so Scrapy is often packaged into Docker containers so that it can be scaled out and deployed quickly.

This article will focus on how to implement Scrapy containerization and deployment. The main content includes:

- The basic architecture and working principle of Scrapy

- Introduction to Docker containerization and its advantages

- How Scrapy implements Docker containerization

- How Scrapy runs and deploys in Docker containers

- Practical application of Scrapy containerized deployment

- The basic architecture and working principle of Scrapy

Scrapy is a web crawler framework written in Python, mainly used to crawl data from the Internet. It consists of several components, including an engine, a scheduler, a downloader, middleware and parsers (called spiders in Scrapy), which together help developers build a web crawling system quickly.

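The basic architecture of Scrapy comprises the following components:

Engine: controls and coordinates the entire crawling process.

Scheduler: passes requests (Request) to the downloader according to a scheduling policy.

Downloader: downloads web pages and obtains their response data.

Middleware: intercepts, processes and modifies the requests and responses that pass between the scheduler and the downloader.

Parser: parses the response data obtained by the downloader and extracts data from it.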

The entire process is roughly as follows:

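1. The engine issues the initial requests for the target website.
2. The scheduler passes the initial requests to the downloader.
3. The downloader processes each request and obtains the response data.
4. The middleware pre-processes the response data.
5. The parser parses the pre-processed response data and extracts data from it.
6. The parser generates new requests and hands them to the scheduler.
7. This cycle repeats until the configured termination condition is reached.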
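The loop described above can be sketched in a few lines of plain Python. This is a toy model, not Scrapy's actual implementation: the in-memory `FAKE_SITE` dictionary and the `download`, `parse` and `crawl` functions are hypothetical names invented for illustration, and no network access or middleware is involved.

```python
from collections import deque

# Hypothetical in-memory "website": URL -> (page text, outgoing links).
FAKE_SITE = {
    "/": ("home", ["/a", "/b"]),
    "/a": ("page a", ["/b"]),
    "/b": ("page b", ["/"]),
}


def download(url):
    """Stand-in for the downloader: look the page up instead of fetching it."""
    return FAKE_SITE[url]


def parse(url, response):
    """Stand-in for the parser: return one extracted item plus follow-up URLs."""
    text, links = response
    return {"url": url, "text": text}, links


def crawl(start_url):
    """Toy engine loop: schedule, download, parse, repeat until the queue is empty."""
    scheduler = deque([start_url])  # pending requests
    seen = {start_url}              # duplicate filter
    items = []
    while scheduler:
        url = scheduler.popleft()
        item, links = parse(url, download(url))
        items.append(item)
        for link in links:
            if link not in seen:
                seen.add(link)
                scheduler.append(link)
    return items


if __name__ == "__main__":
    for item in crawl("/"):
        print(item)
```

Here the termination condition is simply an empty queue. A real crawl would also stop on limits such as a maximum depth or page count.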

- Introduction and advantages of Docker containerization

Docker is a lightweight containerization technology that packages an application and its dependencies into an independent executable package. Docker achieves a more stable and reliable operating environment by isolating applications and dependencies, and provides a series of life cycle management functions, such as build, release, deployment and monitoring.

Advantages of Docker containerization:

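1. Fast deployment: Docker packages an application and its dependencies into a self-contained executable package, which makes deployment and migration quick.
2. Resource savings: Docker containers are isolated from one another but share the host operating system's resources, which saves hardware resources and cost.
3. High portability: Docker containers can run on different operating systems and platforms, which improves the portability and flexibility of applications.
4. Ease of use: Docker provides simple, easy-to-use APIs and tools that developers and operations staff can pick up quickly.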

- How Scrapy implements Docker containerization

Before containerizing Scrapy with Docker, we need to understand some basic concepts and operations.

Docker image (Image): Docker image is a read-only template that can be used to create Docker containers. A Docker image can contain a complete operating system, applications, dependencies, etc.

Docker container (Container): A Docker container is a runnable instance created from a Docker image and contains the application and all of its dependencies. A Docker container can be started, stopped, paused, deleted, etc.

Docker registry (Registry): A Docker registry is a place used to store and share Docker images. Registries can be public or private. Docker Hub is one of the most popular public Docker registries.

In the process of Scrapy Dockerization, we need to perform the following operations:

1. Create a Dockerfile
2. Write the contents of the Dockerfile
3. Build the Docker image
4. Run the Docker container

Below we will introduce step by step how to implement Scrapy Dockerization.

- Create a Dockerfile

A Dockerfile is a text file used to build a Docker image. It contains a series of instructions that specify the base image, install dependencies, copy files and so on.

Create a Dockerfile in the project root directory:

$ touch Dockerfile

- Write the contents of the Dockerfile

We need to write a series of instructions in the Dockerfile to set up the Scrapy environment and package the application into a Docker image. The specific content is as follows:

FROM python:3.7-stretch

# Set the working directory
WORKDIR /app

# Install the system packages that Scrapy and its dependencies need
RUN apt-get update && apt-get install -y \
    build-essential \
    git \
    libffi-dev \
    libjpeg-dev \
    libpq-dev \
    libssl-dev \
    libxml2-dev \
    libxslt-dev \
    python3-dev \
    python3-pip \
    python3-lxml \
    zlib1g-dev

# Install Scrapy and the other Python dependencies listed in requirements.txt
COPY requirements.txt /app/
RUN pip install --no-cache-dir -r /app/requirements.txt

# Copy the Scrapy project code into the working directory, so that
# "scrapy crawl" can find scrapy.cfg when the container starts
COPY . /app

# Start the Scrapy spider
CMD ["scrapy", "crawl", "spider_name"]

The functions of the above instructions are as follows:

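FROM: uses the official Python 3.7 Docker image based on Debian Stretch as the base image.

WORKDIR: creates the /app directory in the container and sets it as the working directory.

RUN: installs Scrapy's dependencies in the container.

COPY: copies the application code and the dependency list to the specified location in the container.

CMD: starts the Scrapy spider in the container.

Remember to adjust the CMD instruction to your own needs, for example by replacing spider_name with the name of your spider.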
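One way to avoid editing the CMD instruction for every spider is to let a small Python entrypoint read the spider name from the environment. The sketch below is only an illustration under stated assumptions: the `SPIDER_NAME` and `SPIDER_ARGS` variables and the `build_scrapy_argv` helper are hypothetical names, not something Scrapy or Docker defines. It relies on the existing `scrapy crawl <name> -a key=value` command-line form.

```python
import os
import shlex


def build_scrapy_argv(environ):
    """Build the `scrapy crawl` argument list from environment variables.

    SPIDER_NAME and SPIDER_ARGS are hypothetical variable names chosen for
    this sketch; they are not defined by Scrapy or Docker.
    """
    spider = environ.get("SPIDER_NAME", "spider_name")
    argv = ["scrapy", "crawl", spider]
    # SPIDER_ARGS holds comma-separated key=value pairs, passed through as -a options.
    for pair in filter(None, environ.get("SPIDER_ARGS", "").split(",")):
        argv += ["-a", pair.strip()]
    return argv


if __name__ == "__main__":
    # Print the command only; a real entrypoint would instead hand control
    # to Scrapy, for example with os.execvp(argv[0], argv).
    argv = build_scrapy_argv(os.environ)
    print(shlex.join(argv))
```

If this file were saved as, say, run_spider.py and used as the container's command, the spider could then be selected at start time with `docker run -e SPIDER_NAME=... scrapy-crawler`.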

- Building a Docker image

Building a Docker image is a relatively simple operation. You only need to use the docker build command in the project root directory:

$ docker build -t scrapy-crawler .

Here, scrapy-crawler is the name of the image and . is the build context (the current directory). Do not omit the trailing dot.

- Run the Docker container

Running the Docker container is the last step of the process. Use the docker run command to start a container from the image you built, as follows:

$ docker run -it scrapy-crawler:latest

Here, scrapy-crawler is the name of the image and latest is its tag.
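The build and run steps can also be scripted. The following is a minimal sketch that assembles the same `docker build` and `docker run` commands with Python's standard library. The helper names are hypothetical, and the script defaults to a dry run that only prints the commands, so it can be tried on a machine without Docker. The `--rm` flag is an addition of this sketch; it removes the container once the crawl has finished.

```python
import shlex
import subprocess


def docker_build_cmd(image, context="."):
    """Command line for building an image from the Dockerfile in `context`."""
    return ["docker", "build", "-t", image, context]


def docker_run_cmd(image, tag="latest", remove=True):
    """Command line for starting a container from `image:tag`."""
    cmd = ["docker", "run"]
    if remove:
        cmd.append("--rm")  # delete the container after it exits
    cmd.append(f"{image}:{tag}")
    return cmd


def run(cmd, dry_run=True):
    """Print the command and, unless dry_run is set, execute it."""
    print("$", shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(docker_build_cmd("scrapy-crawler"))
    run(docker_run_cmd("scrapy-crawler"))
```

Passing `dry_run=False` to `run` executes the commands and raises an exception if either one fails. `shlex.join` requires Python 3.8 or later.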

- How to run and deploy Scrapy in a Docker container

Before deploying the Dockerized Scrapy project, we need to install Docker and Docker Compose. Docker Compose is a tool for defining and running multi-container Docker applications, which makes it quick to build and manage containerized Scrapy applications.

Below we will show step by step how to deploy the Dockerized Scrapy project with Docker Compose.

- Create the docker-compose.yml file

Create a docker-compose.yml file in the project root directory:

$ touch docker-compose.yml

- Write the contents of the docker-compose.yml file

Add the following configuration to docker-compose.yml:

version: '3'
services:
  app:
    build:
      context: .
      dockerfile: Dockerfile
    volumes:
      - .:/app
    command: scrapy crawl spider_name

In the above configuration, we define a service named app, use the build directive to tell Docker Compose to build the app image from the Dockerfile, and use the volumes directive to mount the project directory into the container at /app. The command directive sets the command that the container runs.
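When a project contains several spiders, the same service definition can be repeated once per spider. The sketch below generates such a file as plain text. It is an illustration only: the `compose_for_spiders` helper and the spider names used with it are hypothetical, and it assumes every spider name is also a valid Compose service name.

```python
def compose_for_spiders(spiders):
    """Render docker-compose.yml text with one service per spider name."""
    lines = ["version: '3'", "services:"]
    for name in spiders:
        lines += [
            f"  {name}:",
            "    build:",
            "      context: .",
            "      dockerfile: Dockerfile",
            "    volumes:",
            "      - .:/app",
            f"    command: scrapy crawl {name}",
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # "quotes" and "authors" are placeholder spider names.
    print(compose_for_spiders(["quotes", "authors"]), end="")
```

Redirecting the output into docker-compose.yml gives a file in which each spider runs in its own container.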

- Start Docker Compose

Run the following command in the project root directory to start Docker Compose:

$ docker-compose up -d

The -d option runs the containers in the background (detached mode).

- View the running status of the container

We can use the docker ps command to check the running status of the container. The following command lists all running containers, including the Scrapy container:

$ docker ps

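- View the container logs

We can use the docker logs command to view a container's logs. The following command prints the logs of the Scrapy container:

$ docker logs <CONTAINER_ID>

Here, CONTAINER_ID is the ID of the container.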

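- Practical application of Scrapy containerized deployment

Dockerized Scrapy can be applied in any scenario that needs to crawl and process web page data, so we can use it in a wide range of data analysis and mining tasks, such as e-commerce data analysis, public opinion analysis and scientific research.

For example, we can take advantage of the good scalability of Scrapy Docker containers to build a large-scale crawler system, and use Docker Swarm to scale and deploy the containers quickly. We can define the number of Scrapy containers in advance and then scale it up or down dynamically according to the workload, giving a crawler system that is quick to set up and efficient to run.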
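Running several identical containers only helps if each one crawls a different part of the workload. One possible approach, sketched below, is to split the start URLs by a stable checksum. This is not something Docker Swarm or Scrapy does for you: the helper names are hypothetical, and the sketch assumes each container is told its own index and the total replica count, for instance through environment variables.

```python
import zlib


def shard_for(url, replicas):
    """Map a URL to a replica index in [0, replicas) using a stable checksum."""
    return zlib.crc32(url.encode("utf-8")) % replicas


def urls_for_replica(urls, replica_index, replicas):
    """Return the subset of URLs that the given replica is responsible for."""
    return [u for u in urls if shard_for(u, replicas) == replica_index]


if __name__ == "__main__":
    # example.com URLs are placeholders for real start URLs.
    start_urls = [f"https://example.com/page/{n}" for n in range(10)]
    for index in range(3):
        print(index, urls_for_replica(start_urls, index, 3))
```

`zlib.crc32` is used instead of the built-in `hash()` because string hashes are randomized per Python process, so separate containers would not agree on the assignment.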

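Summary

This article introduced the basic process and steps for Dockerizing Scrapy. We first looked at the basic architecture and working principle of Scrapy, then at the advantages and use cases of Docker containerization, and finally at how to containerize and deploy Scrapy with a Dockerfile and Docker Compose. In practice, Dockerized Scrapy can be applied in any scenario that needs to process and analyze web page data, improving both productivity and system scalability.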

The above is the detailed content of How does Scrapy implement Docker containerization and deployment?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

PHP distributed system architecture and practice

May 04, 2024 am 10:33 AM

PHP distributed system architecture and practice

May 04, 2024 am 10:33 AM

PHP distributed system architecture achieves scalability, performance, and fault tolerance by distributing different components across network-connected machines. The architecture includes application servers, message queues, databases, caches, and load balancers. The steps for migrating PHP applications to a distributed architecture include: Identifying service boundaries Selecting a message queue system Adopting a microservices framework Deployment to container management Service discovery

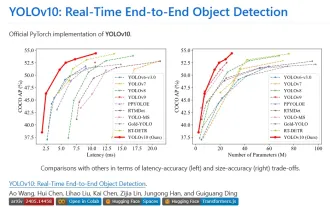

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

1. Introduction Over the past few years, YOLOs have become the dominant paradigm in the field of real-time object detection due to its effective balance between computational cost and detection performance. Researchers have explored YOLO's architectural design, optimization goals, data expansion strategies, etc., and have made significant progress. At the same time, relying on non-maximum suppression (NMS) for post-processing hinders end-to-end deployment of YOLO and adversely affects inference latency. In YOLOs, the design of various components lacks comprehensive and thorough inspection, resulting in significant computational redundancy and limiting the capabilities of the model. It offers suboptimal efficiency, and relatively large potential for performance improvement. In this work, the goal is to further improve the performance efficiency boundary of YOLO from both post-processing and model architecture. to this end

Agile development and operation of PHP microservice containerization

May 08, 2024 pm 02:21 PM

Agile development and operation of PHP microservice containerization

May 08, 2024 pm 02:21 PM

Answer: PHP microservices are deployed with HelmCharts for agile development and containerized with DockerContainer for isolation and scalability. Detailed description: Use HelmCharts to automatically deploy PHP microservices to achieve agile development. Docker images allow for rapid iteration and version control of microservices. The DockerContainer standard isolates microservices, and Kubernetes manages the availability and scalability of the containers. Use Prometheus and Grafana to monitor microservice performance and health, and create alarms and automatic repair mechanisms.

Pi Node Teaching: What is a Pi Node? How to install and set up Pi Node?

Mar 05, 2025 pm 05:57 PM

Pi Node Teaching: What is a Pi Node? How to install and set up Pi Node?

Mar 05, 2025 pm 05:57 PM

Detailed explanation and installation guide for PiNetwork nodes This article will introduce the PiNetwork ecosystem in detail - Pi nodes, a key role in the PiNetwork ecosystem, and provide complete steps for installation and configuration. After the launch of the PiNetwork blockchain test network, Pi nodes have become an important part of many pioneers actively participating in the testing, preparing for the upcoming main network release. If you don’t know PiNetwork yet, please refer to what is Picoin? What is the price for listing? Pi usage, mining and security analysis. What is PiNetwork? The PiNetwork project started in 2019 and owns its exclusive cryptocurrency Pi Coin. The project aims to create a one that everyone can participate

How to install deepseek

Feb 19, 2025 pm 05:48 PM

How to install deepseek

Feb 19, 2025 pm 05:48 PM

There are many ways to install DeepSeek, including: compile from source (for experienced developers) using precompiled packages (for Windows users) using Docker containers (for most convenient, no need to worry about compatibility) No matter which method you choose, Please read the official documents carefully and prepare them fully to avoid unnecessary trouble.

Deploy JavaEE applications using Docker Containers

Jun 05, 2024 pm 08:29 PM

Deploy JavaEE applications using Docker Containers

Jun 05, 2024 pm 08:29 PM

Deploy Java EE applications using Docker containers: Create a Dockerfile to define the image, build the image, run the container and map the port, and then access the application in the browser. Sample JavaEE application: REST API interacts with database, accessible on localhost after deployment via Docker.

How to use PHP CI/CD to iterate quickly?

May 08, 2024 pm 10:15 PM

How to use PHP CI/CD to iterate quickly?

May 08, 2024 pm 10:15 PM

Answer: Use PHPCI/CD to achieve rapid iteration, including setting up CI/CD pipelines, automated testing and deployment processes. Set up a CI/CD pipeline: Select a CI/CD tool, configure the code repository, and define the build pipeline. Automated testing: Write unit and integration tests and use testing frameworks to simplify testing. Practical case: Using TravisCI: install TravisCI, define the pipeline, enable the pipeline, and view the results. Implement continuous delivery: select deployment tools, define deployment pipelines, and automate deployment. Benefits: Improve development efficiency, reduce errors, and shorten delivery time.

How to deploy and maintain a website using PHP

May 03, 2024 am 08:54 AM

How to deploy and maintain a website using PHP

May 03, 2024 am 08:54 AM

To successfully deploy and maintain a PHP website, you need to perform the following steps: Select a web server (such as Apache or Nginx) Install PHP Create a database and connect PHP Upload code to the server Set up domain name and DNS Monitoring website maintenance steps include updating PHP and web servers, and backing up the website , monitor error logs and update content.