Why is the large model of China's most powerful AI research institute late?

Produced by Huxiu Technology Group

Author|Qi Jian

Editor|Chen Yifan

Header picture|FlagStudio

"Will OpenAI open source large models again?"

When Zhang Hongjiang, chairman of the Zhiyuan Research Institute, asked OpenAI CEO Sam Altman, who joined the 2023 Zhiyuan Conference remotely, about open source, Altman smiled and said that OpenAI would open up more code in the future, though he gave no specific timetable.

The exchange touched on one of the central themes of this year's conference: open-source large models.

On June 9, the 2023 Zhiyuan Conference opened in Beijing to a packed house. Around the venue, AI terms such as "computing power", "large model" and "ecosystem" kept coming up in conversations among attendees and among the many companies across the industry chain.

At the conference, the Zhiyuan Research Institute released Wu Dao 3.0 as a fully open-source suite, comprising the "Vision" series of large vision models, the "Sky Eagle" (Tianying) series of large language models, and "Libra", an original evaluation system for large models.

Open-sourcing a large model means making the model code public so that AI developers can study it. The "Sky Eagle" base language model in Wu Dao 3.0 is also licensed for commercial use, so anyone can use it free of charge.

"At present, OpenAI, Microsoft's close partner, along with Google and BAAI, are the three institutions at the forefront of artificial intelligence." In a recent interview, Microsoft President Brad Smith singled out BAAI, China's "strongest" AI research institution, as a peer of OpenAI and Google. That institution is the Beijing Zhiyuan Artificial Intelligence Research Institute. Many in the industry regard the AI conference it hosts as a bellwether for industry trends.

The Zhiyuan Research Institute, so highly regarded by Microsoft's president, launched its Wu Dao ("Enlightenment") large-model project as early as October 2020 and went on to release Wu Dao 1.0 and 2.0. Wu Dao 2.0 had an officially announced scale of 1.7 trillion parameters, at a time when only about a year had passed since OpenAI released the 175-billion-parameter GPT-3.

Yet this large-model pioneer has kept an unusually low profile during the large-model boom of the past six months.

While large models have appeared one after another from big tech companies and start-ups, Zhiyuan has stayed publicly "silent" for more than three months. Apart from SegGPT, which in early April collided with Meta's image-segmentation model SAM, it revealed almost nothing about its large-model work.

This has left many people inside and outside the AI industry asking: why does the Zhiyuan Research Institute, a leader in large AI models, seem to have arrived late to the large-model boom?

Will open-source models dismantle OpenAI's moat?

"Competition among large models is fierce right now, but neither OpenAI nor Google has a moat, because 'open source' is on the rise in large AI models."

In a leaked internal Google document, Google researchers argue that open-source models may lead the future of large-model development. The document states that open-source models iterate faster and are more customizable, more capable and more private, and that "people will not pay for a restricted model when free, unrestricted alternatives are comparable in quality." This may also be one of the reasons Zhiyuan chose to develop open-source large models.

Open-source large models licensed for commercial use are still rare. In a survey of released large AI models, the Zhiyuan Research Institute found that of 39 open-source large language models released outside China, 16 were licensed for commercial use. Of 28 large language models released in China, 11 were open source, but only one of those was both open source and commercially usable.
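
The survey counts above imply a few proportions that the article leaves implicit. As a minimal sketch, the Python below derives them directly from the reported numbers; the names `SURVEY`, `share` and `survey_shares` are hypothetical, chosen for illustration, and are not part of any Zhiyuan tool. The last ratio assumes, as the text says, that China's single commercially usable open model is one of its 11 open-source models.

```python
# Hypothetical illustration: counts copied from the survey figures in the article.
SURVEY = {
    "abroad_open_source": 39,      # open-source LLMs released outside China
    "abroad_open_commercial": 16,  # ...of which licensed for commercial use
    "china_total": 28,             # LLMs released in China
    "china_open_source": 11,       # ...of which open source
    "china_open_commercial": 1,    # ...of which also licensed for commercial use
}


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


def survey_shares(s: dict) -> dict:
    """Derive the percentage breakdowns implied by the raw survey counts."""
    return {
        # Of open-source LLMs released abroad, how many allow commercial use.
        "abroad_commercial_of_open": share(s["abroad_open_commercial"], s["abroad_open_source"]),
        # Of all LLMs released in China, how many are open source.
        "china_open_of_total": share(s["china_open_source"], s["china_total"]),
        # Of China's open-source LLMs, how many also allow commercial use.
        "china_commercial_of_open": share(s["china_open_commercial"], s["china_open_source"]),
    }


if __name__ == "__main__":
    for name, pct in survey_shares(SURVEY).items():
        print(f"{name}: {pct}%")
```

By this arithmetic, about 41% of the open-source models surveyed abroad allow commercial use, against roughly 9% of the open-source models surveyed in China.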

The large language model Zhiyuan has just released is both open source and licensed for commercial use, making it one of the few such models currently available. That status also means it has to be released with extra caution.

"Speaking for Zhiyuan, we certainly don't want our open-source model to look bad, so we release it cautiously," an AI researcher at the conference said. Open-source models are inevitably verified again and again, with large numbers of developers hunting for bugs. To safeguard the quality of its open-source model, Zhiyuan's R&D schedule may well have been slowed by its commitment to open source.

Huang Tiejun, president of the Zhiyuan Research Institute, believes that large models in China are still far from open enough. "We should further strengthen open source and openness. Open source and openness are also a form of competition: whether you really have good standards and good algorithms can only be proven through evaluation and comparison."

Domestic companies have released their large models with little transparency, and many people question whether the models were truly developed in-house. Some claim these products simply call ChatGPT through its API; others say they were trained on ChatGPT's answers or built on Meta's leaked LLaMA model. Open-sourcing a model removes such doubts at the root.

Yet open-sourcing models and improving technical transparency are not about proving one's innocence; they are about genuinely "pooling resources to accomplish big things." According to Zhiyuan, training the Tianying large language model costs more than 100,000 yuan per day. With China's "battle of a hundred models", or even "battle of a thousand models", in full swing, the cost of the many unnecessary, duplicated training runs across industries could be astronomical.

Open-source models can cut down on this duplicated training. For companies that need a model, starting directly from an open-source, commercially licensed large model and training it further on their own data may be the best route to deploying AI in industry applications.

Another motive for open-sourcing is to build up users and developers early, creating a healthy ecosystem that can later be commercialized. The founder of a Chinese large-model company told Huxiu: "OpenAI's GPT-1 and GPT-2 were both open-source large models, released to build a user base and raise the models' profile. Once GPT-3 had fully demonstrated what the models could do, commercialization became the priority and the models gradually became closed. That is also why open-source models generally do not permit commercial use: it leaves room for later commercialization."

Zhiyuan, however, is a non-profit research institution with no commercial motive in open-sourcing. On the one hand, it hopes that opening up foundation models and similar resources will spur research and innovation across the large-model industry and speed up industrial adoption. On the other, it may also want to gather more user feedback through open-source models and improve their usability in engineering practice.

Still, open-sourcing models is not "perfect".

An AI technical director at a big tech company told Huxiu that the market for commercializing large AI models currently falls into three tiers: first, leading players fully capable of developing their own models; second, enterprises that need to train proprietary models for specific scenarios; and third, small and medium-sized customers who only need general model capabilities and can meet their needs through API calls.

In this context, open-source models can save leading players with in-house R&D capabilities a great deal of time and money. Second- and third-tier companies, however, would need to build their own technical teams to train and tune the models. For many less technically capable companies, that makes deployment harder and more complicated, and to them open source can feel like a case of "free is the most expensive."

This "Wu Dao" is no longer that "Wu Dao"

Zhiyuan's Wu Dao 3.0 is a large-model series redeveloped from scratch, which is another reason for its "late" release.

With Wu Dao 2.0 already in place, why did Zhiyuan need to build a new model system? Partly because the models' technical direction was adjusted, and partly because their underlying training data was "replaced."

"Wu Dao 2.0 was developed in 2021, so whether it was a language model (such as GLM) or a text-to-image model (such as CogView), the algorithmic architecture it was based on is relatively dated by today's standards. Over the past year or so, model architectures in these fields have been further validated or have evolved. For language models, for example, it has been confirmed that a decoder-only architecture, trained on higher-quality data, delivers better generation performance in large-scale base models. For text-to-image models, we switched to a diffusion-based approach to innovate further. So in Wu Dao 3.0, we adopted these updates for the large language models, the large text-to-image generation models and so on," said Lin Yonghua, vice president and chief engineer of the Zhiyuan Research Institute. Building on research into earlier models, Wu Dao 3.0 has been rebuilt in many directions.

Wu Dao 3.0 has also comprehensively upgraded the training data of its base models. On the one hand, the training data uses the updated Wu Dao Chinese corpus, covering 2021 to the present, which has gone through stricter quality cleaning; on the other hand, a large amount of high-quality Chinese text has been added, including Chinese books and literature.

High-quality code datasets have also been added, so the base models have changed substantially as well.

Training data that is not natively Chinese has left many domestic models weak at understanding Chinese. Many large AI models in China and abroad are trained on massive open-source datasets from outside China, most notably the well-known Common Crawl.

Zhiyuan analyzed 1 million web pages from Common Crawl and was able to extract 39,052 Chinese-language pages. By source, 25,842 websites yielded Chinese content, and only 4,522 of them had IP addresses in mainland China, a mere 17%. This not only greatly reduces the accuracy of the Chinese data but also makes it less secure.

"The corpus used to train a base model largely determines the compliance, safety and values of the content produced by AIGC applications, fine-tuned models and other downstream uses," Lin Yonghua said. According to Lin, the Tianying base model's Chinese ability does not come from simple translation: enough Chinese knowledge has been "pressed into the model", with 99% of its Chinese internet data coming from domestic websites, so companies can safely continue training on top of it. At the same time, extensive fine-grained processing and cleaning of the data means a model with the same or even better performance can be trained on less data: with as little as 30% to 40% of the data, it can catch up with or surpass existing open-source models.

For now, this path may be the better option for Zhiyuan, because when it comes to training data it is at a disadvantage compared with internet companies. Large internet companies have rich user-interaction data and large amounts of copyrighted content to train on. Not long ago, Alibaba DAMO Academy released Youku-mPLUG, a video-language dataset whose content comes entirely from Youku, Alibaba's own video platform.

Lacking a large user base, Zhiyuan can obtain training data only by negotiating licenses with copyright holders and by accumulating it bit by bit through public-interest data projects. Even so, only part of Zhiyuan's Chinese dataset can currently be open-sourced, mainly because the copyrights to Chinese data are scattered across many institutions.
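
The Common Crawl figures quoted above can be sanity-checked with simple arithmetic. The minimal sketch below takes the counts reported in the article and computes the two ratios; the constant and function names are hypothetical, chosen only for illustration. By this calculation about 3.9% of the sampled pages yielded Chinese text, and 4,522 of 25,842 sites works out to about 17.5%, which the article gives as "only 17%".

```python
# Hypothetical illustration: counts copied from Zhiyuan's Common Crawl analysis
# as reported in the article.
SAMPLED_PAGES = 1_000_000      # Common Crawl pages analyzed
CHINESE_PAGES = 39_052         # pages from which Chinese text was extracted
SITES_WITH_CHINESE = 25_842    # websites that yielded Chinese content
SITES_IN_MAINLAND = 4_522      # ...of which hosted on mainland-China IPs


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


if __name__ == "__main__":
    # Share of sampled pages that contained extractable Chinese text.
    print(f"Chinese pages: {percent(CHINESE_PAGES, SAMPLED_PAGES)}% of sample")
    # Share of Chinese-yielding sites hosted on mainland-China IP addresses.
    print(f"Mainland-hosted sites: {percent(SITES_IN_MAINLAND, SITES_WITH_CHINESE)}%")
```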

Zhiyuan's training data has so far been obtained by coordinating with multiple parties. While the models are open source and the research is openly accessible, most of the data may be used only for Zhiyuan's own models and carries no right to secondary use. "China really needs an industry alliance for datasets that brings copyright holders together and plans AI training data in a unified way, but that will take wise top-level design," Lin Yonghua told Huxiu.

The Whampoa Military Academy of China's large-model industry

Wu Dao 3.0 tells a different story from Wu Dao 2.0, and the change in its R&D team is part of that story. As a pioneer of the large AI model industry, the Zhiyuan Research Institute is something like the Whampoa Military Academy of China's large models, a training ground for much of the field's talent. In today's large-model boom, its people, from Zhiyuan scholars to front-line engineers, are in high demand across the industry, and its original team has incubated several large-model start-ups. Earlier Wu Dao series combined research results released jointly by multiple external laboratories; Wu Dao 3.0, by contrast, was developed entirely by Zhiyuan's own team.

The Wu Dao 2.0 models, released in 2021, included Wenyuan, Wenlan, Wenhui and Wensu. The two core models were built by two Tsinghua University laboratories, and both teams have since founded their own companies and built independent products along the CPM and GLM lines.

Tsinghua University's Knowledge Engineering Laboratory (KEG), the main developer of GLM, released the open-source model ChatGLM-6B together with Zhipu AI, and it has been widely recognized in the industry. Shenyan Technology, formed by members of the Natural Language Processing and Social Humanities Computing Laboratory (THUNLP) in Tsinghua's Department of Computer Science, the main developer of CPM, has been courted by investors since its founding a year ago; Tencent Investment, Sequoia China, Qiji Chuangtan and other funds took part in its two financing rounds this year.

A person close to the Zhiyuan Research Institute told Huxiu that since large AI models took off in China, the Zhiyuan team has become a prime "hunting target" in the talent war: "The entire R&D team is being targeted by other companies and headhunters." In China's large-model industry today, money is the least scarce resource and people are the scarcest. On the recruitment platforms Liepin, Maimai and BOSS Zhipin, ChatGPT-related positions requiring a master's or doctoral degree generally pay more than 30,000 yuan a month, with the highest reaching 90,000.

"On salary, big IT companies don't have much of an advantage. Pay for large-model R&D is high across the board, and start-ups may offer even more competitive salaries," Yu Jia, COO of Xihu Xinchen, told Huxiu, adding that the talent war in the AI industry will only intensify. "To many Zhiyuan employees, a doubled salary isn't competitive at all, because companies are now poaching people at five or even ten times their pay. However idealistic you are and however you plan for the future, it is hard to resist an annual salary of more than a million."

A person close to Zhiyuan told Huxiu that because Zhiyuan is a non-profit research institution, its salaries can hardly match those of major internet companies or start-ups with heavy capital backing. Huxiu learned from headhunters that starting salaries for natural language processing experts now exceed 1 million yuan. For employees who have spent years on relatively low pay, it is hard not to waver when offered several times their salary.

Judging from Zhiyuan's current public information, however, most of the leaders of its core project teams still work full-time on the institute's R&D projects. "The Wu Dao 3.0 models, including Tianying, Libra and Vision, were all developed by Zhiyuan's own researchers," Lin Yonghua said, adding that Zhiyuan's current R&D strength ranks among the best in the industry.

The above is the detailed content of Why is the large model of China's most powerful AI research institute late?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

On May 30, Tencent announced a comprehensive upgrade of its Hunyuan model. The App "Tencent Yuanbao" based on the Hunyuan model was officially launched and can be downloaded from Apple and Android app stores. Compared with the Hunyuan applet version in the previous testing stage, Tencent Yuanbao provides core capabilities such as AI search, AI summary, and AI writing for work efficiency scenarios; for daily life scenarios, Yuanbao's gameplay is also richer and provides multiple features. AI application, and new gameplay methods such as creating personal agents are added. "Tencent does not strive to be the first to make large models." Liu Yuhong, vice president of Tencent Cloud and head of Tencent Hunyuan large model, said: "In the past year, we continued to promote the capabilities of Tencent Hunyuan large model. In the rich and massive Polish technology in business scenarios while gaining insights into users’ real needs

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Tan Dai, President of Volcano Engine, said that companies that want to implement large models well face three key challenges: model effectiveness, inference costs, and implementation difficulty: they must have good basic large models as support to solve complex problems, and they must also have low-cost inference. Services allow large models to be widely used, and more tools, platforms and applications are needed to help companies implement scenarios. ——Tan Dai, President of Huoshan Engine 01. The large bean bag model makes its debut and is heavily used. Polishing the model effect is the most critical challenge for the implementation of AI. Tan Dai pointed out that only through extensive use can a good model be polished. Currently, the Doubao model processes 120 billion tokens of text and generates 30 million images every day. In order to help enterprises implement large-scale model scenarios, the beanbao large-scale model independently developed by ByteDance will be launched through the volcano

Using Shengteng AI technology, the Qinling·Qinchuan transportation model helps Xi'an build a smart transportation innovation center

Oct 15, 2023 am 08:17 AM

Using Shengteng AI technology, the Qinling·Qinchuan transportation model helps Xi'an build a smart transportation innovation center

Oct 15, 2023 am 08:17 AM

"High complexity, high fragmentation, and cross-domain" have always been the primary pain points on the road to digital and intelligent upgrading of the transportation industry. Recently, the "Qinling·Qinchuan Traffic Model" with a parameter scale of 100 billion, jointly built by China Vision, Xi'an Yanta District Government, and Xi'an Future Artificial Intelligence Computing Center, is oriented to the field of smart transportation and provides services to Xi'an and its surrounding areas. The region will create a fulcrum for smart transportation innovation. The "Qinling·Qinchuan Traffic Model" combines Xi'an's massive local traffic ecological data in open scenarios, the original advanced algorithm self-developed by China Science Vision, and the powerful computing power of Shengteng AI of Xi'an Future Artificial Intelligence Computing Center to provide road network monitoring, Smart transportation scenarios such as emergency command, maintenance management, and public travel bring about digital and intelligent changes. Traffic management has different characteristics in different cities, and the traffic on different roads

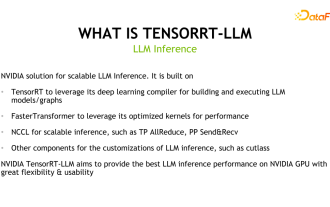

Uncovering the NVIDIA large model inference framework: TensorRT-LLM

Feb 01, 2024 pm 05:24 PM

Uncovering the NVIDIA large model inference framework: TensorRT-LLM

Feb 01, 2024 pm 05:24 PM

1. Product positioning of TensorRT-LLM TensorRT-LLM is a scalable inference solution developed by NVIDIA for large language models (LLM). It builds, compiles and executes calculation graphs based on the TensorRT deep learning compilation framework, and draws on the efficient Kernels implementation in FastTransformer. In addition, it utilizes NCCL for communication between devices. Developers can customize operators to meet specific needs based on technology development and demand differences, such as developing customized GEMM based on cutlass. TensorRT-LLM is NVIDIA's official inference solution, committed to providing high performance and continuously improving its practicality. TensorRT-LL

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

According to news on April 4, the Cyberspace Administration of China recently released a list of registered large models, and China Mobile’s “Jiutian Natural Language Interaction Large Model” was included in it, marking that China Mobile’s Jiutian AI large model can officially provide generative artificial intelligence services to the outside world. . China Mobile stated that this is the first large-scale model developed by a central enterprise to have passed both the national "Generative Artificial Intelligence Service Registration" and the "Domestic Deep Synthetic Service Algorithm Registration" dual registrations. According to reports, Jiutian’s natural language interaction large model has the characteristics of enhanced industry capabilities, security and credibility, and supports full-stack localization. It has formed various parameter versions such as 9 billion, 13.9 billion, 57 billion, and 100 billion, and can be flexibly deployed in Cloud, edge and end are different situations

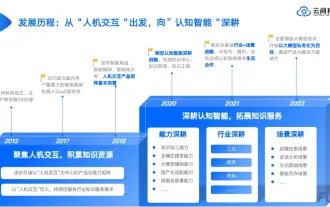

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

1. Background Introduction First, let’s introduce the development history of Yunwen Technology. Yunwen Technology Company...2023 is the period when large models are prevalent. Many companies believe that the importance of graphs has been greatly reduced after large models, and the preset information systems studied previously are no longer important. However, with the promotion of RAG and the prevalence of data governance, we have found that more efficient data governance and high-quality data are important prerequisites for improving the effectiveness of privatized large models. Therefore, more and more companies are beginning to pay attention to knowledge construction related content. This also promotes the construction and processing of knowledge to a higher level, where there are many techniques and methods that can be explored. It can be seen that the emergence of a new technology does not necessarily defeat all old technologies. It is also possible that the new technology and the old technology will be integrated with each other.

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

If the test questions are too simple, both top students and poor students can get 90 points, and the gap cannot be widened... With the release of stronger models such as Claude3, Llama3 and even GPT-5 later, the industry is in urgent need of a more difficult and differentiated model Benchmarks. LMSYS, the organization behind the large model arena, launched the next generation benchmark, Arena-Hard, which attracted widespread attention. There is also the latest reference for the strength of the two fine-tuned versions of Llama3 instructions. Compared with MTBench, which had similar scores before, the Arena-Hard discrimination increased from 22.6% to 87.4%, which is stronger and weaker at a glance. Arena-Hard is built using real-time human data from the arena and has a consistency rate of 89.1% with human preferences.

Xiaomi Byte joins forces! A large model of Xiao Ai's access to Doubao: already installed on mobile phones and SU7

Jun 13, 2024 pm 05:11 PM

Xiaomi Byte joins forces! A large model of Xiao Ai's access to Doubao: already installed on mobile phones and SU7

Jun 13, 2024 pm 05:11 PM

According to news on June 13, according to Byte's "Volcano Engine" public account, Xiaomi's artificial intelligence assistant "Xiao Ai" has reached a cooperation with Volcano Engine. The two parties will achieve a more intelligent AI interactive experience based on the beanbao large model. It is reported that the large-scale beanbao model created by ByteDance can efficiently process up to 120 billion text tokens and generate 30 million pieces of content every day. Xiaomi used the beanbao large model to improve the learning and reasoning capabilities of its own model and create a new "Xiao Ai Classmate", which not only more accurately grasps user needs, but also provides faster response speed and more comprehensive content services. For example, when a user asks about a complex scientific concept, &ldq