Configure Linux systems to support big data processing and analysis

Abstract: With the advent of the big data era, the demand for big data processing and analysis is increasing. This article describes how to configure applications and tools on a Linux system to support big data processing and analysis, and provides corresponding code examples.

Keywords: Linux system, big data, processing, analysis, configuration, code examples

Introduction: Big data, as an emerging data management and analysis technology, is now widely used in many fields. To keep big data processing and analysis efficient and reliable, it is critical to configure the Linux system correctly.

1. Install the Linux system

First, install a Linux system. Common distributions include Ubuntu and Fedora, so choose the one that suits your needs. During installation, it is recommended to choose the server edition, which leaves room for more detailed configuration once installation is complete.
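The package commands in this article use apt, which Debian, Ubuntu and their derivatives provide but Fedora does not (Fedora uses dnf and different package names). The following is a minimal sketch of a pre-flight check. It reads /etc/os-release, a shell-compatible KEY=value file found on current distributions, and succeeds only for the Debian/Ubuntu family. The function name is_apt_based is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given os-release file describes a
# Debian/Ubuntu-family system, where the apt commands in this article apply.
is_apt_based() {
  local file="${1:-/etc/os-release}"
  [ -r "$file" ] || return 1
  local ids
  # os-release uses shell syntax, so it can be sourced; the command
  # substitution runs in a subshell, keeping ID/ID_LIKE out of the caller.
  ids=$(unset ID ID_LIKE; . "$file" && echo "${ID:-} ${ID_LIKE:-}")
  case " $ids " in
    *" debian "* | *" ubuntu "*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, running "is_apt_based && sudo apt update" updates the package index only on a system where apt is expected to exist.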

2. Update the system and install necessary software

After the system is installed, update it and install some necessary software. First, run the following commands in a terminal to update the system:

sudo apt update
sudo apt upgrade

Next, install OpenJDK 8 (the Java Development Kit). Hadoop, Spark and most other big data processing and analysis tools run on the Java Virtual Machine:

sudo apt install openjdk-8-jdk

After the installation is complete, you can verify whether Java is installed successfully by running the following command:

java -version

If Java's version information is printed, the installation succeeded.
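A setup script may need to check the Java version itself. In that case, it can parse the output of java -version, which goes to standard error. The following is a minimal sketch. It assumes the output contains a quoted version string such as "1.8.0_292" (the legacy 1.x scheme used by Java 8) or "11.0.11" (Java 9 and later). The function name java_major_version is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `java -version` output on stdin and print the
# major Java version (8 for "1.8.0_292", 11 for "11.0.11").
java_major_version() {
  local ver
  # Take the first quoted string that follows the word "version"
  ver=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  [ -n "$ver" ] || return 1
  case "$ver" in
    1.*) ver=${ver#1.} ;;  # legacy scheme: 1.8.0_292 -> 8.0_292
  esac
  # Keep only the leading digits: 8.0_292 -> 8, 11.0.11 -> 11
  echo "${ver%%[!0-9]*}"
}
```

For example, "java -version 2>&1 | java_major_version" should print 8 after openjdk-8-jdk is installed. The 2>&1 is needed because java -version writes to standard error.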

3. Configure Hadoop

Hadoop is an open source big data processing framework that can handle extremely large data sets. The following are the steps to configure Hadoop:

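Download Hadoop and extract the archive. Releases that are no longer current are kept on archive.apache.org, under a directory named after the version:

wget https://archive.apache.org/dist/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz
tar -xzvf hadoop-3.3.0.tar.gz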
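Apache projects publish checksum files, such as a .sha512 file, alongside their release archives. It is worth comparing the download against that checksum before unpacking it. The layout of these files differs between projects and releases, so this minimal sketch takes the expected hash as an argument, copied from the checksum file. It ignores whitespace and letter case in that hash and compares it with the output of sha512sum from GNU coreutils. The function name verify_sha512 is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare a file's SHA-512 digest with an expected hex
# string. Whitespace and letter case in the expected value are ignored,
# because published checksum files are not all formatted the same way.
verify_sha512() {
  local file=$1 expected=$2 actual
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  actual=$(sha512sum "$file" | awk '{print $1}')   # sha512sum prints lowercase hex
  expected=$(printf '%s' "$expected" | tr -d '[:space:]' | tr 'A-F' 'a-f')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file" >&2
    return 1
  fi
}
```

For example: verify_sha512 hadoop-3.3.0.tar.gz "<hash from hadoop-3.3.0.tar.gz.sha512>". Run tar only if this prints OK.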

Configure environment variables:

Add the following lines to the ~/.bashrc file. The start-dfs.sh script and the other daemon scripts are in $HADOOP_HOME/sbin, so that directory goes on PATH as well:

export HADOOP_HOME=/path/to/hadoop-3.3.0
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

After saving the file, run the following command to make the configuration take effect:

source ~/.bashrc
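Editing ~/.bashrc by hand is easy to repeat by accident, which leaves duplicate export lines behind. One way to make the step safe to rerun is to append each line only if the file does not already contain it. The following is a minimal sketch of that idea. The function name append_once is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE unless FILE already contains
# exactly that line, so rerunning a setup script does not duplicate it.
append_once() {
  local file=$1 line=$2
  touch "$file"
  # -q: quiet, -x: match the whole line, -F: treat LINE as a fixed string
  grep -qxF -- "$line" "$file" && return 0
  # If the file does not end with a newline, add one so the new line stays separate
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}
```

For example: append_once ~/.bashrc 'export HADOOP_HOME=/path/to/hadoop-3.3.0'. Use single quotes for lines that contain variables such as $PATH, so that they are written literally and expanded only when the shell reads ~/.bashrc.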

Configure Hadoop's core files:

In the directory where Hadoop was extracted, edit the etc/hadoop/core-site.xml file and add the following content. The fs.defaultFS property sets the default file system, which here is the HDFS NameNode on localhost port 9000:

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

Next, edit the etc/hadoop/hdfs-site.xml file and add the following content. The replication factor is set to 1 because a single-node setup has only one DataNode to store each block:

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
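Both files have the same shape: a configuration element that holds property entries, each with a name and a value. If the setup is scripted, a small function can write such a file. The following is a minimal sketch under two assumptions. First, it writes a single property and overwrites the target file; a fresh download normally ships these files with an empty configuration element. Second, the name and value contain no XML special characters such as & or <. The function name write_hadoop_site is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a Hadoop *-site.xml file containing one property.
# Overwrites FILE. Assumes NAME and VALUE contain no XML special characters.
write_hadoop_site() {
  local file=$1 name=$2 value=$3
  cat > "$file" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>$name</name>
    <value>$value</value>
  </property>
</configuration>
EOF
}
```

With the values above, the two calls are: write_hadoop_site "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS hdfs://localhost:9000, and write_hadoop_site "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.replication 1.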

After saving the file, run the following command to format the Hadoop file system:

hdfs namenode -format
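Formatting writes a fresh, empty namespace. Formatting a NameNode that already holds data therefore discards its metadata. A DataNode initialised against the old namespace will also refuse to start afterwards, because its cluster ID no longer matches. A setup script can guard against this by checking for the current/VERSION file that formatting creates in the NameNode's name directory.

This minimal sketch assumes dfs.namenode.name.dir is left at its default, ${hadoop.tmp.dir}/dfs/name. In turn, hadoop.tmp.dir defaults to /tmp/hadoop-${user.name}. Because that path is under /tmp, systems that clear /tmp at boot will lose the metadata. The function name namenode_formatted is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if NAME_DIR looks like an already formatted
# NameNode directory, i.e. it contains current/VERSION.
# The default path assumes an otherwise unchanged Hadoop configuration.
namenode_formatted() {
  local name_dir=${1:-/tmp/hadoop-$(id -un)/dfs/name}
  [ -f "$name_dir/current/VERSION" ]
}
```

For example, "namenode_formatted || hdfs namenode -format" formats HDFS only on the first run.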

Finally, start the HDFS daemons:

start-dfs.sh
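The start-dfs.sh script starts the daemons over SSH, even when the host is the local machine. For this reason, the Hadoop single-node setup guide asks for passwordless SSH to localhost. If ssh localhost prompts for a password, the guide's fix is:

1. Create a key with ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa.
2. Append ~/.ssh/id_rsa.pub to ~/.ssh/authorized_keys.
3. Set that file's mode to 0600.

If the daemons report that JAVA_HOME is not set, the same guide sets it in etc/hadoop/hadoop-env.sh.

Once the script returns, the JDK's jps tool lists the running Java processes, one "<pid> <main class>" pair per line. The following is a minimal sketch that reads that listing and reports which of the three HDFS daemons are missing. The function name missing_hdfs_daemons is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin, print the HDFS daemons
# that are not running, and fail if any are missing.
missing_hdfs_daemons() {
  local listing daemon missing=""
  listing=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode; do
    # Anchored match, so "SecondaryNameNode" does not count as "NameNode"
    if ! grep -qE "^[0-9]+ ${daemon}\$" <<<"$listing"; then
      missing="$missing $daemon"
    fi
  done
  if [ -n "$missing" ]; then
    echo "${missing# }"
    return 1
  fi
}
```

For example, "jps | missing_hdfs_daemons && echo 'HDFS is up'". In Hadoop 3, the NameNode web interface is also served at http://localhost:9870 by default.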

4. Configure Spark

Spark is a fast, general-purpose engine for big data processing and analysis that can be used together with Hadoop. The steps to configure Spark are as follows.

Download Spark and extract the archive:

wget https://archive.apache.org/dist/spark/spark-3.1.2/spark-3.1.2-bin-hadoop3.2.tgz
tar -xzvf spark-3.1.2-bin-hadoop3.2.tgz

Configure environment variables:

Add the following lines to the ~/.bashrc file. The start-master.sh and start-worker.sh scripts are in $SPARK_HOME/sbin, so that directory goes on PATH as well:

export SPARK_HOME=/path/to/spark-3.1.2-bin-hadoop3.2
export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

After saving the file, run the following command to make the configuration take effect:

source ~/.bashrc

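Configure Spark's core file:

In the directory where Spark was extracted, copy conf/spark-env.sh.template to conf/spark-env.sh:

cp conf/spark-env.sh.template conf/spark-env.sh

Then edit conf/spark-env.sh and add the following content:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64
export HADOOP_HOME=/path/to/hadoop-3.3.0
export SPARK_MASTER_HOST=localhost
export SPARK_MASTER_PORT=7077
export SPARK_WORKER_CORES=4
export SPARK_WORKER_MEMORY=4g

Here, JAVA_HOME must point to the Java installation; the path above is where the openjdk-8-jdk package installs on 64-bit x86 Ubuntu. HADOOP_HOME must be set to the Hadoop installation path. SPARK_MASTER_HOST is the address the master listens on: localhost is enough when everything runs on one machine, but workers on other machines need this machine's IP address. SPARK_WORKER_CORES and SPARK_WORKER_MEMORY set how many CPU cores and how much memory the worker offers to applications, so they should not exceed what the machine actually has.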
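If the same settings must be applied on several machines, generating the file can be less error-prone than editing it by hand. The following is a minimal sketch under that assumption. It overwrites conf/spark-env.sh with the variables listed above; in a fresh download the template consists of comments only, so nothing else is lost. Cores and memory default to the 4 and 4g used above. The function name write_spark_env is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write SPARK_HOME/conf/spark-env.sh with the settings
# described above. Overwrites any existing spark-env.sh.
write_spark_env() {
  local spark_home=$1 java_home=$2 hadoop_home=$3
  local master_host=${4:-localhost} cores=${5:-4} memory=${6:-4g}
  mkdir -p "$spark_home/conf"
  cat > "$spark_home/conf/spark-env.sh" <<EOF
export JAVA_HOME=$java_home
export HADOOP_HOME=$hadoop_home
export SPARK_MASTER_HOST=$master_host
export SPARK_MASTER_PORT=7077
export SPARK_WORKER_CORES=$cores
export SPARK_WORKER_MEMORY=$memory
EOF
}
```

For example: write_spark_env "$SPARK_HOME" /usr/lib/jvm/java-8-openjdk-amd64 "$HADOOP_HOME" localhost "$(nproc)" 4g. Here nproc reports the number of available CPU cores.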

After saving the file, start the Spark master:

start-master.sh

Run the following command to view Spark’s Master address:

cat $SPARK_HOME/logs/spark-$USER-org.apache.spark.deploy.master*.out | grep 'Starting Spark master'

Start a Spark worker:

start-worker.sh spark://<master-ip>:<master-port>

Here, <master-ip> and <master-port> are the host and port of the master URL printed by the previous command. With the configuration above, the URL is spark://localhost:7077.
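The two manual steps above, reading the master URL from the log and passing it to start-worker.sh, can be combined. At startup, the master logs a line containing "Starting Spark master at spark://<host>:<port>". The following is a minimal sketch that extracts the most recent such URL from the given log files. The function name spark_master_url is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the most recent master URL found in the given
# Spark master log files, e.g. spark://localhost:7077.
spark_master_url() {
  [ $# -gt 0 ] || return 1   # without files, grep would wait on stdin
  local url
  # -h: omit file names, -o: print only the matching part of each line
  url=$(grep -ho 'Starting Spark master at spark://[^[:space:]]*' "$@" \
        | tail -n 1 | sed 's/^Starting Spark master at //')
  [ -n "$url" ] || return 1
  echo "$url"
}
```

For example: start-worker.sh "$(spark_master_url "$SPARK_HOME"/logs/spark-"$USER"-org.apache.spark.deploy.master*.out)".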

Summary: This article described how to configure a Linux system to run two big data processing and analysis tools, Hadoop and Spark. A correctly configured Linux system makes big data processing and analysis more efficient and reliable. Readers can follow the steps and sample code in this article to practice configuring and using these tools on their own Linux systems.

The above is the detailed content of Configure Linux systems to support big data processing and analysis. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Pandas is a powerful data analysis tool that can easily read and process various types of data files. Among them, CSV files are one of the most common and commonly used data file formats. This article will introduce how to use Pandas to read CSV files and perform data analysis, and provide specific code examples. 1. Import the necessary libraries First, we need to import the Pandas library and other related libraries that may be needed, as shown below: importpandasaspd 2. Read the CSV file using Pan

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Common data analysis methods: 1. Comparative analysis method; 2. Structural analysis method; 3. Cross analysis method; 4. Trend analysis method; 5. Cause and effect analysis method; 6. Association analysis method; 7. Cluster analysis method; 8 , Principal component analysis method; 9. Scatter analysis method; 10. Matrix analysis method. Detailed introduction: 1. Comparative analysis method: Comparative analysis of two or more data to find the differences and patterns; 2. Structural analysis method: A method of comparative analysis between each part of the whole and the whole. ; 3. Cross analysis method, etc.

Big data processing in C++ technology: How to use graph databases to store and query large-scale graph data?

Jun 03, 2024 pm 12:47 PM

Big data processing in C++ technology: How to use graph databases to store and query large-scale graph data?

Jun 03, 2024 pm 12:47 PM

C++ technology can handle large-scale graph data by leveraging graph databases. Specific steps include: creating a TinkerGraph instance, adding vertices and edges, formulating a query, obtaining the result value, and converting the result into a list.

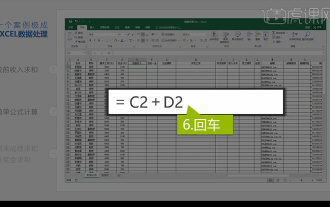

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

1. In this lesson, we will explain integrated Excel data analysis. We will complete it through a case. Open the course material and click on cell E2 to enter the formula. 2. We then select cell E53 to calculate all the following data. 3. Then we click on cell F2, and then we enter the formula to calculate it. Similarly, dragging down can calculate the value we want. 4. We select cell G2, click the Data tab, click Data Validation, select and confirm. 5. Let’s use the same method to automatically fill in the cells below that need to be calculated. 6. Next, we calculate the actual wages and select cell H2 to enter the formula. 7. Then we click on the value drop-down menu to click on other numbers.

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

What are the recommended data analysis websites?

Mar 13, 2024 pm 05:44 PM

Recommended: 1. Business Data Analysis Forum; 2. National People’s Congress Economic Forum - Econometrics and Statistics Area; 3. China Statistics Forum; 4. Data Mining Learning and Exchange Forum; 5. Data Analysis Forum; 6. Website Data Analysis; 7. Data analysis; 8. Data Mining Research Institute; 9. S-PLUS, R Statistics Forum.

Big data processing in C++ technology: How to use stream processing technology to process big data streams?

Jun 01, 2024 pm 10:34 PM

Big data processing in C++ technology: How to use stream processing technology to process big data streams?

Jun 01, 2024 pm 10:34 PM

Stream processing technology is used for big data processing. Stream processing is a technology that processes data streams in real time. In C++, Apache Kafka can be used for stream processing. Stream processing provides real-time data processing, scalability, and fault tolerance. This example uses ApacheKafka to read data from a Kafka topic and calculate the average.

Learn how to use the numpy library for data analysis and scientific computing

Jan 19, 2024 am 08:05 AM

Learn how to use the numpy library for data analysis and scientific computing

Jan 19, 2024 am 08:05 AM

With the advent of the information age, data analysis and scientific computing have become an important part of more and more fields. In this process, the use of computers for data processing and analysis has become an indispensable tool. In Python, the numpy library is a very important tool, which allows us to process and analyze data more efficiently and get results faster. This article will introduce the common functions and usage of numpy, and give some specific code examples to help you learn in depth. Installation of numpy library

Is the golang framework suitable for big data processing?

Jun 01, 2024 pm 10:50 PM

Is the golang framework suitable for big data processing?

Jun 01, 2024 pm 10:50 PM

The Go framework performs well in processing huge amounts of data, and its advantages include concurrency, high performance, and type safety. Go frameworks suitable for big data processing include ApacheBeam, Flink and Spark. In practical cases, the Beam pipeline can be used to efficiently process and transform large batches of data, such as converting a list of strings to uppercase.