UniApp's implementation techniques for speech recognition and speech synthesis


With advances in artificial intelligence, speech recognition and speech synthesis have become part of everyday life, and mobile applications are increasingly expected to support them. This article shows how to implement both features in UniApp, with code examples.

1. Implementation of the speech recognition function

Speech recognition can be implemented with the uni-voice plug-in. The steps are as follows:

- First, reference the uni-voice plug-in in the project's manifest.json file by adding the following configuration under the "pages" node:

    "plugin": {
        "voice": {
            "version": "1.2.0",
            "provider": "uni-voice"
        }
    }

- Place a button on the page that needs speech recognition to trigger it. For example, in the index.vue page:

    <template>
        <view>
            <button type="primary" @tap="startRecognizer">Start recognition</button>
        </view>
    </template>

- Write the JS code that performs speech recognition in the script block of index.vue. Sample code:

    import { voice } from '@/js_sdk/uni-voice'

    export default {
        methods: {
            // Called when the button is tapped
            startRecognizer() {
                uni.startRecognize({
                    lang: 'zh_CN', // recognition language: Chinese
                    // complete runs whether the call succeeds or fails
                    complete: res => {
                        if (res.errMsg === 'startRecognize:ok') {
                            console.log('Recognition result:', res.result)
                        } else {
                            console.error('Speech recognition failed', res.errMsg)
                        }
                    }
                })
            }
        }
    }

In the code above, the uni.startRecognize method starts speech recognition. The lang parameter sets the recognition language; 'zh_CN' selects Chinese. In the complete callback, the recognition result is available as res.result and can be processed as needed.
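The complete callback shown above can be wrapped in a Promise so that calling code can use async/await. The following is a minimal sketch and not part of the plug-in: `isOk` and `callWithComplete` are hypothetical helper names, and the only assumption is that the API accepts a `complete` callback whose `errMsg` equals `<apiName>:ok` on success, as in the sample above.

```javascript
// Hypothetical helper: true when res.errMsg has the "<apiName>:ok" form
// that the sample code above checks for.
function isOk(res, apiName) {
  return Boolean(res) && res.errMsg === `${apiName}:ok`;
}

// Hypothetical helper: wraps a callback-style API that reports its outcome
// through `complete`. `api` is the function to call and `apiName` is the
// prefix expected in res.errMsg.
function callWithComplete(api, apiName, options = {}) {
  return new Promise((resolve, reject) => {
    api({
      ...options,
      complete: (res) => {
        if (isOk(res, apiName)) {
          resolve(res); // success: hand the whole result object to the caller
        } else {
          // failure: surface errMsg as the error message
          reject(new Error((res && res.errMsg) || `${apiName}:fail`));
        }
      },
    });
  });
}
```

Inside a page method this might be used as `const res = await callWithComplete(opts => uni.startRecognize(opts), 'startRecognize', { lang: 'zh_CN' })`, with a surrounding try/catch handling the failure case.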

2. Implementation of the speech synthesis function

To implement speech synthesis in UniApp, use the uni.textToSpeech method. The steps are as follows:

- Place a button on the page that needs speech synthesis to trigger it. For example, in the index.vue page:

    <template>
        <view>
            <button type="primary" @tap="startSynthesis">Start synthesis</button>
        </view>
    </template>

- Write the JS code that performs speech synthesis in the script block of index.vue. Sample code:

    export default {
        methods: {
            // Called when the button is tapped
            startSynthesis() {
                uni.textToSpeech({
                    text: 'Hello, welcome to UniApp', // text to be spoken
                    // complete runs whether the call succeeds or fails
                    complete: res => {
                        if (res.errMsg === 'textToSpeech:ok') {
                            console.log('Speech synthesis succeeded')
                        } else {
                            console.error('Speech synthesis failed', res.errMsg)
                        }
                    }
                })
            }
        }
    }

In the code above, the uni.textToSpeech method performs speech synthesis. The text parameter sets the content to be spoken. In the complete callback, res.errMsg indicates whether synthesis succeeded.
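When the project is built for the browser (H5), the standard Web Speech API is one possible fallback for synthesis. The sketch below is an assumption about how such a fallback could look, not something the article's method provides: `speakInBrowser` is a hypothetical name, and the `env` parameter exists only so the function can be exercised outside a browser. `speechSynthesis` and `SpeechSynthesisUtterance` are the standard browser objects.

```javascript
// Sketch of a browser-side fallback using the standard Web Speech API.
// `env` defaults to the global object; pass a stand-in object when testing.
function speakInBrowser(text, lang = 'zh-CN', env = globalThis) {
  const synth = env.speechSynthesis;
  const Utterance = env.SpeechSynthesisUtterance;

  // Feature detection: not every runtime exposes the Web Speech API.
  if (!synth || typeof Utterance !== 'function') {
    return Promise.reject(new Error('Web Speech API is not available'));
  }

  return new Promise((resolve, reject) => {
    const utterance = new Utterance(text);
    utterance.lang = lang; // BCP 47 language tag, e.g. 'zh-CN'
    utterance.onend = () => resolve(); // fired when speaking finishes
    utterance.onerror = (event) =>
      reject(new Error(event.error || 'synthesis failed'));
    synth.speak(utterance); // queue the utterance for playback
  });
}
```

A page method could call `speakInBrowser('Hello, welcome to UniApp', 'en-US')` and fall back to another path when the returned Promise rejects.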

3. Summary

This article showed how to implement speech recognition and speech synthesis in UniApp. Both can be integrated into a UniApp project by using the uni-voice plug-in and the uni.textToSpeech method. I hope the walkthrough and sample code help readers add these features to their own applications quickly.

The above is the detailed content of UniApp's implementation techniques for speech recognition and speech synthesis. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

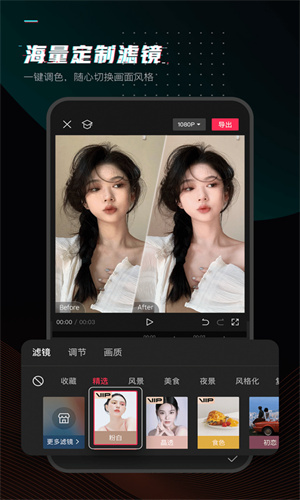

How to automatically recognize speech and generate subtitles in movie clipping. Introduction to the method of automatically generating subtitles

Mar 14, 2024 pm 08:10 PM

How to automatically recognize speech and generate subtitles in movie clipping. Introduction to the method of automatically generating subtitles

Mar 14, 2024 pm 08:10 PM

How do we implement the function of generating voice subtitles on this platform? When we are making some videos, in order to have more texture, or when narrating some stories, we need to add our subtitles, so that everyone can better understand the information of some of the videos above. It also plays a role in expression, but many users are not very familiar with automatic speech recognition and subtitle generation. No matter where it is, we can easily let you make better choices in various aspects. , if you also like it, you must not miss it. We need to slowly understand some functional skills, etc., hurry up and take a look with the editor, don't miss it.

How to implement an online speech recognition system using WebSocket and JavaScript

Dec 17, 2023 pm 02:54 PM

How to implement an online speech recognition system using WebSocket and JavaScript

Dec 17, 2023 pm 02:54 PM

How to use WebSocket and JavaScript to implement an online speech recognition system Introduction: With the continuous development of technology, speech recognition technology has become an important part of the field of artificial intelligence. The online speech recognition system based on WebSocket and JavaScript has the characteristics of low latency, real-time and cross-platform, and has become a widely used solution. This article will introduce how to use WebSocket and JavaScript to implement an online speech recognition system.

Detailed method to turn off speech recognition in WIN10 system

Mar 27, 2024 pm 02:36 PM

Detailed method to turn off speech recognition in WIN10 system

Mar 27, 2024 pm 02:36 PM

1. Enter the control panel, find the [Speech Recognition] option, and turn it on. 2. When the speech recognition page pops up, select [Advanced Voice Options]. 3. Finally, uncheck [Run speech recognition at startup] in the User Settings column in the Voice Properties window.

How to start preview of uniapp project developed by webstorm

Apr 08, 2024 pm 06:42 PM

How to start preview of uniapp project developed by webstorm

Apr 08, 2024 pm 06:42 PM

Steps to launch UniApp project preview in WebStorm: Install UniApp Development Tools plugin Connect to device settings WebSocket launch preview

Which one is better, uniapp or mui?

Apr 06, 2024 am 05:18 AM

Which one is better, uniapp or mui?

Apr 06, 2024 am 05:18 AM

Generally speaking, uni-app is better when complex native functions are needed; MUI is better when simple or highly customized interfaces are needed. In addition, uni-app has: 1. Vue.js/JavaScript support; 2. Rich native components/API; 3. Good ecosystem. The disadvantages are: 1. Performance issues; 2. Difficulty in customizing the interface. MUI has: 1. Material Design support; 2. High flexibility; 3. Extensive component/theme library. The disadvantages are: 1. CSS dependency; 2. Does not provide native components; 3. Small ecosystem.

so fast! Recognize video speech into text in just a few minutes with less than 10 lines of code

Feb 27, 2024 pm 01:55 PM

so fast! Recognize video speech into text in just a few minutes with less than 10 lines of code

Feb 27, 2024 pm 01:55 PM

Hello everyone, I am Kite. Two years ago, the need to convert audio and video files into text content was difficult to achieve, but now it can be easily solved in just a few minutes. It is said that in order to obtain training data, some companies have fully crawled videos on short video platforms such as Douyin and Kuaishou, and then extracted the audio from the videos and converted them into text form to be used as training corpus for big data models. If you need to convert a video or audio file to text, you can try this open source solution available today. For example, you can search for the specific time points when dialogues in film and television programs appear. Without further ado, let’s get to the point. Whisper is OpenAI’s open source Whisper. Of course it is written in Python. It only requires a few simple installation packages.

What are the disadvantages of uniapp

Apr 06, 2024 am 04:06 AM

What are the disadvantages of uniapp

Apr 06, 2024 am 04:06 AM

UniApp has many conveniences as a cross-platform development framework, but its shortcomings are also obvious: performance is limited by the hybrid development mode, resulting in poor opening speed, page rendering, and interactive response. The ecosystem is imperfect and there are few components and libraries in specific fields, which limits creativity and the realization of complex functions. Compatibility issues on different platforms are prone to style differences and inconsistent API support. The security mechanism of WebView is different from native applications, which may reduce application security. Application releases and updates that support multiple platforms at the same time require multiple compilations and packages, increasing development and maintenance costs.