Grasping glass fragments and underwater transparent objects: Tsinghua proposes a general transparent object grasping framework with a very high success rate


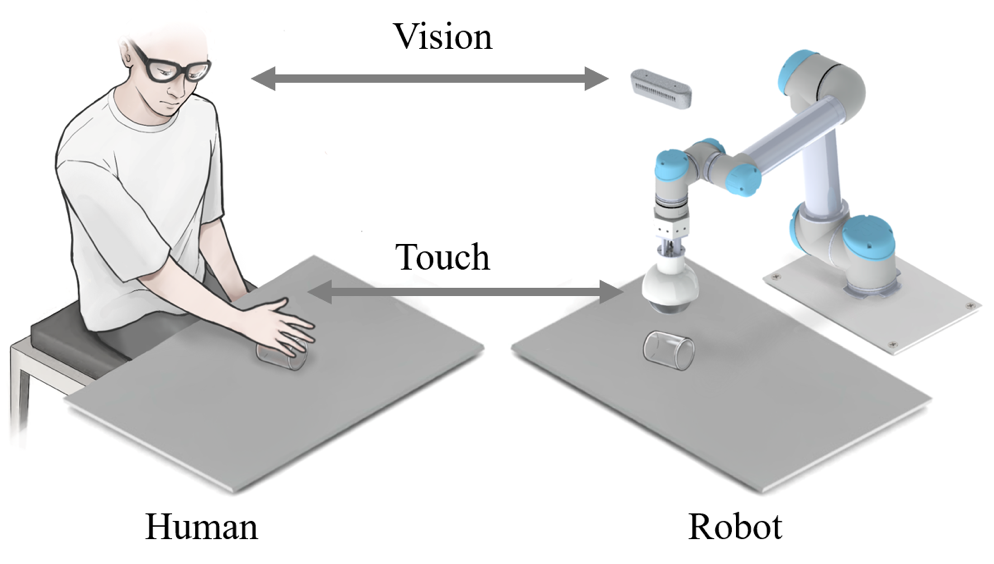

Perceiving and grasping transparent objects in complex environments is a recognized problem in robotics and computer vision. Recently, a team from Tsinghua University Shenzhen International Graduate School and its collaborators proposed a visual-tactile fusion framework for grasping transparent objects. The framework is based on an RGB camera and TaTa, a gripper with tactile sensing capabilities, and uses sim2real transfer to detect the grasping position of transparent objects. It can grasp not only irregular transparent objects such as glass fragments, but also transparent objects that are overlapping, stacked, lying on uneven surfaces or in sand, and even in highly dynamic underwater scenes.


Transparent objects are widely used in daily life because of their attractive, simple appearance, and can be found in kitchens, shops, and factories. Although they are common, grasping them is a very difficult problem for robots, for three main reasons:


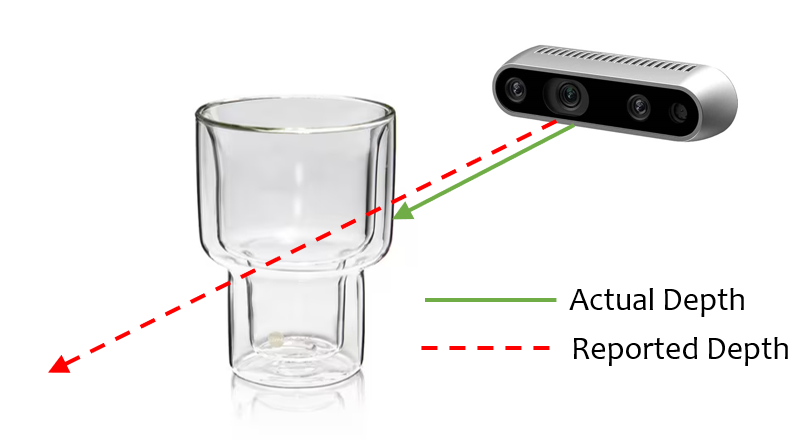

1. Transparent objects have no texture of their own. The information on their surface changes with the environment, and most of the apparent texture is produced by refraction and reflection of light, which makes transparent objects very difficult to detect.

2. Annotating transparent object datasets is harder than annotating ordinary objects. In real scenes, even humans sometimes struggle to distinguish transparent objects such as glass, let alone label images of them.

3. The surface of a transparent object is smooth, so even a small deviation in the grasping position may cause the grasp to fail.

How to grasp transparent objects in a variety of complex scenes at the lowest possible cost has therefore become a very important issue in transparent object research. Recently, the intelligent perception and robotics team from Tsinghua University Shenzhen International Graduate School proposed a transparent object grasping framework based on visual-tactile fusion to detect and grasp transparent objects. The method not only has a very high grasping success rate, but can also be adapted to grasping transparent objects in various complex scenes.


Paper link: https://ieeexplore.ieee.org/document/10175024

The corresponding author of the paper, Associate Professor Ding Wenbo of Tsinghua University Shenzhen International Graduate School, said: "Robots have shown great application value in home services, but most current robots focus on a single domain. A general-purpose robot grasping model would give great impetus to the promotion and application of robot technology. Although we use transparent objects as the research object, this framework can easily be extended to grasping common everyday objects."

The other corresponding author of the paper, researcher Liu Houde of Tsinghua University Shenzhen International Graduate School, said: "The unstructured environment of home scenes poses great challenges to the practical application of robots. We integrate vision and touch for perception, further simulating how humans perceive when they interact with the outside world and providing multiple safeguards for the stability of robot applications in complex scenarios. Beyond vision and touch, the framework we propose can also be extended to more modalities such as hearing."

Research Status

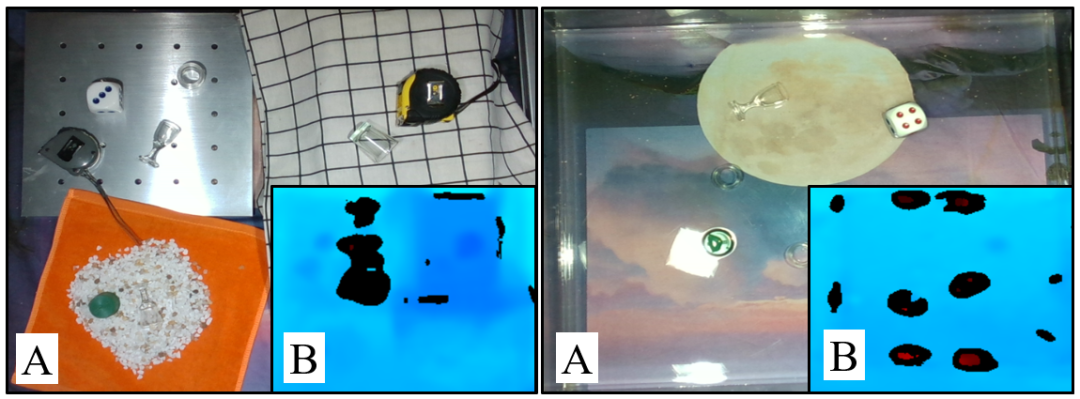

Grasping transparent objects is a challenging task. In addition to detecting the position of the object, the grasping position and angle must also be considered. Currently, most work on grasping transparent objects is carried out on a plane with a simple background, but most real-life scenes are not as ideal as an experimental environment. Some special scenes, such as glass fragments, piles, overlaps, undulating surfaces, sand, and underwater scenes, are more challenging.

- First, glass fragments are objects without a fixed model. Their random, variable shapes place high demands on the versatility of the grasping network and the grasping tool.

- Second, grasping transparent objects on undulating surfaces is also challenging. As shown in the figure below, the depth information of transparent objects is difficult to obtain accurately, and undulating scenes contain shadows, overlaps, and reflective areas that make transparent objects even harder to detect.

- Third, because water and transparent objects have similar optical properties, grasping transparent objects underwater is also a challenge. Even with a depth camera, transparent objects cannot be accurately detected in water, and the situation gets worse under illumination from different directions.


Algorithm Design


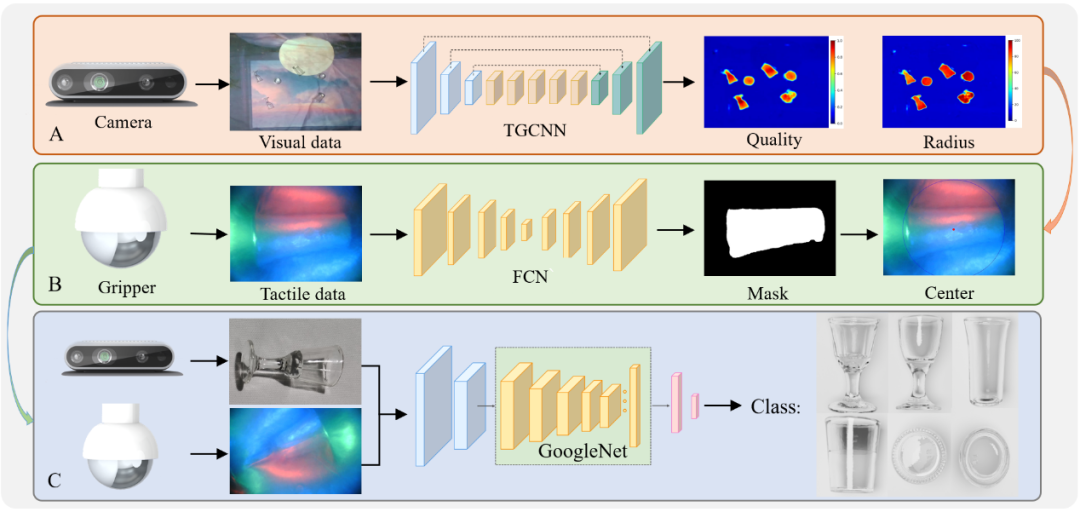

The design of the grasping algorithm is shown in the figure. To grasp transparent objects, we propose three algorithms: a transparent object grasping position detection algorithm, a tactile information extraction algorithm, and a visual-tactile fusion classification algorithm. To reduce the cost of labeling the dataset, we used Blender to create SimTrans12K, a multi-background synthetic dataset for transparent object grasping that contains 12,000 synthetic images and 160 real images. In addition to the dataset, we propose a Gaussian-Mask annotation method tailored to the unique optical properties of transparent objects. Since we use a jamming gripper as the end effector, we propose a specialized grasping network for it, TGCNN, which achieves good detection results after training on the synthetic dataset.
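To illustrate the general idea behind a Gaussian-style annotation, the sketch below contrasts a binary grasp label with a Gaussian-weighted one on a small grid. It is not the paper's Gaussian-Mask definition: the function names, the circular region, the grid size, and the `sigma` parameter are all assumptions made for illustration.

```python
import math

def binary_mask(height, width, center, radius):
    """Label every pixel within `radius` of the grasp centre as 1.0, else 0.0."""
    cy, cx = center
    return [
        [1.0 if math.hypot(y - cy, x - cx) <= radius else 0.0 for x in range(width)]
        for y in range(height)
    ]

def gaussian_mask(height, width, center, sigma):
    """Weight pixels by a 2D Gaussian that peaks at 1.0 on the grasp centre."""
    cy, cx = center
    return [
        [
            math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2.0 * sigma ** 2))
            for x in range(width)
        ]
        for y in range(height)
    ]

if __name__ == "__main__":
    # Hypothetical 5x5 label with the grasp centre in the middle.
    for row in gaussian_mask(5, 5, center=(2, 2), sigma=1.0):
        print(" ".join(f"{value:.2f}" for value in row))
```

A binary label treats every pixel inside the region as equally good, whereas the Gaussian label ranks the centre highest and decays smoothly outward. That kind of graded target is one plausible way to push a network toward precise grasp positions on smooth objects.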

Grasping framework

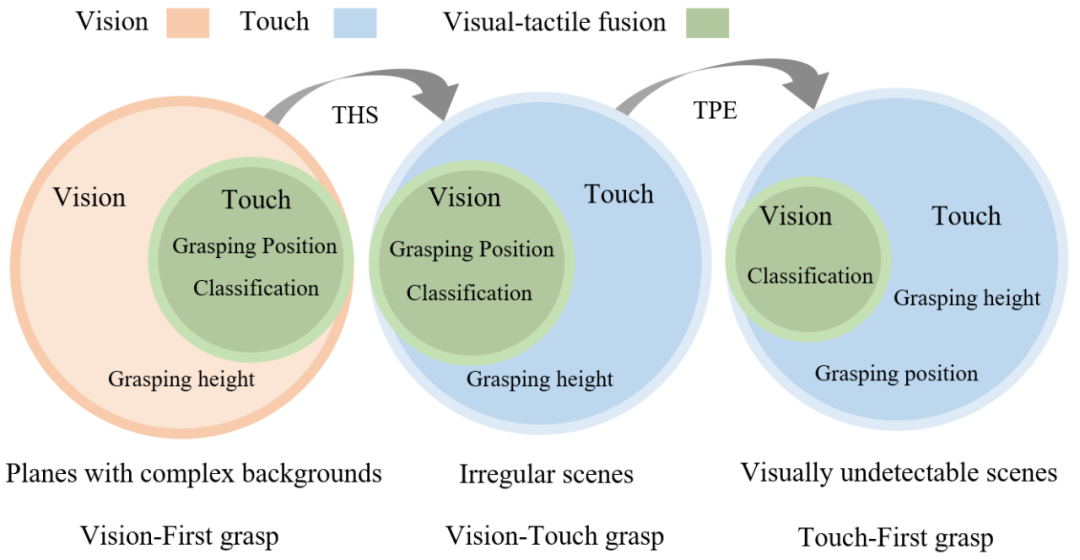

We integrate the above algorithms to grasp transparent objects in different scenarios, which constitutes the upper-level grasping strategy of our visual-tactile fusion framework. We decompose a grasping task into three subtasks: object classification, grasping position detection, and grasping height detection. Each subtask can be accomplished by vision, touch, or a combination of the two.

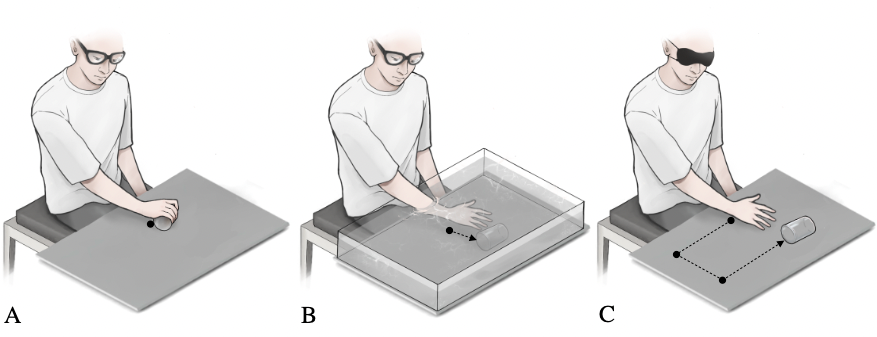

This mirrors human grasping behavior. When vision can directly obtain the precise position of an object, we move the hand straight to the object and complete the grasp, as shown in (A) in the figure below. When vision cannot accurately obtain the position of the object, we first estimate the position visually and then use the hand's tactile sensing to slowly adjust the grasping position until the object is contacted and a suitable grasping position is reached, as shown in (B). For grasping under limited vision, as shown in (C), we use the rich tactile nerves of the hand to search within the possible range of the target until contact with the object is made. Although this is very inefficient, it is an effective way to grasp objects in these special scenarios.
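The touch-guided behaviour described above, probing around a rough estimate until contact is made, can be sketched as a simple outward search. This is an illustrative sketch only, not the framework's actual search procedure: `tactile_search`, `ring_offsets`, the square-ring search pattern, and the `contact_at` sensor callback are all hypothetical.

```python
def ring_offsets(r):
    """Grid offsets at Chebyshev distance r from the origin (r = 0 is the origin)."""
    if r == 0:
        return [(0, 0)]
    return [
        (dx, dy)
        for dx in range(-r, r + 1)
        for dy in range(-r, r + 1)
        if max(abs(dx), abs(dy)) == r
    ]

def tactile_search(estimate, contact_at, max_radius, step=1.0):
    """Probe outward from the visual estimate until the sensor reports contact.

    `contact_at(x, y)` is a hypothetical callback standing in for a tactile
    reading at that position. Returns (position, probes_used), or
    (None, probes_used) if nothing is touched within `max_radius` rings.
    """
    ex, ey = estimate
    probes = 0
    for r in range(max_radius + 1):
        for dx, dy in ring_offsets(r):
            probes += 1
            candidate = (ex + dx * step, ey + dy * step)
            if contact_at(*candidate):
                return candidate, probes
    return None, probes

if __name__ == "__main__":
    target = (2.0, 1.0)  # hypothetical true object position
    found, used = tactile_search((0.0, 0.0), lambda x, y: (x, y) == target, max_radius=3)
    print(found, used)
```

When the visual estimate is already accurate, the search stops after a single probe. The poorer the estimate, the more probes are needed, which matches the article's point that touch-only search is effective but inefficient.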


Inspired by human grasping strategies, we divide transparent object grasping tasks into three types: flat surfaces with complex backgrounds, irregular scenes, and visually undetectable scenes, as shown in the figure below. In the first type, vision plays the key role, and we define the grasping method in this scenario as vision-first grasping. In the second type, vision and touch work together, and we define the method as visual-tactile grasping. In the last type, vision may fail and touch becomes dominant, so we define the method as touch-first grasping.


The flow of the vision-first grasping method is shown in the figure below. First, TGCNN is used to obtain the grasping position and height. Tactile information is then used to calibrate the grasping position, and finally the visual-tactile fusion algorithm is used for classification. Visual-tactile grasping builds on this flow and adds the THS module, which uses touch to obtain the height of the object. Touch-first grasping adds the TPE module, which uses touch to obtain the position of transparent objects.
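The relationship between the three scene types and the modules named above can be summarized as a small lookup. The sketch below is only a reading aid under stated assumptions: the `Scene` enum, the `plan` function, and the stage descriptions are hypothetical, and the article does not specify where THS or TPE sit in the execution order, or whether touch-first grasping also keeps THS, so extra modules are listed separately rather than ordered.

```python
from enum import Enum

class Scene(Enum):
    FLAT_COMPLEX_BACKGROUND = "flat surface with complex background"
    IRREGULAR = "irregular scene"
    VISUALLY_UNDETECTABLE = "visually undetectable scene"

# Base flow of the vision-first method, in the order the article gives.
BASE_STAGES = (
    "TGCNN: detect grasp position and height",
    "tactile calibration of the grasp position",
    "visual-tactile fusion classification",
)

METHOD_NAMES = {
    Scene.FLAT_COMPLEX_BACKGROUND: "vision-first",
    Scene.IRREGULAR: "visual-tactile",
    Scene.VISUALLY_UNDETECTABLE: "touch-first",
}

# Modules each method adds on top of the base flow (placement is an assumption).
EXTRA_MODULES = {
    Scene.FLAT_COMPLEX_BACKGROUND: (),
    Scene.IRREGULAR: ("THS: object height from touch",),
    Scene.VISUALLY_UNDETECTABLE: ("TPE: object position from touch",),
}

def plan(scene):
    """Return the grasping method and modules associated with a scene type."""
    return {
        "method": METHOD_NAMES[scene],
        "stages": BASE_STAGES,
        "extra_modules": EXTRA_MODULES[scene],
    }

if __name__ == "__main__":
    for scene in Scene:
        result = plan(scene)
        print(scene.value, "->", result["method"], result["extra_modules"])
```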

Experimental verification

To verify the effectiveness of the proposed framework and algorithms, we conducted a large number of experiments.

First, to test the effectiveness of the proposed transparent object dataset, annotation method, and grasping position detection network, we conducted synthetic data detection experiments and transparent object grasping position detection experiments under different backgrounds and brightness levels. Second, to verify the effectiveness of the visual-tactile fusion grasping framework, we designed a transparent object classification and grasping experiment and a transparent fragment grasping experiment. Third, we designed transparent object grasping experiments in irregular and visually restricted scenes to test the effectiveness of the framework after adding the THS and TPE modules.

Summary

To address the challenging problem of detecting, grasping, and classifying transparent objects, this study proposes a visual-tactile fusion framework based on a synthetic dataset. First, the Blender simulation engine is used to render a synthetic dataset in place of a manually annotated one.

In addition, Gaussian-Mask is used instead of the traditional binary annotation method to make the generated grasping positions more accurate. To detect the grasping position of transparent objects, the authors propose an algorithm called TGCNN and conduct multiple comparative experiments. The results show that even when trained only on synthetic data, the algorithm achieves good detection under different backgrounds and lighting conditions.

Considering the grasping difficulties caused by the limitations of visual detection, this study proposes a tactile calibration method combined with the soft gripper TaTa, which adjusts the grasping position using tactile information to improve the grasping success rate. Compared with purely visual grasping, this method improves the grasping success rate by 36.7%.

To classify transparent objects in complex scenes, this study proposes a transparent object classification method based on visual-tactile fusion. Compared with classification based on vision alone, its accuracy increases by 39.1%.

In addition, to grasp transparent objects in irregular and visually undetectable scenes, this study proposes the THS and TPE modules, which compensate for the lack of visual information when grasping transparent objects in such scenes. The researchers systematically designed a large number of experiments to verify the effectiveness of the proposed framework in complex scenes, including stacked, overlapping, undulating, sandy, and underwater scenes. The study suggests that the proposed framework can also be applied to object detection in low-visibility environments such as smoke and turbid water, where tactile perception can make up for the lack of visual detection and visual-tactile fusion can improve classification accuracy.

About the author

The advisor of the visual-tactile fusion transparent object grasping project is Ding Wenbo, currently an associate professor at Tsinghua University Shenzhen International Graduate School, where he leads the intelligent perception and robotics research group. His research interests mainly include signal processing, machine learning, wearable devices, flexible human-computer interaction, and machine perception. He received his bachelor's and doctoral degrees from the Department of Electronic Engineering of Tsinghua University and was a postdoctoral fellow at the Georgia Institute of Technology, where he studied under Academician Wang Zhonglin. He has won many awards, including the Tsinghua University Special Prize, the Gold Medal of the 47th International Exhibition of Inventions of Geneva, the IEEE Scott Helt Memorial Award, and the Second Prize of the Natural Science Award of the China Electronics Society. He has published more than 70 papers in authoritative journals including Nature Communications, Science Advances, Energy and Environmental Science, Advanced Energy Materials, and IEEE TRO/RAL, has been cited more than 6,000 times according to Google Scholar, and has been granted more than 10 patents in China and the United States. He serves as associate editor of the international signal processing journal Digital Signal Processing, lead guest editor of the IEEE JSTSP Special Issue on Robot Perception, and a member of the Applied Signal Processing Systems Technical Committee of the IEEE Signal Processing Society.

Homepage of the research group: http://ssr-group.net/.

From left to right: Shoujie Li, Haixin Yu, Houde Liu

The co-first authors of the paper are Shoujie Li (Ph.D. student at Tsinghua University) and Haixin Yu (Master's student at Tsinghua University). The corresponding authors are Wenbo Ding and Houde Liu. Other authors include Linqi Ye (Shanghai University), Chongkun Xia (Tsinghua University), Xueqian Wang (Tsinghua University), and Xiao-Ping Zhang (Tsinghua University). Shoujie Li's main research directions are robot grasping, tactile perception, and deep learning. As first author, he has published many papers in authoritative robotics and control journals and conferences such as Soft Robotics, TRO, RAL, ICRA, and IROS, and holds 10 granted invention patents. His projects have also won 10 provincial- and ministerial-level competition awards, and his research, with him as first author, was selected as an "ICRA 2022 Outstanding Mechanisms and Design Paper Finalist". He has received honors such as the Tsinghua University Future Scholar Scholarship and the National Scholarship.
