What crawler frameworks are there for PHP?

The operating environment of this tutorial: Windows 10, PHP 8.1.3, Dell G3 computer.

PHP is a popular server-side scripting language widely used for web development. Crawling, that is, programmatically collecting data from web pages, is a common task in this field. To simplify development and improve efficiency, the PHP ecosystem offers many crawler frameworks and libraries. Some commonly used ones are introduced below.

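1. Goutte: Goutte is a simple, easy-to-use PHP web scraping library. Built on Symfony components (BrowserKit, DomCrawler and an HTTP client), it provides a concise API for sending HTTP requests, parsing HTML and extracting the required data. Goutte does not execute JavaScript, so it suits static, server-rendered pages; pages that build their content in the browser need a headless-browser tool such as Symfony Panther. Goutte has also been deprecated by its maintainer in favor of Symfony's HttpBrowser class, which offers the same API.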

2. QueryPath: QueryPath is a jQuery-like library for querying and manipulating HTML and XML documents. It is written in PHP and modeled on jQuery's API rather than built on jQuery itself. It loads a document into a DOM (Document Object Model) and exposes a chainable set of methods similar to jQuery's, which makes common DOM operations simple. QueryPath also supports XPath queries, making data extraction more flexible.

3. Symfony DomCrawler: DomCrawler is a Symfony component for navigating HTML and XML documents, and it can be installed on its own without the full framework. It provides a simple API for parsing documents, extracting data and traversing DOM trees. Despite its name, it does not download pages itself, so it is usually paired with an HTTP client. DomCrawler supports chained calls and querying with XPath expressions and, when the companion CssSelector component is installed, CSS selectors.

4. PHPCrawl: PHPCrawl is an open source PHP crawler library that can crawl a variety of web resources, such as web pages, images and videos. The crawling process is customizable, so users can write crawling rules suited to a specific website. PHPCrawl also has a fault-tolerance mechanism that handles network connection errors and retries requests.

5. Guzzle: Guzzle is a popular PHP HTTP client that is often used as the transport layer of a crawler. It provides a concise and powerful API for sending HTTP requests and processing responses. Guzzle does not parse HTML itself, so it is typically combined with a parser such as DomCrawler. It supports concurrent and asynchronous requests, which makes it suitable for large crawling workloads.

6. Spider.php: Spider.php is a simple PHP crawler framework that uses the cURL library for network requests. Its API is small: users only need to write callback functions to handle request results. Spider.php supports concurrent requests and delay control between requests, which helps users implement highly customized crawler logic.
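
As a rough illustration of how two of the libraries above can be combined, the sketch below uses Guzzle to fetch pages concurrently and Symfony DomCrawler to extract the title and links from each response. It is only a sketch under assumptions: it presumes that `guzzlehttp/guzzle`, `symfony/dom-crawler` and `symfony/css-selector` have been installed with Composer, and the function names `extractPageData` and `crawl`, the timeout and the concurrency value are hypothetical choices made for this example, not part of either library.

```php
<?php
// Assumption: dependencies were installed with
//   composer require guzzlehttp/guzzle symfony/dom-crawler symfony/css-selector
require __DIR__ . '/vendor/autoload.php';

use GuzzleHttp\Client;
use GuzzleHttp\Pool;
use GuzzleHttp\Psr7\Request;
use Psr\Http\Message\ResponseInterface;
use Symfony\Component\DomCrawler\Crawler;

/**
 * Extract the page title and all link targets from an HTML string.
 * Hypothetical helper: it does no network access, so it can be tested offline.
 */
function extractPageData(string $html): array
{
    $crawler = new Crawler($html);

    // XPath query; the default '' is returned when the page has no <title>.
    $title = $crawler->filterXPath('//title')->text('');

    // CSS selector (needs symfony/css-selector); each() returns the collected values.
    $links = $crawler->filter('a[href]')->each(
        fn (Crawler $a): string => (string) $a->attr('href')
    );

    return ['title' => $title, 'links' => $links];
}

/**
 * Fetch several URLs concurrently and parse each successful response.
 * Hypothetical helper: returns an array keyed by URL.
 */
function crawl(array $urls, int $concurrency = 5): array
{
    $urls = array_values($urls);
    $client = new Client(['timeout' => 10]);
    $results = [];

    // Lazily yield one PSR-7 request per URL; keys are 0, 1, 2, ...
    $requests = (function () use ($urls) {
        foreach ($urls as $url) {
            yield new Request('GET', $url);
        }
    })();

    $pool = new Pool($client, $requests, [
        'concurrency' => $concurrency,
        // Called for each successful response; $index is the request's key.
        'fulfilled' => function (ResponseInterface $response, $index) use (&$results, $urls) {
            $results[$urls[$index]] = extractPageData((string) $response->getBody());
        },
        // Called for connection failures and, by default, 4xx/5xx responses.
        'rejected' => function ($reason, $index) use (&$results, $urls) {
            $message = $reason instanceof \Throwable ? $reason->getMessage() : 'request failed';
            $results[$urls[$index]] = ['error' => $message];
        },
    ]);

    // Start the transfers and block until all of them have settled.
    $pool->promise()->wait();

    return $results;
}
```

Calling `crawl()` with a list of URLs would return one entry per URL, holding either the extracted `title` and `links` or an `error` message. Keeping the parsing in a separate function from the fetching means the extraction rules can be checked against saved HTML without touching the network.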

These are some commonly used PHP crawler frameworks, each with its own characteristics and applicable scenarios. Choosing a framework that matches the project's needs can improve development efficiency and crawling performance. Whether the task is simple data collection or complex website scraping, these frameworks provide the required functionality and simplify development.

The above is the detailed content of What crawler frameworks are there for php?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

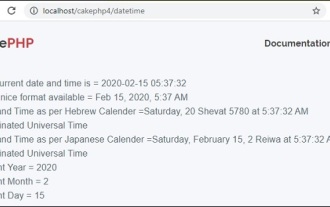

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

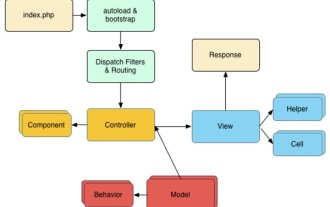

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

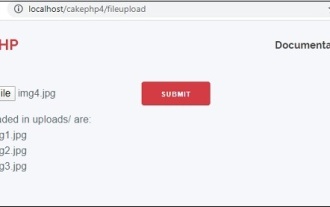

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

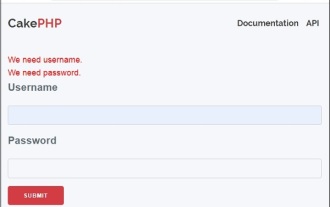

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

CakePHP Quick Guide

Sep 10, 2024 pm 05:27 PM

CakePHP Quick Guide

Sep 10, 2024 pm 05:27 PM

CakePHP is an open source MVC framework. It makes developing, deploying and maintaining applications much easier. CakePHP has a number of libraries to reduce the overload of most common tasks.

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

This tutorial demonstrates how to efficiently process XML documents using PHP. XML (eXtensible Markup Language) is a versatile text-based markup language designed for both human readability and machine parsing. It's commonly used for data storage an