Intel Zhang Yu: Edge computing plays an important role in the entire AI ecosystem

[Global Network Technology Reporter Lin Mengxue] Generative AI and large models are currently enjoying enormous popularity around the world. At the recently concluded 2023 World Artificial Intelligence Conference (WAIC 2023), vendors even set off a "Hundred Model War": according to incomplete statistics from the organizing committee, more than 30 large-model platforms were released or unveiled, 60% of the offline booths presented generative AI technology and its applications, and 80% of the attendees' discussions revolved around large models.

During WAIC 2023, Zhang Yu, senior principal AI engineer at Intel Corporation and chief technology officer of the Network and Edge Division in China, said that the core force driving this round of AI development is in fact the continuous advancement of computing, communication and storage technologies. Across the entire AI ecosystem, whether for large models or for AI fusion, the edge plays a vital role.

Zhang Yu said, "With the digital transformation of industry, demand for agile connectivity, real-time services and intelligent applications has driven the development of edge AI. However, most edge AI applications are still at the inference stage. That is, we use large amounts of data and enormous computing power to train a model in the data center, then push the trained model to the front end to run inference. This is the mode in which most AI is used at the edge today."

"This mode inevitably limits how often models can be updated, yet we have also seen that many intelligent industries actually need model updates. Autonomous driving, for example, must adapt to all kinds of road conditions and to the habits of different drivers. But when we train a model at the car factory, the training data often differ from the data generated during actual driving. This difference affects the model's generalization ability, that is, its ability to adapt to new road conditions and new driving behaviors. We need to keep training and optimizing models at the edge to move this process forward," he said.
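The pattern Zhang Yu describes, training centrally and then updating at the edge once real-world data drift away from the training set, can be illustrated with a toy example. The sketch below is purely hypothetical and is not Intel's method: it fits a one-variable linear model with plain stochastic gradient descent on made-up "factory" data, measures its error on a made-up, shifted "road" dataset, and then fine-tunes the same model on that local data for a few epochs. All names, data and hyperparameters are illustrative assumptions.

```python
import random

def mse(w, b, data):
    """Mean squared error of the linear model y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def sgd(w, b, data, lr=0.05, epochs=50):
    """Plain stochastic gradient descent on squared error; returns updated (w, b)."""
    for _ in range(epochs):
        for x, y in data:
            err = w * x + b - y
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    return w, b

def make_data(slope, offset, n, rng):
    """Hypothetical noise-free samples of y = slope*x + offset with x in [-1, 1]."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, slope * x + offset) for x in xs]

rng = random.Random(0)
factory_data = make_data(2.0, 1.0, 200, rng)  # assumed "car factory" training set
edge_data = make_data(2.0, 3.0, 50, rng)      # assumed drifted on-road data

w, b = sgd(0.0, 0.0, factory_data)            # central training in the data center
edge_before = mse(w, b, edge_data)            # deployed model, inference only
w_edge, b_edge = sgd(w, b, edge_data, epochs=5)  # small incremental update on the device
edge_after = mse(w_edge, b_edge, edge_data)

print(f"edge MSE before update: {edge_before:.3f}, after: {edge_after:.3f}")
```

In this toy setting the edge update starts from the centrally trained weights rather than from scratch, so a handful of passes over a small local dataset is enough to close the gap; that is the kind of lightweight, frequent update that the inference-only mode rules out.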

Zhang Yu therefore proposed that the second stage of AI development should be the edge training stage. "To implement edge training, we need more automated methods and tools to complete the full development workflow, from data annotation to model training to model deployment." He added that the next direction for edge AI should be autonomous learning.

In practice, edge AI also faces many challenges. In Zhang Yu's view, besides the challenges of edge training itself, there are challenges on the device side. "Because the power budget available for delivering computing power at the edge is often limited, performing edge inference and training with limited resources places higher demands on a chip's performance per watt." He also pointed out that edge devices are highly fragmented, and using software to enable migration between different platforms raises further requirements.

In addition, AI development is closely tied to computing power, and behind that computing power lies a huge data foundation. With such massive data assets, how to protect data has become a hot topic in edge AI development. "Once AI is deployed at the edge, these models are beyond the service provider's control. How do we protect the model then? It needs solid protection both in storage and at runtime. These are the challenges facing edge AI."

"Intel is a data company, and our products cover every aspect of computing, communications and storage. On the computing side, Intel offers CPUs, GPUs, FPGAs and a range of AI accelerator chips to meet users' different computing-power requirements. For large AI models, for example, the Gaudi2 product launched by Intel's Habana is the only product in the industry that has demonstrated excellent performance in large-model training. For edge inference, Intel's OpenVINO deep learning deployment toolkit can quickly deploy models that developers design and train on open AI frameworks to different hardware platforms for inference."

Hot Topics

Intel announces Wi-Fi 7 BE201 network card, supports CNVio3 interface

Jun 07, 2024 pm 03:34 PM

Intel announces Wi-Fi 7 BE201 network card, supports CNVio3 interface

Jun 07, 2024 pm 03:34 PM

According to news from this site on June 1, Intel updated the support document on May 27 and announced the product details of the Wi-Fi7 (802.11be) BE201 network card code-named "Fillmore Peak2". Source of the above picture: benchlife website Note: Unlike the existing BE200 and BE202 which use PCIe/USB interface, BE201 supports the latest CNVio3 interface. The main specifications of the BE201 network card are similar to those of the BE200. It supports 2x2TX/RX streams, supports 2.4GHz, 5GHz and 6GHz. The maximum network speed can reach 5Gbps, which is far lower than the maximum standard rate of 40Gbit/s. BE201 also supports Bluetooth 5.4 and Bluetooth LE.

Intel Core Ultra 9 285K processor exposed: CineBench R23 multi-core running score is 18% higher than i9-14900K

Jul 25, 2024 pm 12:25 PM

Intel Core Ultra 9 285K processor exposed: CineBench R23 multi-core running score is 18% higher than i9-14900K

Jul 25, 2024 pm 12:25 PM

According to news from this website on July 25, the source Jaykihn posted a tweet on the X platform yesterday (July 24), sharing the running score data of the Intel Core Ultra9285K "ArrowLake-S" desktop processor. The results show that it is better than the Core 14900K 18% faster. This site quoted the content of the tweet. The source shared the running scores of the ES2 and QS versions of the Intel Core Ultra9285K processor and compared them with the Core i9-14900K processor. According to reports, the TD of ArrowLake-SQS when running workloads such as CinebenchR23, Geekbench5, SpeedoMeter, WebXPRT4 and CrossMark

Intel N250 low-power processor exposed: 4 cores, 4 threads, 1.2 GHz frequency

Jun 03, 2024 am 10:26 AM

Intel N250 low-power processor exposed: 4 cores, 4 threads, 1.2 GHz frequency

Jun 03, 2024 am 10:26 AM

According to news from this site on May 16, the source @InstLatX64 recently tweeted that Intel is preparing to launch a new N250 "TwinLake" series of low-power processors to replace the N200 series "AlderLake-N" series. Source: videocardz The N200 series processors are popular in low-cost laptops, thin clients, embedded systems, self-service and point-of-sale terminals, NAS and consumer electronics. "TwinLake" is the code name of the new processor series, which is somewhat similar to the single-chip processor Dies using a ring bus (RingBus) layout, but with an E-core cluster to complete the computing power. The screenshots attached to this site are as follows: AlderLake-N

MSI launches new MS-C918 mini console with Intel Alder Lake-N N100 processor

Jul 03, 2024 am 11:33 AM

MSI launches new MS-C918 mini console with Intel Alder Lake-N N100 processor

Jul 03, 2024 am 11:33 AM

This website reported on July 3 that in order to meet the diversified needs of modern enterprises, MSIIPC, a subsidiary of MSI, has recently launched the MS-C918, an industrial mini host. No public price has been found yet. MS-C918 is positioned for enterprises that focus on cost-effectiveness, ease of use and portability. It is specially designed for non-critical environments and provides a 3-year service life guarantee. MS-C918 is a handheld industrial computer, using Intel AlderLake-NN100 processor, specially tailored for ultra-low power solutions. The main functions and features of MS-C918 attached to this site are as follows: Compact size: 80 mm x 80 mm x 36 mm, palm size, easy to operate and hidden behind the monitor. Display function: via 2 HDMI2.

ASUS releases BIOS update for Z790 motherboards to alleviate instability issues with Intel's 13th/14th generation Core processors

Aug 09, 2024 am 12:47 AM

ASUS releases BIOS update for Z790 motherboards to alleviate instability issues with Intel's 13th/14th generation Core processors

Aug 09, 2024 am 12:47 AM

According to news from this website on August 8, MSI and ASUS today launched a beta version of BIOS containing the 0x129 microcode update for some Z790 motherboards in response to the instability issues in Intel Core 13th and 14th generation desktop processors. ASUS's first batch of motherboards to provide BIOS updates include: ROGMAXIMUSZ790HEROBetaBios2503ROGMAXIMUSZ790DARKHEROBetaBios1503ROGMAXIMUSZ790HEROBTFBetaBios1503ROGMAXIMUSZ790HEROEVA-02 joint version BetaBios2503ROGMAXIMUSZ790A

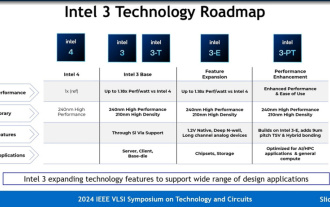

Intel explains in detail the Intel 3 process: applying more EUV lithography, increasing the frequency of the same power consumption by up to 18%

Jun 19, 2024 pm 10:53 PM

Intel explains in detail the Intel 3 process: applying more EUV lithography, increasing the frequency of the same power consumption by up to 18%

Jun 19, 2024 pm 10:53 PM

According to news from this site on June 19, as part of the 2024 IEEEVLSI seminar activities, Intel recently introduced the technical details of the Intel3 process node on its official website. Intel's latest generation of FinFET transistor technology is Intel's latest generation of FinFET transistor technology. Compared with Intel4, it has added steps to use EUV. It will also be a node family that provides foundry services for a long time, including basic Intel3 and three variant nodes. Among them, Intel3-E natively supports 1.2V high voltage, which is suitable for the manufacturing of analog modules; while the future Intel3-PT will further improve the overall performance and support finer 9μm pitch TSV and hybrid bonding. Intel claims that as its

Intel Panther Lake mobile processor specifications exposed: up to '4+8+4' 16-core CPU, 12 Xe3 core display

Jul 18, 2024 pm 04:43 PM

Intel Panther Lake mobile processor specifications exposed: up to '4+8+4' 16-core CPU, 12 Xe3 core display

Jul 18, 2024 pm 04:43 PM

According to news from this site on July 16, following the revelation of the specifications of the ArrowLake desktop processor and the BartlettLake desktop processor, blogger @jaykihn0 released the specifications of the mobile U and H versions of the Intel PantherLake processor in the early morning. The Panther Lake mobile processor is expected to be named the Core Ultra300 series and will be available in the following versions: PTL-U: 4P+0E+4LPE+4Xe, 15WPL1PTL-H: 4P+8E+4LPE+12Xe, 25WPL1PTL-H: 4P+8E+4LPE+ 4Xe, 25WPL1. The blogger also released the 12Xe nuclear display version of the PantherLake processor.

6700E debuts in China on June 6, Intel officially launches Xeon 6 series processors

Jun 06, 2024 am 10:51 AM

6700E debuts in China on June 6, Intel officially launches Xeon 6 series processors

Jun 06, 2024 am 10:51 AM

This site reported on June 4 that Intel plans to launch a new generation of Xeon processors in batches from now to the first quarter of next year, of which the Xeon 6700E will be launched in China on June 6. Intel plans to launch the Xeon 6900P "Granite Rapids" in the international market in the third quarter of 2024, with up to 128 cores; and the Xeon 6900E "Sierra Forest" in the first quarter of 2025, with up to 288 cores. The "Xeon 6" series is divided into E and P series: P series The P series is mainly aimed at computing-intensive and AI workloads such as high-performance computing, database and analysis, artificial intelligence, network, edge and infrastructure/storage, with up to 128 Personal performance cores, including 6900P/