How to use PHP and swoole for large-scale web crawler development?

Introduction:

With the rapid development of the Internet, data has become an important resource, and web crawlers exist to collect it: they automatically visit websites and extract the required information from them. In this article, we will explore how to use PHP and the Swoole extension to develop efficient, large-scale web crawlers.

1. Understand the basic principles of web crawlers

The basic principle of a web crawler is simple: send HTTP requests the way a browser would, parse the content of the returned page, and extract the required information. When implementing a web crawler in PHP, we can use the cURL library to send HTTP requests and regular expressions or a DOM parser to parse the HTML.
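The fetch-and-parse cycle described above can be sketched as follows. This is a minimal illustration, not a complete crawler: the function names fetchPage() and parsePage() are hypothetical, the 10-second timeout is an arbitrary assumption, and the sketch only extracts the page title and link targets.

```php
<?php

// Fetch a page with cURL. Returns the response body, or null on failure.
// (Hypothetical helper; the timeout value is an assumption.)
function fetchPage(string $url, int $timeoutSeconds = 10): ?string
{
    $ch = curl_init($url);
    curl_setopt_array($ch, [
        CURLOPT_RETURNTRANSFER => true, // return the body instead of printing it
        CURLOPT_FOLLOWLOCATION => true, // follow redirects
        CURLOPT_TIMEOUT        => $timeoutSeconds,
    ]);
    $body = curl_exec($ch);

    return is_string($body) ? $body : null;
}

// Extract the page title and all non-empty link targets from an HTML string.
function parsePage(string $html): array
{
    if (trim($html) === '') {
        return ['title' => null, 'links' => []];
    }

    $doc = new DOMDocument();
    // Real-world HTML is rarely valid, so collect libxml warnings silently.
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $titleNode = $doc->getElementsByTagName('title')->item(0);

    $links = [];
    foreach ($doc->getElementsByTagName('a') as $anchor) {
        $href = trim($anchor->getAttribute('href'));
        if ($href !== '') {
            $links[] = $href;
        }
    }

    return [
        'title' => $titleNode !== null ? trim($titleNode->textContent) : null,
        'links' => $links,
    ];
}
```

A caller would pass the result of fetchPage('https://example.com/page1') to parsePage() after checking that it is not null. A DOM parser is used here rather than regular expressions because it tolerates malformed markup better.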

2. Use the Swoole extension to optimize the performance of web crawlers

Swoole is a production-oriented coroutine framework for PHP, delivered as a PHP extension. Its coroutines let a single process keep many network requests in flight: while one coroutine waits on I/O, the others keep running. For a crawler, this means thousands of concurrent connections can be handled, so many pages are fetched and parsed at the same time instead of one after another.

The following is a simple web crawler example written using swoole:

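```php
<?php

// Swoole is a PHP extension (enabled in php.ini), so no autoloader include is needed.

use Swoole\Coroutine as Co;
use Swoole\Coroutine\WaitGroup;

// Make blocking calls such as file_get_contents() yield to other coroutines
Co::set(['hook_flags' => SWOOLE_HOOK_ALL]);

// Crawler logic
function crawler(string $url): array
{
    $html = file_get_contents($url);
    if ($html === false) {
        return [];
    }

    $data = [];
    // Parse the HTML and extract the required information
    // ...

    return $data;
}

// Main function
Co\run(function () {
    $urls = [
        'https://example.com/page1',
        'https://example.com/page2',
        'https://example.com/page3',
        // ...
    ];

    // Create one coroutine task per URL
    $wg = new WaitGroup();
    foreach ($urls as $url) {
        $wg->add();
        Co::create(function () use ($url, $wg) {
            $data = crawler($url);
            echo $url . ' completed.' . PHP_EOL;
            // Process the crawled data
            // ...
            $wg->done();
        });
    }

    // Wait for all coroutine tasks to complete
    $wg->wait();
});
```

In the above example, Co\run() creates a coroutine environment, and Co::create() from the Swoole\Coroutine namespace starts one coroutine task per URL. Setting hook_flags to SWOOLE_HOOK_ALL makes file_get_contents() suspend only the current coroutine while it waits for the network, so the other tasks keep running. When each coroutine task completes, the completed URL is printed and the data is processed. Finally, a Swoole\Coroutine\WaitGroup waits for all coroutine tasks to complete.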

In this way, we can easily implement high-concurrency web crawlers. You can adjust the number of coroutine tasks and the list of crawled URLs according to actual needs.
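Starting one coroutine per URL stops scaling once the URL list grows large, so the number of tasks running at once usually needs a cap. The sketch below shows one common way to do that, using a bounded Swoole\Coroutine\Channel as a semaphore. The function name crawlWithLimit() and the $fetch callable are hypothetical, and recording failed URLs as null is an assumption about how errors should be handled.

```php
<?php

use Swoole\Coroutine;
use Swoole\Coroutine\Channel;
use Swoole\Coroutine\WaitGroup;

use function Swoole\Coroutine\run;

/**
 * Runs $fetch for every URL with at most $limit coroutines in flight.
 * $fetch is a hypothetical callable taking a URL and returning its data.
 */
function crawlWithLimit(array $urls, int $limit, callable $fetch): array
{
    $results = [];

    run(function () use ($urls, $limit, $fetch, &$results) {
        $slots = new Channel($limit); // bounded channel used as a semaphore
        $wg = new WaitGroup();

        foreach ($urls as $url) {
            $slots->push(true); // suspends here while $limit tasks are running
            $wg->add();

            Coroutine::create(function () use ($url, $fetch, $slots, $wg, &$results) {
                try {
                    $results[$url] = $fetch($url);
                } catch (\Throwable $e) {
                    $results[$url] = null; // record the failure and keep crawling
                } finally {
                    $slots->pop(); // free the slot for the next URL
                    $wg->done();
                }
            });
        }

        $wg->wait(); // block until every task has finished
    });

    return $results;
}
```

For example, crawlWithLimit($urls, 50, 'crawler') would run the crawler() function from the example above with no more than 50 requests in flight. The value 50 is only a placeholder to tune against the target site and the machine.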

3. Other optimization methods for web crawlers

In addition to using the Swoole extension to improve concurrency, you can further optimize a web crawler in the following ways:

- Set reasonable request headers and request frequency: send browser-like request headers to avoid being blocked by the website, and keep the request frequency low enough to avoid putting excessive pressure on the target website.

- Use proxy IPs: routing requests through proxy IPs reduces the chance of being restricted or blocked by the target website.

- Set a reasonable level of concurrency: the crawler's concurrency should not be too high, otherwise it may overload the target website. Adjust it based on the capacity of the target website and of the machine running the crawler.
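The first two points can be sketched in plain PHP. This is an illustration under stated assumptions: the class HostRateLimiter and the function browserLikeHeaders() are hypothetical names, the header values are examples, and the per-host minimum interval is one simple way to control request frequency, not the only one.

```php
<?php

// Example browser-like request headers (values are illustrative).
function browserLikeHeaders(): array
{
    return [
        'User-Agent: Mozilla/5.0 (compatible; ExampleCrawler/1.0)',
        'Accept: text/html,application/xhtml+xml;q=0.9,*/*;q=0.8',
        'Accept-Language: en-US,en;q=0.8',
    ];
}

// Enforces a minimum interval between requests to the same host.
final class HostRateLimiter
{
    private float $minInterval;

    /** @var array<string, float> earliest time each host may be requested next */
    private array $nextAllowed = [];

    public function __construct(float $minIntervalSeconds)
    {
        $this->minInterval = $minIntervalSeconds;
    }

    // Returns how many seconds to wait before requesting $url at time $now,
    // and reserves the following slot for that host.
    public function reserve(string $url, float $now): float
    {
        $host = (string) parse_url($url, PHP_URL_HOST);
        $slot = max($now, $this->nextAllowed[$host] ?? 0.0);
        $this->nextAllowed[$host] = $slot + $this->minInterval;

        return $slot - $now;
    }
}

// With cURL, the headers and a proxy would be applied like this
// (the proxy address is a placeholder):
//   curl_setopt($ch, CURLOPT_HTTPHEADER, browserLikeHeaders());
//   curl_setopt($ch, CURLOPT_PROXY, 'http://127.0.0.1:8080');
```

Inside a coroutine, the value returned by reserve($url, microtime(true)) can be passed to Swoole\Coroutine::sleep() before sending the request, so that waiting for one host does not hold up requests to other hosts.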

Conclusion:

This article introduced how to use PHP and the Swoole extension to develop large-scale web crawlers. Swoole's coroutines let PHP handle many requests concurrently, which improves the crawler's throughput. We also covered some other optimization methods that help keep the crawler stable and reliable. I hope this article helps you understand and develop web crawlers.

The above is the detailed content of How to use PHP and swoole for large-scale web crawler development?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1371

1371

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

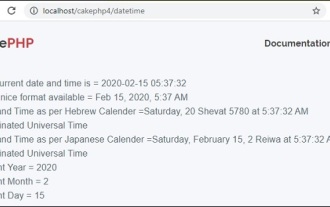

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

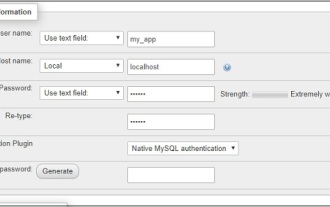

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

Working with database in CakePHP is very easy. We will understand the CRUD (Create, Read, Update, Delete) operations in this chapter.

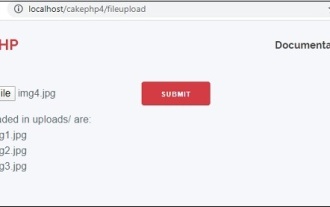

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

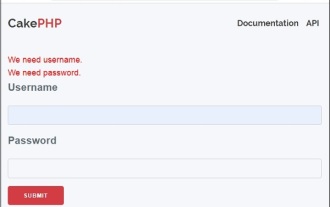

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

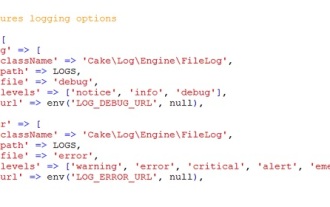

CakePHP Logging

Sep 10, 2024 pm 05:26 PM

CakePHP Logging

Sep 10, 2024 pm 05:26 PM

Logging in CakePHP is a very easy task. You just have to use one function. You can log errors, exceptions, user activities, action taken by users, for any background process like cronjob. Logging data in CakePHP is easy. The log() function is provide

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c