PHP and phpSpider: How to deal with performance issues in large-scale data crawling?

As more data becomes available online, more companies and individuals rely on data crawling to collect the information they need. In large-scale crawling tasks, performance is a key concern. This article shows how to deal with the performance problems of large-scale data crawling using PHP and phpSpider, and illustrates each technique with a code example.

1. Use multi-threading

When crawling large-scale data, multi-threading can significantly improve efficiency, because a crawler spends most of its time waiting on the network. With a PHP multi-threading extension (such as pthreads, which requires a thread-safe build of PHP), multiple crawling tasks can run simultaneously within one process. The following sample code uses multi-threading:

<?php

// Each thread crawls a single URL. The class is declared first
// so that it exists before any thread is created.
class MyThread extends Thread
{
    private $url;

    public function __construct($url)
    {
        $this->url = $url;
    }

    public function run()
    {
        // Write the crawling logic here,
        // using $this->url as the URL to crawl.
    }
}

$urls = array(
    'https://example.com/page1',
    'https://example.com/page2',
    'https://example.com/page3',
    // More URLs to crawl
);

$threads = array();

// Create and start one thread per URL
foreach ($urls as $url) {
    $thread = new MyThread($url);
    $threads[] = $thread;
    $thread->start();
}

// Wait for all threads to finish
foreach ($threads as $thread) {
    $thread->join();
}

?>

2. Optimize network access

Network access is one of the main performance bottlenecks when crawling data. To make it more efficient, use a capable HTTP client, such as the curl extension or the Guzzle library, to get features like parallel requests and connection pooling.
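As a rough sketch of the curl option, the function below runs several requests in parallel with PHP's `curl_multi_*` functions. It assumes the curl extension is installed. The function name `fetchAllWithCurlMulti` and the default timeout are illustrative choices, not part of phpSpider.

```php
<?php

/**
 * Fetch several URLs in parallel and return [url => body or null].
 * Hypothetical helper for illustration; requires the curl extension.
 */
function fetchAllWithCurlMulti(array $urls, int $timeoutSeconds = 10): array
{
    $multi = curl_multi_init();
    $handles = [];

    // Register one easy handle per distinct URL on the multi handle.
    foreach (array_unique($urls) as $url) {
        $handle = curl_init($url);
        curl_setopt($handle, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($handle, CURLOPT_TIMEOUT, $timeoutSeconds);
        curl_multi_add_handle($multi, $handle);
        $handles[$url] = $handle;
    }

    // Drive all transfers until none are still running.
    do {
        $status = curl_multi_exec($multi, $running);
        if ($running > 0) {
            curl_multi_select($multi, 1.0); // wait for network activity
        }
    } while ($running > 0 && $status === CURLM_OK);

    // Collect the bodies; non-2xx responses and failures become null.
    $results = [];
    foreach ($handles as $url => $handle) {
        $code = curl_getinfo($handle, CURLINFO_HTTP_CODE);
        $results[$url] = ($code >= 200 && $code < 300)
            ? curl_multi_getcontent($handle)
            : null;
        curl_multi_remove_handle($multi, $handle);
    }
    curl_multi_close($multi);

    return $results;
}
```

Calling `fetchAllWithCurlMulti($urls)` returns an array keyed by URL. Because this sketch starts every transfer at once, a long URL list would be better split into batches first, for example with `array_chunk($urls, 10)`.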

The following sample code uses the Guzzle library to execute multiple requests in parallel:

<?php

require 'vendor/autoload.php'; // Make sure the Guzzle library is installed

use GuzzleHttp\Client;
use GuzzleHttp\Pool;
use GuzzleHttp\Psr7\Request;

$urls = array(
    'https://example.com/page1',
    'https://example.com/page2',
    'https://example.com/page3',
    // More URLs to crawl
);

$client = new Client();

// Generator that lazily yields one request per URL
$requests = function ($urls) {
    foreach ($urls as $url) {
        yield new Request('GET', $url);
    }
};

$pool = new Pool($client, $requests($urls), [
    'concurrency' => 10, // Number of concurrent requests
    'fulfilled' => function ($response, $index) {
        // Handle a successful response here;
        // $response is the response object
    },
    'rejected' => function ($reason, $index) {
        // Handle a failed request here;
        // $reason is the reason for the failure
    },
]);

// Start the transfers and wait until the whole pool has finished
$promise = $pool->promise();
$promise->wait();

?>

3. Use caching appropriately

In large-scale data crawling, the same URL is often accessed multiple times. To reduce the number of network requests and improve performance, a caching system (such as Memcached or Redis) can be used to store crawled data. The following sample code uses Memcached as the cache:

<?php

$urls = array(
    'https://example.com/page1',
    'https://example.com/page2',
    'https://example.com/page3',
    // More URLs to crawl
);

$memcached = new Memcached();
$memcached->addServer('localhost', 11211);

foreach ($urls as $url) {
    $data = $memcached->get($url);

    if ($data === false) {
        // Cache miss: crawl the page and store the result in the cache.
        // file_get_contents() stands in here for the real crawling logic.
        $data = file_get_contents($url);
        if ($data !== false) {
            $memcached->set($url, $data);
        }
    }

    // Use $data for subsequent processing
}

?>

Used appropriately, a cache reduces repeated network requests and makes data crawling more efficient.
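One detail the Memcached example leaves open is the cache key: it uses the raw URL, but Memcached keys are limited to 250 bytes and may not contain whitespace. The sketch below shows one possible way to handle this by hashing the URL. The function names `cacheKeyForUrl` and `fetchWithCache`, the `crawl:` prefix, and the plain array standing in for the cache server are all assumptions made for illustration.

```php
<?php

/**
 * Build a short, Memcached-safe cache key for a URL.
 * The "crawl:" prefix is an arbitrary choice for this sketch.
 */
function cacheKeyForUrl(string $url): string
{
    // Drop the fragment: it is never sent to the server,
    // so URLs differing only in "#..." fetch the same page.
    $withoutFragment = explode('#', $url, 2)[0];

    return 'crawl:' . sha1($withoutFragment);
}

/**
 * Cache-aside lookup: return the cached body if present, otherwise
 * call $fetch, store its result, and return it.
 * $cache is a plain array standing in for Memcached or Redis.
 */
function fetchWithCache(string $url, array &$cache, callable $fetch): string
{
    $key = cacheKeyForUrl($url);

    if (array_key_exists($key, $cache)) {
        return $cache[$key];
    }

    $body = $fetch($url);
    $cache[$key] = $body;

    return $body;
}

// Usage with a fake fetcher that counts how often it is called.
$calls = 0;
$fakeFetch = function (string $url) use (&$calls): string {
    $calls++;
    return "body of $url";
};

$cache = [];
fetchWithCache('https://example.com/page1', $cache, $fakeFetch);
fetchWithCache('https://example.com/page1#top', $cache, $fakeFetch);

echo $calls, PHP_EOL; // prints 1: the second lookup was served from the cache
```

Passing the fetcher in as a callable keeps the caching logic independent of how pages are actually downloaded, which also makes it easy to test without network access.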

Summary:

This article introduced three ways to deal with the performance problems of large-scale data crawling: multi-threading, optimized network access, and caching. The code examples showed how to use a PHP multi-threading extension, the Guzzle library, and a Memcached cache to improve crawling efficiency. In practice, other methods may improve performance further, depending on your specific needs and environment.

The above is the detailed content of PHP and phpSpider: How to deal with performance issues in large-scale data crawling?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

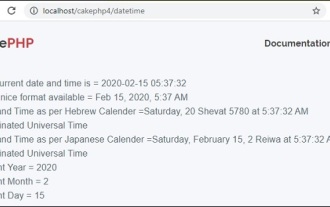

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

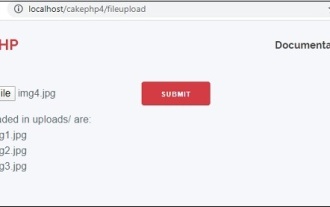

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

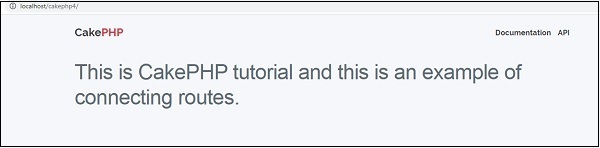

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

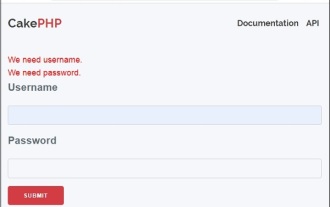

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c