Sharing tips on how to capture Zhihu Q&A data using PHP and phpSpider!

As China's largest knowledge-sharing platform, Zhihu hosts a massive amount of question-and-answer data, and obtaining and analyzing it is valuable for many developers and researchers. This article explains how to capture Zhihu Q&A data with PHP and phpSpider, and shares some tips and practical code examples.

1. Install phpSpider

phpSpider is a web crawler framework written in PHP. Its data capture and processing features make it well suited to collecting Zhihu Q&A data. Install it as follows:

- Install Composer: First, make sure Composer is installed. You can check by running:

```shell
composer --version
```

If Composer's version number is displayed, the installation was successful.

- Create a new project directory: Run the following command to create a new phpSpider project:

```shell
composer create-project vdb/php-spider my-project
```

This creates a new directory called my-project and installs phpSpider in it.

2. Write phpSpider code

- Create a new phpSpider task: Change into the my-project directory and run the following command:

```shell
./phpspider --create mytask
```

This creates a mytask directory inside my-project containing the files needed for scraping data.

- Edit the crawling rules: In the mytask directory, open rules.php, the PHP script that defines the crawling rules. In it you specify the URL of the Zhihu Q&A page to crawl and the data fields to extract.

Here is a simple example of crawling rules:

```php
<?php

return array(
    'name' => 'Zhihu Q&A',
    'tasknum' => 1,
    // Only URLs on these domains are crawled
    'domains' => array(
        'www.zhihu.com'
    ),
    // Replace XXXXXXXX with the ID of the question to crawl
    'start_urls' => array(
        'https://www.zhihu.com/question/XXXXXXXX'
    ),
    'scan_urls' => array(),
    // URLs matching these patterns are treated as list pages
    'list_url_regexes' => array(
        "https://www.zhihu.com/question/XXXXXXXX/page/([0-9]+)"
    ),
    // URLs matching these patterns are treated as content pages
    'content_url_regexes' => array(
        "https://www.zhihu.com/question/XXXXXXXX/answer/([0-9]+)"
    ),
    // Data fields to extract from each content page
    'fields' => array(
        array(
            'name' => "question",
            'selector_type' => 'xpath',
            'selector' => "//h1[@class='QuestionHeader-title']/text()"
        ),
        array(
            'name' => "answer",
            'selector_type' => 'xpath',
            'selector' => "//div[@class='RichContent-inner']/text()"
        )
    )
);
```

The example above defines a crawling task named Zhihu Q&A that collects all the answers to a specific question. Each entry in fields gives the name of a data field to extract, the selector type, and the selector itself.
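To make the roles of list_url_regexes and content_url_regexes more concrete, here is a plain-PHP sketch of how a crawler could sort discovered URLs into list pages and content pages with preg_match. It is an illustration under assumptions, not phpSpider's internal code: the function name classify_url is hypothetical, the full-URL anchoring is a choice made for this sketch, and the question ID 12345 is a made-up stand-in for the XXXXXXXX placeholder.

```php
<?php

/**
 * Hypothetical helper: decide whether a URL is a content page, a list page,
 * or neither, by testing it against the rule patterns.
 */
function classify_url(string $url, array $listRegexes, array $contentRegexes): string
{
    foreach ($contentRegexes as $pattern) {
        // '#' delimiters avoid escaping the '/' in URLs; ^...$ forces a full match
        if (preg_match('#^' . $pattern . '$#', $url) === 1) {
            return 'content';
        }
    }
    foreach ($listRegexes as $pattern) {
        if (preg_match('#^' . $pattern . '$#', $url) === 1) {
            return 'list';
        }
    }
    return 'ignore';
}

// Patterns mirroring the rules above, with a made-up question ID
$listRegexes = array("https://www.zhihu.com/question/12345/page/([0-9]+)");
$contentRegexes = array("https://www.zhihu.com/question/12345/answer/([0-9]+)");

echo classify_url('https://www.zhihu.com/question/12345/answer/678', $listRegexes, $contentRegexes), "\n"; // content
echo classify_url('https://www.zhihu.com/question/12345/page/2', $listRegexes, $contentRegexes), "\n";    // list
echo classify_url('https://www.zhihu.com/people/someone', $listRegexes, $contentRegexes), "\n";          // ignore
```

Note that the dots in these patterns are unescaped, so they match any character; writing www\.zhihu\.com makes a rule stricter.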

- Write a custom callback function: In the mytask directory, open callback.php, the PHP script that processes and saves the captured data.

Here is a simple custom callback function:

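```php
<?php

function handle_content($url, $content)
{
    $data = array();

    // Collect libxml warnings from malformed HTML instead of hiding them with @
    libxml_use_internal_errors(true);
    $dom = new DOMDocument();
    // The encoding hint makes libxml parse the page as UTF-8 so Chinese text survives
    $dom->loadHTML('<?xml encoding="UTF-8">' . $content);
    libxml_clear_errors();

    $xpath = new DOMXPath($dom);

    // Extract the question title with an XPath selector
    $question = $xpath->query("//h1[@class='QuestionHeader-title']");
    $data['question'] = $question->length > 0 ? trim($question->item(0)->nodeValue) : null;

    // Extract the answer contents with an XPath selector
    $data['answer'] = array();
    $answers = $xpath->query("//div[@class='RichContent-inner']");
    foreach ($answers as $answer) {
        $data['answer'][] = trim($answer->nodeValue);
    }

    // Save the data to a file or database
    // ...

    return $data;
}
```

The example above defines a callback function named handle_content, which is called after a page has been fetched. It uses XPath selectors to extract the question title and the answer contents and collects them in the $data array.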
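A selector such as //h1[@class='QuestionHeader-title'] matches only when the class attribute is exactly that string; if the element carries any additional class, the query silently returns nothing. A common, more tolerant XPath 1.0 idiom tests for the class as a whitespace-separated token instead. The sketch below demonstrates the difference with DOMXPath on a small made-up HTML snippet; the helper name class_selector is hypothetical, and the markup is not taken from a real Zhihu page.

```php
<?php

/**
 * Hypothetical helper: build an XPath expression matching <$tag> elements
 * whose class attribute contains $class as a whole token.
 */
function class_selector(string $tag, string $class): string
{
    return "//{$tag}[contains(concat(' ', normalize-space(@class), ' '), ' {$class} ')]";
}

// Made-up markup: the title carries an extra class besides QuestionHeader-title
$html = '<html><body>'
    . '<h1 class="QuestionHeader-title Extra">What is PHP?</h1>'
    . '<div class="RichContent-inner">First answer</div>'
    . '<div class="RichContent-inner is-collapsed">Second answer</div>'
    . '</body></html>';

$dom = new DOMDocument();
$dom->loadHTML($html);
$xpath = new DOMXPath($dom);

// Exact attribute comparison misses the title because of the extra class
$exact = $xpath->query("//h1[@class='QuestionHeader-title']")->length;
// Token matching finds it
$token = $xpath->query(class_selector('h1', 'QuestionHeader-title'))->length;

$answers = array();
foreach ($xpath->query(class_selector('div', 'RichContent-inner')) as $node) {
    $answers[] = trim($node->textContent);
}

echo "exact: $exact, token: $token\n"; // exact: 0, token: 1
print_r($answers);
```

The same expression shape can be used as the selector string in the rules file, since it is ordinary XPath 1.0.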

3. Run the phpSpider task

- Start the phpSpider task: From the my-project directory, run the following command:

```shell
./phpspider --daemon mytask
```

This starts a phpSpider process in the background that begins crawling Zhihu Q&A data.

- View the crawling results: phpSpider saves the crawled data under the data directory, in a subdirectory named after the task, so each crawling task has its own output.

To follow the results as they are written, run:

```shell
tail -f data/mytask/data.log
```

This displays the crawling log and results in real time.
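If your callback saves records itself (the "// ..." placeholder in handle_content), one convenient option is JSON Lines: one JSON object per line, which stays readable when followed with tail -f. The sketch below assumes that format; it is not phpSpider's own output format, and the function names append_record and read_records, the temporary file, and the sample data are all hypothetical.

```php
<?php

/**
 * Hypothetical helper: append one record as a single JSON line.
 * JSON_UNESCAPED_UNICODE keeps Chinese text readable in the file.
 */
function append_record(string $file, array $record): void
{
    $line = json_encode($record, JSON_UNESCAPED_UNICODE | JSON_THROW_ON_ERROR);
    // LOCK_EX avoids interleaved lines if several processes append at once
    file_put_contents($file, $line . "\n", FILE_APPEND | LOCK_EX);
}

/**
 * Hypothetical helper: read every record back from a JSON Lines file.
 */
function read_records(string $file): array
{
    $records = array();
    foreach (file($file, FILE_IGNORE_NEW_LINES | FILE_SKIP_EMPTY_LINES) as $line) {
        $records[] = json_decode($line, true, 512, JSON_THROW_ON_ERROR);
    }
    return $records;
}

// Demo with a temporary file and made-up data
$file = tempnam(sys_get_temp_dir(), 'zhihu');
append_record($file, array('question' => '知乎 example question', 'answer' => array('answer one', 'answer two')));
append_record($file, array('question' => 'Another question', 'answer' => array()));

$records = read_records($file);
echo count($records), "\n"; // 2
unlink($file);
```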

4. Summary

This article showed how to capture Zhihu Q&A data with PHP and phpSpider: install phpSpider, write the crawling rules and a custom callback function, and run the phpSpider task to crawl and process the data.

phpSpider also supports more advanced features, such as concurrent crawling, proxy settings, and User-Agent (UA) settings, which you can configure as your needs require. I hope this article helps developers interested in capturing Zhihu Q&A data!
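To illustrate what UA and proxy settings amount to at the HTTP level, the sketch below uses plain PHP stream contexts rather than phpSpider's configuration, whose option names are not covered in this article. The User-Agent string and proxy address are placeholders, and the function name make_http_context is hypothetical.

```php
<?php

/**
 * Hypothetical helper: build a stream context that sends a custom
 * User-Agent and optionally routes requests through an HTTP proxy.
 */
function make_http_context(string $userAgent, ?string $proxy = null, int $timeout = 10)
{
    $http = array(
        'method'  => 'GET',
        'header'  => "User-Agent: {$userAgent}\r\n",
        'timeout' => $timeout,
    );
    if ($proxy !== null) {
        // Proxy address such as 'tcp://127.0.0.1:8080' (placeholder)
        $http['proxy'] = $proxy;
        // Some proxies require the full URI in the request line
        $http['request_fulluri'] = true;
    }
    return stream_context_create(array('http' => $http));
}

$context = make_http_context('Mozilla/5.0 (compatible; MyCrawler/0.1)', 'tcp://127.0.0.1:8080');

// The context would then be passed to a fetch, for example:
// $html = file_get_contents('https://www.zhihu.com/question/XXXXXXXX', false, $context);

$options = stream_context_get_options($context);
print_r($options['http']);
```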

The above is the full content of "Sharing tips on how to capture Zhihu Q&A data using PHP and phpSpider!". For more information, please see other related articles on the PHP Chinese website.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

If you are an experienced PHP developer, you might have the feeling that you’ve been there and done that already.You have developed a significant number of applications, debugged millions of lines of code, and tweaked a bunch of scripts to achieve op

Explain JSON Web Tokens (JWT) and their use case in PHP APIs.

Apr 05, 2025 am 12:04 AM

Explain JSON Web Tokens (JWT) and their use case in PHP APIs.

Apr 05, 2025 am 12:04 AM

JWT is an open standard based on JSON, used to securely transmit information between parties, mainly for identity authentication and information exchange. 1. JWT consists of three parts: Header, Payload and Signature. 2. The working principle of JWT includes three steps: generating JWT, verifying JWT and parsing Payload. 3. When using JWT for authentication in PHP, JWT can be generated and verified, and user role and permission information can be included in advanced usage. 4. Common errors include signature verification failure, token expiration, and payload oversized. Debugging skills include using debugging tools and logging. 5. Performance optimization and best practices include using appropriate signature algorithms, setting validity periods reasonably,

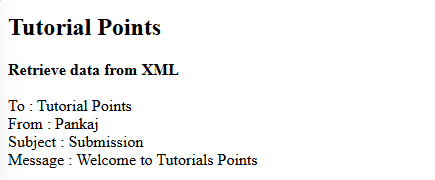

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

This tutorial demonstrates how to efficiently process XML documents using PHP. XML (eXtensible Markup Language) is a versatile text-based markup language designed for both human readability and machine parsing. It's commonly used for data storage an

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

A string is a sequence of characters, including letters, numbers, and symbols. This tutorial will learn how to calculate the number of vowels in a given string in PHP using different methods. The vowels in English are a, e, i, o, u, and they can be uppercase or lowercase. What is a vowel? Vowels are alphabetic characters that represent a specific pronunciation. There are five vowels in English, including uppercase and lowercase: a, e, i, o, u Example 1 Input: String = "Tutorialspoint" Output: 6 explain The vowels in the string "Tutorialspoint" are u, o, i, a, o, i. There are 6 yuan in total

Explain late static binding in PHP (static::).

Apr 03, 2025 am 12:04 AM

Explain late static binding in PHP (static::).

Apr 03, 2025 am 12:04 AM

Static binding (static::) implements late static binding (LSB) in PHP, allowing calling classes to be referenced in static contexts rather than defining classes. 1) The parsing process is performed at runtime, 2) Look up the call class in the inheritance relationship, 3) It may bring performance overhead.

What are PHP magic methods (__construct, __destruct, __call, __get, __set, etc.) and provide use cases?

Apr 03, 2025 am 12:03 AM

What are PHP magic methods (__construct, __destruct, __call, __get, __set, etc.) and provide use cases?

Apr 03, 2025 am 12:03 AM

What are the magic methods of PHP? PHP's magic methods include: 1.\_\_construct, used to initialize objects; 2.\_\_destruct, used to clean up resources; 3.\_\_call, handle non-existent method calls; 4.\_\_get, implement dynamic attribute access; 5.\_\_set, implement dynamic attribute settings. These methods are automatically called in certain situations, improving code flexibility and efficiency.