phpSpider practical tips: How to deal with the problem of crawling asynchronously loaded content?

Some websites load part of their content asynchronously. The server first returns a page skeleton, and JavaScript then fetches the remaining data (typically through AJAX requests) and inserts it into the page. This causes problems for crawlers: a traditional crawler downloads only the HTML returned by the server and does not execute JavaScript, so it never sees the asynchronously loaded content. Special techniques are needed instead. This article introduces several commonly used methods for handling asynchronously loaded content and provides corresponding PHP code examples.
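To see the problem concretely, here is a minimal sketch assuming a hypothetical page whose server-side HTML contains only an empty container with the id target-element, which a script fills in after the page loads. Parsing that raw HTML with PHP's DOM extension, as a traditional crawler would, finds the container but none of its content. The helper name textOfElementById is illustrative, not part of any library.

```php
<?php
// Sketch: why parsing the raw HTML misses asynchronously loaded content.
// The HTML below is a hypothetical example of what the server sends before any JavaScript runs.

/**
 * Return the trimmed text of the element with the given id,
 * or null if no such element exists in the HTML.
 */
function textOfElementById(string $html, string $id): ?string
{
    $dom = new DOMDocument();
    // Collect libxml warnings (e.g. about HTML5 tags) instead of printing them.
    libxml_use_internal_errors(true);
    $dom->loadHTML($html);
    libxml_clear_errors();

    $xpath = new DOMXPath($dom);
    $nodes = $xpath->query(sprintf('//*[@id="%s"]', $id));
    if ($nodes === false || $nodes->length === 0) {
        return null; // the element is not in the HTML at all
    }
    return trim($nodes->item(0)->textContent);
}

$rawHtml = <<<HTML
<html><body>
  <div id="target-element"></div>
  <script>
    fetch('/ajax-endpoint').then(r => r.text())
      .then(t => { document.getElementById('target-element').innerHTML = t; });
  </script>
</body></html>
HTML;

// The container exists but is empty: its content only appears after a browser runs the script.
var_dump(textOfElementById($rawHtml, 'target-element')); // string(0) ""
```

The methods below get around this either by running the JavaScript (method 1) or by requesting the data the script would have fetched (method 2).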

1. Use dynamic rendering

Dynamic rendering means loading the page in a real browser, letting the browser execute the page's JavaScript, and then reading the fully rendered content. This method can retrieve asynchronously loaded content, but it is comparatively heavy: it needs a running browser and a Selenium server. In PHP, you can control the browser through Selenium with the php-webdriver client library (the Facebook\WebDriver namespace used below). The following example drives Firefox through a Selenium server:

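```php
<?php
require 'vendor/autoload.php'; // Composer autoloader (php-webdriver/webdriver installed via Composer)

use Facebook\WebDriver\Remote\DesiredCapabilities;
use Facebook\WebDriver\Remote\RemoteWebDriver;

// Set the Selenium server address and port
$host = 'http://localhost:4444/wd/hub';

// Choose the browser and create the driver
$capabilities = DesiredCapabilities::firefox();
$driver = RemoteWebDriver::create($host, $capabilities);

try {
    // Open the target page
    $driver->get('http://example.com');

    // JavaScript that reads the asynchronously loaded content ("" if the element is missing)
    $script = 'var el = document.getElementById("target-element"); return el ? el.innerHTML : "";';

    // Poll for up to 10 seconds until the element has content, since AJAX may finish after get() returns
    $driver->wait(10)->until(function (RemoteWebDriver $d) use ($script) {
        return $d->executeScript($script) !== '';
    });
    $element = $driver->executeScript($script);

    // Print the retrieved content
    echo $element;
} finally {
    // Close the browser session even if the wait times out
    $driver->quit();
}
```

2. Analyze network requests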

Another method is to analyze the page's network requests. Asynchronously loaded content is fetched from a separate interface, so open the browser's developer tools (the Network panel) or a packet capture tool, reload the page, and find the request that returns the missing data. You can then send the same HTTP request with PHP's cURL extension or another HTTP library and parse the returned data, without running a browser at all. The following example uses cURL:

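```php
<?php
// Create a cURL handle
$ch = curl_init();

// Set cURL options: the endpoint URL, and return the body as a string instead of printing it
curl_setopt($ch, CURLOPT_URL, 'http://example.com/ajax-endpoint');
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

// Send the request and get the response body (false on failure)
$response = curl_exec($ch);
if ($response === false) {
    $error = curl_error($ch);
    curl_close($ch);
    exit('Request failed: ' . $error . PHP_EOL);
}

// Close the cURL handle
curl_close($ch);

// Print the retrieved content
echo $response;
```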
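Endpoints found this way often return JSON rather than HTML, in which case the body can be decoded directly instead of scraped. A minimal sketch, assuming a hypothetical endpoint that takes page and size query parameters and returns {"items":[{"title":...}]}; the parameter names, field names, and helper functions are illustrative, so substitute whatever the developer tools actually show. JSON_THROW_ON_ERROR requires PHP 7.3 or later.

```php
<?php
// Sketch: rebuild the request URL seen in the developer tools and decode the JSON reply.
// Assumption: hypothetical endpoint with "page"/"size" parameters returning {"items":[{"title":...}]}.

/**
 * Build the URL for one page of the (hypothetical) paginated endpoint.
 */
function buildEndpointUrl(string $base, int $page, int $pageSize = 20): string
{
    return $base . '?' . http_build_query(['page' => $page, 'size' => $pageSize]);
}

/**
 * Decode the JSON body and return the "title" of every item.
 *
 * @throws JsonException if the body is not valid JSON (PHP 7.3+)
 */
function extractTitles(string $json): array
{
    $data = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
    $titles = [];
    foreach ($data['items'] ?? [] as $item) {
        if (isset($item['title'])) {
            $titles[] = $item['title'];
        }
    }
    return $titles;
}

// Usage with a canned response instead of a live request:
$sample = '{"items":[{"title":"First post"},{"title":"Second post"}]}';
echo implode(', ', extractTitles($sample)), PHP_EOL; // First post, Second post
echo buildEndpointUrl('http://example.com/ajax-endpoint', 2), PHP_EOL; // http://example.com/ajax-endpoint?page=2&size=20
```

In a real crawler, the string returned by curl_exec() would be passed to extractTitles(), and buildEndpointUrl() would be called in a loop over page numbers.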

3. Use third-party libraries

Some third-party libraries can also help with asynchronously loaded content. For example, PhantomJS is a WebKit-based headless browser that can render dynamic pages in the same way as method 1 (its development has been suspended, so headless Chrome or Firefox driven through Selenium are the usual replacements). Guzzle is a powerful PHP HTTP client library that makes it easy to send HTTP requests and process responses. Guzzle does not execute JavaScript, so it is used to call the asynchronous endpoint directly, as in method 2. The following example uses Guzzle:

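```php
<?php
require 'vendor/autoload.php'; // Composer autoloader (guzzlehttp/guzzle installed via Composer)

use GuzzleHttp\Client;

// Create a Guzzle client with a 10-second request timeout
$client = new Client(['timeout' => 10]);

// Send a GET request and read the response body as a string
$response = $client->get('http://example.com/ajax-endpoint');
$body = (string) $response->getBody();

// Print the retrieved content
echo $body;
```

Summary: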

To crawl asynchronously loaded content, we can render the page in a real browser, analyze the page's network requests and call the data endpoints directly, or use third-party libraries. Choosing the method that fits the situation at hand will help you obtain asynchronously loaded content reliably. I hope this article is helpful for your crawler development.

The above is the detailed content of phpSpider practical tips: How to deal with the problem of crawling asynchronously loaded content?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Win11 Tips Sharing: Skip Microsoft Account Login with One Trick

Mar 27, 2024 pm 02:57 PM

Win11 Tips Sharing: Skip Microsoft Account Login with One Trick

Mar 27, 2024 pm 02:57 PM

Win11 Tips Sharing: One trick to skip Microsoft account login Windows 11 is the latest operating system launched by Microsoft, with a new design style and many practical functions. However, for some users, having to log in to their Microsoft account every time they boot up the system can be a bit annoying. If you are one of them, you might as well try the following tips, which will allow you to skip logging in with a Microsoft account and enter the desktop interface directly. First, we need to create a local account in the system to log in instead of a Microsoft account. The advantage of doing this is

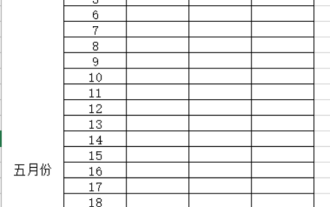

What are the tips for novices to create forms?

Mar 21, 2024 am 09:11 AM

What are the tips for novices to create forms?

Mar 21, 2024 am 09:11 AM

We often create and edit tables in excel, but as a novice who has just come into contact with the software, how to use excel to create tables is not as easy as it is for us. Below, we will conduct some drills on some steps of table creation that novices, that is, beginners, need to master. We hope it will be helpful to those in need. A sample form for beginners is shown below: Let’s see how to complete it! 1. There are two methods to create a new excel document. You can right-click the mouse on a blank location on the [Desktop] - [New] - [xls] file. You can also [Start]-[All Programs]-[Microsoft Office]-[Microsoft Excel 20**] 2. Double-click our new ex

A must-have for veterans: Tips and precautions for * and & in C language

Apr 04, 2024 am 08:21 AM

A must-have for veterans: Tips and precautions for * and & in C language

Apr 04, 2024 am 08:21 AM

In C language, it represents a pointer, which stores the address of other variables; & represents the address operator, which returns the memory address of a variable. Tips for using pointers include defining pointers, dereferencing pointers, and ensuring that pointers point to valid addresses; tips for using address operators & include obtaining variable addresses, and returning the address of the first element of the array when obtaining the address of an array element. A practical example demonstrating the use of pointer and address operators to reverse a string.

VSCode Getting Started Guide: A must-read for beginners to quickly master usage skills!

Mar 26, 2024 am 08:21 AM

VSCode Getting Started Guide: A must-read for beginners to quickly master usage skills!

Mar 26, 2024 am 08:21 AM

VSCode (Visual Studio Code) is an open source code editor developed by Microsoft. It has powerful functions and rich plug-in support, making it one of the preferred tools for developers. This article will provide an introductory guide for beginners to help them quickly master the skills of using VSCode. In this article, we will introduce how to install VSCode, basic editing operations, shortcut keys, plug-in installation, etc., and provide readers with specific code examples. 1. Install VSCode first, we need

PHP programming skills: How to jump to the web page within 3 seconds

Mar 24, 2024 am 09:18 AM

PHP programming skills: How to jump to the web page within 3 seconds

Mar 24, 2024 am 09:18 AM

Title: PHP Programming Tips: How to Jump to a Web Page within 3 Seconds In web development, we often encounter situations where we need to automatically jump to another page within a certain period of time. This article will introduce how to use PHP to implement programming techniques to jump to a page within 3 seconds, and provide specific code examples. First of all, the basic principle of page jump is realized through the Location field in the HTTP response header. By setting this field, the browser can automatically jump to the specified page. Below is a simple example demonstrating how to use P

Win11 Tricks Revealed: How to Bypass Microsoft Account Login

Mar 27, 2024 pm 07:57 PM

Win11 Tricks Revealed: How to Bypass Microsoft Account Login

Mar 27, 2024 pm 07:57 PM

Win11 tricks revealed: How to bypass Microsoft account login Recently, Microsoft launched a new operating system Windows11, which has attracted widespread attention. Compared with previous versions, Windows 11 has made many new adjustments in terms of interface design and functional improvements, but it has also caused some controversy. The most eye-catching point is that it forces users to log in to the system with a Microsoft account. For some users, they may be more accustomed to logging in with a local account and are unwilling to bind their personal information to a Microsoft account.

How to read html

Apr 05, 2024 am 08:36 AM

How to read html

Apr 05, 2024 am 08:36 AM

Although HTML itself cannot read files, file reading can be achieved through the following methods: using JavaScript (XMLHttpRequest, fetch()); using server-side languages (PHP, Node.js); using third-party libraries (jQuery.get() , axios, fs-extra).

Detailed explanation of the usage skills of √ symbol in word box

Mar 25, 2024 pm 10:30 PM

Detailed explanation of the usage skills of √ symbol in word box

Mar 25, 2024 pm 10:30 PM

Detailed explanation of the tips for using the √ symbol in the Word box. In daily work and study, we often need to use Word for document editing and typesetting. Among them, the √ symbol is a common symbol, which usually means "right". Using the √ symbol in the Word box can help us present information more clearly and improve the professionalism and beauty of the document. Next, we will introduce in detail the skills of using the √ symbol in the Word box, hoping to help everyone. 1. Insert the √ symbol In Word, there are many ways to insert the √ symbol. one