Step by step: using a Python web crawler to get a Bilibili video's selection list (source code attached)

1. Background introduction

When Bilibili comes up, the first thing that comes to mind is video. I believe many readers, like me, want to use web crawler techniques to get videos from Bilibili (often called "Station B"), but those videos are actually not that easy to get. Downloading the videos themselves was covered in an earlier article, which used the you-get library; interested readers can look it up: "You-Get is so powerful!"

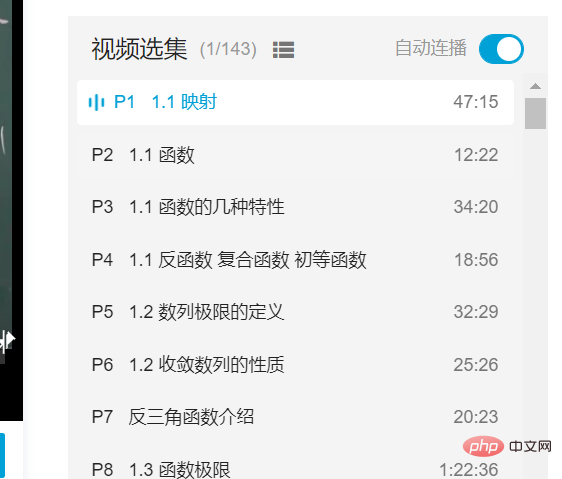

Back to the topic. Readers who often study on Bilibili will have come across uploaders who serialize dozens or even hundreds of videos. For continuous tutorials in particular, such as programming languages, courses, and tool usage, the page shows a video selection list, as shown in the figure.

Of course, we can read the fields of these selections with the naked eye, but doing it in a program may not be as simple as it seems. So the goal of this article is to collect a video's selection list with a Python web crawler based on the selenium library.

2. Specific implementation

The library used in this article is selenium. It simulates user actions such as logging in by driving a real browser. Although it feels slow, it is still widely used in web crawling and has long been a common way to simulate logins and fetch data. Below is the complete code for collecting a video's selection list. You are welcome to try it yourself.

    # coding: utf-8
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.wait import WebDriverWait


    class Item:
        # One entry of the selection list: page number, title, duration
        page_num = ""
        part = ""
        duration = ""

        def __init__(self, page_num, part, duration):
            self.page_num = page_num
            self.part = part
            self.duration = duration

        def get_second(self):
            # Convert a "mm:ss" or "hh:mm:ss" string to seconds
            str_list = self.duration.split(":")
            total = 0
            for i, item in enumerate(str_list):
                total += pow(60, len(str_list) - i - 1) * int(item)
            return total


    def get_bilili_page_items(url):
        options = webdriver.ChromeOptions()
        options.add_argument('--headless')  # run without a browser window
        options.add_experimental_option('excludeSwitches', ['enable-automation'])
        # options.add_experimental_option("prefs", {"profile.managed_default_content_settings.images": 2,
        #                                           "profile.managed_default_content_settings.flash": 0})
        browser = webdriver.Chrome(options=options)
        # browser = webdriver.PhantomJS()
        print("Opening the page...")
        browser.get(url)
        print("Waiting for the page to respond...")
        # Wait until the selection list has been rendered
        wait = WebDriverWait(browser, 10)
        wait.until(EC.visibility_of_element_located((By.XPATH, '//*[@class="list-box"]/li/a')))
        print("Fetching page data...")
        # find_elements_by_xpath was removed in Selenium 4.3; use find_elements(By.XPATH, ...)
        elements = browser.find_elements(By.XPATH, '//*[@class="list-box"]/li')
        itemList = []
        second_sum = 0
        # Loop over the entries and read the text of each one
        for t in elements:
            element = t.find_element(By.TAG_NAME, 'a')
            arr = element.text.split('\n')
            print(" ".join(arr))
            item = Item(arr[0], arr[1], arr[2])
            second_sum += item.get_second()
            itemList.append(item)
        print("Total count:", len(itemList))
        print("Total duration (minutes):", round(second_sum / 60, 2))
        print("Total duration (hours):", round(second_sum / 3600.0, 2))
        browser.quit()  # close the browser and end the driver process
        return itemList


    get_bilili_page_items("https://www.bilibili.com/video/BV1Eb411u7Fw")

The selector used here is XPath, and the example video is the selection list of the complete Tongji-edition "Advanced Mathematics" lecture series (taught by Song Hao) on Bilibili. To grab the selection list of another video, just change the URL on the last line of the code above.
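The duration arithmetic can be checked without launching a browser. The following is a minimal sketch, not part of the original script: `duration_to_seconds` and `parse_entry` are hypothetical helper names, and the sample text is made up, assuming each entry's text has three lines (page number, title, duration) as the code above expects. It accumulates in base 60, which gives the same result as the `pow(60, ...)` sum in `get_second`.

```python
def duration_to_seconds(duration):
    """Convert "ss", "mm:ss" or "hh:mm:ss" to a number of seconds."""
    total = 0
    for field in duration.split(":"):
        # Shift the running total up one base-60 place, then add the next field
        total = total * 60 + int(field)
    return total


def parse_entry(text):
    """Split one entry's text into (page_num, part, duration)."""
    page_num, part, duration = text.split("\n")
    return page_num, part, duration


# Made-up sample text for illustration, not real data from the site
sample = "P1\nIntroduction\n1:02:03"
page_num, part, duration = parse_entry(sample)
print(page_num, part, duration_to_seconds(duration))  # P1 Introduction 3723
```

Here `1:02:03` becomes 1 × 3600 + 2 × 60 + 3 = 3723 seconds. Note that `parse_entry` raises a `ValueError` if an entry does not have exactly three lines, which is stricter than indexing `arr[0]`, `arr[1]`, `arr[2]`.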

3. Common problems

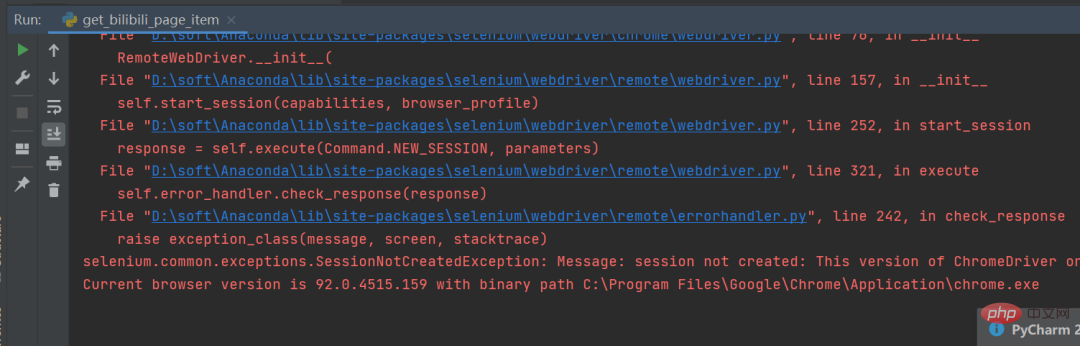

While running the code, you will probably run into this problem often, as shown in the figure below.

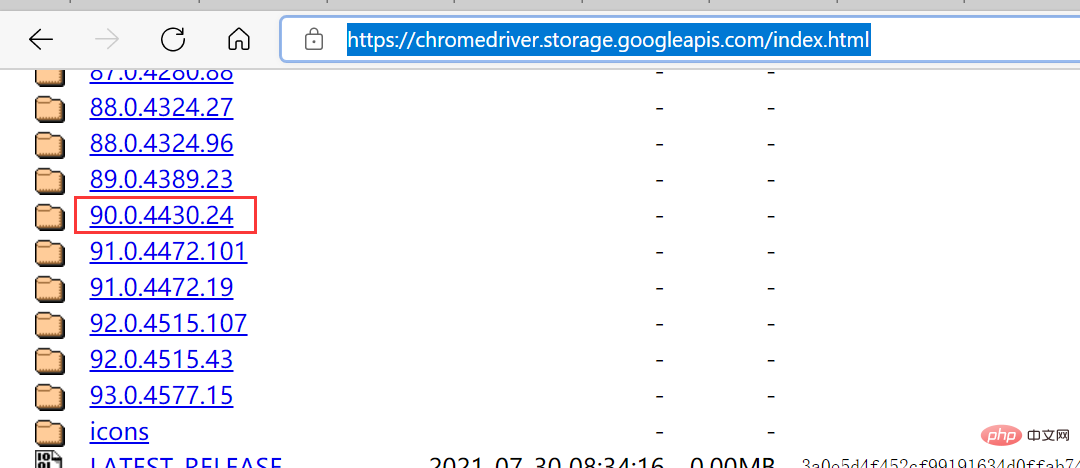

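This is caused by a Chrome driver (ChromeDriver) version problem. Just follow the prompt and download the matching driver version. Driver download link: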

https://chromedriver.storage.googleapis.com/index.html
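Before downloading a driver, it can help to compare the browser and driver versions. The sketch below assumes the mismatch is at the major-version level (the first number of the dotted version). `major_version` and `versions_match` are hypothetical helpers, and the version strings are invented for illustration.

```python
def major_version(version):
    """Return the leading (major) component of a dotted version string."""
    return int(version.strip().split(".")[0])


def versions_match(chrome_version, driver_version):
    """True when the browser and the driver share the same major version."""
    return major_version(chrome_version) == major_version(driver_version)


# Hypothetical version strings, for illustration only
print(versions_match("114.0.5735.199", "114.0.5735.90"))  # True
print(versions_match("114.0.5735.199", "99.0.4844.51"))   # False
```

If the two major versions differ, pick the driver whose major version matches the installed browser.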
