30 pictures detailing the operating system summary!

##1. Overview

Basic features

1. Concurrency

Concurrency refers to the ability to run multiple programs at the same time within a macroscopic period, while parallelism refers to the ability to run multiple instructions at the same time. Parallelism requires hardware support, such as multiple pipelines, multi-core processors or distributed computing systems. The operating system enables programs to run concurrently by introducing processes and threads.2. Sharing

Sharing means that the resources in the system can be used by multiple concurrent processes. There are two sharing methods: mutually exclusive sharing and simultaneous sharing. Mutually exclusive shared resources are called critical resources, such as printers. Only one process is allowed to access them at the same time. A synchronization mechanism is required to achieve mutually exclusive access.3. Virtual

Virtual technology converts a physical entity into multiple logical entities. There are two main virtual technologies: time (time) division multiplexing technology and space (space) division multiplexing technology.Multiple processes can be executed concurrently on the same processor using time-division multiplexing technology, allowing each process to take turns occupying the processor, executing only a small time slice each time and switching quickly.

Virtual memory uses space division multiplexing technology, which abstracts physical memory into address space, and each process has its own address space. Pages in the address space are mapped to physical memory. Not all pages in the address space need to be in physical memory. When a page that is not in physical memory is used, the page replacement algorithm is executed to replace the page into memory.

4. Asynchronous

Asynchronous means that the process is not executed all at once, but stops and goes, advancing at an unknown speed.

Basic functions

1. Process management

Process control, process synchronization, process communication , deadlock handling, processor scheduling, etc.

2. Memory management

Memory allocation, address mapping, memory protection and sharing, virtual memory, etc.

3. File management

Management of file storage space, directory management, file read and write management and protection, etc.

4. Device management

Complete the user's I/O request, facilitate the user to use various devices, and improve the utilization of the device.

Mainly includes buffer management, device allocation, device processing, virtual devices, etc.

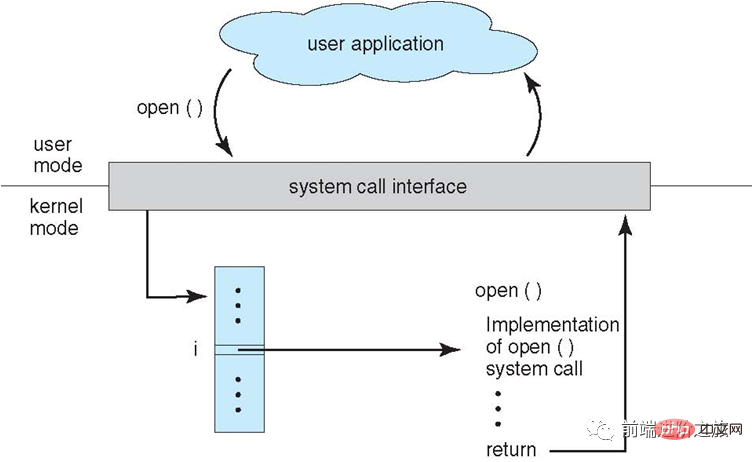

System call

If a process needs to use the functions of the kernel state in user mode, it will make a system call and fall into the kernel, and the operating system will complete it on its behalf.

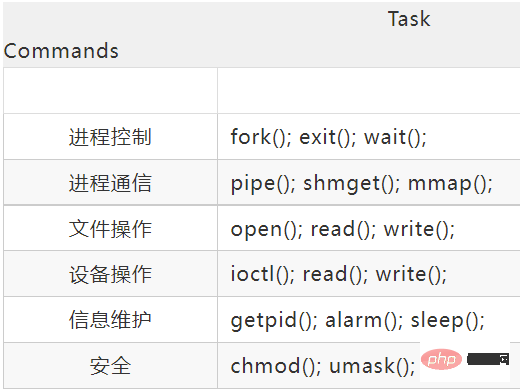

Linux system calls mainly include the following:

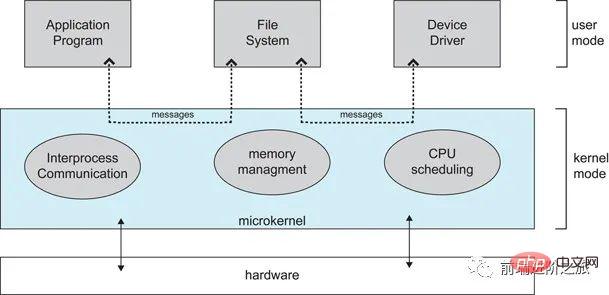

Big kernel and microkernel

1. Big kernel

The big kernel puts the operating system functions into the kernel as a tightly integrated whole .

Since each module shares information, it has high performance.

2. Microkernel

As the operating system continues to become more complex, some operating system functions are moved out of the kernel to reduce the complexity of the kernel. The removed part is divided into several services based on the layering principle, which are independent of each other.

Under the microkernel structure, the operating system is divided into small, well-defined modules. Only the microkernel module runs in the kernel mode, and the other modules run in the user mode.

Because you need to frequently switch between user mode and core mode, there will be a certain performance loss.

##Interrupt classification

1. External interrupt

Caused by events other than CPU executing instructions, such as I/O completion interrupt, indicating that device input/output processing has been completed and the processor can send the next input/output request. In addition, there are clock interrupts, console interrupts, etc.

#2. Exceptions

are caused by internal events when the CPU executes instructions, such as illegal opcodes, address out-of-bounds, arithmetic overflow, etc. .3. Trapped

Use system calls in user programs.2. Process Management

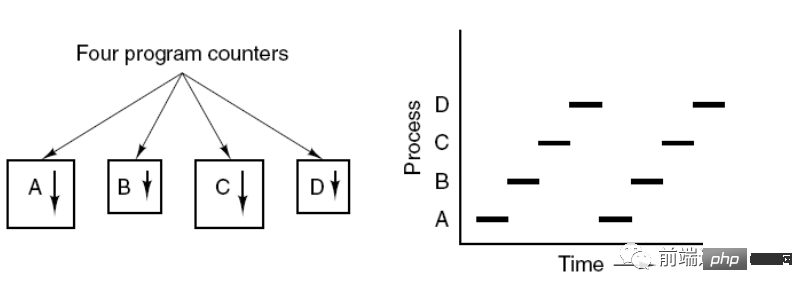

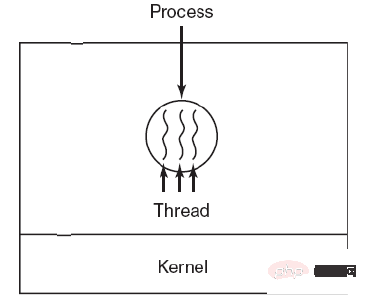

Processes and Threads

1. Process

Process is the basic unit of resource allocation.Process Control Block (PCB) describes the basic information and running status of the process. The so-called process creation and cancellation refer to operations on the PCB.

The figure below shows that 4 programs create 4 processes, and these 4 processes can be executed concurrently.

2. Thread

Thread is the basic unit of independent scheduling.

There can be multiple threads in a process, and they share process resources.

QQ and the browser are two processes. There are many threads in the browser process, such as HTTP request threads, event response threads, rendering threads, etc. The concurrent execution of threads enables clicking a new link in the browser Thus, when an HTTP request is initiated, the browser can also respond to other events from the user.

3. Difference

Ⅰ Owning resources

The process is allocated resources The basic unit, but threads do not own resources. Threads can access resources belonging to processes.

Ⅱ Scheduling

Thread is the basic unit of independent scheduling. In the same process, thread switching will not cause process switching. Switching from a thread in one process to a thread in another process , it will cause process switching.

Ⅲ System overhead

Because when creating or canceling a process, the system must allocate or reclaim resources for it, such as memory space, I/O devices, etc., the overhead paid is much greater than the overhead when creating or canceling a thread. Similarly, when switching processes, it involves saving the CPU environment of the currently executing process and setting the CPU environment of the new scheduled process. However, when switching threads, only a small number of register contents need to be saved and set, and the overhead is very small.

Ⅳ Communication

Threads can communicate by directly reading and writing data in the same process, but process communication requires the help of IPC.

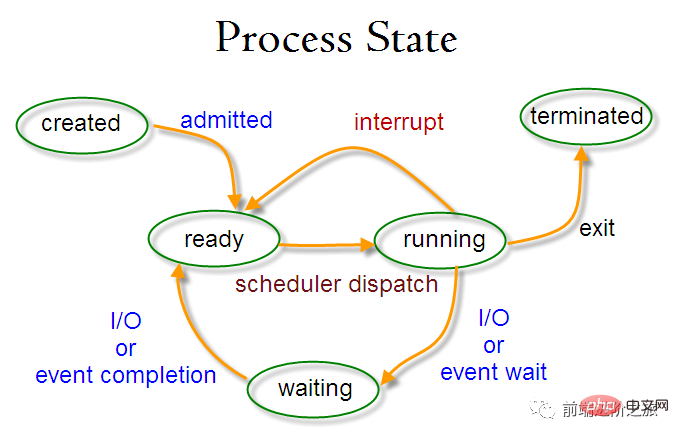

Process state switching

##Ready state (ready ): Waiting to be scheduled

Running state (running)

Blocking state (waiting ): Waiting for resources

Only the ready state and the running state can be converted to each other, other are all one-way conversions. The process in the ready state obtains CPU time through the scheduling algorithm and changes to the running state; while the process in the running state will change to the ready state after the CPU time slice allocated to it is used up, waiting for the next scheduling.

The blocking state is the lack of required resources and is converted from the running state. However, this resource does not include CPU time. The lack of CPU time will be converted from the running state to Ready state.

Process Scheduling Algorithm

The scheduling algorithm goals of different environments are different, so scheduling algorithms need to be discussed for different environments.

1. Batch processing system

The batch processing system does not have too many user operations. In this system, the scheduling algorithm goal is to ensure throughput and turnaround time (from time from submission to termination).

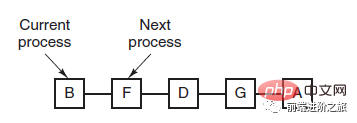

1.1 First-come first-serverd (FCFS)

Non-preemptive scheduling algorithm, scheduling in the order of requests.

It is good for long jobs, but not good for short jobs, because short jobs must wait for the previous long jobs to be completed before they can be executed, and long jobs need to be executed for a long time, resulting in long waiting times for short jobs. .

1.2 Shortest job first (SJF)

A non-preemptive scheduling algorithm that schedules in the order of the shortest estimated running time.

Long jobs may starve to death and are in a state of waiting for short jobs to be completed. Because if there are always short jobs coming, then long jobs will never be scheduled.

1.3 Shortest remaining time next (SRTN)

A preemptive version of the shortest job first, scheduled in the order of remaining running time. When a new job arrives, its entire running time is compared with the remaining time of the current process. If the new process requires less time, the current process is suspended and the new process is run. Otherwise the new process waits.

2. Interactive system

Interactive systems have a large number of user interactions, and the goal of the scheduling algorithm in this system is to respond quickly.

2.1 Time slice rotation

Arrange all ready processes into a queue according to the FCFS principle. Each time it is scheduled, allocate CPU time to the queue leader process, which can execute a time slice. When the time slice is used up, a clock interrupt is issued by the timer, and the scheduler stops the execution of the process and sends it to the end of the ready queue, while continuing to allocate CPU time to the process at the head of the queue.

The efficiency of the time slice rotation algorithm has a great relationship with the size of the time slice:

Because process switching requires saving process information and loading new For process information, if the time slice is too small, it will cause process switching too frequently, and too much time will be spent on process switching.

#And if the time slice is too long, real-time performance cannot be guaranteed.

##2.2 Priority Scheduling

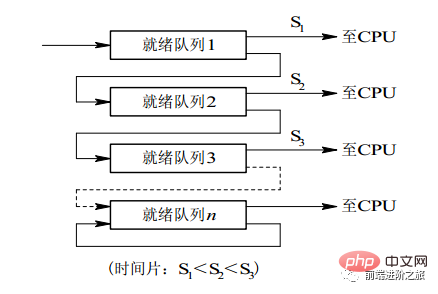

for each process Assign a priority and schedule according to priority. In order to prevent low-priority processes from never waiting for scheduling, you can increase the priority of waiting processes over time.2.3 Multi-level feedback queue

A process needs to execute 100 time slices. If the time slice rotation scheduling algorithm is used, 100 exchanges are required. Multi-level queues are considered for processes that need to execute multiple time slices continuously. It sets up multiple queues, and each queue has a different time slice size, such as 1, 2, 4, 8,. .. If the process does not finish executing in the first queue, it will be moved to the next queue. In this way, the previous process only needs to be swapped 7 times. Each queue has a different priority, and the one at the top has the highest priority. Therefore, the process on the current queue can be scheduled only if there is no process in the previous queue.This scheduling algorithm can be regarded as a combination of the time slice rotation scheduling algorithm and the priority scheduling algorithm.

3. Real-time system

Real-time system requires that a request be responded to within a certain time.

牛逼啊!接私活必备的 N 个开源项目!赶快收藏吧...

It is divided into hard real-time and soft real-time. The former must meet the absolute deadline, and the latter can tolerate a certain timeout.

Process Synchronization

1. Critical Section

The code that accesses critical resources is called the critical section.

In order to mutually exclude access to critical resources, each process needs to be checked before entering the critical section.

// entry section // critical section; // exit section

2. Synchronization and mutual exclusion

#Synchronization: Multiple processes have direct constraints due to cooperation, so that the processes have There is a certain sequential execution relationship.

#Mutual exclusion: Only one process of multiple processes can enter the critical section at the same time.

3. Semaphore

信号量(Semaphore)是一个整型变量,可以对其执行 down 和 up 操作,也就是常见的 P 和 V 操作。

down : 如果信号量大于 0 ,执行 -1 操作;如果信号量等于 0,进程睡眠,等待信号量大于 0;

up :对信号量执行 +1 操作,唤醒睡眠的进程让其完成 down 操作。

down 和 up 操作需要被设计成原语,不可分割,通常的做法是在执行这些操作的时候屏蔽中断。

如果信号量的取值只能为 0 或者 1,那么就成为了 互斥量(Mutex) ,0 表示临界区已经加锁,1 表示临界区解锁。

typedef int semaphore;

semaphore mutex = 1;

void P1() {

down(&mutex);

// 临界区

up(&mutex);

}

void P2() {

down(&mutex);

// 临界区

up(&mutex);

}使用信号量实现生产者-消费者问题

问题描述:使用一个缓冲区来保存物品,只有缓冲区没有满,生产者才可以放入物品;只有缓冲区不为空,消费者才可以拿走物品。

因为缓冲区属于临界资源,因此需要使用一个互斥量 mutex 来控制对缓冲区的互斥访问。

为了同步生产者和消费者的行为,需要记录缓冲区中物品的数量。数量可以使用信号量来进行统计,这里需要使用两个信号量:empty 记录空缓冲区的数量,full 记录满缓冲区的数量。其中,empty 信号量是在生产者进程中使用,当 empty 不为 0 时,生产者才可以放入物品;full 信号量是在消费者进程中使用,当 full 信号量不为 0 时,消费者才可以取走物品。

注意,不能先对缓冲区进行加锁,再测试信号量。也就是说,不能先执行 down(mutex) 再执行 down(empty)。如果这么做了,那么可能会出现这种情况:生产者对缓冲区加锁后,执行 down(empty) 操作,发现 empty = 0,此时生产者睡眠。消费者不能进入临界区,因为生产者对缓冲区加锁了,消费者就无法执行 up(empty) 操作,empty 永远都为 0,导致生产者永远等待下,不会释放锁,消费者因此也会永远等待下去。

#define N 100

typedef int semaphore;

semaphore mutex = 1;

semaphore empty = N;

semaphore full = 0;

void producer() {

while(TRUE) {

int item = produce_item();

down(&empty);

down(&mutex);

insert_item(item);

up(&mutex);

up(&full);

}

}

void consumer() {

while(TRUE) {

down(&full);

down(&mutex);

int item = remove_item();

consume_item(item);

up(&mutex);

up(&empty);

}

}4. 管程

使用信号量机制实现的生产者消费者问题需要客户端代码做很多控制,而管程把控制的代码独立出来,不仅不容易出错,也使得客户端代码调用更容易。

c 语言不支持管程,下面的示例代码使用了类 Pascal 语言来描述管程。示例代码的管程提供了 insert() 和 remove() 方法,客户端代码通过调用这两个方法来解决生产者-消费者问题。

monitor ProducerConsumer integer i; condition c; procedure insert(); begin // ... end; procedure remove(); begin // ... end; end monitor;

管程有一个重要特性:在一个时刻只能有一个进程使用管程。进程在无法继续执行的时候不能一直占用管程,否则其它进程永远不能使用管程。

管程引入了 条件变量 以及相关的操作:wait() 和 signal() 来实现同步操作。对条件变量执行 wait() 操作会导致调用进程阻塞,把管程让出来给另一个进程持有。signal() 操作用于唤醒被阻塞的进程。

使用管程实现生产者-消费者问题

// 管程 monitor ProducerConsumer condition full, empty; integer count := 0; condition c; procedure insert(item: integer); begin if count = N then wait(full); insert_item(item); count := count + 1; if count = 1 then signal(empty); end; function remove: integer; begin if count = 0 then wait(empty); remove = remove_item; count := count - 1; if count = N -1 then signal(full); end; end monitor; // 生产者客户端 procedure producer begin while true do begin item = produce_item; ProducerConsumer.insert(item); end end; // 消费者客户端 procedure consumer begin while true do begin item = ProducerConsumer.remove; consume_item(item); end end;

经典同步问题

生产者和消费者问题前面已经讨论过了。

1. 哲学家进餐问题

五个哲学家围着一张圆桌,每个哲学家面前放着食物。哲学家的生活有两种交替活动:吃饭以及思考。当一个哲学家吃饭时,需要先拿起自己左右两边的两根筷子,并且一次只能拿起一根筷子。

下面是一种错误的解法,如果所有哲学家同时拿起左手边的筷子,那么所有哲学家都在等待其它哲学家吃完并释放自己手中的筷子,导致死锁。

#define N 5

void philosopher(int i) {

while(TRUE) {

think();

take(i); // 拿起左边的筷子

take((i+1)%N); // 拿起右边的筷子

eat();

put(i);

put((i+1)%N);

}

}为了防止死锁的发生,可以设置两个条件:

必须同时拿起左右两根筷子;

只有在两个邻居都没有进餐的情况下才允许进餐。

#define N 5

#define LEFT (i + N - 1) % N // 左邻居

#define RIGHT (i + 1) % N // 右邻居

#define THINKING 0

#define HUNGRY 1

#define EATING 2

typedef int semaphore;

int state[N]; // 跟踪每个哲学家的状态

semaphore mutex = 1; // 临界区的互斥,临界区是 state 数组,对其修改需要互斥

semaphore s[N]; // 每个哲学家一个信号量

void philosopher(int i) {

while(TRUE) {

think(i);

take_two(i);

eat(i);

put_two(i);

}

}

void take_two(int i) {

down(&mutex);

state[i] = HUNGRY;

check(i);

up(&mutex);

down(&s[i]); // 只有收到通知之后才可以开始吃,否则会一直等下去

}

void put_two(i) {

down(&mutex);

state[i] = THINKING;

check(LEFT); // 尝试通知左右邻居,自己吃完了,你们可以开始吃了

check(RIGHT);

up(&mutex);

}

void eat(int i) {

down(&mutex);

state[i] = EATING;

up(&mutex);

}

// 检查两个邻居是否都没有用餐,如果是的话,就 up(&s[i]),使得 down(&s[i]) 能够得到通知并继续执行

void check(i) {

if(state[i] == HUNGRY && state[LEFT] != EATING && state[RIGHT] !=EATING) {

state[i] = EATING;

up(&s[i]);

}

}2. 读者-写者问题

允许多个进程同时对数据进行读操作,但是不允许读和写以及写和写操作同时发生。另外,搜索公众号顶级算法后台回复“算法”,获取一份惊喜礼包。

一个整型变量 count 记录在对数据进行读操作的进程数量,一个互斥量 count_mutex 用于对 count 加锁,一个互斥量 data_mutex 用于对读写的数据加锁。

typedef int semaphore;

semaphore count_mutex = 1;

semaphore data_mutex = 1;

int count = 0;

void reader() {

while(TRUE) {

down(&count_mutex);

count++;

if(count == 1) down(&data_mutex); // 第一个读者需要对数据进行加锁,防止写进程访问

up(&count_mutex);

read();

down(&count_mutex);

count--;

if(count == 0) up(&data_mutex);

up(&count_mutex);

}

}

void writer() {

while(TRUE) {

down(&data_mutex);

write();

up(&data_mutex);

}

}以下内容由 @Bandi Yugandhar 提供。

The first case may result Writer to starve. This case favous Writers i.e no writer, once added to the queue, shall be kept waiting longer than absolutely necessary(only when there are readers that entered the queue before the writer).

int readcount, writecount; //(initial value = 0)

semaphore rmutex, wmutex, readLock, resource; //(initial value = 1)

//READER

void reader() {

<ENTRY Section>

down(&readLock); // reader is trying to enter

down(&rmutex); // lock to increase readcount

readcount++;

if (readcount == 1)

down(&resource); //if you are the first reader then lock the resource

up(&rmutex); //release for other readers

up(&readLock); //Done with trying to access the resource

<CRITICAL Section>

//reading is performed

<EXIT Section>

down(&rmutex); //reserve exit section - avoids race condition with readers

readcount--; //indicate you're leaving

if (readcount == 0) //checks if you are last reader leaving

up(&resource); //if last, you must release the locked resource

up(&rmutex); //release exit section for other readers

}

//WRITER

void writer() {

<ENTRY Section>

down(&wmutex); //reserve entry section for writers - avoids race conditions

writecount++; //report yourself as a writer entering

if (writecount == 1) //checks if you're first writer

down(&readLock); //if you're first, then you must lock the readers out. Prevent them from trying to enter CS

up(&wmutex); //release entry section

<CRITICAL Section>

down(&resource); //reserve the resource for yourself - prevents other writers from simultaneously editing the shared resource

//writing is performed

up(&resource); //release file

<EXIT Section>

down(&wmutex); //reserve exit section

writecount--; //indicate you're leaving

if (writecount == 0) //checks if you're the last writer

up(&readLock); //if you're last writer, you must unlock the readers. Allows them to try enter CS for reading

up(&wmutex); //release exit section

}We can observe that every reader is forced to acquire ReadLock. On the otherhand, writers doesn’t need to lock individually. Once the first writer locks the ReadLock, it will be released only when there is no writer left in the queue.

From the both cases we observed that either reader or writer has to starve. Below solutionadds the constraint that no thread shall be allowed to starve; that is, the operation of obtaining a lock on the shared data will always terminate in a bounded amount of time.

int readCount; // init to 0; number of readers currently accessing resource

// all semaphores initialised to 1

Semaphore resourceAccess; // controls access (read/write) to the resource

Semaphore readCountAccess; // for syncing changes to shared variable readCount

Semaphore serviceQueue; // FAIRNESS: preserves ordering of requests (signaling must be FIFO)

void writer()

{

down(&serviceQueue); // wait in line to be servicexs

// <ENTER>

down(&resourceAccess); // request exclusive access to resource

// </ENTER>

up(&serviceQueue); // let next in line be serviced

// <WRITE>

writeResource(); // writing is performed

// </WRITE>

// <EXIT>

up(&resourceAccess); // release resource access for next reader/writer

// </EXIT>

}

void reader()

{

down(&serviceQueue); // wait in line to be serviced

down(&readCountAccess); // request exclusive access to readCount

// <ENTER>

if (readCount == 0) // if there are no readers already reading:

down(&resourceAccess); // request resource access for readers (writers blocked)

readCount++; // update count of active readers

// </ENTER>

up(&serviceQueue); // let next in line be serviced

up(&readCountAccess); // release access to readCount

// <READ>

readResource(); // reading is performed

// </READ>

down(&readCountAccess); // request exclusive access to readCount

// <EXIT>

readCount--; // update count of active readers

if (readCount == 0) // if there are no readers left:

up(&resourceAccess); // release resource access for all

// </EXIT>

up(&readCountAccess); // release access to readCount

}进程通信

进程同步与进程通信很容易混淆,它们的区别在于:

进程同步:控制多个进程按一定顺序执行;

进程通信:进程间传输信息。

进程通信是一种手段,而进程同步是一种目的。也可以说,为了能够达到进程同步的目的,需要让进程进行通信,传输一些进程同步所需要的信息。

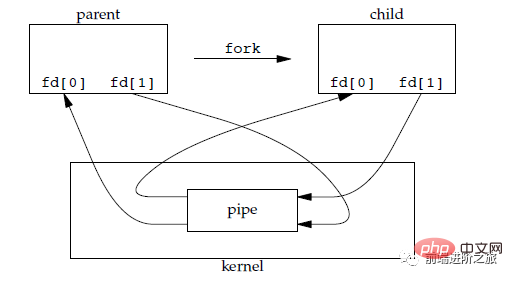

1. 管道

管道是通过调用 pipe 函数创建的,fd[0] 用于读,fd[1] 用于写。

#include <unistd.h> int pipe(int fd[2]);

它具有以下限制:

只支持半双工通信(单向交替传输);

只能在父子进程或者兄弟进程中使用。

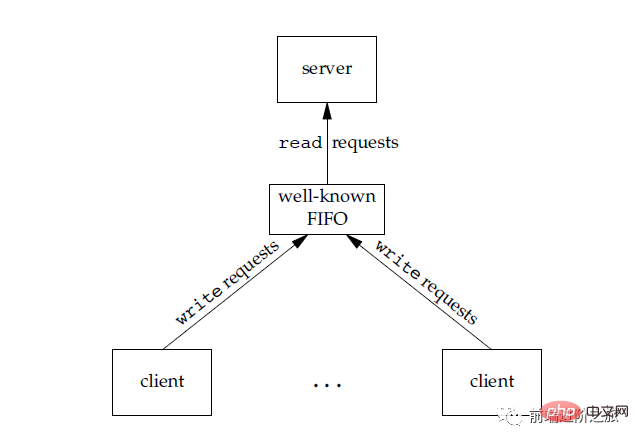

2. FIFO

也称为命名管道,去除了管道只能在父子进程中使用的限制。

#include <sys/stat.h> int mkfifo(const char *path, mode_t mode); int mkfifoat(int fd, const char *path, mode_t mode);

FIFO 常用于客户-服务器应用程序中,FIFO 用作汇聚点,在客户进程和服务器进程之间传递数据。

3. Message Queue

Compared with FIFO, Message Queue has the following advantages:

The message queue can exist independently of the reading and writing processes, thus avoiding the difficulties that may arise when opening and closing synchronization pipes in FIFO;

Avoids the synchronization blocking problem of FIFO and does not require the process to provide its own synchronization method;

The reading process can selectively receive messages according to the message type , unlike FIFO which can only receive by default.

4. Semaphore

It is a counter used to provide multiple processes with access to shared data objects.

5. Shared storage

Allows multiple processes to share a given storage area. Because data does not need to be copied between processes, this is the fastest type of IPC.

You need to use a semaphore to synchronize access to shared storage.

Multiple processes can map the same file into their address space to achieve shared memory. In addition, XSI shared memory does not use files, but uses anonymous segments of memory.

6. Socket

Different from other communication mechanisms, it can be used for process communication between different machines.

3. Memory Management

Virtual Memory

The purpose of virtual memory is to expand the physical memory into a larger logical memory, allowing the program to obtain more available memory.

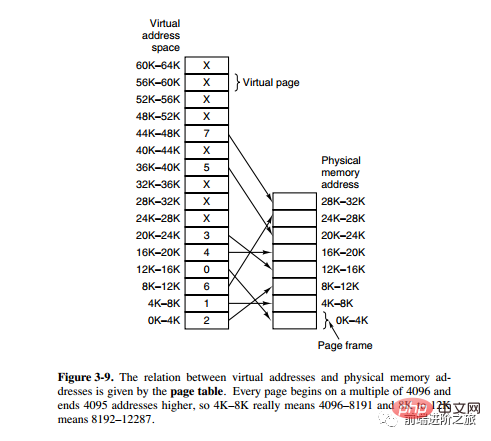

In order to better manage memory, the operating system abstracts memory into an address space. Each program has its own address space, which is divided into multiple blocks, each block is called a page. The pages are mapped into physical memory, but do not need to be mapped into contiguous physical memory, nor do all pages need to be in physical memory. When a program references a page that is not in physical memory, the hardware performs the necessary mapping, loads the missing portion into physical memory, and re-executes the failed instruction.

As can be seen from the above description, virtual memory allows the program to not map every page in the address space to physical memory, which means that a program does not need to be loaded into the memory to run. This It makes it possible to run large programs with limited memory. For example, if a computer can generate 16-bit addresses, then the address space range of a program is 0~64K. The computer has only 32KB of physical memory, and virtual memory technology allows the computer to run a 64K program.

Paging system address mapping

The memory management unit (MMU) manages the address space and physical memory conversion, in which the page table (Page table) stores the mapping table between pages (program address space) and page frames (physical memory space).

A virtual address is divided into two parts, one part stores the page number and the other part stores the offset.

下图的页表存放着 16 个页,这 16 个页需要用 4 个比特位来进行索引定位。例如对于虚拟地址(0010 000000000100),前 4 位是存储页面号 2,读取表项内容为(110 1),页表项最后一位表示是否存在于内存中,1 表示存在。后 12 位存储偏移量。这个页对应的页框的地址为 (110 000000000100)。

页面置换算法

在程序运行过程中,如果要访问的页面不在内存中,就发生缺页中断从而将该页调入内存中。此时如果内存已无空闲空间,系统必须从内存中调出一个页面到磁盘对换区中来腾出空间。

页面置换算法和缓存淘汰策略类似,可以将内存看成磁盘的缓存。在缓存系统中,缓存的大小有限,当有新的缓存到达时,需要淘汰一部分已经存在的缓存,这样才有空间存放新的缓存数据。

页面置换算法的主要目标是使页面置换频率最低(也可以说缺页率最低)。

1. 最佳

OPT, Optimal replacement algorithm

所选择的被换出的页面将是最长时间内不再被访问,通常可以保证获得最低的缺页率。

是一种理论上的算法,因为无法知道一个页面多长时间不再被访问。

举例:一个系统为某进程分配了三个物理块,并有如下页面引用序列:

7,0,1,2,0,3,0,4,2,3,0,3,2,1,2,0,1,7,0,1

开始运行时,先将 7, 0, 1 三个页面装入内存。当进程要访问页面 2 时,产生缺页中断,会将页面 7 换出,因为页面 7 再次被访问的时间最长。

2. 最近最久未使用

LRU, Least Recently Used

虽然无法知道将来要使用的页面情况,但是可以知道过去使用页面的情况。LRU 将最近最久未使用的页面换出。

为了实现 LRU,需要在内存中维护一个所有页面的链表。当一个页面被访问时,将这个页面移到链表表头。这样就能保证链表表尾的页面是最近最久未访问的。

因为每次访问都需要更新链表,因此这种方式实现的 LRU 代价很高。

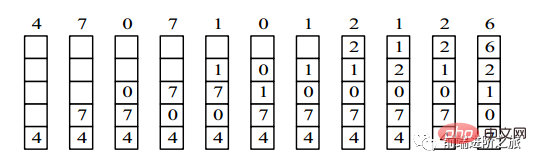

4,7,0,7,1,0,1,2,1,2,6

3. 最近未使用

NRU, Not Recently Used

每个页面都有两个状态位:R 与 M,当页面被访问时设置页面的 R=1,当页面被修改时设置 M=1。其中 R 位会定时被清零。可以将页面分成以下四类:

R=0,M=0 R=0,M=1 R=1,M=0 R=1,M=1

当发生缺页中断时,NRU 算法随机地从类编号最小的非空类中挑选一个页面将它换出。

NRU 优先换出已经被修改的脏页面(R=0,M=1),而不是被频繁使用的干净页面(R=1,M=0)。

4. First In First Out

FIFO, First In First Out

The page selected to be swapped out is the page entered first.

This algorithm will swap out frequently accessed pages, resulting in an increase in the page fault rate.

5. Second chance algorithm

The FIFO algorithm may replace frequently used pages. In order to avoid this problem, make a simple modification to the algorithm. Modification:

Set the R bit of the page to 1 when the page is accessed (read or write). When replacement is needed, check the R bit of the oldest page. If the R bit is 0, then the page is old and unused and can be replaced immediately; if it is 1, clear the R bit to 0, put the page at the end of the linked list, and modify its loading time so that It acts as if it was just loaded and continues searching from the head of the list.

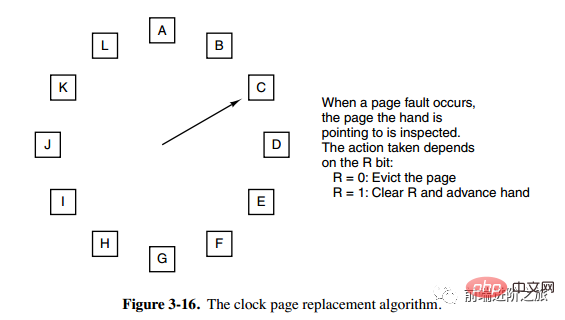

6. Clock

Clock

The second chance algorithm requires moving pages in the linked list, which reduces efficiency. The clock algorithm uses a circular linked list to connect pages, and then uses a pointer to point to the oldest page.

Segmentation

Virtual memory uses paging technology, which means that the address space is divided into fixed-size pages, and each page is mapped to the memory.

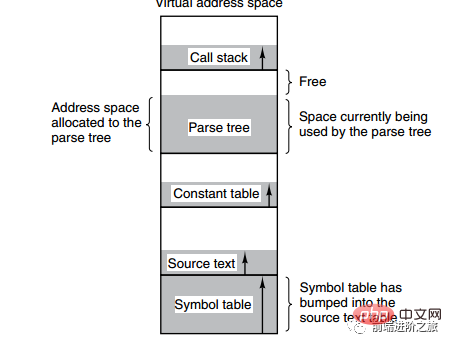

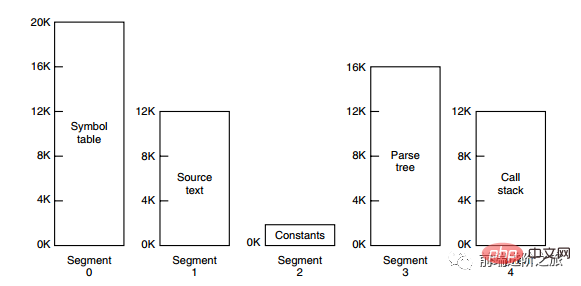

The picture below shows multiple tables created by a compiler during the compilation process. Four tables are dynamically grown. If the one-dimensional address space of the paging system is used, the dynamic growth characteristics will cause coverage problems. Appear.

The method of segmentation is to divide each table into segments, and each segment constitutes an independent address space. Each segment can be different in length and can grow dynamically.

Segment paging

The address space of the program is divided into multiple segments with independent address spaces , the address space on each segment is divided into pages of equal size. This not only has the sharing and protection of the segmented system, but also has the virtual memory function of the paging system.

Comparison of paging and segmentation

Transparency to programmers: paging is transparent, but segmentation requires programmers to display Divide each segment.

Dimensions of address space: Paging is a one-dimensional address space, and segmentation is two-dimensional.

#Whether the size can be changed: The size of the page is immutable, but the size of the segment can be changed dynamically.

Reason for appearance: Paging is mainly used to implement virtual memory to obtain a larger address space; segmentation is mainly used to enable programs and data to be divided Be a logically independent address space and facilitate sharing and protection.

4. Device Management

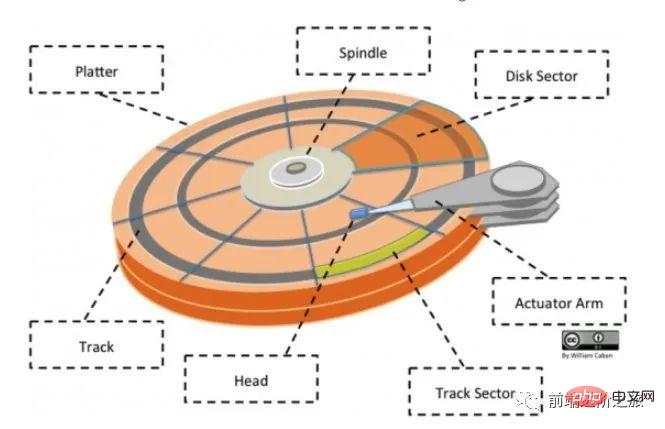

Disk Structure

Disk (Platter): A disk has multiple disks;

Track (Track): A circular strip area on the disk, one disk There can be multiple tracks;

sector (Track Sector): an arc segment on the track, a track can have multiple sectors, it is the smallest The physical storage unit currently mainly has two sizes: 512 bytes and 4 K;

Head: very close to the disk surface and can store the data on the disk The magnetic field is converted into an electrical signal (reading), or the electrical signal is converted into a magnetic field on the disk (writing); in addition, search the official account Linux and this is how you should learn to reply "Linux" in the background to get a surprise gift package.

Actuator arm: used to move the magnetic head between tracks;

Spindle: Makes the entire disk rotate.

Disk scheduling algorithm

Read and write a disk block The influencing factors of time are:

Rotation time (the spindle rotates the disk surface so that the magnetic head moves to the appropriate sector)

Seek time (the brake arm moves to move the head to the appropriate track)

Actual data transfer time

Among them, the seek time is the longest, so the main goal of disk scheduling is to minimize the average seek time of the disk.

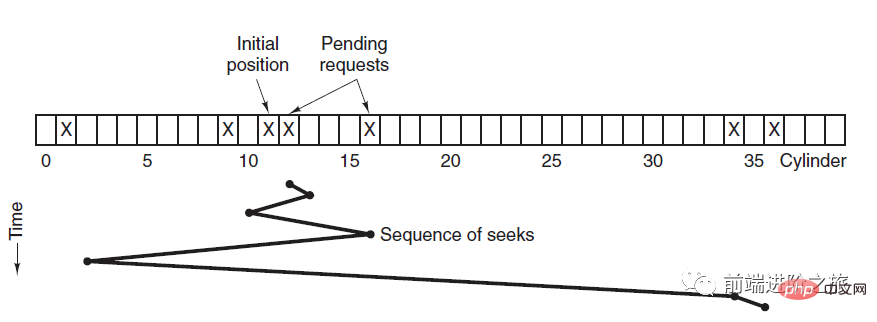

1. First Come First Served

FCFS, First Come First Served

Scheduling is performed in the order of disk requests.

The advantages are fairness and simplicity. The disadvantage is also obvious, because no optimization has been done to seek, so the average seek time may be longer.

2. Shortest Seek Time First

SSTF, Shortest Seek Time First

Prioritize scheduling the one closest to the track where the current head is located track.

Although the average seek time is relatively low, it is not fair enough. If a newly arrived track request is always closer than a waiting track request, then the waiting track request will wait forever, that is, starvation occurs. Specifically, track requests at both ends are more prone to starvation. Look at that remote control system, it’s so elegant (source code attached)!

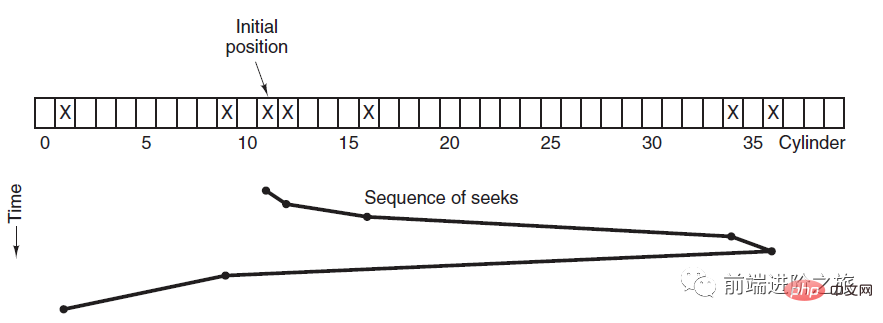

3. Elevator Algorithm

SCAN

The elevator is always Keep running in one direction until there are no requests in that direction, then change the running direction.

The elevator algorithm (scan algorithm) is similar to the running process of the elevator. It always schedules disks in one direction until there are no outstanding disk requests in that direction, and then changes the direction.

Because the movement direction is considered, all disk requests will be satisfied, solving the SSTF starvation problem.

五、链接

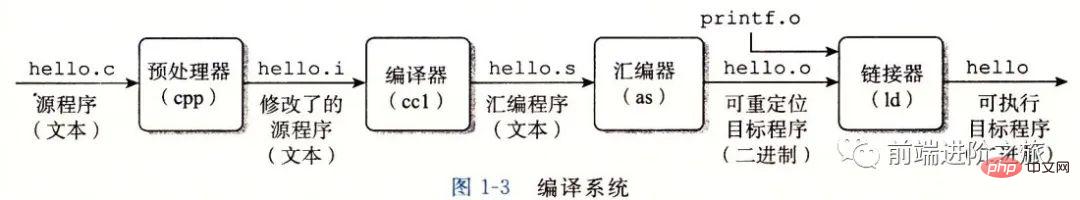

编译系统

以下是一个 hello.c 程序:

#include <stdio.h>

int main()

{

printf("hello, world\n");

return 0;

}在 Unix 系统上,由编译器把源文件转换为目标文件。

gcc -o hello hello.c

这个过程大致如下:

预处理阶段:处理以 # 开头的预处理命令;

编译阶段:翻译成汇编文件;

汇编阶段:将汇编文件翻译成可重定位目标文件;

链接阶段:将可重定位目标文件和 printf.o 等单独预编译好的目标文件进行合并,得到最终的可执行目标文件。

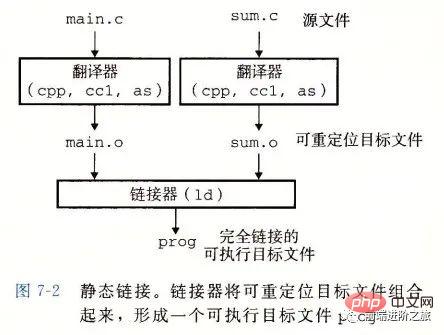

静态链接

静态链接器以一组可重定位目标文件为输入,生成一个完全链接的可执行目标文件作为输出。链接器主要完成以下两个任务:

Symbol resolution: Each symbol corresponds to a function, a global variable or a static variable. The purpose of symbol resolution is to associate each symbol reference with a symbol definition.

Relocation: The linker associates each symbol definition with a memory location and then modifies all references to these symbols so that they point to this memory Location.

Target file

Executable object files: can be executed directly in memory;

Relocatable object files: can be linked with other relocatable object files in the link phase Merge to create an executable target file;

Shared target file: This is a special relocatable target file that can be dynamically loaded at runtime Enter the memory and link;

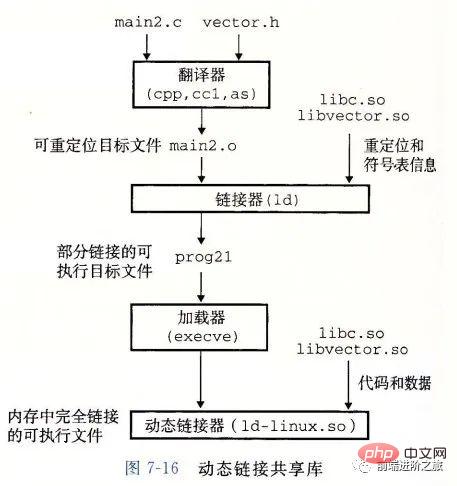

Dynamic link

The static library has the following two problems:

When the static library is updated, the entire program must be relinked;

For a standard function library like printf, if every program needs to have code, this will be a huge waste of resources.

Shared libraries are designed to solve these two problems of static libraries. They are usually represented by the .so suffix on Linux systems and on Windows systems they are called DLL. It has the following characteristics:

#A library has only one file in a given file system. All executable object files that reference the library share this file. It will not is copied into the executable file that references it;

#In memory, a .text section (the machine code of the compiled program) of a shared library Copies can be shared by different running processes.

##6. Deadlock

Necessary condition

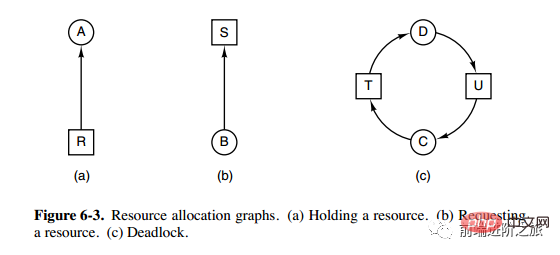

Mutually exclusive: Each resource is either already allocated to a process or is available. #Possession and waiting: A process that has already obtained a resource can request a new resource. #Non-preemptible: Resources that have been allocated to a process cannot be forcibly preempted. It can only be explicitly released by the process that occupies it. Loop waiting: There are two or more processes forming a loop, and each process in the loop is waiting for the next process. resources occupied. There are mainly the following four methods: ostrichstrategy Deadlock detection and deadlock recovery Deadlock prevention Deadlock avoidance Because the cost of solving the deadlock problem is very high, the ostrich strategy, which does not take task measures, will achieve higher performance. When a deadlock occurs, it will not have much impact on users, or the probability of a deadlock is very low, you can use the ostrich strategy. Most operating systems, including Unix, Linux and Windows, deal with the deadlock problem simply by ignoring it. Does not try to prevent deadlock, but takes measures to recover when a deadlock is detected. 1. Deadlock detection of one resource per type

Processing methods

##ostrich strategy

Heading the head Bury it in the sand and pretend there's no problem at all.

Deadlock detection and deadlock recovery

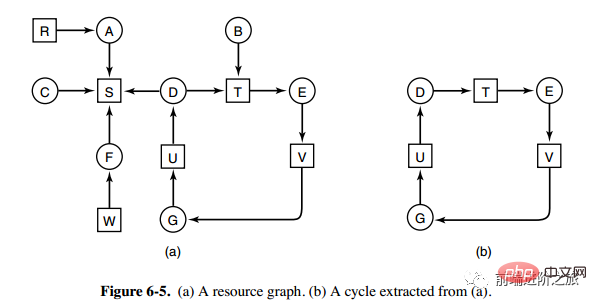

The above picture is the resource allocation diagram , where boxes represent resources and circles represent processes. The resource pointing to the process means that the resource has been allocated to the process, and the process pointing to the resource means that the process requests to obtain the resource.

The loop can be extracted from Figure a, as shown in Figure b, which satisfies the loop waiting condition, so a deadlock will occur.

The deadlock detection algorithm for each type of resource is implemented by detecting whether there is a cycle in the directed graph. Starting from a node, a depth-first search is performed, and the visited nodes are marked. If the visited nodes have been marked, nodes, it means that there is a cycle in the directed graph, that is, the occurrence of deadlock is detected.

2. Deadlock detection for multiple resources of each type

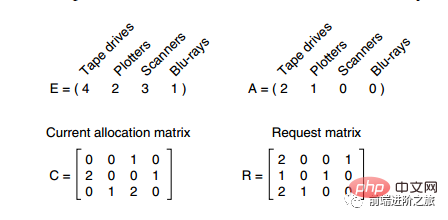

In the above figure, there are three processes and four resources. The meaning of each data is as follows:

E Vector: Total resources

A Vector: The remaining amount of resources

C Matrix: The number of resources owned by each process, Each row represents the number of resources owned by a process

#R Matrix: The number of resources requested by each process

The resources requested by processes P1 and P2 cannot be satisfied. Only process P3 can let P3 execute and then release the resources owned by P3. At this time, A = (2 2 2 0). P2 can be executed, and the resources owned by P2 are released after execution, A = (4 2 2 1). P1 can also be executed. All processes execute smoothly without deadlocks.

The algorithm is summarized as follows:

Each process is not marked at the beginning, and the execution process may be marked. When the algorithm ends, any process that is not marked is a deadlocked process.

Look for an unmarked process Pi whose requested resource is less than or equal to A.

If such a process is found, then add the i-th row vector of the C matrix to A, mark the process, and switch back to 1.

If there is no such process, the algorithm terminates.

3. Deadlock recovery

Use of preemption recovery

Using rollback recovery

Recovery by killing the process

Deadlock prevention

While the program is running Prevent deadlocks from occurring before.

1. Destruction of mutual exclusion conditions

For example, spool printer technology allows several processes to output at the same time. The only process that actually requests a physical printer is the printer daemon.

2. Destruction of possession and waiting conditions

One way to achieve this is to specify that all processes request all resources required before starting execution.

3. Destroy the non-preemption condition

4. Destroy the loop waiting

Give resources a unified number, and the process can only Request resources in numerical order.

Deadlock avoidance

Avoid deadlock while the program is running.

1. Security status

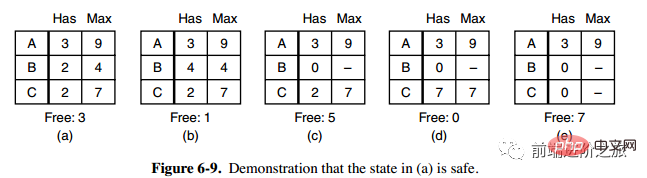

The second column Has in Figure a represents the number of resources already owned, the third column Max represents the total number of resources required, and Free represents the number of resources that can be used. Starting from picture a, first let B have all the resources it needs (picture b), release B after the operation is completed, and Free becomes 5 (picture c); then run C and A in the same way, so that all processes Both can run successfully, so the state shown in Figure a can be said to be safe.

Definition: If no deadlock occurs, and even if all processes suddenly request the maximum demand for resources, there is still a certain scheduling order that allows each process to run to completion, then the state is said to be safe.

The detection of safe state is similar to the detection of deadlock, because the safe state must require that deadlock cannot occur. The following banker's algorithm is very similar to the deadlock detection algorithm and can be combined for reference and comparison.

2. Banker Algorithm for a Single Resource

A banker in a small town promised a certain amount to a group of customers. What the algorithm has to do is make judgments. Whether satisfying the request will enter an unsafe state, if so, reject the request; otherwise, assign it.

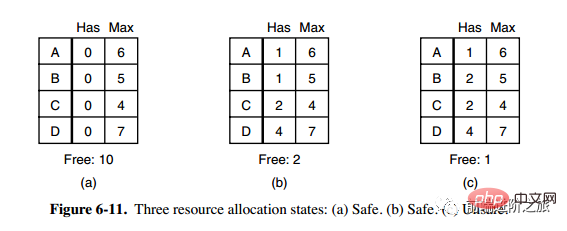

The figure c above is an unsafe state, so the algorithm will reject the previous request to avoid entering the state in figure c.

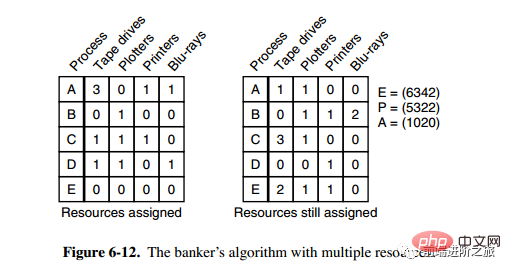

3. Banker Algorithm for Multiple Resources

There are five processes in the above figure, Four resources. The picture on the left represents the resources that have been allocated, and the picture on the right represents the resources that still need to be allocated. The rightmost E, P and A respectively represent: total resources, allocated resources and available resources. Note that these three are vectors, not specific values. For example, A=(1020), which means that 1/4 resources are left respectively. 0/2/0.

The algorithm for checking whether a state is safe is as follows:

Find whether there is a row in the matrix on the right that is less than or equal to vector A. If no such row exists, the system will deadlock and the state is unsafe.

If such a line is found, mark the process as terminated and add its allocated resources to A.

Repeat the above two steps until all processes are marked for termination, then the state is safe.

If a state is not safe, entry into this state needs to be denied.

The above is the detailed content of 30 pictures detailing the operating system summary!. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

How to create oracle database How to create oracle database

Apr 11, 2025 pm 02:36 PM

How to create oracle database How to create oracle database

Apr 11, 2025 pm 02:36 PM

To create an Oracle database, the common method is to use the dbca graphical tool. The steps are as follows: 1. Use the dbca tool to set the dbName to specify the database name; 2. Set sysPassword and systemPassword to strong passwords; 3. Set characterSet and nationalCharacterSet to AL32UTF8; 4. Set memorySize and tablespaceSize to adjust according to actual needs; 5. Specify the logFile path. Advanced methods are created manually using SQL commands, but are more complex and prone to errors. Pay attention to password strength, character set selection, tablespace size and memory

What are the methods of tuning performance of Zookeeper on CentOS

Apr 14, 2025 pm 03:18 PM

What are the methods of tuning performance of Zookeeper on CentOS

Apr 14, 2025 pm 03:18 PM

Zookeeper performance tuning on CentOS can start from multiple aspects, including hardware configuration, operating system optimization, configuration parameter adjustment, monitoring and maintenance, etc. Here are some specific tuning methods: SSD is recommended for hardware configuration: Since Zookeeper's data is written to disk, it is highly recommended to use SSD to improve I/O performance. Enough memory: Allocate enough memory resources to Zookeeper to avoid frequent disk read and write. Multi-core CPU: Use multi-core CPU to ensure that Zookeeper can process it in parallel.

What is Linux actually good for?

Apr 12, 2025 am 12:20 AM

What is Linux actually good for?

Apr 12, 2025 am 12:20 AM

Linux is suitable for servers, development environments, and embedded systems. 1. As a server operating system, Linux is stable and efficient, and is often used to deploy high-concurrency applications. 2. As a development environment, Linux provides efficient command line tools and package management systems to improve development efficiency. 3. In embedded systems, Linux is lightweight and customizable, suitable for environments with limited resources.

How Debian improves Hadoop data processing speed

Apr 13, 2025 am 11:54 AM

How Debian improves Hadoop data processing speed

Apr 13, 2025 am 11:54 AM

This article discusses how to improve Hadoop data processing efficiency on Debian systems. Optimization strategies cover hardware upgrades, operating system parameter adjustments, Hadoop configuration modifications, and the use of efficient algorithms and tools. 1. Hardware resource strengthening ensures that all nodes have consistent hardware configurations, especially paying attention to CPU, memory and network equipment performance. Choosing high-performance hardware components is essential to improve overall processing speed. 2. Operating system tunes file descriptors and network connections: Modify the /etc/security/limits.conf file to increase the upper limit of file descriptors and network connections allowed to be opened at the same time by the system. JVM parameter adjustment: Adjust in hadoop-env.sh file

Where is the Redis restart service

Apr 10, 2025 pm 02:36 PM

Where is the Redis restart service

Apr 10, 2025 pm 02:36 PM

How to restart the Redis service in different operating systems: Linux/macOS: Use the systemctl command (systemctl restart redis-server) or the service command (service redis-server restart). Windows: Use the services.msc tool (enter "services.msc" in the Run dialog box and press Enter) and right-click the "Redis" service and select "Restart".

Who invented the mac system

Apr 12, 2025 pm 05:12 PM

Who invented the mac system

Apr 12, 2025 pm 05:12 PM

The macOS operating system was invented by Apple. Its predecessor, System Software, was launched in 1984. After many iterations, it was updated to Mac OS X in 2001 and changed its name to macOS in 2012.

What language is apache written in?

Apr 13, 2025 pm 12:42 PM

What language is apache written in?

Apr 13, 2025 pm 12:42 PM

Apache is written in C. The language provides speed, stability, portability, and direct hardware access, making it ideal for web server development.

Debian Hadoop data transmission optimization method

Apr 12, 2025 pm 08:24 PM

Debian Hadoop data transmission optimization method

Apr 12, 2025 pm 08:24 PM

The key to improving the efficiency of data transmission in DebianHadoop cluster lies in the comprehensive application of multiple strategies. This article will elaborate on optimization methods to help you significantly improve cluster performance. 1. The data localization strategy maximizes the allocation of computing tasks to the data storage nodes, effectively reducing data transmission between nodes. Hadoop's data localization mechanism will automatically move data blocks to the node where the computing task is located, thereby avoiding performance bottlenecks caused by network transmission. 2. Data compression technology adopts data compression technology during data transmission to reduce the amount of data transmitted on the network and thereby improve transmission efficiency. Hadoop supports a variety of compression algorithms, such as Snappy, Gzip, LZO, etc. You can choose the optimal algorithm according to the actual situation. three,