Best practices and experience sharing in PHP crawler development

This article shares best practices and lessons learned in PHP crawler development, along with some code examples. A crawler is an automated program that extracts useful information from web pages. In practice, we need to consider how to crawl efficiently while avoiding being blocked by the target website. The most important considerations are covered below.

1. Set a reasonable request interval

When developing a crawler, we should set a reasonable interval between requests. Sending requests too frequently puts pressure on the target website and may cause its server to block our IP address. Generally speaking, 2-3 requests per second is a relatively safe upper limit. The sleep() function adds a delay between requests; it accepts whole seconds only, so use usleep(), which takes microseconds, for shorter pauses.

sleep(1); // wait 1 second between requests
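A fixed delay is easy to recognize, so one variation is to randomize the pause slightly. The following is a minimal sketch under assumptions: the helper name randomDelayMicroseconds() and the example URLs are hypothetical, and the 0.3 to 0.5 second range is only one way to stay near the 2-3 requests per second mentioned above.

```php
<?php
// Hypothetical helper: picks a random pause between $minSeconds and $maxSeconds,
// expressed in microseconds so it can be passed to usleep().
function randomDelayMicroseconds(float $minSeconds, float $maxSeconds): int
{
    if ($minSeconds < 0 || $maxSeconds < $minSeconds) {
        throw new InvalidArgumentException('Expected 0 <= min <= max');
    }
    $min = (int) round($minSeconds * 1000000);
    $max = (int) round($maxSeconds * 1000000);

    return random_int($min, $max);
}

// Placeholder URLs, not taken from the article.
$urls = ['https://www.example.com/a', 'https://www.example.com/b'];

foreach ($urls as $url) {
    // ... send the request for $url here ...

    // Pause 0.3 to 0.5 seconds, which works out to roughly 2-3 requests per second.
    usleep(randomDelayMicroseconds(0.3, 0.5));
}
```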

2. Use a random User-Agent header

By setting the User-Agent header, we can make requests look like they come from a browser, which reduces the chance of being recognized as a crawler by the target website. We can choose a different User-Agent header for each request to increase the diversity of requests.

$userAgents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.82 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.71 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36',
];

// Pick one User-Agent at random for this request.
$randomUserAgent = $userAgents[array_rand($userAgents)];

// Header list in the format expected by CURLOPT_HTTPHEADER.
$headers = [
    'User-Agent: ' . $randomUserAgent,
];

3. Dealing with website anti-crawling mechanisms

To prevent crawling, many websites adopt anti-crawling mechanisms such as CAPTCHAs (verification codes) and IP bans. Before crawling, we can first check whether the returned pages show signs of such mechanisms. If they do, we need to write corresponding code to handle them.
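One way to perform such a check is to inspect the status code and body of each response. This is only a sketch: the helper name looksBlocked() is hypothetical, and the marker strings are assumptions, since every site words its block pages differently.

```php
<?php
// Hypothetical helper: guesses whether a response indicates that the crawler was blocked.
function looksBlocked(int $statusCode, string $body): bool
{
    // 403 (Forbidden) and 429 (Too Many Requests) are commonly used to reject crawlers.
    if (in_array($statusCode, [403, 429], true)) {
        return true;
    }

    // Assumed marker strings; real sites use their own wording.
    foreach (['captcha', 'verification code', 'access denied'] as $marker) {
        if (stripos($body, $marker) !== false) {
            return true;
        }
    }

    return false;
}

var_dump(looksBlocked(429, ''));                                // bool(true)
var_dump(looksBlocked(200, '<p>Please solve the CAPTCHA</p>')); // bool(true)
var_dump(looksBlocked(200, '<p>Hello</p>'));                    // bool(false)
```

When the check returns true, a reasonable reaction is to slow down or stop crawling that site rather than to retry immediately.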

4. Use an appropriate HTTP library

PHP offers several HTTP clients to choose from, such as the cURL extension and Guzzle. We can choose the one that best fits our needs for sending HTTP requests and processing responses.

// Send an HTTP request with the cURL extension
$ch = curl_init();
curl_setopt($ch, CURLOPT_URL, 'https://www.example.com');
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true); // return the body instead of printing it
$response = curl_exec($ch);
curl_close($ch);
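A crawler usually also needs timeouts, a User-Agent header, and a check of the return value. The sketch below shows one possible arrangement with the cURL extension. The helper names buildCurlOptions() and fetchPage() are hypothetical, and the timeout values are assumptions to be tuned for the target site.

```php
<?php
// Hypothetical helper: builds the cURL options for a GET request.
function buildCurlOptions(string $url, string $userAgent, int $timeoutSeconds = 10): array
{
    return [
        CURLOPT_URL            => $url,
        CURLOPT_RETURNTRANSFER => true,            // return the body instead of printing it
        CURLOPT_FOLLOWLOCATION => true,            // follow redirects
        CURLOPT_CONNECTTIMEOUT => 5,               // seconds to wait for the connection
        CURLOPT_TIMEOUT        => $timeoutSeconds, // seconds allowed for the whole transfer
        CURLOPT_HTTPHEADER     => ['User-Agent: ' . $userAgent],
    ];
}

// Hypothetical helper: returns [status code, body] or throws on a transport error.
function fetchPage(string $url, string $userAgent): array
{
    $ch = curl_init();
    curl_setopt_array($ch, buildCurlOptions($url, $userAgent));

    $body = curl_exec($ch);
    if ($body === false) {
        // curl_exec() returns false on failure instead of throwing.
        throw new RuntimeException('cURL error: ' . curl_error($ch));
    }

    $status = (int) curl_getinfo($ch, CURLINFO_HTTP_CODE);

    // The handle is released when $ch goes out of scope.
    return [$status, $body];
}
```

A call such as fetchPage('https://www.example.com', $randomUserAgent) would then return the status code and body together, so the caller can decide how to react to non-200 responses.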

5. Use caching sensibly

Crawling data is a time-consuming task. To improve efficiency, we can cache crawled data so that the same page is not requested repeatedly. Caching tools such as Redis and Memcached work well, or the data can simply be saved to files.
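For the file-based option, a minimal sketch could look like the following. The helper name cachedFetch(), the cache directory layout, and the one-hour default lifetime are all assumptions made for illustration; the $fetch callable stands in for whatever code actually downloads the page.

```php
<?php
// Hypothetical helper: returns the cached value for $key, or computes and stores it.
function cachedFetch(string $key, callable $fetch, string $cacheDir, int $ttlSeconds = 3600): string
{
    if (!is_dir($cacheDir)) {
        mkdir($cacheDir, 0775, true);
    }

    // Hash the key so that URLs become safe file names.
    $file = $cacheDir . '/' . sha1($key) . '.cache';

    // Reuse the cached copy while it is younger than the TTL.
    if (is_file($file) && (time() - filemtime($file)) < $ttlSeconds) {
        $cached = file_get_contents($file);
        if ($cached !== false) {
            return $cached;
        }
    }

    // Cache miss: call the fetcher and store its result.
    $value = $fetch($key);
    file_put_contents($file, $value, LOCK_EX);

    return $value;
}
```

The second call with the same key inside the lifetime reads the file and never invokes the callable, which is what saves the repeated request.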

// Cache crawled pages in Redis (requires the phpredis extension)
$redis = new Redis();
$redis->connect('127.0.0.1', 6379);

$url = 'https://www.example.com';
$response = $redis->get($url);

if ($response === false) { // cache miss
    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    $response = curl_exec($ch);
    curl_close($ch);

    if ($response !== false) { // cache successful responses only
        $redis->set($url, $response);
    }
}

echo $response;

6. Handling exceptions and errors

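During crawler development, we need to handle various exceptions and errors, such as network connection timeouts and HTTP request errors. We can use try-catch statements to catch exceptions and handle them accordingly. Note that PHP's cURL functions report failure by returning false rather than throwing, so their return values must be checked explicitly.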
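Temporary failures such as timeouts are often worth retrying. One common pattern is a retry loop with exponential backoff, sketched here under assumptions: the helper name retry() is hypothetical, and the attempt count and base delay are arbitrary defaults.

```php
<?php
// Hypothetical helper: calls $operation until it succeeds or $maxAttempts is reached.
function retry(callable $operation, int $maxAttempts = 3, int $baseDelayMs = 200)
{
    for ($attempt = 1; ; $attempt++) {
        try {
            return $operation();
        } catch (Exception $e) {
            if ($attempt >= $maxAttempts) {
                throw $e; // give up and let the caller decide what to do
            }

            // Double the wait after every failure: 200 ms, 400 ms, 800 ms, ...
            // with the default base delay.
            usleep($baseDelayMs * 1000 * (2 ** ($attempt - 1)));
        }
    }
}
```

The operation passed in only needs to throw on failure, so code that wraps curl_exec() should convert a false return value into an exception first.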

try {
    // Send the HTTP request
    // ...
} catch (Exception $e) {
    echo 'Error: ' . $e->getMessage();
}

7. Use DOM to parse HTML

For crawlers that need to extract data from HTML, PHP's DOM extension can parse the HTML and, together with XPath, locate the required data quickly and accurately.
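Real-world pages are often not valid HTML, and the parser then emits warnings. The sketch below shows one way to silence those warnings while collecting every link on a page. The helper name extractLinks() is hypothetical and the sample markup is made up for illustration.

```php
<?php
// Hypothetical helper: returns the href value of every <a> element in $html.
function extractLinks(string $html): array
{
    // Collect parser warnings internally instead of printing them.
    $previous = libxml_use_internal_errors(true);

    $dom = new DOMDocument();
    $dom->loadHTML($html);

    libxml_clear_errors();
    libxml_use_internal_errors($previous); // restore the earlier setting

    $xpath = new DOMXPath($dom);
    $links = [];

    // Select only anchors that actually carry an href attribute.
    foreach ($xpath->query('//a[@href]') as $anchor) {
        $links[] = $anchor->getAttribute('href');
    }

    return $links;
}

print_r(extractLinks('<div><a href="/a">A</a><a>no link</a><a href="/b">B</a></div>'));
```

For the sample markup, the result contains /a and /b; the anchor without an href is skipped by the XPath expression.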

$dom = new DOMDocument();
$dom->loadHTML($response);

$xpath = new DOMXPath($dom);
$elements = $xpath->query('//div[@class="example"]');

foreach ($elements as $element) {
    echo $element->nodeValue;
}

Summary:

In PHP crawler development, we need to set a reasonable request interval, use random User-Agent headers, handle the website's anti-crawling mechanisms, choose an appropriate HTTP library, use caching sensibly, handle exceptions and errors, and use the DOM to parse HTML. These best practices can help us develop efficient and reliable crawlers. Of course, there are other tips and techniques to explore and try, and I hope this article has been inspiring and helpful to you.


Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

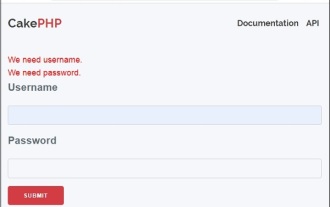

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

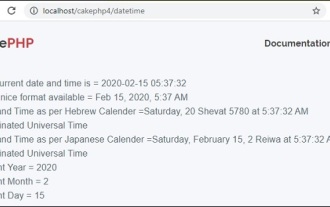

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

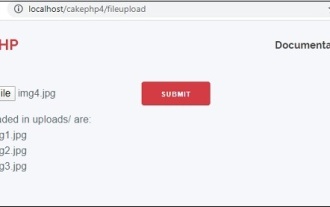

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

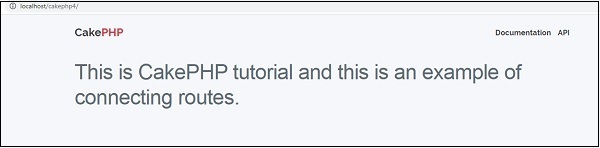

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.