Crawler + Visualization | Python Zhihu Hot List/Weibo Hot Search Sequence Chart (Part 1)

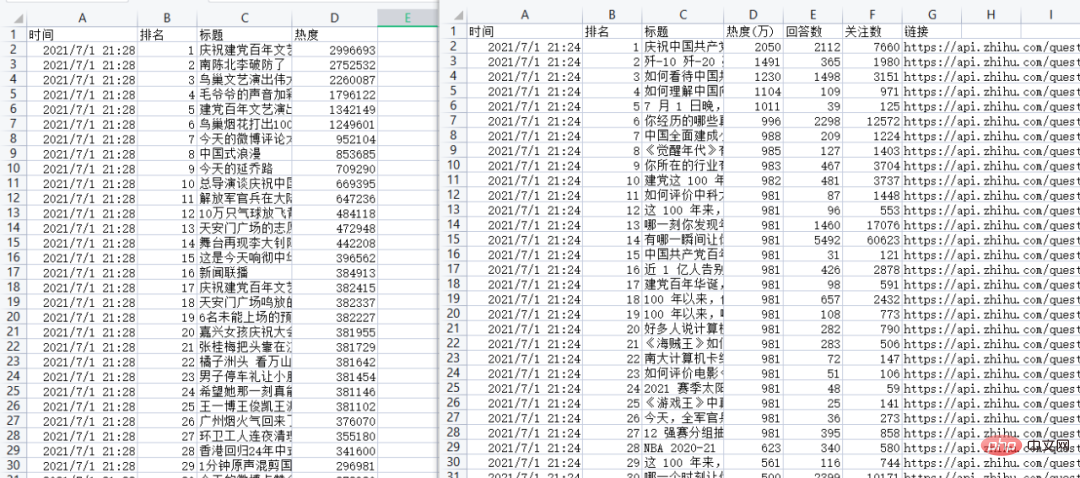

This is the first article in the Zhihu Hot List/Weibo Hot Search Sequence Chart series. It shows how to use Python to crawl Zhihu hot list and Weibo hot search data on a schedule and save it to a CSV file for later visualization. The sequence chart itself is covered in the next article. I hope it is helpful to you.

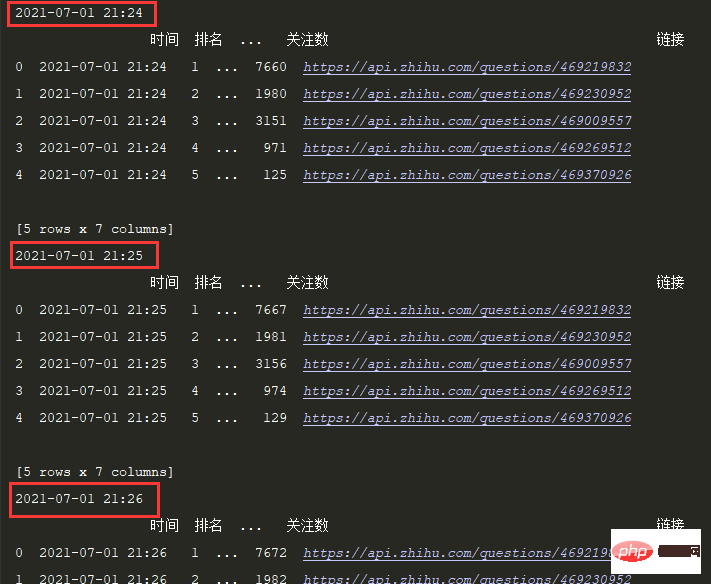

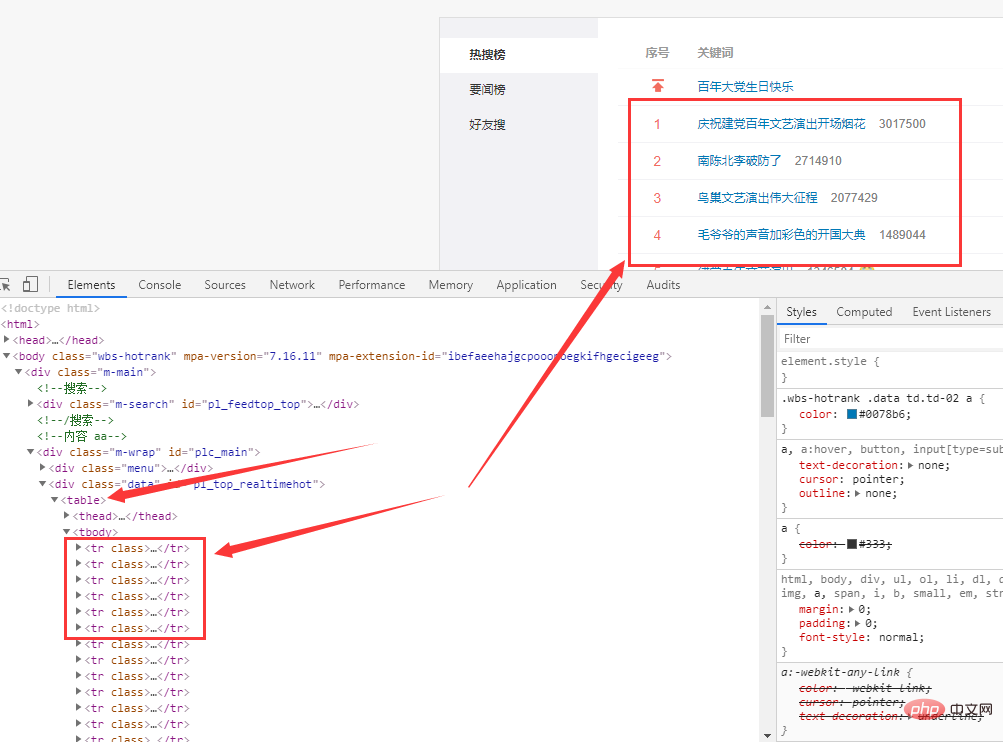

read_html: web page table processing

Note: On a desktop browser, the hot list data is visible directly in the F12 developer tools; on a phone, you need a packet capture tool to see it. Here we use the mobile API, which returns JSON and is easier to parse.

Code:

import json

import time

import requests

import schedule

import pandas as pd

from fake_useragent import UserAgent

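# Zhihu hot list page: https://www.zhihu.com/hot
# Mobile API used below (returns JSON):
zhihu_url = 'https://api.zhihu.com/topstory/hot-list?limit=10&reverse_order=0'
# Assumption: the original headers are not shown; a random User-Agent from fake_useragent is used here.
headers = {'User-Agent': UserAgent().random}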

def getzhihudata(url, headers):
    r = requests.get(url, headers=headers)
    r.raise_for_status()
    r.encoding = r.apparent_encoding
    datas = json.loads(r.text)['data']
    allinfo = []
    time_now = time.strftime("%Y-%m-%d %H:%M", time.localtime())
    print(time_now)
    for indx, item in enumerate(datas):
        title = item['target']['title']
        # Keep only the leading number of the heat text
        heat = item['detail_text'].split(' ')[0]
        answer_count = item['target']['answer_count']
        follower_count = item['target']['follower_count']
        href = item['target']['url']
        info = [time_now, indx+1, title, heat, answer_count, follower_count, href]
        allinfo.append(info)
    # Write the CSV header only on the first run
    global csv_header
    # Columns: time, rank, title, heat (in units of 10,000), answer count, follower count, link
    df = pd.DataFrame(allinfo, columns=['时间','排名','标题','热度(万)','回答数','关注数','链接'])
    print(df.head())

# Run the crawl task once every minute:

schedule.every(1).minutes.do(getzhihudata, zhihu_url, headers)

# Check for pending jobs once per second
while True:
    schedule.run_pending()
    time.sleep(1)
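The parsing step inside getzhihudata() can be tried without any network access. The following is a minimal sketch, not the author's code: `parse_hot_list` is a hypothetical helper name, and the sample payload is invented to match only the fields the function above reads (`detail_text`, `target.title`, `target.answer_count`, `target.follower_count`, `target.url`). It assumes `detail_text` starts with the heat number followed by a space.

```python
import time


def parse_hot_list(payload, now=None):
    """Turn a hot-list JSON payload into rows of
    [time, rank, title, heat, answer_count, follower_count, url]."""
    if now is None:
        now = time.strftime("%Y-%m-%d %H:%M", time.localtime())
    rows = []
    for rank, item in enumerate(payload.get("data", []), start=1):
        target = item.get("target", {})
        # Assumption: detail_text begins with the heat number, then a space.
        heat = item.get("detail_text", "").split(" ")[0]
        rows.append([
            now,
            rank,
            target.get("title", ""),
            heat,
            target.get("answer_count", 0),
            target.get("follower_count", 0),
            target.get("url", ""),
        ])
    return rows


# Hypothetical sample shaped like the fields used above; not real API output.
sample = {
    "data": [
        {
            "detail_text": "1234 万热度",
            "target": {
                "title": "Example question",
                "answer_count": 10,
                "follower_count": 20,
                "url": "https://example.com/q/1",
            },
        }
    ]
}

rows = parse_hot_list(sample, now="2025-01-01 00:00")
print(rows)
```

Keeping the parsing separate from the request makes it easier to check the row layout before leaving the crawler running on a schedule.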

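## 2.3 Save data

Append each batch to a CSV file inside getzhihudata(), after df is built. The header is written only on the first call, so csv_header must be set to True at module level before the job is scheduled.

    df.to_csv('zhuhu_hot_datas.csv', mode='a+', index=False, header=csv_header)
    csv_header = False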
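The csv_header flag lives in memory, so if the script is restarted and the flag starts as True again, a second header row would be appended to the existing file. One possible alternative, sketched below with the standard `csv` module rather than pandas, is to decide from the file itself. `append_rows` and the column names in the usage lines are hypothetical and only for illustration.

```python
import csv
import os
import tempfile


def append_rows(path, header, rows):
    """Append rows to a CSV file; write the header only if the file is new or empty."""
    write_header = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if write_header:
            writer.writerow(header)
        writer.writerows(rows)


# Usage: two separate appends to the same file produce a single header row.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "hot.csv")
    append_rows(path, ["time", "rank"], [["2025-01-01 00:00", 1]])
    append_rows(path, ["time", "rank"], [["2025-01-01 00:01", 1]])
    with open(path, encoding="utf-8") as f:
        lines = f.read().splitlines()

print(lines)
```

The same check (`os.path.exists` plus `os.path.getsize`) could also be used to compute the `header=` argument passed to `df.to_csv`.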

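## 3.1 Web page analysis

Weibo hot search URL: https://s.weibo.com/top/summary

The data is in the `<table>` tag of the web page.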

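## 3.2 Obtain data

Code:

def getweibodata():
    url = 'https://s.weibo.com/top/summary'
    r = requests.get(url, timeout=10)
    r.encoding = r.apparent_encoding
    # read_html returns one DataFrame per <table>; the hot search list is the first
    df = pd.read_html(r.text)[0]
    # Drop the first row and keep the rank ('序号') and keyword ('关键词') columns
    df = df.loc[1:, ['序号', '关键词']]
    # Drop rows whose rank cell is '•' rather than a number
    df = df[~df['序号'].isin(['•'])]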

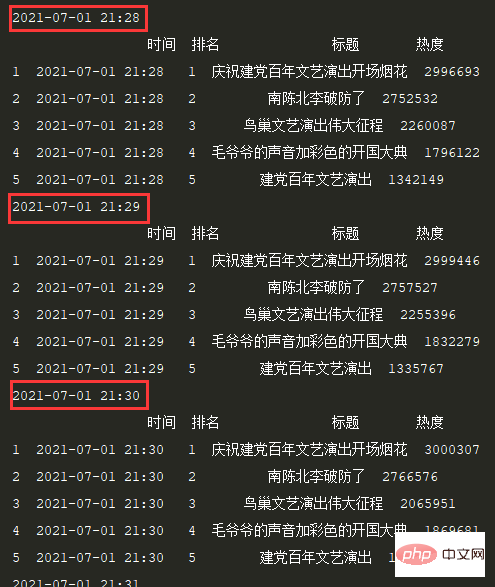

    time_now = time.strftime("%Y-%m-%d %H:%M", time.localtime())
    print(time_now)
    # Columns: time, rank, title, heat
    df['时间'] = [time_now] * df.shape[0]
    df['排名'] = df['序号'].apply(int)
    # The keyword cell holds the title and the heat separated by a space
    df['标题'] = df['关键词'].str.split(' ', expand=True)[0]
    df['热度'] = df['关键词'].str.split(' ', expand=True)[1]
    df = df[['时间','排名','标题','热度']]
    print(df.head())

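## 3.3 Save data

As with the Zhihu data, append each batch to a CSV file at the end of getweibodata(); csv_header must be True for the first write.

    df.to_csv('weibo_hot_datas.csv', mode='a+', index=False, header=csv_header)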
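The Weibo code splits the keyword cell on spaces and takes parts 0 and 1, which would cut a title short if the title itself contained a space. A hedged alternative is to split on the last space only. The sketch below uses a hypothetical helper, `split_keyword`, and assumes the heat is a trailing integer; cells that do not end in a number are returned unchanged with a heat of None.

```python
def split_keyword(cell):
    """Split 'title heat' into (title, heat).

    Assumption: the heat is the last space-separated token and is an integer.
    Returns (text, None) when no trailing number is present.
    """
    text = str(cell).strip()
    title, sep, last = text.rpartition(" ")
    if sep and last.isdigit():
        return title, int(last)
    return text, None


# Hypothetical cell values, for illustration only.
print(split_keyword("Example topic 123456"))
print(split_keyword("NoHeat"))
```

With pandas, a function like this could be applied to the keyword column through `Series.apply`, in place of the two `str.split` calls.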
