Understand current limiting and common solutions in ten minutes!

Recently, I received interview feedback from several netizens, and they were all asked current limit related questions during the interview. Let’s talk about it today. Let’s talk about the various current limiting solutions in our project.

Basic concept of current limiting

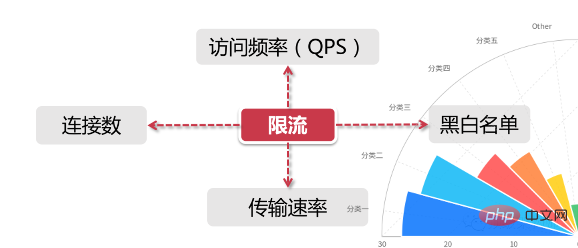

For general current limiting scenarios, it has two dimensions Information:

Time limit is based on a certain time range or a certain point in time, which is what we often call the "time window", such as every minute, every second Limit the time window of the clock Resources are limited based on available resources, such as setting the maximum number of accesses, or the maximum number of available connections

above Combining the two dimensions, current limiting means limiting resource access within a certain time window, such as setting a maximum of 100 access requests per second. But in a real scenario, we not only set one current limiting rule, but also set multiple current limiting rules to work together. The main current limiting rules are as follows:

QPS and connection number control

For (Number of connections and QPS) current limiting, we can set current limiting in the IP dimension, or we can set current limiting based on a single server.

In real environments, current limiting rules in multiple dimensions are usually set, such as setting the access frequency of the same IP to less than 10 per second. If the number of connections is less than 5, set the QPS of each machine to a maximum of 1000, and keep the number of connections to a maximum of 200. Furthermore, we can treat a server group or the servers in the entire computer room as a whole and set higher-level current limiting rules. All these current limiting rules will work together for traffic control.

Transmission rate

Everyone is familiar with "transmission rate", such as the download speed of resources. Some websites have more detailed current limiting logic in this area. For example, the download speed for ordinary registered users is 100k/s, and after purchasing a membership, it is 10M/s. Behind this is the current limiting logic based on user groups or user tags.

Black and white list

The black and white list is a very common means of limiting and releasing data in large enterprise applications, and the black and white lists often change dynamically. For example, if an IP has been visited too frequently in a period of time and is identified by the system as a robot user or traffic attack, then the IP will be added to the blacklist, thereby restricting its access to system resources. This is what we Commonly known as "IP blocking".

The crawler programs we usually see, such as crawling pictures of beautiful women on Zhihu, or crawling stock time-sharing information on brokerage systems, these crawler programs must implement the function of changing IP to prevent being added. blacklist.

Sometimes we also find that the company's network cannot access large public websites such as 12306. This is also because the outbound IP addresses of some companies are the same address. Therefore, when the number of visits is too high, this IP The address is recognized by the other party's system and added to the blacklist. Students who use home broadband should know that most network operators allocate users to different outbound IP segments, or dynamically change the user's IP address from time to time.

The whitelist is easier to understand. It is equivalent to having a gold medal given by the royal family. You can freely shuttle through various current limiting rules without any hindrance. For example, some e-commerce companies will add the accounts of very large sellers to the whitelist, because such sellers often have their own operation and maintenance systems and need to interface with the company's IT system to perform a large number of product releases, replenishments, etc. In addition, search the backend of the public account Programming Technology Circle and reply "Java" to get a surprise gift package.

Distributed environment

Distributed is different from the single-machine current limiting scenario. It considers all servers in the entire distributed environment as a whole. For example, for IP current limiting, we limit one IP to a maximum of 10 visits per second. No matter which machine the request from this IP falls on, as long as it accesses the service node in the cluster, it will be subject to current limiting. Constrained by rules.

We'd better save the current limiting information in a "centralized" component, so that it can obtain the access status of all machines in the cluster. Currently, there are two mainstream current limiting solutions:

Gateway layer current limiting applies the current limiting rules to the entrance of all traffic Middleware current limiting stores the current limiting information in the distributed In a certain middleware in the environment (such as Redis cache), each component can obtain the traffic statistics at the current moment from here, thereby deciding whether to deny service or allow traffic sentinel, A component tailor-made for microservices by the springcloud ecosystem for distributed current limiting, circuit breaker degradation and other components

Common algorithms for current limiting schemes

Token Bucket Algorithm

Token Bucket Token bucket algorithm is currently the most widely used current limiting algorithm. As the name suggests, it has the following two key roles:

The token will be processed only after the Request has obtained the token. Other Requests will either be queued or discarded directly. The place where the bucket is used to hold tokens , all Requests obtain tokens from this bucket, which mainly involves two processes: Token generation

This process involves the token generator and the token bucket. We mentioned earlier that the token bucket is a place for tokens. Since it is a bucket, it must have a capacity, which means that the token bucket can hold the number of tokens. The number of cards is a fixed value.

For the token generator, it will add tokens to the bucket according to a predetermined rate. For example, we can configure it to issue tokens at a rate of 100 requests per second, or 50 requests per minute. indivual. Note that the issuance speed here is uniform, which means that these 50 tokens are not issued all at once at the beginning of each time window, but will be issued at a uniform speed within this time window.

The token dispenser is a faucet. If the bucket holding water below is full, then the water (token) will naturally flow outside. The same is true during the token issuance process. The capacity of the token bucket is limited. If the tokens of the rated capacity are currently filled, the new tokens will be discarded.

Token acquisition

After each access request arrives, a token must be obtained to perform the following steps logic. If the number of tokens is small and there are many access requests, some requests will naturally not be able to obtain tokens. At this time, we can set up a "buffer queue" to temporarily store these excess tokens.

Buffer queue is actually an optional option, and not all programs that apply the token bucket algorithm will implement queues. When there is a cache queue, requests that have not yet obtained a token will be queued in this queue until a new token is generated, and then a request is taken from the head of the queue to match the token.

When the queue is full, this part of the access request will be discarded. In practical applications, we can also add a series of special effects to this queue, such as setting the survival time of requests in the queue, or transforming the queue into a PriorityQueue, sorting according to a certain priority instead of first in, first out.

Leaky Bucket Algorithm

Leaky Bucket is another bucket, and the current limiting algorithm is related to the bucket. So what is the difference between leaky bucket and token bucket?

The first half of the leaky bucket algorithm is similar to the token bucket, but the objects of operation are different. Token bucket puts tokens into the bucket, while leaky bucket puts the access request packets into the bucket. Likewise, if the bucket is full, incoming packets will be discarded.

The second half of the leaky bucket algorithm has distinctive characteristics. It will always flow out data packets from the bucket at a constant rate. For example, if I set up a leaky bucket to store 100 data packets, and then the outflow speed is one per second, then no matter what rate the data packets flow into the bucket, or how many data packets there are in the bucket, the leaky bucket can guarantee these data Packets are always processed at a constant rate of one second. In addition, search the official account back-end architect backend reply "clean architecture" to get a surprise gift package.

The difference between leaky bucket and token bucket

It is not difficult to see based on their respective characteristics that both algorithms have A "constant" rate and an "indeterminate" rate. The token bucket creates tokens at a constant rate, but the rate at which access requests obtain tokens is "unfixed". Anyway, as many tokens as there are, they will be issued, and if the tokens are gone, they will just wait. The leaky bucket processes requests at a "constant" rate, but the rate at which these requests flow into the bucket is "variable".

From these two characteristics, the natural characteristics of the leaky bucket determine that it will not have burst traffic. Even if 1,000 requests per second arrive, its access rate to the background service output will always be constant. The token bucket is different. Its feature can "pre-store" a certain amount of tokens. Therefore, all tokens can be consumed in a short time when dealing with sudden traffic. Its burst traffic processing efficiency will be higher than that of a leaky bucket, but it is guided. The pressure on the backend system will also increase accordingly.

Sliding window

For example, if we have 5 users visiting every second, and 10 users visiting within the 5th second, then we will visit within the time window of 0 to 5 seconds. The amount is 15. If our interface sets the upper limit of access within the time window to 20, then when the time reaches the sixth second, the total count in this time window becomes 10, because the 1 second grid has exited the time window, so in The number of visits that can be received within the sixth second is 20-10=10.

The sliding window is actually a calculator algorithm. It has a distinctive feature. When the span of the time window is longer, the current limiting effect will be smoother. For example, if the current time window is only two seconds, and all access requests are concentrated in the first second, when the time slides back one second, the count of the current window will change greatly. Extending the time window can Reduce the probability of this happening

Commonly used current limiting schemes

Legality verification current limiting

Such as verification code , IP blacklist, etc., these means can effectively prevent malicious attacks and crawler collection;

Guawa current limiting

In the field of current limiting, Guava provides the following # under its multi-threading module There are several current limiting support classes headed by ##RateLimiter, but their scope is limited to the "current" server. In other words, Guawa's current limiting is a single-machine current limiting, and it cannot do anything across machines or jvm processes. For example, Say, I currently have 2 servers [Server 1, Server 2]. Both servers have deployed a login service. If I want to perform traffic control on these two machines, Control, for example, control the total number of visits of two machines within 20 per second. If you use Guava to do it, you can only control the number of visits of each machine independently <p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;">Although Guava is not a solution for distributed systems, as a simple and lightweight client current limiting component, it is very suitable to explain the current limiting algorithm</p>

<h4 id="Gateway-layer-current-limiting">Gateway layer current limiting</h4> <p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;"> The service gateway, as the first level in the entire distributed link, accepts all user requests, so limiting current at the gateway level is a good entry point. The path from top to bottom is: </p>

<ol class="list-paddingleft-1" data-tool="mdnice编辑器" style="margin-top: 8px;margin-bottom: 8px;padding-left: 25px;">

<li><section style="margin-top: 5px;margin-bottom: 5px;line-height: 26px;color: rgb(1, 1, 1);">User traffic is forwarded from the gateway layer to the background service</section></li>

<li><section style="margin-top: 5px;margin-bottom: 5px;line-height: 26px;color: rgb(1, 1, 1);">The background service accepts the traffic and calls the cache to obtain the data</section></li>

<li><section style="margin-top: 5px;margin-bottom: 5px;line-height: 26px;color: rgb(1, 1, 1);">If there is no data in the cache, access the database</section></li>

</ol>

<p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;">The traffic decreases layer by layer from top to bottom. The gateway layer gathers the most intensive user access requests, followed by the background service . </p>

<p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;">After passing the verification logic of the background service, some erroneous requests are flushed out, and the remaining requests fall on the cache. If there is no data in the cache, the database at the bottom of the funnel will be requested, so the number of requests at the database level Minimum (compared to other components, the database is often the link with the worst concurrency capacity. Even if Alibaba’s MySQL has undergone extensive modifications, the single-machine concurrency cannot be compared with components such as Redis and Kafka) </p>

<p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;">The current mainstream gateway layer includes Nginx represented by software, as well as gateway layer components such as Gateway and Zuul in Spring Cloud</p>

<p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;"><strong>Nginx current limit</strong></p>

<p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;"> In the system architecture, Nginx's proxy and routing forwarding are a very important function of its gateway layer. Due to Nginx's inherent lightweight and excellent design, it has become the first choice of many companies. Nginx is considered from the gateway level. , can be used as the front-end gateway to withstand most of the network traffic, so using Nginx for current limiting is also a good choice. In Nginx, commonly used policy configurations based on current limiting are also provided.</p>

<p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;">Nginx provides two current limiting methods: one is to control the rate, and the other is to control the number of concurrent connections. </p>

<p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;"><strong>Control the rate</strong></p>

<p data-tool="mdnice编辑器" style="padding-top: 8px;padding-bottom: 8px;line-height: 26px;">We need to use <code style='font-size: 14px;padding: 2px 4px;border-radius: 4px;margin-right: 2px;margin-left: 2px;background-color: rgba(27, 31, 35, 0.05);font-family: "Operator Mono", Consolas, Monaco, Menlo, monospace;word-break: break-all;color: rgb(239, 112, 96);'>limit_req_zone to limit the number of requests per unit time, that is, rate limit,

Because Nginx's current limiting statistics are based on milliseconds, the speed we set is 2r/s. The conversion means that a single IP only allows one request to pass within 500 milliseconds, and the second request is only allowed to pass starting from 501ms.

Control rate optimized version

Although the above rate control is very precise, it is too harsh in a production environment. In actual circumstances, we should control the total number of visits in an IP unit within a total time, rather than being precise to milliseconds as above. We can use the burst keyword to turn on this setting

burst=4Meaning that each IP allows up to 4 burst requests

Control the number of concurrencies

Uselimit_conn_zone and limit_conn Two instructions can control the number of concurrency

limit_conn perip 10 means limiting a single IP to hold up to 10 connections at the same time;limit_conn perserver 100 means that the total number of concurrent connections that the server can handle at the same time is 100.

Note: This connection is only counted after the request header is processed by the backend.

Middleware current limiting

For a distributed environment, it is nothing more than a central node-like place to store current-limiting data. For example, if I want the access rate of the control interface to be 100 requests per second, then I need to save the number of requests received within the current 1s somewhere, and allow all nodes in the cluster environment to access. So what technology can we use to store this temporary data?

Then everyone must have thought that it must be redis. Using the expiration time feature of Redis, we can easily set the time span of the current limit (such as 10 requests per second, or 10 requests every 10 seconds). At the same time, Redis also has a special skill - script programming. We can write the current limiting logic into a script and implant it into Redis. This completely separates the current limiting responsibility from the service layer. At the same time, Redis has powerful concurrency characteristics and high The available cluster architecture can also well support current-limited access to large clusters (reids lua).

Current limiting component

In addition to the methods introduced above, there are currently some open source components that provide similar functions, such as Sentinel, which is a good choice. Sentinel is an open source component produced by Alibaba and is included in the Spring Cloud Alibaba component library. Sentinel provides a rich set of APIs and visual management consoles for current limiting, which can easily help us manage current limiting.

Consider current-limiting design from the architectural dimension

In real projects, only one current-limiting method will be used. Several methods are often used in conjunction with each other to give the current-limiting strategy a sense of hierarchy. Maximum resource usage is reached. In this process, the design of the current limiting strategy can also refer to the funnel model mentioned earlier, which is wide at the top and tight at the bottom. The design of the current limiting scheme for different parts of the funnel should pay attention to the high availability of the current components.

Take the actual project I participated in as an example. For example, we developed an interface for the product details page. Through mobile Taobao diversion, the access request on the app side will first go through Alibaba’s mtop gateway. At the gateway layer, our The current limit will be relatively loose. After the request reaches the backend product details page service through the gateway, a series of middleware current limit components will be used to perform more detailed current limit control on the service

Specific means of implementing current limiting

1) Tomcat uses maxThreads to implement current limiting.

2) Nginx’s limit_req_zone and burst are used to implement rate limiting.

3) Nginx’s limit_conn_zone and limit_conn two instructions control the total number of concurrent connections.

4) The time window algorithm can be implemented with the help of Redis's ordered collection.

5) The leaky bucket algorithm can be implemented using Redis-Cell.

6) The token algorithm can be implemented by solving Google's guava package.

It should be noted that the current limiting solution implemented with Redis can be used in distributed systems, while the current limiting solution implemented by guava can only be applied to a stand-alone environment. If you find server-side current limiting troublesome, you can directly use container current limiting (Nginx or Tomcat) without changing any code, but only if it can meet the business needs of the project.

Tomcat current limit

The maximum number of threads of Tomcat 8.5 version is in the conf/server.xml configuration. maxThreads is the maximum number of threads of Tomcat. When requesting When the concurrency is greater than this value (maxThreads), the requests will be queued for execution, thus completing the purpose of current limiting.

Notice:

The value of maxThreads can be appropriately increased. Tomcat defaults to 150 (Tomcat version 8.5), but this value is not bigger, the better. It depends on the specific server configuration. It should be noted that every time a thread is started It requires 1MB of JVM memory space for thread stack, and the more threads there are, the heavier the GC burden will be.

Finally, it should be noted that the operating system has certain restrictions on the number of threads in a process. The number of threads in each process of Windows is not allowed to exceed 2000. The number of threads in each process of Linux is not allowed. More than 1,000.

The above is the detailed content of Understand current limiting and common solutions in ten minutes!. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

What is the reason why the video account live broadcast is not pushed? How to solve the current limitation?

Mar 07, 2024 pm 02:04 PM

What is the reason why the video account live broadcast is not pushed? How to solve the current limitation?

Mar 07, 2024 pm 02:04 PM

Beginning: With the rapid development of social media, live video has become one of the important ways for people to share their lives and convey information. However, sometimes when we use the live broadcast function of the video account, we may encounter a situation where the stream cannot be pushed. So what is the reason for this? 1. What is the reason why the live broadcast of the video account does not push the stream? Network problems are one of the main reasons why live video accounts do not push streams, especially unstable network connections. When the network signal is unstable, the video stream cannot be transmitted to the server smoothly, causing the live broadcast to be unable to be pushed normally. The instability of the network connection may cause freezes, interruptions or delays in the live broadcast, affecting the user's viewing experience. Therefore, a stable network connection is crucial for smooth live video streaming. To solve this problem, you can try to improve the network

What should I do if my Douyin live broadcast has no traffic? The reason why the live broadcast room is restricted

Mar 27, 2024 pm 10:51 PM

What should I do if my Douyin live broadcast has no traffic? The reason why the live broadcast room is restricted

Mar 27, 2024 pm 10:51 PM

With the development of the Internet, live streaming has become a new marketing method in the e-commerce industry. Among many live broadcast platforms, Douyin Live has attracted much attention due to its large user base and powerful social communication effect. However, when carrying out Douyin live broadcasts to bring goods, some anchors face an embarrassing problem: there is no traffic in the live broadcast room and no one cares about the goods. So, when Douyin’s live broadcast has no traffic, how should we solve this problem? 1. What should I do if the Douyin live broadcast has no traffic? Improve content quality: The content of the live broadcast room is the key to attracting users. Anchors can start with product introductions, brand stories, interactive links, etc. to improve the quality and attractiveness of live content and make users want to buy. To reach a wider audience and meet their needs and interests, through direct

How to maintain an account if Douyin has limited traffic? What skills is needed to maintain an account?

Mar 21, 2024 pm 09:10 PM

How to maintain an account if Douyin has limited traffic? What skills is needed to maintain an account?

Mar 21, 2024 pm 09:10 PM

As one of the most popular short video platforms in the world, Douyin has attracted countless users to share their lives, talents and creativity here. As the number of users increases, competition on Douyin becomes increasingly fierce. Some users may encounter Douyin's streaming limitations, which may affect their video playback, likes, and fan growth. This article will discuss how to maintain a Douyin account after the current limit, as well as the skills that need to be mastered to maintain a Douyin account. 1. How to maintain an account when TikTok is restricted? If you find that your Douyin account has been restricted, you can take the following measures to maintain your account: Review video content: Carefully check recently posted videos to ensure they meet Douyin’s recommendation standards. If any illegal, vulgar or repetitive content is found, it will be deleted immediately. 2. Improve content quality: When creating videos, pay attention to content quality

Does not being able to add dithering equal to being restricted? How to deal with works that cannot be submitted to Doujia?

Mar 21, 2024 pm 09:47 PM

Does not being able to add dithering equal to being restricted? How to deal with works that cannot be submitted to Doujia?

Mar 21, 2024 pm 09:47 PM

On Douyin, a short video platform with more than 100 million daily active users, more and more content creators hope to increase the exposure and influence of their works by voting on Douyin. Some people also find that even if their works are not submitted to Doujia, they seem to be restricted. So, does not being able to vote for Doujia really mean that it is being restricted? This article will discuss this issue and give strategies to deal with it. 1. Does not being able to add dithering equal to being restricted? First, we need to clarify a concept, which is "current limiting". On the Douyin platform, current limiting means that the platform controls the exposure of content by adjusting its algorithm, thereby reducing the frequency with which certain content is displayed to users. Throttling does not mean that content will be completely blocked, but it will reduce the chance of them appearing in the user's field of vision. Not using dithering does not directly lead to current limiting.

How to restore traffic data after Douyin is restricted? After it is restricted, are there any other ways to speed up account recovery?

Apr 01, 2024 pm 12:21 PM

How to restore traffic data after Douyin is restricted? After it is restricted, are there any other ways to speed up account recovery?

Apr 01, 2024 pm 12:21 PM

As one of the most popular short video platforms in the world, Douyin has a huge user base and content creators. Some users may encounter the problem of having their Douyin accounts restricted, resulting in a drop in traffic data. So, how to restore traffic data after Douyin's traffic restriction? This article will introduce in detail how to recover traffic data after Douyin is restricted, and whether there are other methods to speed up account recovery. 1. How to restore traffic data after Douyin traffic restriction? First, the reasons need to be analyzed: the reason why the account is restricted may be due to violation of platform regulations, poor content quality or abnormal behavior, etc. Once you understand the reasons, you can make targeted improvements. Optimize content: Improve content quality and creativity to ensure that the content complies with Douyin’s user preferences and platform regulations. You can try to publish diversified content and find users

How does Xiaohongshu determine that it has been restricted? How to cancel?

Mar 07, 2024 pm 12:04 PM

How does Xiaohongshu determine that it has been restricted? How to cancel?

Mar 07, 2024 pm 12:04 PM

Xiaohongshu is a very popular social e-commerce platform where users can share their experiences, purchase products, etc. However, due to the huge number of users and increasing scale of the platform, in order to ensure normal operation and prevent abuse, Xiaohongshu has adopted traffic limiting measures to control user activities. So, how to judge whether you are being restricted? 1. How does Xiaohongshu determine that it has been restricted? When you find that Xiaohongshu’s dynamic recommendations are significantly reduced, it may be because of traffic restrictions. Current limiting will lead to a reduction in homepage content, which is the result of the system pushing based on user activity and operating habits. When you find that the growth rate of your followers has slowed down or stagnated significantly, it may be a sign that the platform has restricted your account. Xiaohongshu may limit the number of followers you have to reduce activity

Using Redis to implement current limiting in ThinkPHP6

Jun 21, 2023 pm 03:22 PM

Using Redis to implement current limiting in ThinkPHP6

Jun 21, 2023 pm 03:22 PM

With the widespread use of Internet applications, how to effectively control traffic has become an important issue. There are currently many methods for the specific implementation of flow control. One method is to implement current limiting through the use of Redis. This article will introduce how to use Redis to implement current limiting in ThinkPHP6. 1. What is current limiting? Current limiting is a means of controlling access traffic to a certain extent to ensure the stable operation of the business system. There are many ways to implement current limiting, the more commonly used ones are the leaky bucket algorithm and the token bucket algorithm.

Understand current limiting and common solutions in ten minutes!

Aug 15, 2023 pm 04:15 PM

Understand current limiting and common solutions in ten minutes!

Aug 15, 2023 pm 04:15 PM

Recently, I received interview feedback from several netizens, and they were all asked questions related to current limiting during the interview. Let’s talk about various current limiting solutions in our project today.