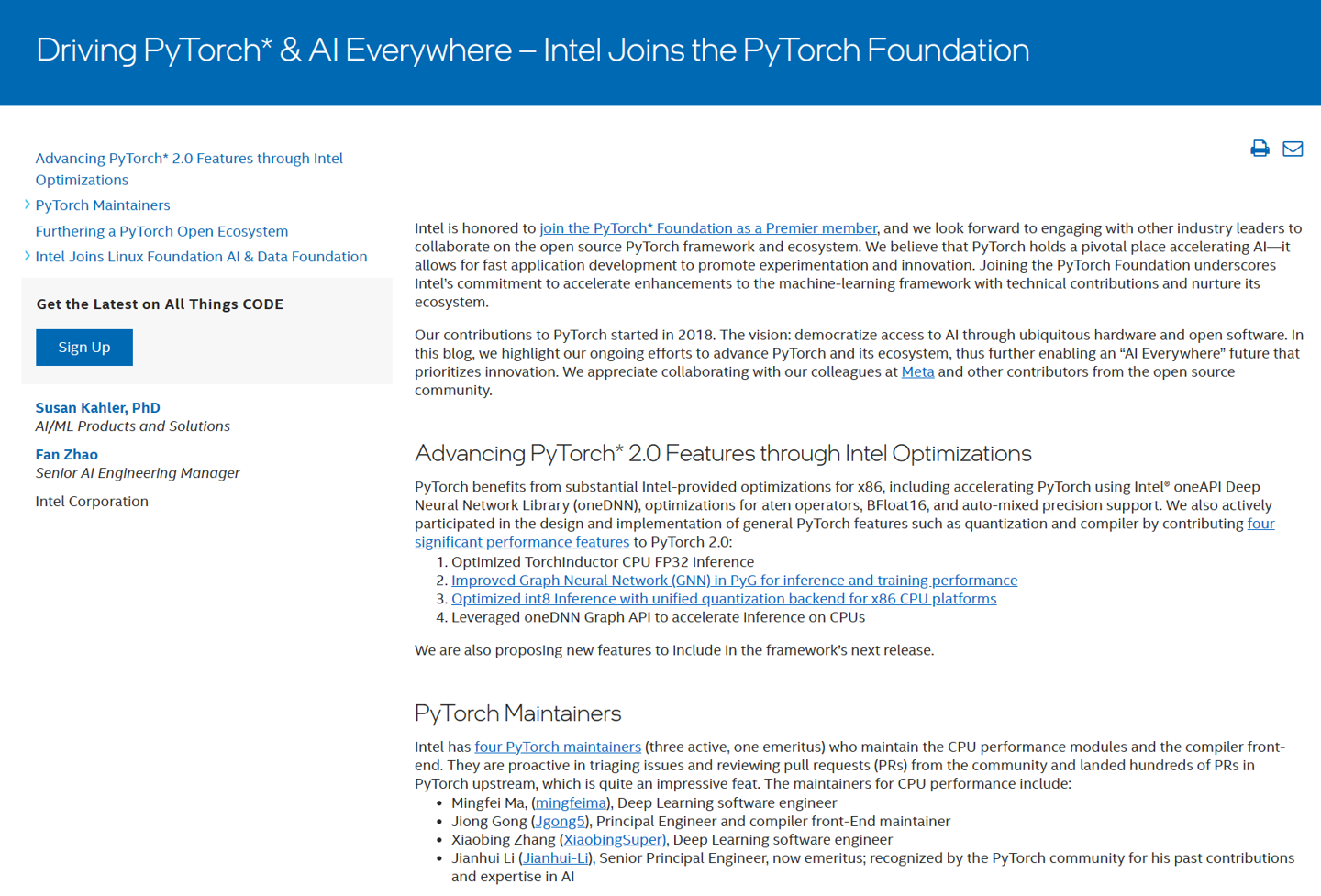

News from this site, August 14: Intel recently announced in a press release on its official website that it will join the PyTorch Foundation as a Premier member.

Intel said it has been actively involved in PyTorch development over the past five years, helping to optimize PyTorch's CPU inference performance and thereby improving AI performance on Intel processors.

According to earlier reports on this site, Intel optimized TorchInductor CPU FP32 inference in PyTorch 2.0, improved GNN inference and training performance in PyG, and optimized INT8 inference on x86 CPU platforms, using the oneDNN Graph API to speed up inference on the CPU.

Intel currently has four PyTorch maintainers, three active and one emeritus. They are Mingfei Ma (deep learning software engineer), Jiong Gong (lead engineer and compiler front-end maintainer), Xiaobing Zhang (deep learning software engineer), and Jianhui Li (senior principal engineer, now emeritus, recognized by the PyTorch community for his contributions and expertise in artificial intelligence). They are responsible for maintaining the CPU performance modules and the compiler front end, and have merged hundreds of PRs into upstream PyTorch.

