Today, I bring you an article about Dubbo IO interaction.

This article was written by a colleague who turns dry knowledge points into a lively story. It is easy to understand and fun to read, so I asked the author for permission right away to share it with everyone:

Dubbo is an excellent RPC framework with an intricate threading model. Drawing on my own limited knowledge, I will analyze Dubbo's entire IO process from start to finish. Before we start, let's first look at the following questions:

Next, I will use Dubbo 2.5.3 as the Consumer and Dubbo 2.7.3 as the Provider to describe the entire interaction process. The story is told in the first person, from the perspective of the data packets. Fasten your seat belts, let's go.

I am a data packet, born in a small town called Dubbo 2.5.3 Consumer. My mission is to deliver information, and I also love to travel.

One day, I was about to be sent out. I was told I was heading to a place called Dubbo 2.7.3 Provider.

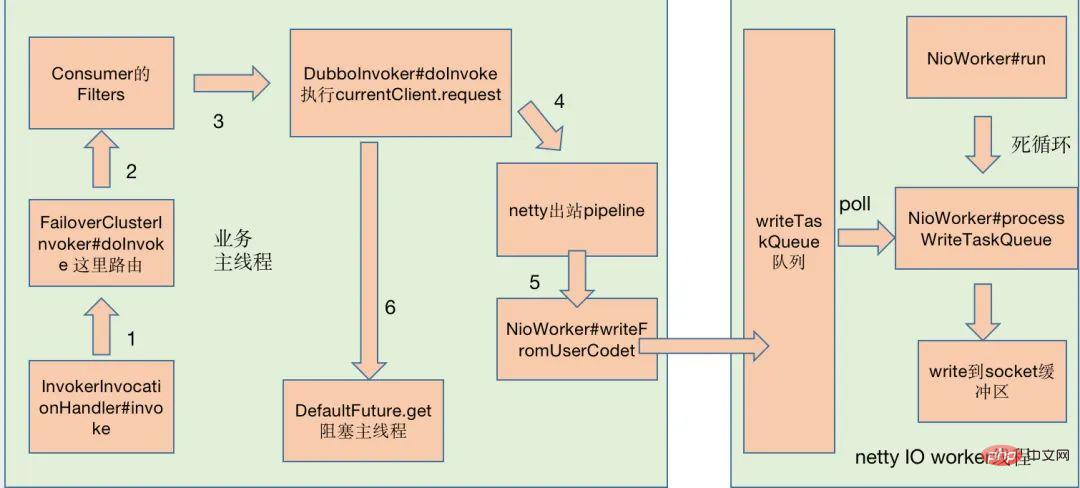

That day, the business thread initiated a method call. In FailoverClusterInvoker#doInvoke a Provider was selected for me; I then passed through the various Consumer Filters and the Netty 3 pipeline, and finally, via the NioWorker#scheduleWriteIfNecessary method, I arrived in NioWorker's writeTaskQueue.
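The hand-off described above can be modelled in a few lines of plain Java. This is a minimal sketch, assuming a single IO worker and an in-memory list standing in for the socket send buffer; WriteQueueWorker, submitWrite and drainOnce are hypothetical names, not Netty 3's NioWorker API. What it shows is the Netty 3 split: encoding happens on the calling business thread, and the IO thread only drains bytes that are already encoded.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical model of the Netty 3 write path: the business thread encodes
// the message itself and only enqueues the bytes; the IO worker later drains
// the queue and "writes" them to the socket.
final class WriteQueueWorker {
    private final Queue<byte[]> writeTaskQueue = new ConcurrentLinkedQueue<>();
    // Stands in for the operating system's socket send buffer in this sketch.
    final List<byte[]> socketBuffer = new ArrayList<>();

    // Runs on the business thread: encoding happens here, not on the IO thread.
    void submitWrite(String message) {
        byte[] encoded = message.getBytes(StandardCharsets.UTF_8);
        writeTaskQueue.offer(encoded);
    }

    // One pass of the IO worker's loop: flush everything queued so far and
    // return how many messages were written.
    int drainOnce() {
        int written = 0;
        byte[] next;
        while ((next = writeTaskQueue.poll()) != null) {
            socketBuffer.add(next);
            written++;
        }
        return written;
    }
}
```

In a real worker, a step like drainOnce would sit inside the endless run loop next to selector processing; here it is called directly so the behaviour is easy to check.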

When I looked back at the main thread, I saw him waiting on a Condition in DefaultFuture. I didn't know what he was waiting for or how long he would have to wait.
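The main thread's waiting is the classic lock-and-Condition pattern. The sketch below is a simplified, hypothetical ResponseFuture built on that idea, not Dubbo's actual DefaultFuture source: the caller blocks in get with a timeout, and whichever thread receives the response calls complete to signal it.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical future in the style described above: a ReentrantLock plus a
// Condition that the response-handling thread signals.
final class ResponseFuture {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition done = lock.newCondition();
    private Object response; // guarded by lock

    // Called by the business thread after the request has been handed off.
    Object get(long timeoutMillis) throws InterruptedException, TimeoutException {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMillis);
        lock.lock();
        try {
            // Loop to tolerate spurious wake-ups.
            while (response == null) {
                long remaining = deadline - System.nanoTime();
                if (remaining <= 0) {
                    throw new TimeoutException("no response within " + timeoutMillis + " ms");
                }
                // Releases the lock while waiting and re-acquires it on wake-up.
                done.awaitNanos(remaining);
            }
            return response;
        } finally {
            lock.unlock();
        }
    }

    // Called by the thread that receives the response; wakes the waiting caller.
    void complete(Object value) {
        lock.lock();
        try {
            response = value;
            done.signal();
        } finally {
            lock.unlock();
        }
    }
}
```

If no response arrives before the deadline, get throws TimeoutException, which is the Consumer-side timeout discussed in the troubleshooting notes.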

I queued in writeTaskQueue for a while and watched the Netty 3 IO worker thread execute its run method over and over. Everyone called this the infinite loop.

In the end, I was lucky: NioWorker#processWriteTaskQueue picked me, and I was written into the operating system's socket buffer. I waited there, and since I had plenty of time, I reflected on the day. So far my trip had taken me through two tour groups, the main thread and the Netty 3 IO worker thread. Both served me well and very efficiently.

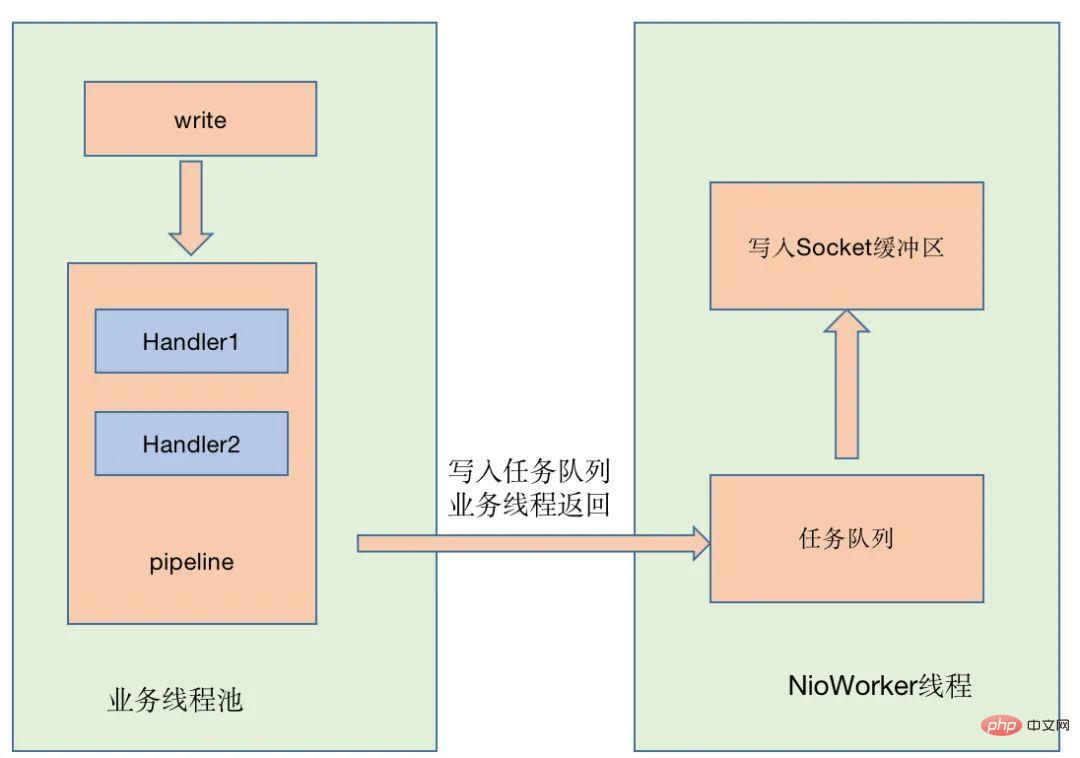

I recorded what I saw today in a simple picture, leaving out the unimportant parts.

Once in the operating system's socket buffer, I went through lots of magical things.

In a place called the transport layer, I was given a destination port number and a source port number.

In a place called the network layer, I was given a destination IP and a source IP, and the destination IP was ANDed with the subnet mask to determine the IP of the "next hop".

In a place called the data link layer, the ARP protocol resolved the MAC address of the "next hop", and I was given that destination MAC address together with a source MAC address.
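The network-layer decision above can be sketched as follows, assuming a host with a single subnet and one default gateway, both supplied by the caller (a real routing table can hold many routes). NextHop is a hypothetical helper: it ANDs the source and destination addresses with the mask; if the network parts match, the destination itself is the next hop, otherwise the packet goes to the gateway. That next-hop IP is what ARP then resolves to a MAC address.

```java
// Hypothetical next-hop resolver for a host with one subnet and one default
// gateway. Addresses are IPv4 dotted-quad strings.
final class NextHop {
    // Packs a dotted-quad address into a 32-bit int (may be negative; only
    // the bit pattern matters for the AND below).
    static int toInt(String dottedQuad) {
        String[] parts = dottedQuad.split("\\.");
        if (parts.length != 4) throw new IllegalArgumentException(dottedQuad);
        int value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) throw new IllegalArgumentException(dottedQuad);
            value = (value << 8) | octet;
        }
        return value;
    }

    // Same network part -> deliver directly; otherwise -> send via the gateway.
    static String resolve(String sourceIp, String mask, String destIp, String gateway) {
        int m = toInt(mask);
        boolean sameSubnet = (toInt(sourceIp) & m) == (toInt(destIp) & m);
        return sameSubnet ? destIp : gateway;
    }
}
```

For example, with source 192.168.1.10 and mask 255.255.255.0, destination 192.168.1.20 is reached directly, while 10.0.0.5 is sent to the gateway.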

The most interesting part was riding the cable cars. Every time we changed cable cars, our destination and source MAC addresses had to be rewritten. Later I asked my fellow data packets about it and learned that this mode is called "next hop": we jump from one hop to the next. There were lots of data packets along the way. The big ones rode a cable car on their own, while several small ones were squeezed into a single car. Worse still, a packet that was too big had to be split across several cable cars (although this was no problem for us data packets). This is called unpacking and sticking. Along the way we passed through switches and routers, which were great fun to play in.
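The "cable car" rule, with new MAC addresses on every link while the IP addresses stay fixed end to end, can be illustrated with a toy model. Frame and HopDemo are hypothetical classes, and the MAC strings are made-up labels rather than real addresses.

```java
import java.util.List;

// Toy model of forwarding: each hop rewrites the link-layer (MAC) addresses,
// while the network-layer (IP) addresses are carried unchanged end to end.
final class Frame {
    final String srcMac;
    final String dstMac;
    final String srcIp;
    final String dstIp;

    Frame(String srcMac, String dstMac, String srcIp, String dstIp) {
        this.srcMac = srcMac;
        this.dstMac = dstMac;
        this.srcIp = srcIp;
        this.dstIp = dstIp;
    }

    // What a router does when forwarding onto the next link: its outgoing
    // interface becomes the source MAC, the next hop's MAC the destination.
    Frame forward(String outInterfaceMac, String nextHopMac) {
        return new Frame(outInterfaceMac, nextHopMac, srcIp, dstIp);
    }
}

final class HopDemo {
    // Each element of hops is {outgoing interface MAC, next-hop MAC} for one router.
    static Frame travel(Frame first, List<String[]> hops) {
        Frame current = first;
        for (String[] hop : hops) {
            current = current.forward(hop[0], hop[1]);
        }
        return current;
    }
}
```

After any number of hops, only the MAC pair reflects the last link; the source and destination IPs are the ones the Consumer originally set.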

Of course, there were unpleasant moments too, namely congestion: when the cable cars at the destination were full and nobody had time to take us away, all we could do was wait.

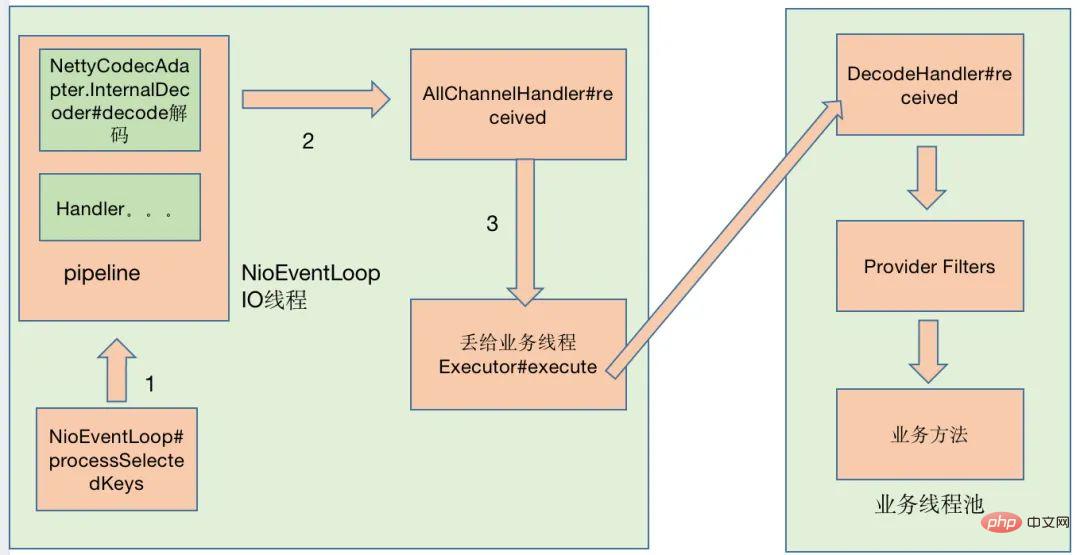

After a long time, I reached my destination, boarded a speedboat called "Zero Copy", and quickly arrived at Netty 4, which was indeed magnificent. After passing through NioEventLoop#processSelectedKeys and then the various inbound handlers in the pipeline, I arrived at the thread pool of AllChannelHandler. Of course, I had many routes to choose from, but I picked one at random; it would take me through decoding and a series of Filters before I reached my final destination, the "business method". The NettyCodecAdapter#InternalDecoder decoder is very powerful: it can handle unpacking and sticking.
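What "handling unpacking and sticking" means can be sketched as a small accumulator: keep the leftover bytes from previous reads, and only pass a message on once its header and full body have arrived. This is a simplified, hypothetical FrameAccumulator, not Dubbo's NettyCodecAdapter. It assumes the Dubbo protocol's 16-byte header, whose first two bytes are the magic number 0xdabb and whose last four bytes (offset 12) hold the body length, and it ignores the flag, status and request-id fields.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical length-field decoder: buffers partial reads ("unpacking") and
// splits reads that contain several messages ("sticking").
final class FrameAccumulator {
    static final int HEADER_LENGTH = 16;
    static final short MAGIC = (short) 0xdabb;

    private byte[] pending = new byte[0];

    // Feed one chunk as returned by a socket read; returns every complete body.
    List<byte[]> onRead(byte[] chunk) {
        byte[] merged = Arrays.copyOf(pending, pending.length + chunk.length);
        System.arraycopy(chunk, 0, merged, pending.length, chunk.length);

        List<byte[]> bodies = new ArrayList<>();
        ByteBuffer buf = ByteBuffer.wrap(merged); // big-endian by default
        while (buf.remaining() >= HEADER_LENGTH) {
            int start = buf.position();
            if (buf.getShort(start) != MAGIC) {
                throw new IllegalStateException("bad magic at offset " + start);
            }
            int bodyLength = buf.getInt(start + 12);
            if (bodyLength < 0) {
                throw new IllegalStateException("negative body length");
            }
            if (buf.remaining() < HEADER_LENGTH + bodyLength) {
                break; // only part of a frame so far: wait for the next read
            }
            byte[] body = new byte[bodyLength];
            buf.position(start + HEADER_LENGTH);
            buf.get(body);
            bodies.add(body);
        }
        // Keep whatever belongs to an incomplete frame for the next call.
        pending = Arrays.copyOfRange(merged, buf.position(), merged.length);
        return bodies;
    }

    // Test helper: builds a frame with the header layout above (other fields zero).
    static byte[] frame(byte[] body) {
        ByteBuffer buf = ByteBuffer.allocate(HEADER_LENGTH + body.length);
        buf.putShort(MAGIC);
        buf.position(12);
        buf.putInt(body.length);
        buf.put(body);
        return buf.array();
    }
}
```

Real decoders work on Netty's ByteBuf and avoid the array copies used here; the copies just keep the sketch short.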

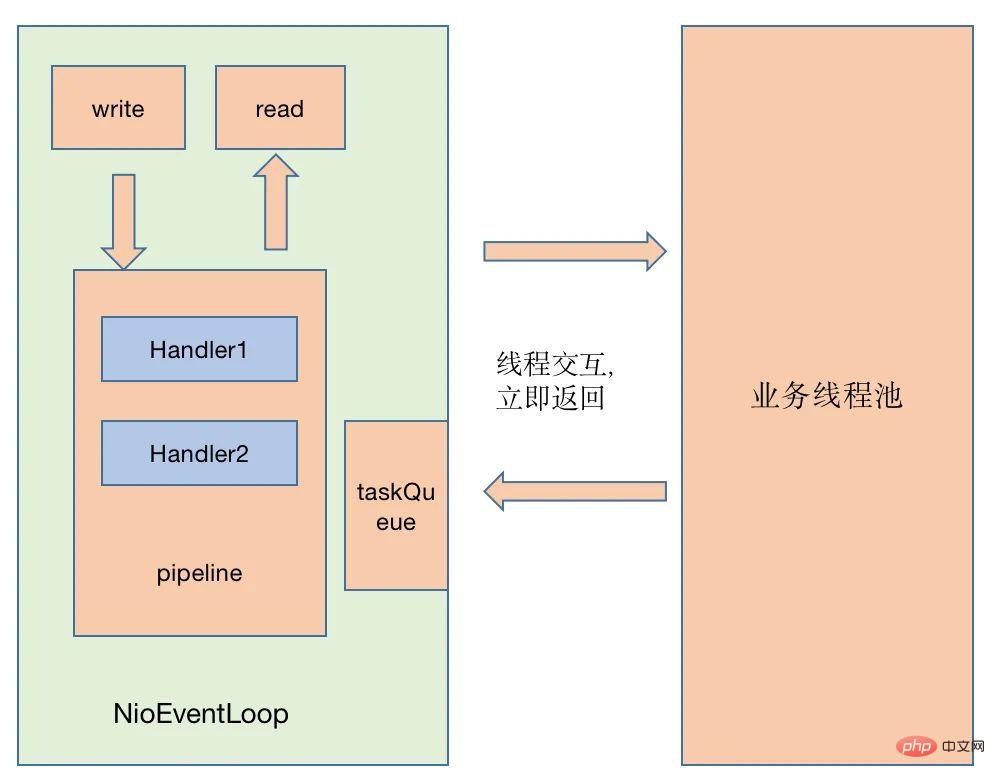

I would be staying in AllChannelHandler's thread pool for a while, so I drew another picture to record the journey.

With that, my trip came to an end; the story will be continued by a new data packet.

I am a data packet, born in a small town called Dubbo 2.7.3 Provider. My mission is to awaken the thread I am destined for. Now I am setting off on a journey to a place called Dubbo 2.5.3 Consumer.

This is what happened after the Provider's business method had executed:

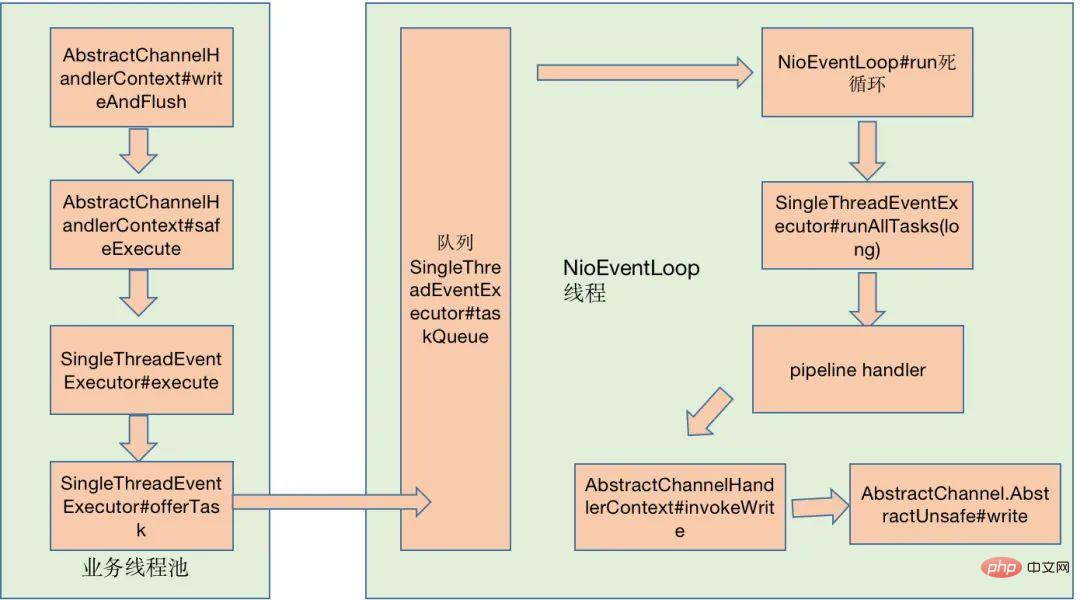

io.netty.channel.AbstractChannelHandlerContext#writeAndFlush executed addTask, and I waited for the NioEventLoop to get to me. While waiting, I recorded the steps I had taken.

The NioEventLoop then executed AbstractChannelHandlerContext.WriteAndFlushTask and directed us into the socket buffer, never tiring of the work. It seemed to me that it had the stubborn spirit of a craftsman in pursuit of perfection.

Through io.netty.channel.AbstractChannel.AbstractUnsafe#write, I reached the operating system's socket buffer. At the operating system level, like most data packets, I took cable cars to reach my destination.
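The addTask step reflects Netty 4's rule that the outbound pipeline always runs on the channel's event loop: a write issued from any other thread is wrapped in a task and queued. The sketch below models that branch with a single-thread executor. EventLoopModel, its inEventLoop and its writeAndFlush are hypothetical stand-ins, not Netty's classes; in Netty 4, AbstractChannelHandlerContext makes a comparable check with EventExecutor#inEventLoop().

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical model of Netty 4's write hand-off: outbound work is always
// executed on the single event-loop thread.
final class EventLoopModel {
    private volatile Thread loopThread;
    private final ExecutorService loop = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "event-loop");
        loopThread = t; // remember which thread is "the" event loop
        return t;
    });
    // Records which thread ran the outbound pipeline for each message.
    final List<String> pipelineThreads = Collections.synchronizedList(new ArrayList<>());

    boolean inEventLoop() {
        return Thread.currentThread() == loopThread;
    }

    void writeAndFlush(String msg) {
        if (inEventLoop()) {
            runOutboundPipeline(msg);
        } else {
            // Called from a business thread: wrap the write in a task and queue it.
            loop.execute(() -> runOutboundPipeline(msg));
        }
    }

    private void runOutboundPipeline(String msg) {
        // Encoding and the socket write would happen here.
        pipelineThreads.add(Thread.currentThread().getName());
    }

    void shutdown() throws InterruptedException {
        loop.shutdown();
        loop.awaitTermination(5, TimeUnit.SECONDS);
    }
}
```

Calling writeAndFlush from a business thread therefore never runs encoding on that thread; the work always lands on "event-loop".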

On arriving at the Dubbo 2.5.3 Consumer side, I waited for a while in the operating system's socket buffer. Then I, too, took the "Zero Copy" speedboat and reached my real destination, the Dubbo 2.5.3 Consumer. Here I found that NioWorker#run is an infinite loop: it executed NioWorker#processSelectedKeys, I was read out by the NioWorker#read method, and I arrived at the thread pool of AllChannelHandler, which is a business thread pool.
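The hand-off from the IO thread to AllChannelHandler's business pool can be sketched like this. AllDispatchModel is a hypothetical class that only mirrors the idea: the IO thread reads and decodes, then submits the received message to an ExecutorService instead of running the handler itself, so a slow handler does not stall the IO loop.

```java
import java.util.concurrent.ExecutorService;
import java.util.function.Consumer;

// Hypothetical model of "all"-style dispatching: received messages are run
// on a business thread pool, not on the IO thread that decoded them.
final class AllDispatchModel {
    private final ExecutorService businessPool;
    private final Consumer<String> handler;

    AllDispatchModel(ExecutorService businessPool, Consumer<String> handler) {
        this.businessPool = businessPool;
        this.handler = handler;
    }

    // Called on the IO thread once a complete message has been decoded;
    // returns immediately so the IO loop can go back to its selector.
    void received(String message) {
        businessPool.execute(() -> handler.accept(message));
    }
}
```

The cost of this design is one extra thread switch per message; the benefit is that business code can never block the IO thread.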

I waited here for a while until my task was scheduled. Then I saw com.alibaba.dubbo.remoting.exchange.support.DefaultFuture#doReceived execute, and with it the signal on the Condition. In the distance, I saw a blocked thread wake up. I realized that my arrival had awakened a sleeping thread, and I think that is the meaning of my life.
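How does a response know which sleeping thread to wake? Each request carries an ID, and the receiving side uses it to look up the matching future. Dubbo's DefaultFuture keeps a static map from request ID to future for this purpose; the sketch below is a hypothetical PendingRequests class illustrating the same idea with CompletableFuture, rather than the lock and Condition the 2.5.3 code uses.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical registry that matches responses to waiting callers by request ID.
final class PendingRequests {
    private final AtomicLong nextId = new AtomicLong();
    private final Map<Long, CompletableFuture<String>> futures = new ConcurrentHashMap<>();

    // Business thread: register before sending, so a fast response always finds its future.
    long register(CompletableFuture<String> future) {
        long id = nextId.incrementAndGet();
        futures.put(id, future);
        return id;
    }

    // Receiving thread: remove the entry and complete it, waking the waiter.
    // Returns false for an unknown or already-removed ID.
    boolean received(long id, String response) {
        CompletableFuture<String> future = futures.remove(id);
        if (future == null) {
            return false;
        }
        return future.complete(response);
    }

    int pendingCount() {
        return futures.size();
    }
}
```

Removing the entry on receipt means a duplicate or late response for the same ID is simply ignored.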

At this point, my mission has been completed and this journey is over.

Based on the two data packets' own accounts, let's summarize the threading models of Netty 3 and Netty 4.

Note: the Netty 3 read process is not shown separately here because it is the same as in Netty 4: the pipeline is executed by the IO thread.

Summary: the Netty 3 and Netty 4 threading models differ in the write process. In Netty 3, the pipeline for writes is executed by the business thread, whereas in Netty 4 the pipeline is always executed by the IO thread, for reads and writes alike.

In Netty 4, the Handler chain in the ChannelPipeline is scheduled serially by the I/O thread for both read and write operations, whereas in Netty 3 write operations are handled by the business thread. Netty 4's approach reduces the cost of context switching between threads, but in Netty 3 several business threads can execute the Handler chain concurrently. If some Handler operations are time-consuming, they will drag down Netty 4's efficiency; in that case, consider performing those operations on the business thread beforehand instead of in the Handler. Because business threads run concurrently, this can also improve efficiency.
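The advice in the last two sentences can be sketched as follows: do the expensive step, here a stand-in for serialization, on the calling business thread, and hand only the finished bytes to the single IO thread. OffloadModel and expensiveEncode are hypothetical names, and the IO thread's "write" is simulated by returning the byte count.

```java
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical model: one serial "IO thread" (like a Netty 4 event loop) and
// business threads that prepare data before handing it over.
final class OffloadModel {
    final ExecutorService ioThread =
            Executors.newSingleThreadExecutor(r -> new Thread(r, "io"));

    // Stand-in for a costly step such as serialization.
    static byte[] expensiveEncode(String payload) {
        return payload.getBytes(StandardCharsets.UTF_8);
    }

    // The costly step runs on the calling business thread, so many callers can
    // do it in parallel; the IO thread only receives finished bytes. The
    // "write" is simulated by returning the number of bytes handed over.
    CompletableFuture<Integer> send(String payload) {
        byte[] ready = expensiveEncode(payload);
        return CompletableFuture.supplyAsync(() -> ready.length, ioThread);
    }
}
```

The IO thread stays serial either way; the gain comes from moving the expensive part to threads that can run concurrently.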

I have run into some typical tricky problems. For example, the Provider's didi.log shows a normal request time, yet the Consumer side has timed out. In that case, there are the following troubleshooting directions. Note that the Filter that writes didi.log actually sits at a very inner level, so it often fails to reflect the actual execution of the business method.

Besides the Provider's business method itself, serialization may also be time-consuming, so you can use Arthas to monitor the outermost method, org.apache.dubbo.remoting.transport.DecodeHandler#received, to rule out high time consumption on the business-method side.

Check whether writing data packets on the Provider is time-consuming by monitoring the io.netty.channel.AbstractChannelHandlerContext#invokeWrite method.

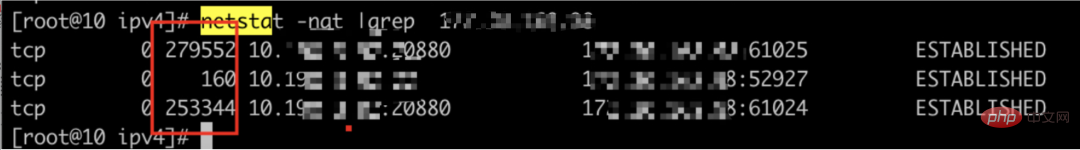

You can also inspect the current TCP sockets with netstat, looking at Recv-Q and Send-Q. Recv-Q is data that has reached the receive buffer but has not yet been read by the application code; Send-Q is data that has reached the send buffer but has not yet been acknowledged (ACKed) by the other side. Neither kind of data normally accumulates; if it does, there may be a problem.
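If you want to scan netstat output for a Dubbo port programmatically, a small filter is enough. This is a hypothetical helper that assumes Linux net-tools "netstat -ant" columns (Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State); other platforms lay the columns out differently, and 20880 in the example is simply Dubbo's default protocol port.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical filter: reports TCP sockets on a given port whose Recv-Q or
// Send-Q is non-zero. Assumes Linux "netstat -ant" column order.
final class SocketQueueCheck {
    static List<String> backlogged(List<String> netstatLines, String port) {
        List<String> suspicious = new ArrayList<>();
        for (String line : netstatLines) {
            String[] cols = line.trim().split("\\s+");
            if (cols.length < 6 || !cols[0].startsWith("tcp")) {
                continue; // header, banner or non-TCP line
            }
            String local = cols[3];
            String foreign = cols[4];
            if (!local.endsWith(":" + port) && !foreign.endsWith(":" + port)) {
                continue; // not a connection on the port we care about
            }
            long recvQ = Long.parseLong(cols[1]);
            long sendQ = Long.parseLong(cols[2]);
            if (recvQ > 0 || sendQ > 0) {
                suspicious.add(local + " -> " + foreign + " recvQ=" + recvQ + " sendQ=" + sendQ);
            }
        }
        return suspicious;
    }
}
```

A single non-zero sample can be transient, so it is worth sampling a few times before concluding that data is really piling up.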

Check whether the Consumer's NioWorker#processSelectedKeys method (Dubbo 2.5.3) is time-consuming.

Keep going until you have checked every detail of the entire link... and the problem can definitely be solved.

In describing the whole interaction, I have omitted some details of the thread call stacks and the source code, such as serialization and deserialization, how Dubbo reads a complete data packet, how the Filters are sorted and dispatched before the business method executes, and how Netty's Reactor pattern is implemented. These are all very interesting questions...

The above is the detailed content of "5 Dubbo interview questions with high gold content!". For more information, please follow other related articles on the PHP Chinese website!
