Baidu CIO Li Ying: Large models are a major opportunity in the enterprise office field, and AI-native reconstruction will change how intelligent work is done

On August 16, 2023, the WAVE SUMMIT Deep Learning Developer Conference was held in China. The event was organized by the National Engineering Research Center for Deep Learning Technology and Applications and co-hosted by Baidu PaddlePaddle (Feipiao) and the Wenxin (ERNIE) large model. At the conference, Baidu presented the latest progress and ecosystem achievements of a series of technologies and products, including the Wenxin large model, the PaddlePaddle platform, and AI-native applications such as Ruliu. Li Ying, Baidu Group Vice President and Chief Information Officer, delivered a keynote speech. She said that the current fourth technological revolution, with large AI models as its core technology, will fundamentally transform productivity, provide strong support for every industry, and bring unprecedented development opportunities to the enterprise office field.

Based on AI-native thinking, Li Ying announced an upgrade to Baidu's intelligent-work knowledge management concept, "Innovation Pipeline = AI Pound". She noted that large models compress humanity's understanding of the world, from which intelligence emerges, and that the most important manifestation of this intelligence is its command of knowledge. Large models are therefore a major opportunity in the enterprise office field and can bring about more profound changes.

Li Ying pointed out that large language models, represented by ERNIE Bot (Wenxin Yiyan), already have capabilities in understanding, generation, logic, and memory, and further capabilities have emerged from them, bringing major changes to intelligent work. This change has three main characteristics. First, interaction will be based mainly on natural language. Second, needs can be met end to end. Finally, work models and processes will be reshaped, ultimately establishing a new, AI-native working paradigm.

Ruliu "Super Assistant" officially released, with live demonstrations of AI-native capabilities in four major scenarios

At the conference, Li Ying released an important product, the Ruliu "Super Assistant". Built on the capabilities of ERNIE Bot (Wenxin Yiyan), it is a full upgrade of the original Ruliu Super Assistant. Unlike a conventional assistant, it is more human-like and proactive.

The goal of the Ruliu Super Assistant is to give everyone an assistant that understands them, is professional, and accompanies them at work in real time. Li Ying focused on the Super Assistant's functions in four scenarios: mobile task execution, intelligent document processing, conversational business intelligence (CBI), and intelligent communication. She gave more than 10 demonstrations in total.

As the entry point to AI capabilities, the Super Assistant can be invoked and respond anytime and anywhere, accompanying the user at all times. On mobile devices, Li Ying demonstrated voice features such as one-click appointments, one-click leave requests, and one-click business travel. For example, a user can say to the Super Assistant, "I will go to the Shanghai R&D Center for a meeting the morning after tomorrow and return to Beijing the same day. Please help me plan my itinerary." The Super Assistant quickly calls functions across different modules and platforms. It automatically submits the travel request, books flights and hotels, and recommends suitable places to stay based on the user's habits. It can also complete corporate expense settlement automatically, so users do not need to go through the reimbursement process themselves, just as with a real assistant. Operations that used to take hours are compressed to minutes or even seconds.

In intelligent document processing, the Super Assistant can help users find documents, learn new knowledge, and read literature. Users only need to describe the context in which a document appeared, and the Super Assistant can accurately locate the target document. For English papers, it works across platforms to generate a Chinese abstract with one click, and it also offers translation and interpretation. The Super Assistant not only greatly improves the efficiency of knowledge acquisition but also substantially expands what employees are able to do.

The Super Assistant also provides conversational business intelligence built on large model technology: Baidu Conversational BI (CBI). In the Baidu CBI scenario, Li Ying demonstrated querying sales data, analyzing team data, and querying business opportunities. Traditional business intelligence works differently. With the Super Assistant, users ask questions in natural language, and the system quickly computes and returns structured business analysis results. CBI can even offer suggestions.

Communication is one of the most common scenarios at work. When users face a large volume of information, the Super Assistant can help cut noise and reduce their burden by extracting key information so they can focus on what matters. It offers several functions for communication. For example, "Super Assistant IM Intelligent Summary" generates a summary of multiple selected conversations and forwards it to a recipient with one click. The recipient can quickly read a concise, accurate summary and then make decisions and respond quickly. According to statistics, this feature improves message-reading efficiency by 3x. For meetings, AI insights and AI meeting minutes improve the efficiency of reading and using meeting content by 3.5x.

Comate series significantly upgraded, and Comate X opens to enterprise developers for the first time

The Baidu Comate series has received a new upgrade for intelligent development scenarios. Baidu released the intelligent programming assistant Comate X and the Comate Stack tool suite.

In addition to explaining and generating code, Comate can generate inline comments, unit tests, documentation, command-line commands, and interface code. The assistant currently supports more than 30 programming languages and more than ten integrated development environments (IDEs).

On stage, Li Ying worked with a Baidu engineer to give Comate X a task in natural language: "write an activation code program." Within a few minutes, the entire process of code generation, code explanation, code commenting, and unit testing was complete. More importantly, the engineer did not write a single line of code during the whole process. The programming assistant did all of it.

Li Ying officially announced that Comate X is now open to enterprise developers, making it China's first commercially available full-scenario intelligent programming assistant. The Comate series is already widely used inside Baidu and currently serves, and is integrated with, more than 100 enterprise partners. It has entered a mature commercialization stage.

Comate X is an R&D toolchain rebuilt on AI-native principles, and this toolchain also serves the development of AI-native applications. The R&D process itself is being rebuilt, which requires a new architecture and tool suite suited to AI applications. To address this, Li Ying released the Comate Stack tool suite, which supports the entire AI-native development process. With Baidu Comate Stack, a developer can build and launch a Super Assistant plug-in in just two steps. The Comate Stack tool suite can significantly lower the barrier to developing AI-native applications and improve the efficiency of AI-native transformation.

Baidu Intelligent Work Platform already serves dozens of major industries and fields, including finance, energy, artificial intelligence, manufacturing, and communications. The Comate series of intelligent products in particular has been widely adopted across these industries.

The enterprise office field is undergoing a major change, accelerated by artificial intelligence represented by large language models. This in turn is speeding up the transformation and upgrading of every industry. In this process, technological innovation and real-world deployment form a virtuous cycle. Capabilities such as understanding, generation, logic, and memory keep improving, while the breadth and depth of industrial applications keep expanding. Large language models offer a glimmer of hope for artificial general intelligence.

The above is the detailed content of Baidu CIO Li Ying: Large models are an important opportunity in the enterprise office field, and the native reconstruction of AI will change the way intelligent work is done.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

On May 30, Tencent announced a comprehensive upgrade of its Hunyuan model. The App "Tencent Yuanbao" based on the Hunyuan model was officially launched and can be downloaded from Apple and Android app stores. Compared with the Hunyuan applet version in the previous testing stage, Tencent Yuanbao provides core capabilities such as AI search, AI summary, and AI writing for work efficiency scenarios; for daily life scenarios, Yuanbao's gameplay is also richer and provides multiple features. AI application, and new gameplay methods such as creating personal agents are added. "Tencent does not strive to be the first to make large models." Liu Yuhong, vice president of Tencent Cloud and head of Tencent Hunyuan large model, said: "In the past year, we continued to promote the capabilities of Tencent Hunyuan large model. In the rich and massive Polish technology in business scenarios while gaining insights into users’ real needs

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

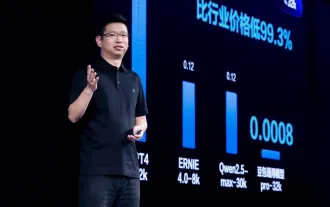

Tan Dai, President of Volcano Engine, said that companies that want to implement large models well face three key challenges: model effectiveness, inference costs, and implementation difficulty: they must have good basic large models as support to solve complex problems, and they must also have low-cost inference. Services allow large models to be widely used, and more tools, platforms and applications are needed to help companies implement scenarios. ——Tan Dai, President of Huoshan Engine 01. The large bean bag model makes its debut and is heavily used. Polishing the model effect is the most critical challenge for the implementation of AI. Tan Dai pointed out that only through extensive use can a good model be polished. Currently, the Doubao model processes 120 billion tokens of text and generates 30 million images every day. In order to help enterprises implement large-scale model scenarios, the beanbao large-scale model independently developed by ByteDance will be launched through the volcano

Using Shengteng AI technology, the Qinling·Qinchuan transportation model helps Xi'an build a smart transportation innovation center

Oct 15, 2023 am 08:17 AM

Using Shengteng AI technology, the Qinling·Qinchuan transportation model helps Xi'an build a smart transportation innovation center

Oct 15, 2023 am 08:17 AM

"High complexity, high fragmentation, and cross-domain" have always been the primary pain points on the road to digital and intelligent upgrading of the transportation industry. Recently, the "Qinling·Qinchuan Traffic Model" with a parameter scale of 100 billion, jointly built by China Vision, Xi'an Yanta District Government, and Xi'an Future Artificial Intelligence Computing Center, is oriented to the field of smart transportation and provides services to Xi'an and its surrounding areas. The region will create a fulcrum for smart transportation innovation. The "Qinling·Qinchuan Traffic Model" combines Xi'an's massive local traffic ecological data in open scenarios, the original advanced algorithm self-developed by China Science Vision, and the powerful computing power of Shengteng AI of Xi'an Future Artificial Intelligence Computing Center to provide road network monitoring, Smart transportation scenarios such as emergency command, maintenance management, and public travel bring about digital and intelligent changes. Traffic management has different characteristics in different cities, and the traffic on different roads

Uncovering the NVIDIA large model inference framework: TensorRT-LLM

Feb 01, 2024 pm 05:24 PM

Uncovering the NVIDIA large model inference framework: TensorRT-LLM

Feb 01, 2024 pm 05:24 PM

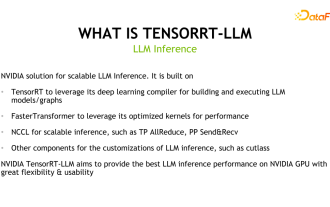

1. Product positioning of TensorRT-LLM TensorRT-LLM is a scalable inference solution developed by NVIDIA for large language models (LLM). It builds, compiles and executes calculation graphs based on the TensorRT deep learning compilation framework, and draws on the efficient Kernels implementation in FastTransformer. In addition, it utilizes NCCL for communication between devices. Developers can customize operators to meet specific needs based on technology development and demand differences, such as developing customized GEMM based on cutlass. TensorRT-LLM is NVIDIA's official inference solution, committed to providing high performance and continuously improving its practicality. TensorRT-LL

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

According to news on April 4, the Cyberspace Administration of China recently released a list of registered large models, and China Mobile’s “Jiutian Natural Language Interaction Large Model” was included in it, marking that China Mobile’s Jiutian AI large model can officially provide generative artificial intelligence services to the outside world. . China Mobile stated that this is the first large-scale model developed by a central enterprise to have passed both the national "Generative Artificial Intelligence Service Registration" and the "Domestic Deep Synthetic Service Algorithm Registration" dual registrations. According to reports, Jiutian’s natural language interaction large model has the characteristics of enhanced industry capabilities, security and credibility, and supports full-stack localization. It has formed various parameter versions such as 9 billion, 13.9 billion, 57 billion, and 100 billion, and can be flexibly deployed in Cloud, edge and end are different situations

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

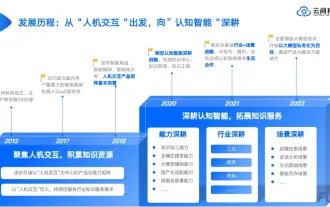

1. Background Introduction First, let’s introduce the development history of Yunwen Technology. Yunwen Technology Company...2023 is the period when large models are prevalent. Many companies believe that the importance of graphs has been greatly reduced after large models, and the preset information systems studied previously are no longer important. However, with the promotion of RAG and the prevalence of data governance, we have found that more efficient data governance and high-quality data are important prerequisites for improving the effectiveness of privatized large models. Therefore, more and more companies are beginning to pay attention to knowledge construction related content. This also promotes the construction and processing of knowledge to a higher level, where there are many techniques and methods that can be explored. It can be seen that the emergence of a new technology does not necessarily defeat all old technologies. It is also possible that the new technology and the old technology will be integrated with each other.

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

If the test questions are too simple, both top students and poor students can get 90 points, and the gap cannot be widened... With the release of stronger models such as Claude3, Llama3 and even GPT-5 later, the industry is in urgent need of a more difficult and differentiated model Benchmarks. LMSYS, the organization behind the large model arena, launched the next generation benchmark, Arena-Hard, which attracted widespread attention. There is also the latest reference for the strength of the two fine-tuned versions of Llama3 instructions. Compared with MTBench, which had similar scores before, the Arena-Hard discrimination increased from 22.6% to 87.4%, which is stronger and weaker at a glance. Arena-Hard is built using real-time human data from the arena and has a consistency rate of 89.1% with human preferences.

Xiaomi Byte joins forces! A large model of Xiao Ai's access to Doubao: already installed on mobile phones and SU7

Jun 13, 2024 pm 05:11 PM

Xiaomi Byte joins forces! A large model of Xiao Ai's access to Doubao: already installed on mobile phones and SU7

Jun 13, 2024 pm 05:11 PM

According to news on June 13, according to Byte's "Volcano Engine" public account, Xiaomi's artificial intelligence assistant "Xiao Ai" has reached a cooperation with Volcano Engine. The two parties will achieve a more intelligent AI interactive experience based on the beanbao large model. It is reported that the large-scale beanbao model created by ByteDance can efficiently process up to 120 billion text tokens and generate 30 million pieces of content every day. Xiaomi used the beanbao large model to improve the learning and reasoning capabilities of its own model and create a new "Xiao Ai Classmate", which not only more accurately grasps user needs, but also provides faster response speed and more comprehensive content services. For example, when a user asks about a complex scientific concept, &ldq