In-depth analysis: key components and functions in the AI LLM framework

This article explores the high-level architecture of an artificial intelligence framework, analyzing its internal components and their roles in the overall system. The framework aims to make it easier to combine traditional software with large language models (LLMs).

Its core purpose is to give developers a set of tools for integrating AI smoothly into the software their company already uses. This approach yields a software platform that can run many AI applications and intelligent agents at the same time, enabling more advanced and complex solutions.

1. Example applications of the AI framework

To better understand the framework's capabilities, here are some example applications that could be built with it:

- AI Sales Assistant: A tool that automatically searches for potential customers, analyzes their business needs, and drafts proposals for the sales team. Such an assistant would find effective ways to contact target customers and take the first step in the sales process.

- AI Real Estate Research Assistant: This tool continuously monitors new listings on the real estate market and selects those that meet specified criteria. It can also plan communication strategies, gather more information about a given property, and assist users at every stage of buying a home.

- AI Zhihu Discussion Summary Application: This application should be able to analyze discussions on Zhihu and extract conclusions, tasks, and the next steps to be taken.

2. AI framework modules

The AI framework should provide developers with a set of different modules, including contract definitions, interfaces, and implementations of common abstractions.

This solution should be a solid foundation on which you can build your own solutions, using proven patterns, adding your own implementations of individual modules, or using modules prepared by the community.
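To make the idea of contracts and interchangeable implementations concrete, here is a minimal Python sketch. The names `LanguageModel`, `EchoModel`, `UppercaseModel`, `MODELS` and `summarize` are hypothetical and not taken from any specific framework; the two toy models stand in for real LLM backends, which would call a provider's API instead.

```python
from typing import Dict, Protocol


class LanguageModel(Protocol):
    """Contract for the model module: any LLM backend must implement complete()."""

    def complete(self, prompt: str) -> str:
        ...


class EchoModel:
    """Toy backend for tests; a real backend would call an LLM provider's API."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """A second, interchangeable implementation of the same contract."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


# Hypothetical registry: implementations are selected by name, e.g. from configuration.
MODELS: Dict[str, LanguageModel] = {"echo": EchoModel(), "upper": UppercaseModel()}


def summarize(model: LanguageModel, text: str) -> str:
    # Application code depends only on the contract, not on a concrete backend.
    return model.complete(f"Summarize: {text}")
```

Because `summarize` depends only on the `LanguageModel` protocol, a community-provided backend could replace `EchoModel` without any change to the application code.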

- Prompts and Chaining Module: This module is responsible for building prompts, i.e. programs written for language models, and chains of prompt calls that are executed one after another. It should make it possible to implement the various techniques used with language models (LMs) and large language models (LLMs). It should also be able to bind prompts to models and create prompt groups that provide a single piece of functionality across multiple LLMs.

- Model Module: This module is responsible for handling LLMs and connecting them to the software, making them available to the rest of the system.

- Communication Module: This module is responsible for handling existing and adding new communication channels with users, whether as chats in messaging applications or as APIs and webhooks for integration with other systems.

- Tools Module: This module is responsible for adding tools that AI applications can use, such as reading the content of a website from a link, reading a PDF file, searching for information online, or sending an email.

- Memory Module: This module should be responsible for memory management and allow additional memory implementations to be added to AI applications to store the current state, data, and tasks in progress.

- Knowledge Base Module: This module should be responsible for managing access rights and allow new sources of organizational knowledge to be added, such as information about processes, documentation, guidelines, and all other electronically recorded information in the organization.

- Routing Module: This module should be responsible for routing incoming messages from the communication module to the appropriate AI application. Its role is to determine the user's intent and launch the correct application. If an application was started earlier and has not finished its work, the router should resume it and pass on the data from the communication module.

- AI Application Module: This module should allow the addition of specialized AI applications focused on specific tasks, such as fully or partially automating processes. An example is a Slack or Teams chat summary application. Such an application might combine one or more chained prompts with tools, memory, and information from the knowledge base.

- AI Agent Module: This module should contain more advanced versions of applications that can autonomously converse with the LLM and carry out assigned tasks automatically or semi-automatically.

- Accountability and Transparency Module: This module records all interactions between users and the AI system. It tracks queries, responses, timestamps, and authorship to distinguish human-generated from AI-generated content. These logs provide visibility into the autonomous actions taken by the AI and the messages exchanged between the model and the software.

- User Module: In addition to basic user management, this module should maintain the mapping of user accounts across the systems integrated through the different modules.

- Permission Module: This module should store user permission information and control access to resources, ensuring that users can access only the resources and applications appropriate to them.
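The Prompts and Chaining Module described at the top of this list can be sketched as follows, assuming a model is any function that maps a prompt string to a completion. `PromptTemplate`, `Chain` and `PromptGroup` are hypothetical names chosen for illustration. The sketch shows prompts executed one after another, each consuming the previous output, plus a prompt group that keeps one variant of the same prompt per model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Assumption: a model is any function that maps a prompt to a completion.
Model = Callable[[str], str]


@dataclass
class PromptTemplate:
    """A prompt is a small program for the model; {input} is filled in at run time."""
    template: str

    def render(self, text: str) -> str:
        return self.template.format(input=text)


@dataclass
class Chain:
    """Runs prompts in sequence, feeding each model output into the next prompt."""
    steps: List[PromptTemplate]
    model: Model

    def run(self, text: str) -> str:
        for step in self.steps:
            text = self.model(step.render(text))
        return text


@dataclass
class PromptGroup:
    """One piece of functionality, with a prompt variant tuned for each model."""
    variants: Dict[str, PromptTemplate]

    def for_model(self, model_name: str) -> PromptTemplate:
        return self.variants[model_name]
```

With a stub model such as `lambda p: f"<{p}>"`, a two-step chain of `"Extract decisions: {input}"` and `"Summarize: {input}"` turns `"thread"` into `"<Summarize: <Extract decisions: thread>>"`, which makes the sequential data flow easy to see.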

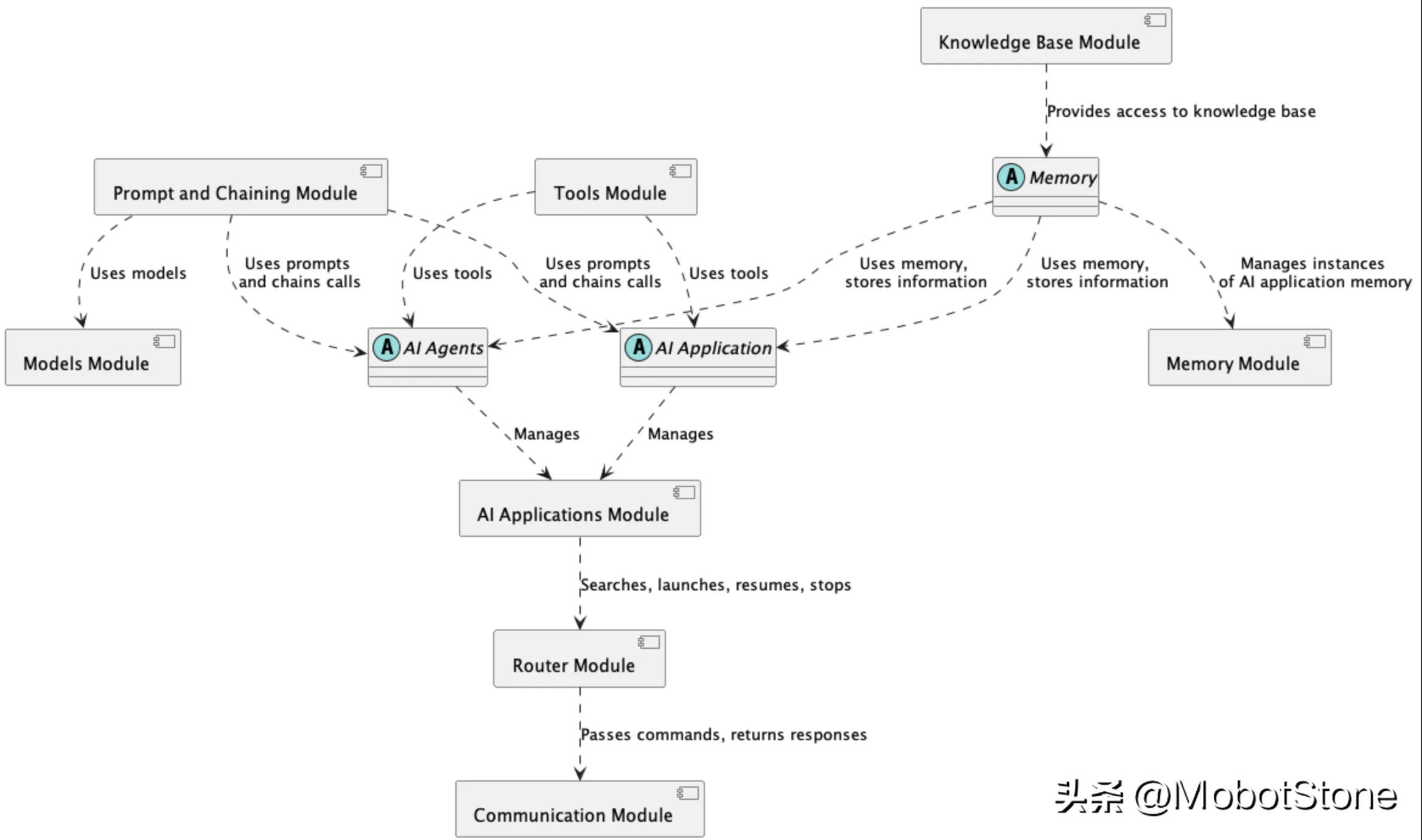
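For the Accountability and Transparency Module in the list above, a minimal sketch of an interaction log might look like this. It assumes each record needs only a conversation id, an author flag (`"human"` or `"ai"`), the content, and a UTC timestamp. `InteractionRecord` and `AuditLog` are hypothetical names, and a production system would persist records to durable storage rather than keep them in a Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Literal

Author = Literal["human", "ai"]


@dataclass(frozen=True)
class InteractionRecord:
    """One logged interaction: who produced what, in which conversation, and when."""
    conversation_id: str
    author: Author  # distinguishes human-written from AI-generated content
    content: str
    timestamp: datetime


@dataclass
class AuditLog:
    """In-memory interaction log; records are only ever appended, never edited."""
    records: List[InteractionRecord] = field(default_factory=list)

    def record(self, conversation_id: str, author: Author, content: str) -> InteractionRecord:
        entry = InteractionRecord(conversation_id, author, content, datetime.now(timezone.utc))
        self.records.append(entry)
        return entry

    def by_author(self, author: Author) -> List[InteractionRecord]:
        return [r for r in self.records if r.author == author]

    def conversation(self, conversation_id: str) -> List[InteractionRecord]:
        return [r for r in self.records if r.conversation_id == conversation_id]
```

Making each record frozen is a small design choice that supports the module's purpose: once an interaction is logged, its content and authorship cannot be silently altered in memory.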

3. AI framework component architecture

To better show how the modules of the AI framework interact, here is an overview of the component diagram:

This diagram shows the relationship between the key components of the framework:

- Prompts and chaining module: Builds prompts for AI models and chains multiple prompts together to implement more complex logic.

- Memory module: Manages memory through a memory abstraction. The knowledge base module provides access to knowledge sources.

- Tool module: Provides tools that can be used by AI applications and agents.

- Routing module: Directs queries to the appropriate AI application. Applications are managed in the AI application module.

- Communication Module: Handles communication channels like chat.
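The routing behavior described for the routing module (detect the intent, start the right application, and resume an unfinished one) can be sketched like this. Intent detection here is a simple keyword lookup, an assumption made to keep the example self-contained; a real router would more likely ask an LLM to classify the message. `Router`, `App` and `make_listing_app` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple

# Hypothetical application signature: takes a message, returns (reply, finished).
App = Callable[[str], Tuple[str, bool]]


@dataclass
class Router:
    apps: Dict[str, App]
    keywords: Dict[str, str]  # keyword -> app name; stand-in for LLM-based intent detection
    active: Dict[str, str] = field(default_factory=dict)  # user id -> unfinished app name

    def detect_intent(self, message: str) -> Optional[str]:
        lowered = message.lower()
        for keyword, app_name in self.keywords.items():
            if keyword in lowered:
                return app_name
        return None

    def handle(self, user_id: str, message: str) -> str:
        # Resume an application the user has not finished; otherwise detect the intent.
        app_name = self.active.get(user_id) or self.detect_intent(message)
        if app_name is None:
            return "Sorry, no application matches that request."
        reply, finished = self.apps[app_name](message)
        if finished:
            self.active.pop(user_id, None)
        else:
            self.active[user_id] = app_name
        return reply


def make_listing_app() -> App:
    """Toy two-step application: asks for a city, then reports the search."""
    turns = 0

    def app(message: str) -> Tuple[str, bool]:
        nonlocal turns
        turns += 1
        if turns == 1:
            return "Which city?", False
        turns = 0  # reset so the next session starts over
        return f"Searching listings in {message}", True

    return app
```

In this sketch, a reply like "Warsaw" contains no routing keyword, yet it still reaches the real estate application because the router remembers that the user's session is unfinished.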

This component architecture shows how different modules work together to make it possible to build complex AI solutions. The modular design allows functionality to be easily expanded by adding new components.
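One way to get the extensibility described above is a registry that new components plug into. The sketch below applies it to the tools module: tools are plain functions registered under a name with a decorator, so adding a tool does not require changing the code that calls tools. `ToolRegistry` and the toy `word_count` and `shout` tools are hypothetical.

```python
from typing import Callable, Dict, List

ToolFn = Callable[[str], str]


class ToolRegistry:
    """Holds tools by name so new tools plug in without changes to calling code."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolFn] = {}

    def register(self, name: str) -> Callable[[ToolFn], ToolFn]:
        """Decorator that adds a function to the registry under `name`."""
        def decorator(fn: ToolFn) -> ToolFn:
            if name in self._tools:
                raise ValueError(f"tool {name!r} is already registered")
            self._tools[name] = fn
            return fn
        return decorator

    def names(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, argument: str) -> str:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](argument)


tools = ToolRegistry()


@tools.register("word_count")
def word_count(text: str) -> str:
    # Toy tool; real tools might read a web page, parse a PDF, or send an email.
    return str(len(text.split()))


@tools.register("shout")
def shout(text: str) -> str:
    return text.upper() + "!"
```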

4. Example of module interaction

To illustrate how the framework's modules collaborate, let's walk through a typical request path in the system:

- The user sends a query using the chat function through the communication module.

- The routing module analyzes the content and determines the appropriate AI application from the application module.

- The application retrieves the data it needs from the memory module to restore the conversation context.

- Next, it uses the prompts and chaining module to build the appropriate prompts and passes them to an LLM provided by the model module.

- If necessary, it will execute the tools in the tool module, such as searching for information online.

- Finally, it returns a response to the user through the communication module.

- Important information is saved in the memory module so that the conversation can continue.
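The steps above can be condensed into a short sketch. It starts after routing has already selected the application and uses hypothetical stand-ins for the other modules: `Memory` for the memory module, a plain function for the model, and a dictionary of tool functions. The convention that the model requests a tool by answering `TOOL <name> <argument>` is an assumption made for this example, not something the framework prescribes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-ins for the model module and the tools module.
Model = Callable[[str], str]
Tool = Callable[[str], str]


@dataclass
class Memory:
    """Memory module stand-in: keeps the conversation history per user."""
    history: Dict[str, List[str]] = field(default_factory=dict)

    def load(self, user_id: str) -> List[str]:
        return list(self.history.get(user_id, []))

    def save(self, user_id: str, *entries: str) -> None:
        self.history.setdefault(user_id, []).extend(entries)


@dataclass
class ChatApplication:
    """AI application: restores context, builds a prompt, calls the model and, if asked, a tool."""
    model: Model
    tools: Dict[str, Tool]
    memory: Memory

    def handle(self, user_id: str, message: str) -> str:
        context = self.memory.load(user_id)                   # restore conversation context
        prompt = "\n".join(context + [f"user: {message}"])   # build the prompt
        answer = self.model(prompt)                           # call the model
        # Assumed convention: the model requests a tool with "TOOL <name> <argument>".
        if answer.startswith("TOOL "):
            name, _, argument = answer[len("TOOL "):].partition(" ")
            answer = self.tools[name](argument)               # execute the tool
        # Persist the exchange so the next turn can continue the conversation.
        self.memory.save(user_id, f"user: {message}", f"assistant: {answer}")
        return answer
```

The returned string is what the communication module would send back to the user; the routing step and the channel itself are left out to keep the sketch short.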

Working this way, the framework's modules should be able to collaborate so that AI applications and agents can handle complex scenarios.

5. Summary

The AI framework should provide comprehensive tools for building modern AI-based systems. Its flexible, modular architecture should make it easy to extend functionality and integrate with an organization's existing software. With such a framework, programmers should be able to quickly design and implement a variety of innovative solutions using language models. Ready-made modules let them focus on business logic and application functionality. As a result, AI frameworks could significantly accelerate the digital transformation of many organizations.
