The people of California deeply regret: the reliability of autonomous driving is really worrying, and the torture of unmanned taxis is so painful

On August 11, California, the most conservative region in the United States, held a hearing to decide whether driverless taxis can legally operate on the road. After six hours of debate, self-driving supporters won a landslide victory in a 3-1 vote.

Cruise CEO Kyle Vogt announced in San Francisco that thousands of taxis supporting L4 autonomous driving technology will be launched over the next six months to provide around-the-clock service to local residents.

However, a week after the California Department of Motor Vehicles lifted the ban on driverless taxis, it regretted its decision and immediately issued strict control measures. What did driverless taxis do in just one week to make the California Department of Motor Vehicles so angry?

In one week, autonomous driving is driving California residents crazy

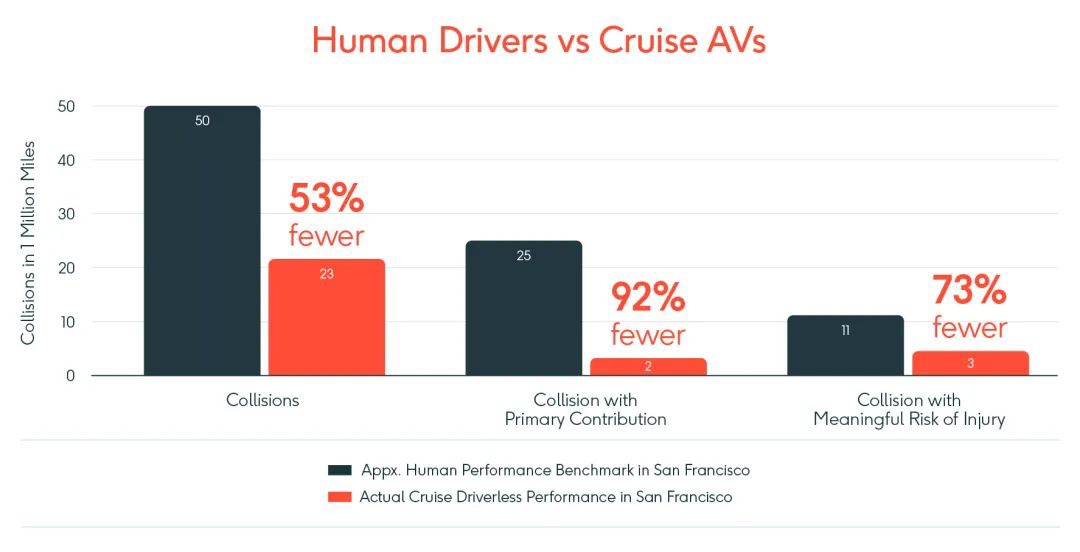

To convince the California government to allow driverless taxis on the road, Cruise provided a large amount of data to prove the safety of driverless driving, but the results were...

After a week on the road in California, Cruise's driverless taxis have been involved in many accidents. Let's take a look at a few typical cases.

First, at 10 pm last Thursday, a driverless taxi collided with a fire truck at an intersection. Fortunately, the accident was not serious. The passengers, protected by the airbags, were not seriously injured and were taken to the hospital promptly. Cruise explained that the car had detected and identified the fire truck and had braked, but because it was at an intersection, the accident could not be avoided.

On the same day, a Cruise driverless taxi drove onto a road under construction and became firmly stuck in the cement. AI is not a real person, after all, and cannot judge whether cement has dried, but the taxi company still bears part of the responsibility.

Of course, the main responsibility lies with the road administration and the road construction company, because they did not set up barriers at the construction site. The taxi company is to blame because its maps did not capture the road construction data. Almost all online map platforms in China can provide real-time data from mobile phones. In addition to official surveying and mapping personnel, users can also upload road information.

A company that has decided to develop autonomous driving should not ignore this point.

Last Friday, Cruise had its biggest problem. Ten cars suddenly stalled at an intersection, blocking the entire street for more than 20 minutes. Cruise's explanation was that a music festival being held nearby disrupted the driverless taxis' signal, causing the vehicles to lose data.

This is really incredible. Do the cars have to stay connected all the time? What happens when they go through a tunnel? China has more than 60% coverage for both 4G and 5G base stations, yet even China cannot guarantee a signal everywhere. Base station coverage in the United States is much lower than in China. Not only is the signal unstable, it is also vulnerable to interference from other signal sources. I really don't know what Cruise is thinking.

Although another taxi company, Waymo, did not have many accidents exposed this time, driverless cars have run into many problems before, such as the first fatal case of a driverless car hitting a person in 2018 (which involved an Uber test vehicle) and multiple collisions with fire trucks.

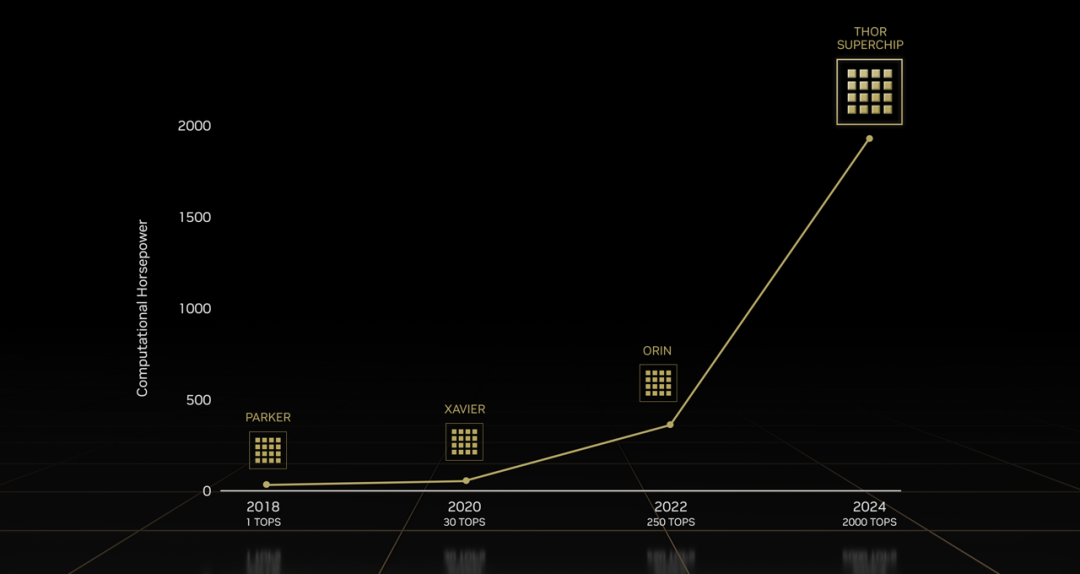

In a single week, autonomous driving technology has exposed many problems, many of them related to safety. In recent years, many countries around the world have begun to promote commercial autonomous driving technology, but in the face of so many problems, how can we use autonomous driving with confidence?

Cars speed along the road at tens or even hundreds of kilometers per hour. A moment of carelessness can cause an accident and casualties, so we naturally pay more attention to safety.

To achieve autonomous driving, data must first be collected through sensors, and then a chip must process that data and issue instructions. From a safety perspective, the more sensors the better, and the more accurate the collected data the better. However, a large number of high-precision sensors puts greater pressure on the chip, not to mention the cost. Since entering the automotive industry, Intel, Nvidia and Qualcomm have all been developing high-performance chips. Among them, the Thor chip launched by NVIDIA reaches 2,000 trillion operations per second (2,000 TOPS).

Autonomous driving is heading towards the future, but there are still many difficulties to overcome

(Source: NVIDIA)

(Source: Tesla)

Tesla wants to remove all radars from the Model 3 in order to reduce costs. Vehicle-road collaboration and high-precision maps take time, manpower and material resources to improve continuously, and nationwide coverage is difficult to achieve in a short time.

The future of autonomous driving is bright, but it has not yet been able to completely dominate the automotive industry

Autonomous driving should be put back in the cage

Judging from online publicity, autonomous driving seems very mature, ready for commercial use as soon as the relevant department gives the word. However, this is not the case. Current commercial autonomous driving simply treats some consumers as test subjects. As for whether autonomous driving should be opened for commercial use, Xiaotong believes it should be promoted cautiously and without impatience. At present, many car manufacturers have launched advanced assisted driving systems, such as Huawei ADS and Xpeng XNGP, and their performance is very good.

Only when these car companies have collected enough data and are willing to take responsibility for accidents to ensure consumers' safety can autonomous driving technology truly reach ordinary households.

This content comes from the WeChat public account Dianchetong (ID: dianchetong233). The author is Lost Soul Yin.

