DingTalk Meeting introduces AI features: digital avatars, conversation-generated virtual backgrounds, and other upgrades

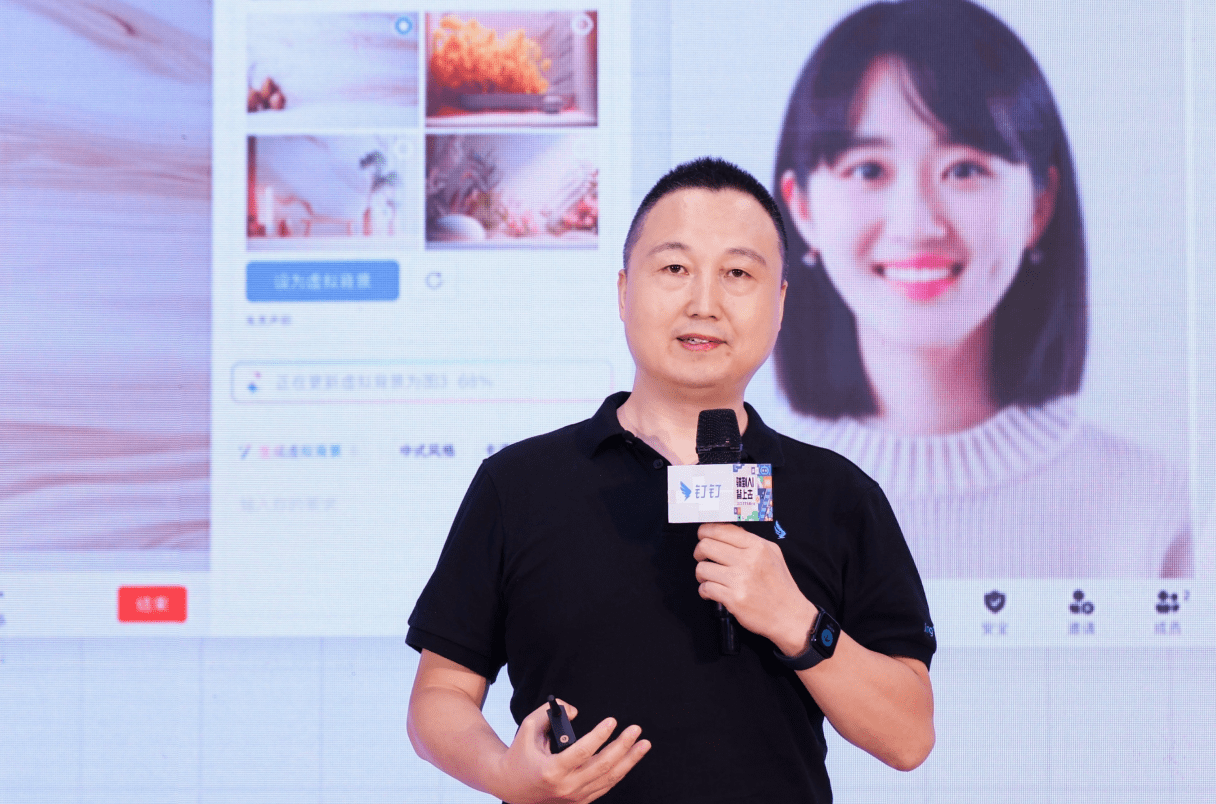

On August 22, 2023, the “Ride on the Back of AI” DingTalk Ecosystem Conference was held in Shanghai. At the sub-forum on digital employees, Zhao Jiayu, vice president of DingTalk and head of its audio and video business unit, delivered a keynote speech. He covered the progress and applications of DingTalk audio and video in intelligence, and the outlook for opening up video conferencing through DingTalk AI PaaS.

Zhao Jiayu noted that DingTalk announced in April that it would fully integrate large models to complete an intelligent overhaul of its products. As artificial intelligence and audio and video become more deeply integrated, DingTalk Meeting has introduced a number of new applications. In addition to the existing real-time translation, subtitle transcription, and intelligent summarization, three new functions were launched this time: digital avatars that attend meetings on the user's behalf, natural-language control of meeting operations, and text-to-image virtual backgrounds. The digital avatar can stand in for a user when meeting times conflict and relay relevant meeting information and conclusions in real time.

He said that DingTalk Audio and Video hopes to open up more areas to ecosystem partners, build rich and valuable applications together with them, and improve user experience and efficiency. DingTalk also plans to embed its audio and video SDK into partners' applications to create high-quality, easy-to-use audio and video solutions for different industries and scenarios.

The following is the full text of Zhao Jiayu’s speech:

Over the past year, the keywords for the DingTalk audio and video division have been experience and intelligence.

Last year we set up a dedicated team to comprehensively optimize DingTalk Meeting and made many improvements, inside and out. The interface of DingTalk Meeting is now more attractive, convenient, and immersive. In the underlying technical architecture, we have optimized capture, playback, encoding and decoding, the network, and the server side. For audio and video, the most basic requirement is that a meeting runs smoothly and you can hear the other party, so we have improved the audio algorithms and performance on weak networks. I hope you will use DingTalk Meeting more.

When it comes to intelligence, video conferencing was already closely integrated with artificial intelligence even before the recent major advances. AI has long been part of both audio and video algorithms. On the video side, beautification and virtual backgrounds are familiar examples. On the audio side, combining AI with the audio algorithms produces better noise reduction. DingTalk Meeting currently supports removing more than 300 common types of noise, such as car horns and keyboard typing, so users can stay focused in meetings.

Another common requirement in meetings is subtitles, which are essentially an AI capability. DingTalk Meeting has now fully integrated Alibaba's Tongyi Listening, which supports translation between Chinese, English, and Japanese and provides real-time transcription and translation. There is also an intelligent navigation function. When a meeting is held in a conference room and online colleagues join the discussion, people sitting far from the device may not be able to hear clearly, or the remote side may not hear what they are saying. Our Hummingbird Audio Lab combines AI with its original differential array technology so that a single conference device can pick up sound from up to 10 meters away. When someone speaks, the device locates the sound source precisely, tracks the speaker in real time, and zooms in on their image, making each speaker the focus and creating a better conversation experience.

With the arrival of the large-model era, we have carried out related research and hope to use large models to rebuild DingTalk Meeting. Today, DingTalk launched its new AI PaaS intelligent base and opened it to ecosystem partners. In DingTalk Meeting, we have used AI PaaS to implement many interesting functions.

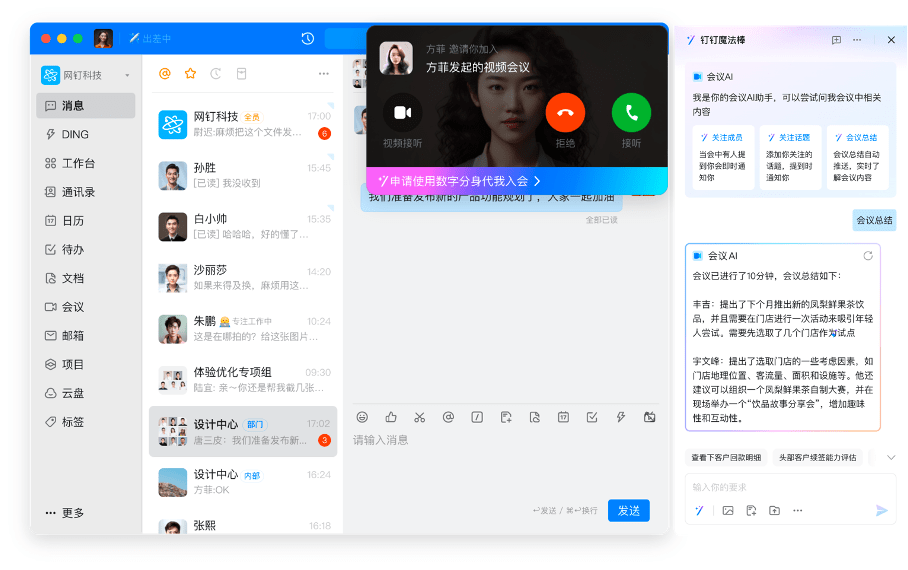

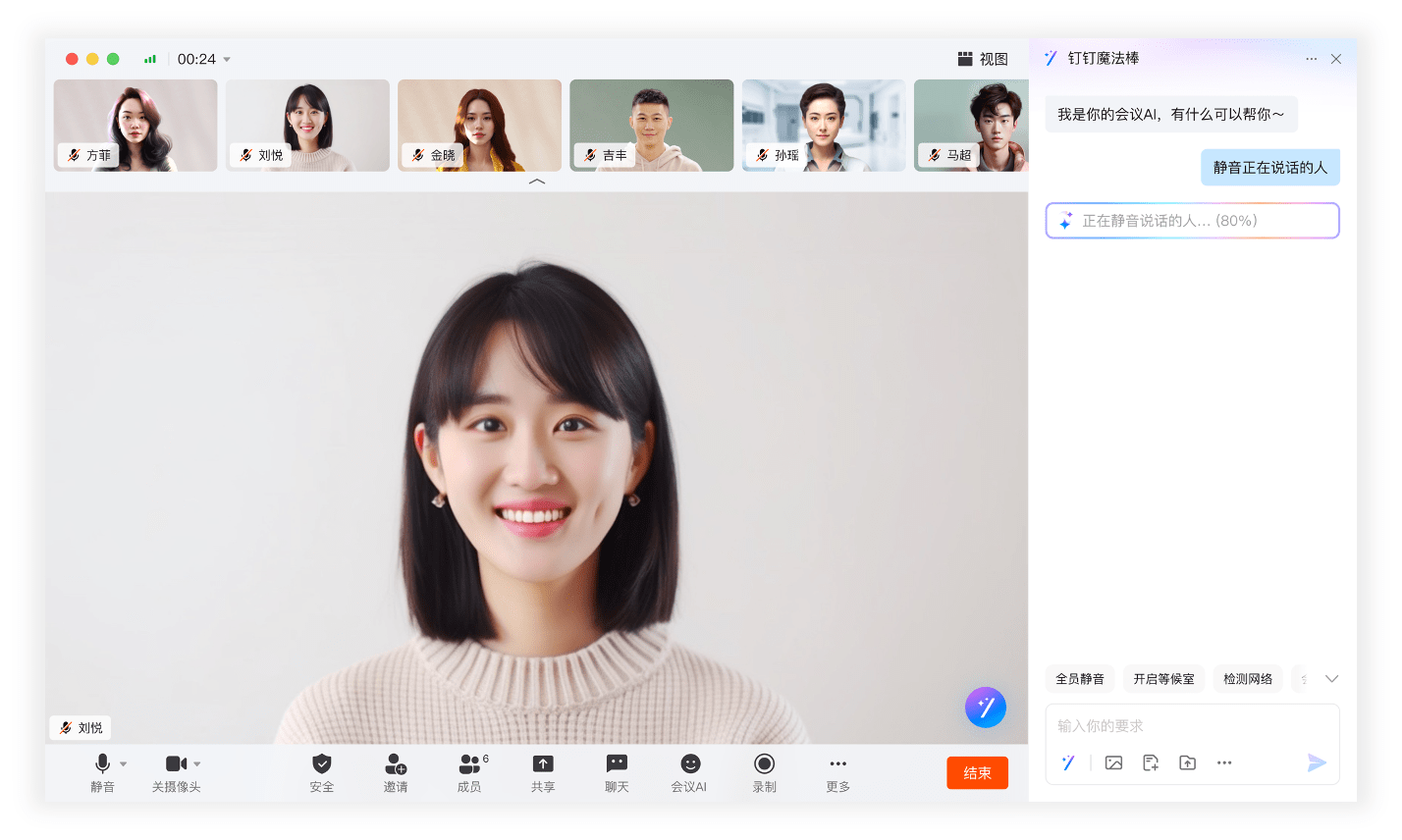

The first is a new way of attending meetings: letting a digital avatar go in your place. In the workplace, people often face a large number of meetings, and we already use smart documents to help with this problem. We have also tried giving users a digital avatar. If you have a meeting conflict, or a meeting is not very relevant to you, you can ask your digital avatar to attend in your place. During the meeting, you can set tasks for it through the DingTalk Magic Wand dialog box, such as watching for a specific topic. When someone discusses that topic, the AI automatically notifies you and tells you what was said. You can also have the digital avatar send you a summary of the meeting every few minutes, or talk to it directly to find out, for example, who is in the meeting and whether there are any disputes. After the meeting, the digital avatar automatically pushes the meeting summary to you.

Second, meetings can be controlled intelligently with natural language. In the past, many users complained that DingTalk had too many functions and that their entry points were hard to find. DingTalk Meeting faces the same problem. Although we have simplified a great deal, the barrier to use is still somewhat high for some users. Today, we have simplified these operations through the smart assistant in DingTalk Magic Wand. You just ask in natural language, and the AI recognizes your intent and completes the operation for you. For example, if there are many people in a meeting and someone accidentally turns on their microphone, finding and muting that person can be troublesome, but now you can simply tell the AI to mute the person who is speaking. Likewise, when you need to invite people to the meeting, you can type the invitation directly without worrying about where the entry point is. These features make meetings easier to operate and manage.
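The flow described above (a typed request, a recognized intent, then a meeting operation) can be illustrated with a minimal sketch. Everything in it is hypothetical: the `Meeting` class and `handle_command` function are invented for illustration and are not DingTalk APIs, and simple keyword matching stands in for the large model's intent recognition.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Meeting:
    """Toy in-memory meeting state (hypothetical, not a DingTalk type)."""
    participants: Set[str] = field(default_factory=set)
    muted: Set[str] = field(default_factory=set)
    speaking: Optional[str] = None  # name of the current speaker, if any


def handle_command(meeting: Meeting, text: str) -> str:
    """Map a typed request to a meeting operation.

    Keyword matching is an assumption that stands in for the
    model-based intent recognition described in the speech.
    """
    raw_words = text.split()
    words = [w.lower() for w in raw_words]

    # Intent: mute whoever is currently speaking.
    if "mute" in words and meeting.speaking is not None:
        target = meeting.speaking
        meeting.muted.add(target)
        return f"muted {target}"

    # Intent: invite the person named right after the word "invite".
    if "invite" in words:
        idx = words.index("invite")
        if idx + 1 < len(raw_words):
            name = raw_words[idx + 1]
            meeting.participants.add(name)
            return f"invited {name}"

    return "unrecognized"
```

A caller would create a `Meeting`, set `speaking` to the active participant, and pass the user's typed text to `handle_command`. A real assistant would replace the keyword checks with a model call and would need to handle ambiguous or multi-step requests.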

The third is text-to-image virtual backgrounds. Virtual backgrounds have always been popular with users, especially in the past few years. Young people in particular have had a lot of fun with them, taking classes in a "space classroom" one day and on the "prairie" the next. We also use virtual backgrounds in our daily work to avoid the embarrassment of a cluttered room. In the past, beyond the built-in template images, we had to search for and download pictures ourselves. That is no longer necessary: virtual backgrounds can now be generated automatically from a text description, giving our imagination free rein.

DingTalk Meeting will gradually open up applications through AI PaaS to help partners upgrade their scenarios. In the future, more ecosystem products, such as interview and whiteboard applications, will be embedded in DingTalk Meeting. We are working with our recruiting partners on an interview assistant that draws on resume content and the conversation itself to provide real-time help during interviews. We hope to offer both a good experience and openness, and to work with ecosystem partners to bring users more interesting and valuable applications in the era of intelligence.
