An in-depth five-minute technical talk about GET3D's generative models

Part 01

Preface

In recent years, with the rise of AI image generation tools such as Midjourney and Stable Diffusion, 2D image generation has become an auxiliary tool that many designers use in real projects. It has been applied in a variety of business scenarios and is creating more and more practical value. At the same time, with the rise of the metaverse, many industries are moving toward building large-scale 3D virtual worlds, and diverse, high-quality 3D content is becoming increasingly important for industries such as games, robotics, architecture, and social platforms. However, creating 3D assets by hand is time-consuming and requires specific artistic and modeling skills. One of the main challenges is scale: although a large number of 3D models can be found on 3D marketplaces, populating a game or movie with a crowd of characters or buildings that all look different still requires a significant investment of artist time. As a result, the need for content creation tools that can scale in the quantity, quality, and diversity of 3D content has become increasingly evident.

Figure 1: A metaverse space (source: the movie "Wreck-It Ralph 2")

2D generative models have achieved photorealistic quality in high-resolution image synthesis, and this progress has also inspired research on 3D content generation. Early methods aimed to extend 2D CNN generators directly to 3D voxel grids, but the high memory footprint and computational complexity of 3D convolutions hindered generation at high resolutions. As an alternative, other work has explored point cloud, implicit, or octree representations. However, these works focus mainly on generating geometry and ignore appearance. Their output representations also need post-processing to be compatible with standard graphics engines.

In order to be practical for content production, the ideal 3D generative model should meet the following requirements:

(a) It should be able to generate shapes with detailed geometry and arbitrary topology.

(b) Its output should be a textured mesh, the primary representation used by standard graphics software such as Blender and Maya.

(c) It should be trainable with 2D image supervision, since 2D images are more widely available than explicit 3D shapes.

Part 02

Introduction to 3D generative models

To ease content creation and enable practical applications, 3D generative networks have become an active research area, capable of producing high-quality and diverse 3D assets. Every year, many 3D generative models are published at ICCV, NeurIPS, ICML, and other conferences, including the following cutting-edge models:

Textured3DGAN: a generative model that extends convolutional approaches to generating textured 3D meshes. It learns to generate textured meshes from natural images using GANs under 2D supervision. Compared with previous methods, Textured3DGAN relaxes the requirement for keypoints in the pose estimation step and generalizes to unlabeled image collections and to new categories and datasets, such as ImageNet.

DIB-R: an interpolation-based differentiable renderer built on the PyTorch machine learning framework. It has been added to Kaolin, the PyTorch library for 3D deep learning hosted on GitHub. The method allows gradients to be computed analytically for every pixel in the image. The core idea is to treat foreground rasterization as a weighted interpolation of local attributes and background rasterization as a distance-based aggregation of global geometry. In this way, it can predict shape, texture, and lighting from a single image.
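To make "foreground rasterization as a weighted interpolation of local attributes" more concrete, here is a minimal sketch of barycentric interpolation for one pixel inside one 2D triangle. It is not DIB-R's or Kaolin's actual API; the function names `barycentric_weights` and `interpolate_attribute` are hypothetical and chosen only for illustration.

```python
def barycentric_weights(p, a, b, c):
    """Return the barycentric weights of 2D point p in triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    # Twice the signed area of the full triangle.
    area = (bx - ax) * (cy - ay) - (cx - ax) * (by - ay)
    if area == 0:
        raise ValueError("degenerate triangle")
    # Each weight is the area of the sub-triangle opposite a vertex,
    # divided by the area of the full triangle.
    w_a = ((bx - px) * (cy - py) - (cx - px) * (by - py)) / area
    w_b = ((cx - px) * (ay - py) - (ax - px) * (cy - py)) / area
    w_c = 1.0 - w_a - w_b
    return w_a, w_b, w_c


def interpolate_attribute(p, triangle, attributes):
    """Blend per-vertex attributes (e.g. RGB colors) at pixel position p."""
    weights = barycentric_weights(p, *triangle)
    channels = len(attributes[0])
    return tuple(
        sum(w * attr[k] for w, attr in zip(weights, attributes))
        for k in range(channels)
    )


# Hypothetical example: a triangle with red, green, and blue vertices.
triangle = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
colors = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
pixel_color = interpolate_attribute((0.25, 0.25), triangle, colors)
```

Because the weights are smooth functions of the vertex positions, the pixel value can be differentiated with respect to the geometry, which is roughly the property an interpolation-based renderer relies on for foreground pixels.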

PolyGen: an autoregressive generative model based on the Transformer architecture that models meshes directly. The model predicts the vertices and then the faces of the mesh in turn. It was trained on the ShapeNet Core V2 dataset, and its results come very close to human-constructed meshes.

SurfGen: an adversarial 3D shape synthesis method with an explicit surface discriminator. The model is trained end to end and can generate high-fidelity 3D shapes with varied topologies.

GET3D: a generative model that produces high-quality textured 3D shapes by learning from images. Its core components are differentiable surface modeling, differentiable rendering, and 2D generative adversarial networks. By training on a collection of 2D images, GET3D can directly generate explicit textured 3D meshes with complex topology, rich geometric detail, and high-fidelity textures.

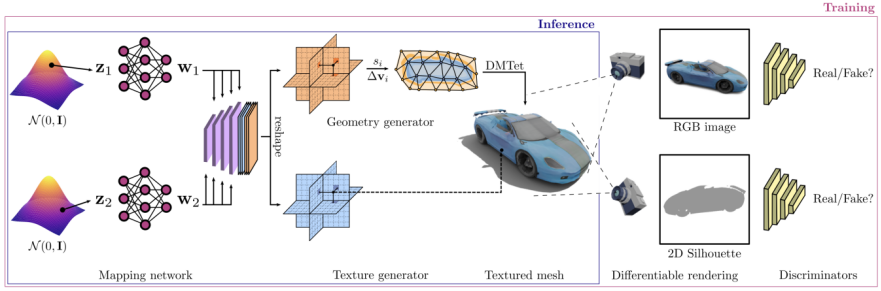

Figure 2: The GET3D generative model (source: official GET3D paper website, https://nv-tlabs.github.io/GET3D/)

GET3D is a recently proposed 3D generative model. Trained on data from ShapeNet, TurboSquid, and RenderPeople, it demonstrates state-of-the-art performance in unconditional 3D shape generation across multiple categories with complex geometry, such as chairs, motorcycles, cars, people, and buildings.

Part 03

The architecture and characteristics of GET3D

Figure 3: The GET3D architecture (source: official GET3D paper website)

GET3D generates a 3D SDF (signed distance field) and a texture field from two latent codes. It then uses DMTet (Deep Marching Tetrahedra) to extract a 3D surface mesh from the SDF, and queries the texture field at the surface points to obtain colors. The entire process is trained with adversarial losses defined on 2D images: a rasterization-based differentiable renderer produces RGB images and silhouettes, and two 2D discriminators, one for RGB images and one for silhouettes, classify whether their input is real or fake. The entire model can be trained end to end.
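The step from an SDF to a surface can be illustrated with a small sketch. In marching-tetrahedra style extraction, a mesh vertex is placed on every grid edge whose two endpoints have SDF values of opposite sign, at the point where linear interpolation of the SDF reaches zero. The code below shows only that one step; `sphere_sdf` is a hypothetical analytic stand-in for the learned SDF, and none of the names come from the GET3D or DMTet code.

```python
import math


def sphere_sdf(point, radius=1.0):
    """Signed distance to a sphere: negative inside, positive outside."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z) - radius


def edge_zero_crossing(p0, p1, s0, s1):
    """Return the point on edge (p0, p1) where the SDF is linearly zero.

    s0 and s1 are the SDF values at p0 and p1. Returns None when both
    endpoints lie strictly on the same side of the surface.
    """
    if s0 * s1 > 0:
        return None
    if s0 == s1:
        # Both values are zero, so the whole edge lies on the surface.
        return tuple(p0)
    t = s0 / (s0 - s1)  # fraction of the way from p0 to p1
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))


# Hypothetical edge that crosses the unit sphere along the x-axis.
p0, p1 = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)
vertex = edge_zero_crossing(p0, p1, sphere_sdf(p0), sphere_sdf(p1))
```

Since the vertex position is a simple function of the two SDF values, gradients from the rendered image can flow back through the mesh vertices into the SDF, which is what makes this kind of extraction usable inside end-to-end training.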

GET3D is also flexible in other respects: beyond producing explicit meshes as its output representation, it can be easily adapted to other tasks, including:

Separation of geometry and texture: the model achieves good disentanglement between geometry and texture, allowing meaningful interpolation of both the geometry latent code and the texture latent code.

Smooth transitions between shapes of different categories: these can be produced by performing a random walk in the latent space and generating the corresponding 3D shape at each step.

Generating new shapes: perturbing the latent code locally by adding a small amount of noise produces shapes that look similar but differ slightly in local details.

Unsupervised material generation: combined with DIBR, GET3D generates materials in a fully unsupervised manner and produces meaningful view-dependent lighting effects.

Text-guided shape generation: by combining with StyleGAN-NADA, that is, fine-tuning the 3D generator with a directional CLIP loss computed on rendered 2D images and user-supplied text, users can generate a large number of meaningful shapes from text prompts.
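The latent-space operations in the list above (interpolation, random walks, and local perturbation) are simple vector operations. The following sketch shows them on plain Python lists. It assumes linear interpolation and Gaussian noise, uses a made-up 4-dimensional latent code, and leaves out the generator that would turn each code into a mesh; the helper names are hypothetical.

```python
import random


def lerp(z0, z1, t):
    """Linearly interpolate between two latent codes, with t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]


def perturb(z, scale, rng):
    """Add small Gaussian noise to a latent code."""
    return [v + rng.gauss(0.0, scale) for v in z]


def random_walk(z, steps, scale, rng):
    """Return the latent codes visited by a random walk starting at z."""
    path = [list(z)]
    for _ in range(steps):
        path.append(perturb(path[-1], scale, rng))
    return path


rng = random.Random(0)  # fixed seed so the walk is reproducible
z_geo_a = [0.0, 0.0, 0.0, 0.0]  # hypothetical geometry latent codes
z_geo_b = [2.0, 4.0, -2.0, 1.0]

# Five evenly spaced codes between the two shapes.
blend = [lerp(z_geo_a, z_geo_b, i / 4) for i in range(5)]
# A short walk away from the first shape.
walk = random_walk(z_geo_a, steps=5, scale=0.1, rng=rng)
```

With separate geometry and texture codes, the same helpers could be applied to one code while the other is held fixed, for example to change the texture of a shape without changing its geometry.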

Figure 4: Generating shapes from text prompts (source: official GET3D paper website, https://nv-tlabs.github.io/GET3D/)
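As a rough illustration of a directional CLIP loss in the style of StyleGAN-NADA, the sketch below compares the direction in which an image embedding moves with the direction between a source and a target text embedding, and penalizes misalignment as one minus their cosine similarity. The embeddings here are made-up 3-dimensional vectors; in practice they would come from CLIP's image and text encoders, which are not shown, and the function names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("zero-length direction")
    return dot / (norm_u * norm_v)


def directional_loss(img_src, img_tgt, txt_src, txt_tgt):
    """Return 1 - cos(image direction, text direction).

    img_src: embedding of a render from the frozen generator
    img_tgt: embedding of a render from the generator being fine-tuned
    txt_src, txt_tgt: embeddings of the source and target text prompts
    """
    d_img = [b - a for a, b in zip(img_src, img_tgt)]
    d_txt = [b - a for a, b in zip(txt_src, txt_tgt)]
    return 1.0 - cosine_similarity(d_img, d_txt)


# Hypothetical embeddings: the image moved in the same direction as the text.
aligned = directional_loss(
    [0.0, 0.0, 0.0], [1.0, 1.0, 0.0],
    [0.5, 0.0, 0.0], [2.5, 2.0, 0.0],
)
```

The loss is 0 when the rendered images change in the same direction as the text prompt and grows toward 2 when they change in the opposite direction, so minimizing it pushes the fine-tuned generator toward the target description.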

Part 04

Summary

Although GET3D takes an important step toward a practical generative model of textured 3D shapes, it still has some limitations. In particular, training still relies on knowledge of 2D silhouettes and the camera distribution. As a result, GET3D has so far only been evaluated on synthetic data. A promising extension is to leverage advances in instance segmentation and camera pose estimation to alleviate this problem and extend GET3D to real-world data. GET3D is also currently trained per category; extending it to multiple categories in the future could better represent the diversity between categories. It is hoped that this research brings people one step closer to using artificial intelligence to freely create 3D content.

The above is the detailed content of An in-depth five-minute technical talk about GET3D's generative models. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1375

1375

52

52

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Why is Gaussian Splatting so popular in autonomous driving that NeRF is starting to be abandoned?

Jan 17, 2024 pm 02:57 PM

Written above & the author’s personal understanding Three-dimensional Gaussiansplatting (3DGS) is a transformative technology that has emerged in the fields of explicit radiation fields and computer graphics in recent years. This innovative method is characterized by the use of millions of 3D Gaussians, which is very different from the neural radiation field (NeRF) method, which mainly uses an implicit coordinate-based model to map spatial coordinates to pixel values. With its explicit scene representation and differentiable rendering algorithms, 3DGS not only guarantees real-time rendering capabilities, but also introduces an unprecedented level of control and scene editing. This positions 3DGS as a potential game-changer for next-generation 3D reconstruction and representation. To this end, we provide a systematic overview of the latest developments and concerns in the field of 3DGS for the first time.

Learn about 3D Fluent emojis in Microsoft Teams

Apr 24, 2023 pm 10:28 PM

Learn about 3D Fluent emojis in Microsoft Teams

Apr 24, 2023 pm 10:28 PM

You must remember, especially if you are a Teams user, that Microsoft added a new batch of 3DFluent emojis to its work-focused video conferencing app. After Microsoft announced 3D emojis for Teams and Windows last year, the process has actually seen more than 1,800 existing emojis updated for the platform. This big idea and the launch of the 3DFluent emoji update for Teams was first promoted via an official blog post. Latest Teams update brings FluentEmojis to the app Microsoft says the updated 1,800 emojis will be available to us every day

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

0.Written in front&& Personal understanding that autonomous driving systems rely on advanced perception, decision-making and control technologies, by using various sensors (such as cameras, lidar, radar, etc.) to perceive the surrounding environment, and using algorithms and models for real-time analysis and decision-making. This enables vehicles to recognize road signs, detect and track other vehicles, predict pedestrian behavior, etc., thereby safely operating and adapting to complex traffic environments. This technology is currently attracting widespread attention and is considered an important development area in the future of transportation. one. But what makes autonomous driving difficult is figuring out how to make the car understand what's going on around it. This requires that the three-dimensional object detection algorithm in the autonomous driving system can accurately perceive and describe objects in the surrounding environment, including their locations,

Paint 3D in Windows 11: Download, Installation, and Usage Guide

Apr 26, 2023 am 11:28 AM

Paint 3D in Windows 11: Download, Installation, and Usage Guide

Apr 26, 2023 am 11:28 AM

When the gossip started spreading that the new Windows 11 was in development, every Microsoft user was curious about how the new operating system would look like and what it would bring. After speculation, Windows 11 is here. The operating system comes with new design and functional changes. In addition to some additions, it comes with feature deprecations and removals. One of the features that doesn't exist in Windows 11 is Paint3D. While it still offers classic Paint, which is good for drawers, doodlers, and doodlers, it abandons Paint3D, which offers extra features ideal for 3D creators. If you are looking for some extra features, we recommend Autodesk Maya as the best 3D design software. like

Get a virtual 3D wife in 30 seconds with a single card! Text to 3D generates a high-precision digital human with clear pore details, seamlessly connecting with Maya, Unity and other production tools

May 23, 2023 pm 02:34 PM

Get a virtual 3D wife in 30 seconds with a single card! Text to 3D generates a high-precision digital human with clear pore details, seamlessly connecting with Maya, Unity and other production tools

May 23, 2023 pm 02:34 PM

ChatGPT has injected a dose of chicken blood into the AI industry, and everything that was once unthinkable has become basic practice today. Text-to-3D, which continues to advance, is regarded as the next hotspot in the AIGC field after Diffusion (images) and GPT (text), and has received unprecedented attention. No, a product called ChatAvatar has been put into low-key public beta, quickly garnering over 700,000 views and attention, and was featured on Spacesoftheweek. △ChatAvatar will also support Imageto3D technology that generates 3D stylized characters from AI-generated single-perspective/multi-perspective original paintings. The 3D model generated by the current beta version has received widespread attention.

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

Project link written in front: https://nianticlabs.github.io/mickey/ Given two pictures, the camera pose between them can be estimated by establishing the correspondence between the pictures. Typically, these correspondences are 2D to 2D, and our estimated poses are scale-indeterminate. Some applications, such as instant augmented reality anytime, anywhere, require pose estimation of scale metrics, so they rely on external depth estimators to recover scale. This paper proposes MicKey, a keypoint matching process capable of predicting metric correspondences in 3D camera space. By learning 3D coordinate matching across images, we are able to infer metric relative

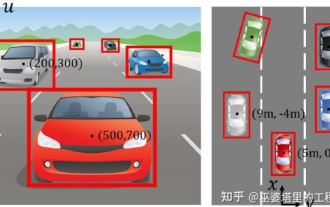

An in-depth interpretation of the 3D visual perception algorithm for autonomous driving

Jun 02, 2023 pm 03:42 PM

An in-depth interpretation of the 3D visual perception algorithm for autonomous driving

Jun 02, 2023 pm 03:42 PM

For autonomous driving applications, it is ultimately necessary to perceive 3D scenes. The reason is simple. A vehicle cannot drive based on the perception results obtained from an image. Even a human driver cannot drive based on an image. Because the distance of objects and the depth information of the scene cannot be reflected in the 2D perception results, this information is the key for the autonomous driving system to make correct judgments on the surrounding environment. Generally speaking, the visual sensors (such as cameras) of autonomous vehicles are installed above the vehicle body or on the rearview mirror inside the vehicle. No matter where it is, what the camera gets is the projection of the real world in the perspective view (PerspectiveView) (world coordinate system to image coordinate system). This view is very similar to the human visual system,