IT House reported on September 2 that Meta has launched a new AI tool called FACET for identifying racial and gender bias in computer vision systems. The tool aims to help address the systemic bias that many current computer vision models show against women and people of color.

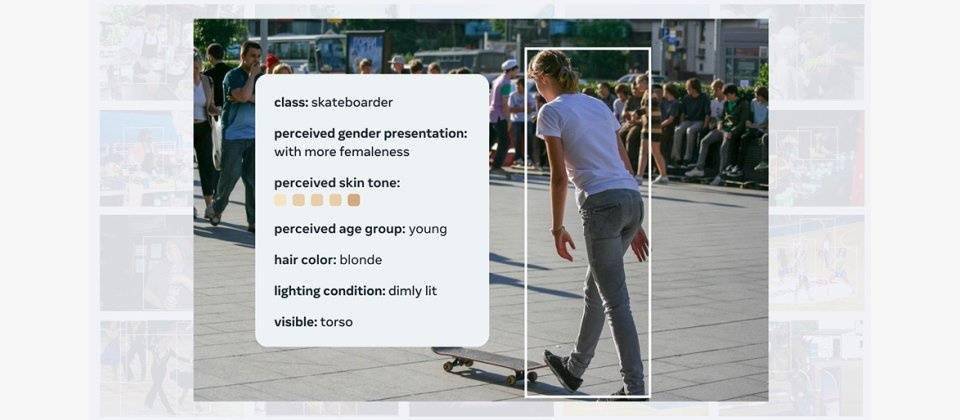

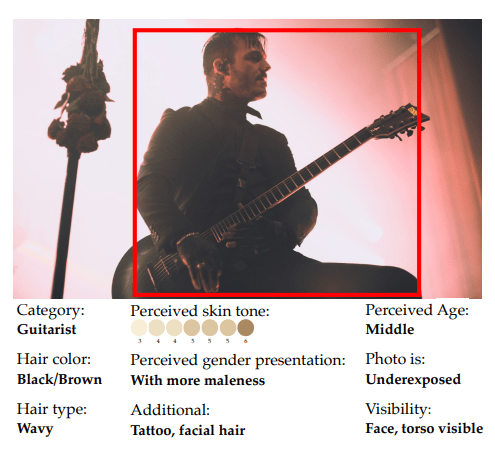

FACET currently consists of 30,000 images containing 50,000 people. Its annotations place particular emphasis on perceived gender and skin tone, and it can be used to evaluate computer vision models across a range of attributes.

With FACET, researchers can ask more complex questions. For example, they can check whether a model is better at recognizing skateboarders when the person is perceived as male, and whether that result changes for people with lighter or darker skin.
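Questions like this come down to disaggregated evaluation: measure how well a model recognizes one class separately for each demographic group, then compare the groups. The Python sketch below illustrates only that general idea. It is not FACET's code, metric definition, or data format; the record layout, the group labels, the sample data, and the functions `recall_by_group` and `disparity` are all hypothetical, chosen for illustration.

```python
from collections import defaultdict

# Hypothetical record layout (NOT FACET's format):
# (true_class, predicted_class, perceived_gender, skin_tone)
Record = tuple[str, str, str, str]


def recall_by_group(records: list[Record], target: str) -> dict[tuple[str, str], float]:
    """Recall for `target`, computed separately for each (perceived_gender, skin_tone) group."""
    hits: dict[tuple[str, str], int] = defaultdict(int)
    totals: dict[tuple[str, str], int] = defaultdict(int)
    for true_cls, pred_cls, gender, skin in records:
        if true_cls != target:
            continue  # recall only counts people who really belong to `target`
        group = (gender, skin)
        totals[group] += 1
        if pred_cls == target:
            hits[group] += 1
    # Groups with zero hits still appear, because defaultdict returns 0 for them.
    return {g: hits[g] / totals[g] for g in totals}


def disparity(recalls: dict[tuple[str, str], float]) -> float:
    """Gap between the best- and worst-served groups (0.0 means equal recall)."""
    return max(recalls.values()) - min(recalls.values())


if __name__ == "__main__":
    # Invented sample data for illustration only.
    sample: list[Record] = [
        ("skateboarder", "skateboarder", "male", "light"),
        ("skateboarder", "skateboarder", "male", "dark"),
        ("skateboarder", "person", "female", "light"),
        ("skateboarder", "skateboarder", "female", "light"),
        ("skateboarder", "person", "female", "dark"),
        ("dancer", "skateboarder", "male", "light"),  # ignored: not a true skateboarder
    ]
    recalls = recall_by_group(sample, "skateboarder")
    for group, r in sorted(recalls.items()):
        print(group, round(r, 2))
    print("disparity:", round(disparity(recalls), 2))
```

On this invented sample, both male groups get a recall of 1.0, the (female, light) group gets 0.5, the (female, dark) group gets 0.0, and the disparity is 1.0. A real benchmark would use far more images per group, so that a gap reflects the model rather than sampling noise.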

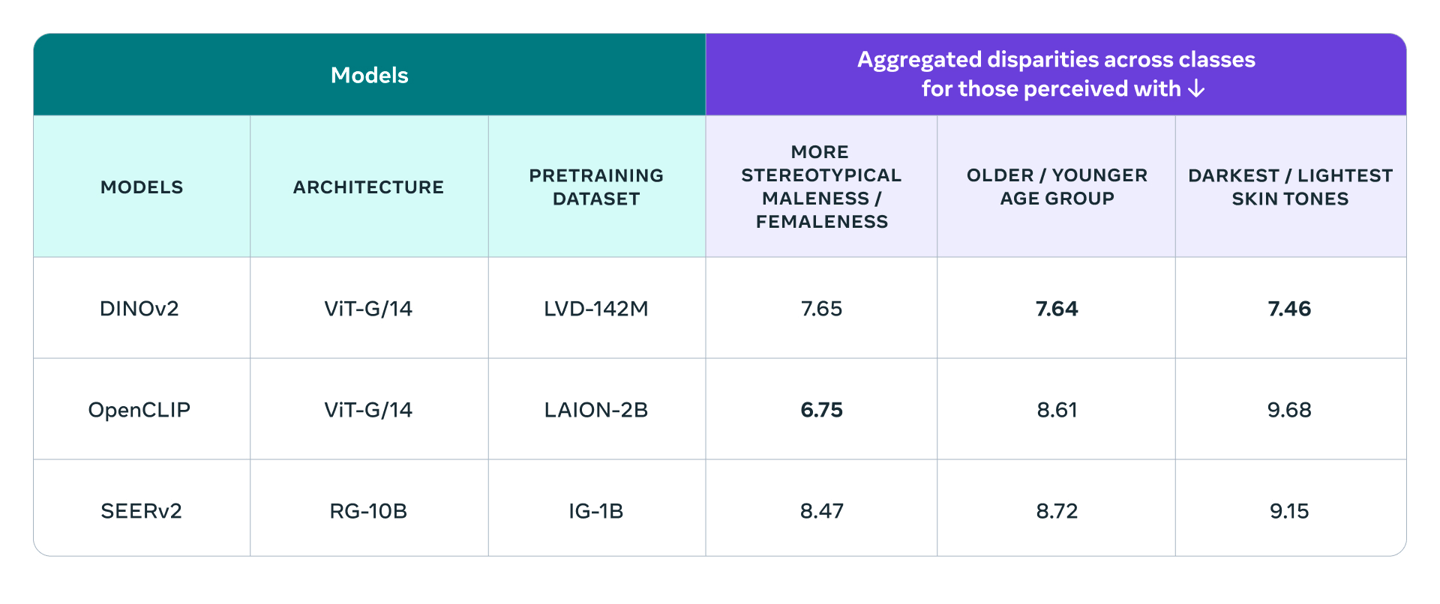

Meta used FACET to evaluate its own DINOv2 and SEERv2 models, as well as OpenCLIP, an open-source implementation of OpenAI's CLIP. Overall, OpenCLIP performed better than the other models on gender, while DINOv2 made better judgments on age and skin color.

According to Meta, open-sourcing FACET will help researchers run similar benchmarks to understand the biases in their own models and to monitor the impact of measures taken to address fairness issues. IT House provides a link to Meta's press release for readers who want to learn more.
