Easily complete 3D model texture mapping in 30 seconds, simple and efficient!

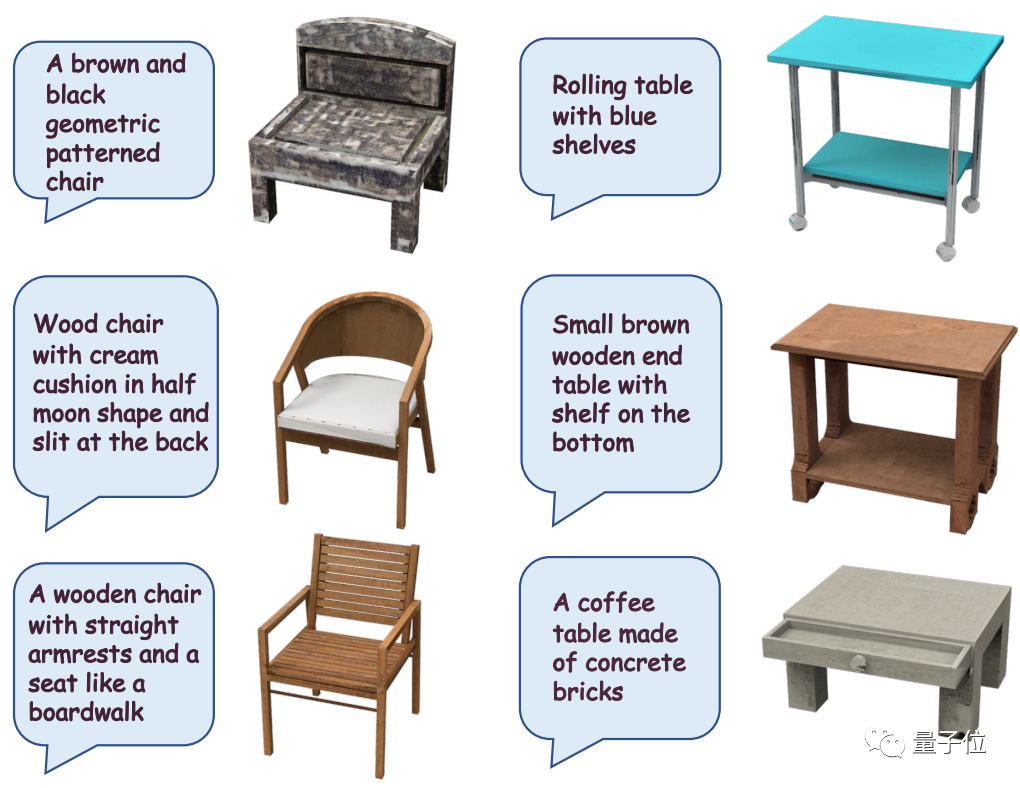

With a diffusion model, you can generate textures for 3D objects from a single sentence!

When you type "a chair with a brown and black geometric pattern", the diffusion model immediately gives the chair an antique texture with a period feel.

Even from a screenshot in which the tabletop's surface cannot be made out, the AI can "imagine" a detailed wood texture and apply it right away.

Bear in mind that adding texture to a 3D object is not as simple as "changing the color".

When designing materials, you need to consider multiple parameters such as roughness, reflection, transparency, vortex, and bloom. To get good results, you not only need to understand materials, lighting, and rendering, but also need to run repeated rendering tests and make modifications. If the material changes, you may have to start the design over.

The effect of texture loss in a game scene

However, textures designed by earlier artificial intelligence methods have not looked convincing, so texture design has remained a time-consuming, labor-intensive, and therefore costly task.

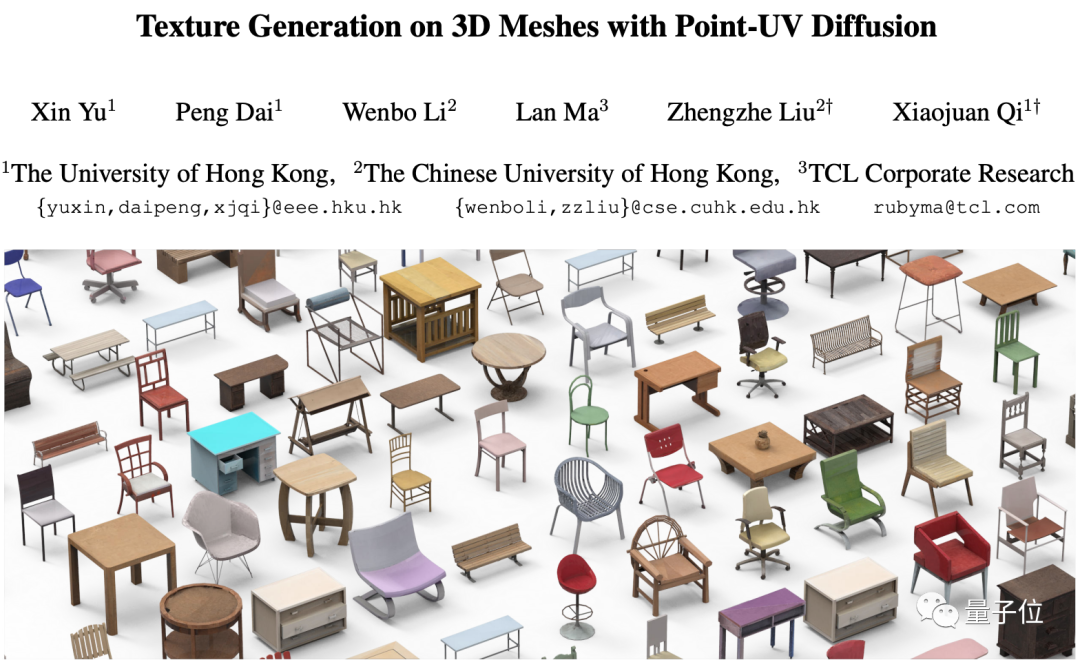

Researchers from the University of Hong Kong (HKU), the Chinese University of Hong Kong, and TCL recently developed a new artificial intelligence method for designing 3D object textures. The method preserves the original shape of the object while designing a more realistic texture that fits the object's surface.

This research was accepted as an oral paper at ICCV 2023.

So how does it solve the problem? Let's take a look.

With a diffusion model, 3D texturing takes just one sentence

Earlier attempts to design 3D textures with artificial intelligence suffered from two main types of problems.

The generated texture is unrealistic and has limited detail.

During generation, the geometry of the 3D object itself is specially processed, so the generated texture cannot fit the original object well and strange shapes "pop out".

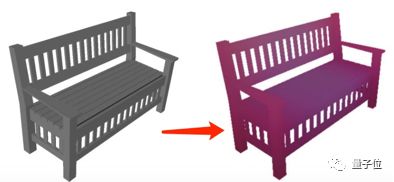

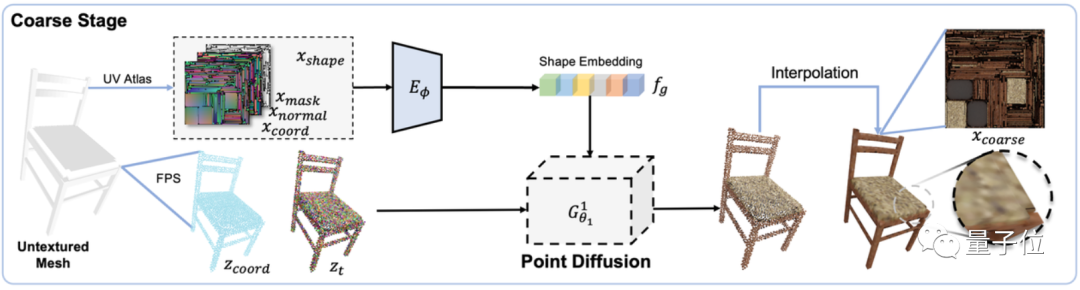

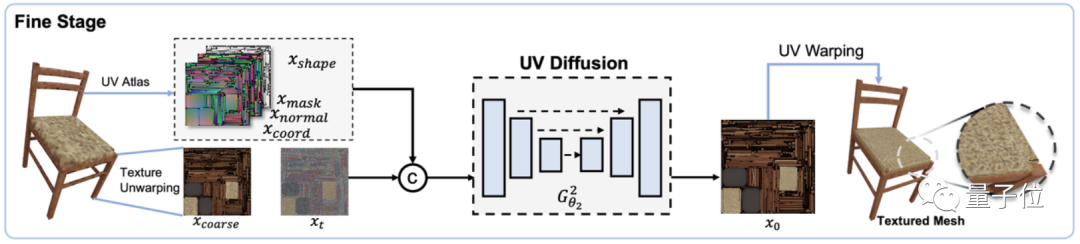

Therefore, to keep the structure of the 3D object stable while generating detailed and realistic textures, the researchers designed a framework called Point-UV diffusion.

The framework consists of two modules, "rough design" and "finishing". Both are based on diffusion models, but each uses a different one.

First, in the "rough design" module, a 3D diffusion model is trained with shape features (including surface normals, coordinates, and masks) as input conditions to predict the color of each point on the object's shape, producing a rough texture image.
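The per-point conditioning described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function names `make_point_condition` and `add_noise` are hypothetical, and the noising step is the standard DDPM forward process, which the article does not confirm is the exact formulation used in the paper.

```python
import math
import random


def make_point_condition(coord, normal, mask):
    """Concatenate one point's shape features (assumed layout: xyz, normal, mask)."""
    return list(coord) + list(normal) + [float(mask)]


def add_noise(color, alpha_bar, rng):
    """Standard DDPM forward step: x_t = sqrt(a) * x_0 + sqrt(1 - a) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in color]
    noisy = [
        math.sqrt(alpha_bar) * c + math.sqrt(1.0 - alpha_bar) * e
        for c, e in zip(color, eps)
    ]
    return noisy, eps


rng = random.Random(0)
# One surface point: position, unit normal, and a mask flag (all values made up).
condition = make_point_condition((0.1, 0.2, 0.3), (0.0, 1.0, 0.0), 1)
# A denoising network would receive (noisy_color, condition) and learn to predict eps.
noisy_color, eps = add_noise([0.5, 0.25, 0.75], alpha_bar=0.9, rng=rng)
```

In a setup like this, the shape features are never noised; only the color is, which is one way a model can leave the geometry untouched while generating texture.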

Then, in the "finishing" module, a 2D diffusion model takes the previously generated rough texture image and the object's shape as input conditions to generate a finer texture.
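One common way to condition a 2D model on several inputs is to stack them as extra image channels. The sketch below assumes, hypothetically, that the shape is supplied as a per-pixel position map and a mask in the same layout as the rough texture; the article does not specify how the paper encodes these inputs, and `stack_uv_condition` is an illustrative name.

```python
def stack_uv_condition(coarse_rgb, position_map, mask):
    """Concatenate per-pixel channels: 3 color + 3 position + 1 mask = 7 channels."""
    height, width = len(coarse_rgb), len(coarse_rgb[0])
    stacked = []
    for y in range(height):
        row = []
        for x in range(width):
            pixel = (
                list(coarse_rgb[y][x])
                + list(position_map[y][x])
                + [float(mask[y][x])]
            )
            row.append(pixel)
        stacked.append(row)
    return stacked


# A tiny 2x2 example with made-up values.
coarse_rgb = [[(0.4, 0.3, 0.2), (0.5, 0.3, 0.2)], [(0.4, 0.2, 0.1), (0.0, 0.0, 0.0)]]
position_map = [[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [(0.0, 1.0, 0.0), (0.0, 0.0, 0.0)]]
mask = [[1, 1], [1, 0]]  # 0 marks a pixel not covered by the surface
condition_image = stack_uv_condition(coarse_rgb, position_map, mask)
```

The refinement model would then see the rough colors and the geometry together at every pixel.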

The reason for this design is that previous high-resolution point cloud generation methods are usually too computationally expensive.

The two-stage approach reduces the computational cost and lets each diffusion model play to its strengths. Compared with previous methods, it also preserves the structure of the original 3D object, and the generated textures are more refined.

As for controlling the generated effect by inputting text or pictures, it is the "contribution" of CLIP.

For the input, the authors first use a pre-trained CLIP model to extract an embedding vector from the text or image, then feed it into an MLP, and finally inject the resulting condition into the networks of both the "rough design" and "finishing" stages. In this way, the final generated texture can be controlled through text and images.
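The embedding-to-condition path can be sketched as below. This is an assumption-laden illustration: the embedding is a random placeholder standing in for a real CLIP output, the sizes (512, 64, 16) and the helpers `init_mlp` and `mlp_forward` are hypothetical, and the weights are untrained.

```python
import random


def init_mlp(in_dim, hidden_dim, out_dim, rng):
    """Create a two-layer MLP with small random weights (untrained, for illustration)."""
    def layer(n_in, n_out):
        return {
            "w": [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)],
            "b": [0.0] * n_out,
        }
    return [layer(in_dim, hidden_dim), layer(hidden_dim, out_dim)]


def mlp_forward(params, x):
    """Apply each linear layer, with ReLU between layers but not after the last."""
    for i, layer in enumerate(params):
        x = [
            sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(layer["w"], layer["b"])
        ]
        if i < len(params) - 1:
            x = [max(0.0, v) for v in x]
    return x


rng = random.Random(0)
# Placeholder for a CLIP text or image embedding (assumed 512-dimensional here).
clip_embedding = [rng.gauss(0.0, 1.0) for _ in range(512)]
mlp = init_mlp(512, 64, 16, rng)
condition = mlp_forward(mlp, clip_embedding)
# The same condition vector would be passed to both the rough and the finishing network.
```

Because text and images map into the same kind of embedding vector, the rest of the pipeline does not need to know which of the two the user supplied.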

So, what is the implementation effect of this model?

Generation time drops from 10 minutes to 30 seconds

Let us first take a look at the results generated by Point-UV diffusion.

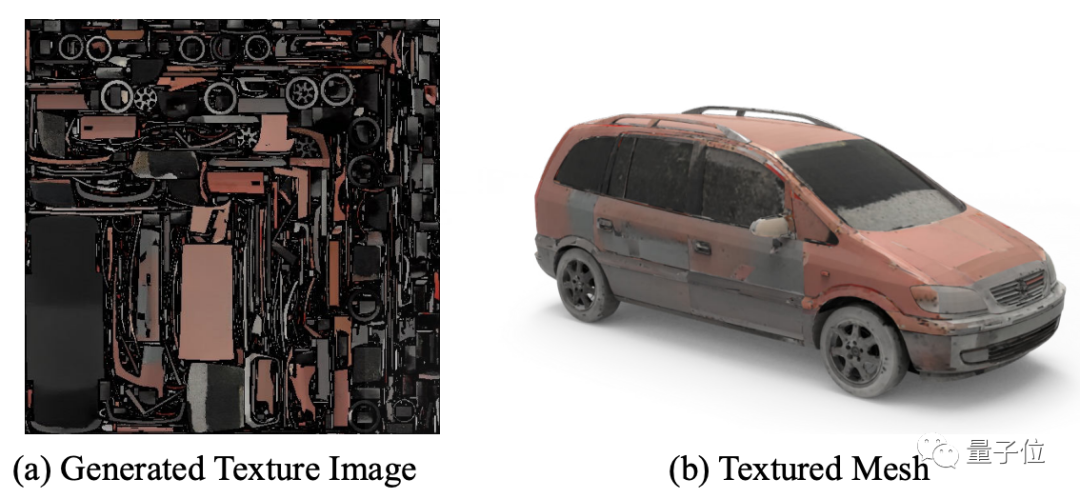

As the renderings show, Point-UV diffusion can generate textures not only for tables and chairs but also for other objects such as cars, covering a richer variety.

It can not only generate textures based on text:

The texture effect of the corresponding object can also be generated based on an image:

The authors also compared the textures generated by Point-UV diffusion with those of previous methods.

The comparison shows that Point-UV diffusion outperforms other texture generation models such as Texture Fields, Texturify, and PVD-Tex in terms of both structure and fineness.

The author also mentioned that under the same hardware configuration, compared to Text2Mesh which takes 10 minutes to calculate, Point-UV diffusion only takes 30 seconds.

However, the authors also pointed out some current limitations of Point-UV diffusion. For example, when the UV map contains too many "fragmented" parts, it still cannot produce a seamless texture. In addition, because training relies on 3D data, whose quality and quantity currently fall short of 2D data, the generated results cannot yet match the fidelity of 2D image generation.

For those who are interested in this research, you can click on the link below to read the paper~

Paper address: https://cvmi-lab.github.io/Point-UV-Diffusion/paper/point_uv_diffusion.pdf

Project address (still under construction): https://github.com/CVMI-Lab/Point-UV-Diffusion
