An introduction to Hunyuan, the general-purpose large language model independently developed by Tencent

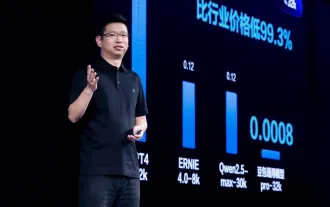

On the morning of September 7, 2023, at the Tencent Global Digital Ecology Conference, Tang Daosheng, senior executive vice president of Tencent Group and CEO of Tencent Cloud and Smart Industry Group, announced that Tencent would enter the era of "fully embracing large models". At the same event, Hunyuan, a general-purpose large language model independently developed by Tencent, was officially unveiled to the industry. According to Tencent, the Chinese-language capabilities of the Hunyuan model exceed those of GPT-3.5.

Following its release, the Hunyuan model serves as the base for Tencent Cloud's MaaS services. Users can try it through the Tencent Cloud official website, call it directly through the API, or use Hunyuan as a base model and customize it on the public cloud according to the actual needs of their enterprise.

1. Introduction to Hunyuan Model

2. Billing

Tencent Cloud enterprise accounts that are on the whitelist and have completed real-name authentication receive a total of 100,000 free tokens for the Tencent Hunyuan model. The free quota becomes available once the enterprise activates the service, so you can try the model first, confirm whether it meets your needs, and only then consider the subsequent billing costs.

The current price of the interface is quite reasonable. Once the enterprise's free quota is used up, usage is billed as follows: Tencent Hunyuan Large Model Premium Edition costs 0.14 yuan per 1,000 tokens. (One token is roughly equal to 1 Chinese character or 3 English letters. Overall, 0.14 yuan covers about two or three interface calls.)

Payment is post-paid and settled daily. Once a user's activation application has been submitted and approved, the service can be used in accordance with the service rules. Tencent Cloud bills based on actual usage and deducts the corresponding amount directly from the account.
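The billing rules above can be sketched in a few lines of Python. This is only an illustrative estimate, not Tencent Cloud's actual metering: the function names are hypothetical, the token count uses the article's rule of thumb (1 token per Chinese character, 1 token per 3 other characters) rather than the real tokenizer, and it assumes that usage is charged pro rata per token with the free quota consumed first.

```python
from decimal import Decimal

# Figures taken from the article.
FREE_QUOTA_TOKENS = 100_000             # free tokens per eligible enterprise account
PRICE_PER_1K_TOKENS = Decimal("0.14")   # yuan per 1,000 tokens, Premium Edition


def is_cjk(ch: str) -> bool:
    """True for characters in the basic CJK Unified Ideographs range."""
    return "\u4e00" <= ch <= "\u9fff"


def estimate_tokens(text: str) -> int:
    """Rule-of-thumb estimate (hypothetical helper, not the real tokenizer):
    1 token per Chinese character, 1 token per 3 other non-space characters."""
    cjk = sum(1 for ch in text if is_cjk(ch))
    other = sum(1 for ch in text if not is_cjk(ch) and not ch.isspace())
    return cjk + -(-other // 3)  # ceiling division for the non-CJK part


def daily_charges(daily_tokens, free_quota=FREE_QUOTA_TOKENS):
    """Return the charge in yuan for each day, using up the free quota first.
    Assumes pro-rata billing per token; the real rounding rules may differ."""
    remaining_free = free_quota
    charges = []
    for used in daily_tokens:
        covered = min(used, remaining_free)
        remaining_free -= covered
        charges.append(PRICE_PER_1K_TOKENS * (used - covered) / 1000)
    return charges


if __name__ == "__main__":
    print(estimate_tokens("你好 hello"))
    print(daily_charges([60_000, 60_000, 10_000]))
```

`Decimal` is used instead of `float` so that small per-token amounts add up without binary rounding drift.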

3. Computing power

According to official information, Tencent's Hunyuan model currently has more than 100 billion parameters and a pre-training corpus of more than 2 trillion tokens. Coming from one of China's strongest technology companies, it offers strong Chinese understanding and creation, logical reasoning, and other capabilities.

4. Products currently connected to Hunyuan

Jiang Jie, vice president of Tencent Group, said in a media interview that more than 50 Tencent businesses and products, including Tencent Cloud, Tencent Advertising, Tencent Games, Tencent Financial Technology, Tencent Meetings, Tencent Documents, WeChat Souyisou, and QQ Browser, have been connected to the Tencent Hunyuan large model for testing and have achieved preliminary results. The early results are encouraging, and the prospects for further development look good.

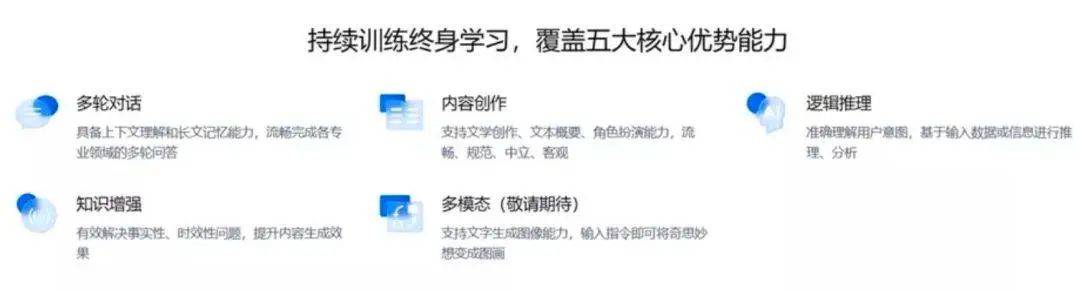

5. Advantages of Hunyuan Large Model

In multiple scenarios, the Tencent Hunyuan large model can already process ultra-long texts. Through positional encoding optimization, its long-text handling and performance have been improved. The Hunyuan large model can also identify "traps": put simply, reinforcement learning is used to teach it to refuse to be "induced".

A simple example: when users ask questions that are difficult or even impossible to answer, the rejection rate for such safety-inducing questions increases by 20%. This greatly reduces wrong and invalid answers and makes the content produced by Tencent's large model more credible. It is one of the highlights of the model.

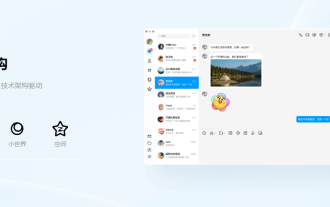

6. Typical application of the Hunyuan large model: Tencent Hunyuan Assistant

The "Tencent Hunyuan Assistant" WeChat mini program is currently accepting applications for internal testing. Users can apply to join the queue, and a text message is sent once the application is approved. Anyone interested should search for the mini program and apply as soon as possible, since the number of places in the internal test is limited.

Applying is simple: search for [Tencent Hunyuan Assistant] in WeChat mini programs, open the mini program, and submit a test application.

Function introduction

AI Q&A: This function is similar to the current mainstream AI dialogue models. The user enters text and the AI gives a corresponding answer.

AI painting: This is one of the most popular artificial intelligence technologies at present. The user describes the content of the picture, and a painting is generated from the keywords.

Other aspects: acquiring knowledge, solving math problems, language translation, providing travel guides, work suggestions, writing reports, writing resumes, Office skills and more.

7. Summary

The arrival of Tencent's Hunyuan model means that several of China's major technology companies now have their own large AI models. Although the Hunyuan model was launched relatively late, Tencent has a huge ecosystem, including WeChat, QQ, official accounts, mini programs, games and video, which gives the model great potential for future development.

The above is the detailed content of This article will take you to understand the universal large language model independently developed by Tencent - the Hunyuan large model.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

On May 30, Tencent announced a comprehensive upgrade of its Hunyuan model. The App "Tencent Yuanbao" based on the Hunyuan model was officially launched and can be downloaded from Apple and Android app stores. Compared with the Hunyuan applet version in the previous testing stage, Tencent Yuanbao provides core capabilities such as AI search, AI summary, and AI writing for work efficiency scenarios; for daily life scenarios, Yuanbao's gameplay is also richer and provides multiple features. AI application, and new gameplay methods such as creating personal agents are added. "Tencent does not strive to be the first to make large models." Liu Yuhong, vice president of Tencent Cloud and head of Tencent Hunyuan large model, said: "In the past year, we continued to promote the capabilities of Tencent Hunyuan large model. In the rich and massive Polish technology in business scenarios while gaining insights into users’ real needs

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Tan Dai, President of Volcano Engine, said that companies that want to implement large models well face three key challenges: model effectiveness, inference costs, and implementation difficulty: they must have good basic large models as support to solve complex problems, and they must also have low-cost inference. Services allow large models to be widely used, and more tools, platforms and applications are needed to help companies implement scenarios. ——Tan Dai, President of Huoshan Engine 01. The large bean bag model makes its debut and is heavily used. Polishing the model effect is the most critical challenge for the implementation of AI. Tan Dai pointed out that only through extensive use can a good model be polished. Currently, the Doubao model processes 120 billion tokens of text and generates 30 million images every day. In order to help enterprises implement large-scale model scenarios, the beanbao large-scale model independently developed by ByteDance will be launched through the volcano

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

According to news on April 4, the Cyberspace Administration of China recently released a list of registered large models, and China Mobile’s “Jiutian Natural Language Interaction Large Model” was included in it, marking that China Mobile’s Jiutian AI large model can officially provide generative artificial intelligence services to the outside world. . China Mobile stated that this is the first large-scale model developed by a central enterprise to have passed both the national "Generative Artificial Intelligence Service Registration" and the "Domestic Deep Synthetic Service Algorithm Registration" dual registrations. According to reports, Jiutian’s natural language interaction large model has the characteristics of enhanced industry capabilities, security and credibility, and supports full-stack localization. It has formed various parameter versions such as 9 billion, 13.9 billion, 57 billion, and 100 billion, and can be flexibly deployed in Cloud, edge and end are different situations

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

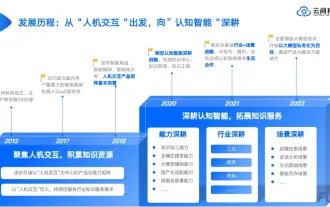

1. Background Introduction First, let’s introduce the development history of Yunwen Technology. Yunwen Technology Company...2023 is the period when large models are prevalent. Many companies believe that the importance of graphs has been greatly reduced after large models, and the preset information systems studied previously are no longer important. However, with the promotion of RAG and the prevalence of data governance, we have found that more efficient data governance and high-quality data are important prerequisites for improving the effectiveness of privatized large models. Therefore, more and more companies are beginning to pay attention to knowledge construction related content. This also promotes the construction and processing of knowledge to a higher level, where there are many techniques and methods that can be explored. It can be seen that the emergence of a new technology does not necessarily defeat all old technologies. It is also possible that the new technology and the old technology will be integrated with each other.

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

If the test questions are too simple, both top students and poor students can get 90 points, and the gap cannot be widened... With the release of stronger models such as Claude3, Llama3 and even GPT-5 later, the industry is in urgent need of a more difficult and differentiated model Benchmarks. LMSYS, the organization behind the large model arena, launched the next generation benchmark, Arena-Hard, which attracted widespread attention. There is also the latest reference for the strength of the two fine-tuned versions of Llama3 instructions. Compared with MTBench, which had similar scores before, the Arena-Hard discrimination increased from 22.6% to 87.4%, which is stronger and weaker at a glance. Arena-Hard is built using real-time human data from the arena and has a consistency rate of 89.1% with human preferences.

Tencent QQ NT architecture version memory optimization progress announced, chat scenes are controlled within 300M

Mar 05, 2024 pm 03:52 PM

Tencent QQ NT architecture version memory optimization progress announced, chat scenes are controlled within 300M

Mar 05, 2024 pm 03:52 PM

It is understood that Tencent QQ desktop client has undergone a series of drastic reforms. In response to user issues such as high memory usage, oversized installation packages, and slow startup, the QQ technical team has made special optimizations on memory and has made phased progress. Recently, the QQ technical team published an introductory article on the InfoQ platform, sharing its phased progress in special optimization of memory. According to reports, the memory challenges of the new version of QQ are mainly reflected in the following four aspects: Product form: It consists of a complex large panel (100+ modules of varying complexity) and a series of independent functional windows. There is a one-to-one correspondence between windows and rendering processes. The number of window processes greatly affects Electron’s memory usage. For that complex large panel, once there is no

Xiaomi Byte joins forces! A large model of Xiao Ai's access to Doubao: already installed on mobile phones and SU7

Jun 13, 2024 pm 05:11 PM

Xiaomi Byte joins forces! A large model of Xiao Ai's access to Doubao: already installed on mobile phones and SU7

Jun 13, 2024 pm 05:11 PM

According to news on June 13, according to Byte's "Volcano Engine" public account, Xiaomi's artificial intelligence assistant "Xiao Ai" has reached a cooperation with Volcano Engine. The two parties will achieve a more intelligent AI interactive experience based on the beanbao large model. It is reported that the large-scale beanbao model created by ByteDance can efficiently process up to 120 billion text tokens and generate 30 million pieces of content every day. Xiaomi used the beanbao large model to improve the learning and reasoning capabilities of its own model and create a new "Xiao Ai Classmate", which not only more accurately grasps user needs, but also provides faster response speed and more comprehensive content services. For example, when a user asks about a complex scientific concept, &ldq

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A