The VLDB 2023 international conference was successfully held in Vancouver, Canada. VLDB is one of the three top conferences in the database field and has a long history; its full name is the International Conference on Very Large Data Bases. Each edition showcases the current frontier of database research, the latest technologies in industry, and the R&D levels of various countries, attracting submissions from the world's top research institutions.

The conference sets extremely high standards for system innovation, completeness, experimental design, and more. VLDB's paper acceptance rate is generally low, about 18%, so only papers with substantial contributions are likely to be accepted. Competition was even fiercer this year. According to official data, a total of 9 VLDB papers won best paper awards this year, with authors from Stanford University, Carnegie Mellon University, Microsoft Research, VMware Research, Meta, and other world-renowned universities, research institutions, and technology companies.

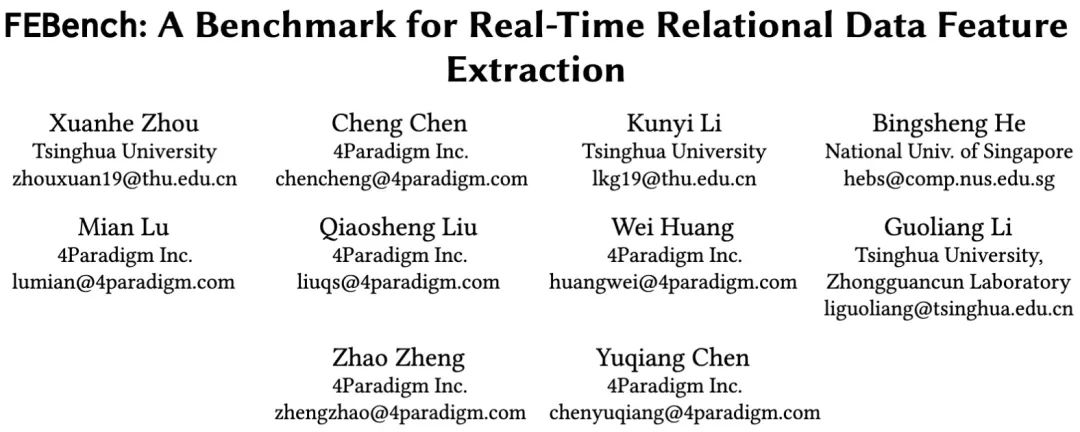

Among them, the paper "FEBench: A Benchmark for Real-Time Relational Data Feature Extraction", jointly completed by 4Paradigm, Tsinghua University, and the National University of Singapore, was named Runner Up for the Best Industrial Paper award.

Drawing on real scenarios accumulated in industry, the paper proposes a benchmark for real-time feature calculation, which is used to evaluate real-time decision-making systems based on machine learning.

The paper is available at: https://github.com/decis-bench/febench/blob/main/report/febench.pdf

Project address: https://github.com/decis-bench/febench

Project background

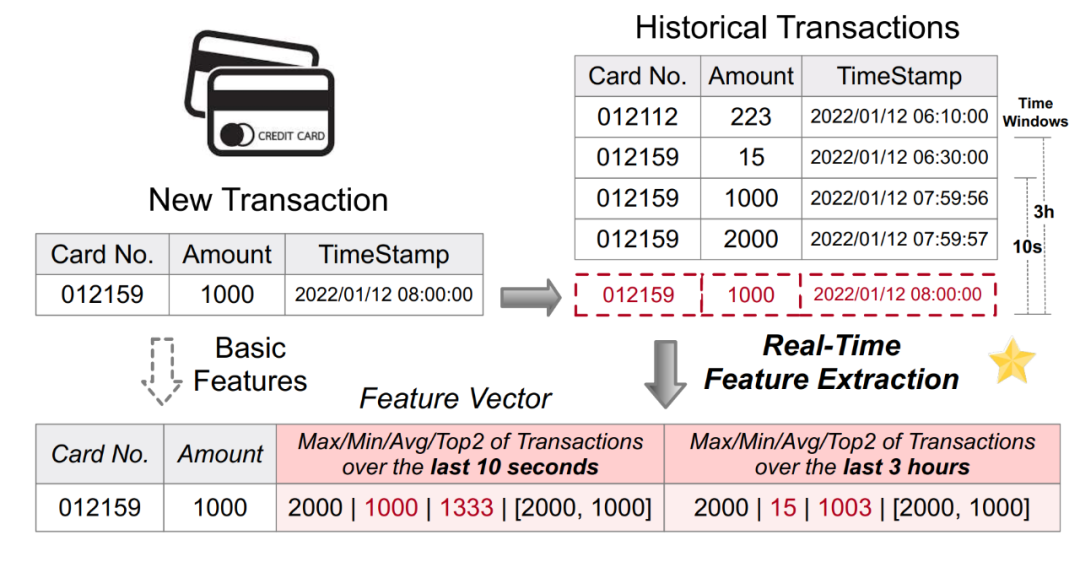

Decision-making systems based on artificial intelligence are widely used across many industries. Many of these scenarios involve computation on real-time data, such as anti-fraud in the financial industry and real-time online recommendation in the retail industry. A real-time decision-making system driven by machine learning usually includes two main computing stages: features and models. Because business logic is diverse and online serving requires low latency and high concurrency, feature calculation often becomes the bottleneck of the entire decision-making system. Building a usable, stable, and efficient real-time feature calculation platform therefore requires a great deal of engineering work. Figure 1 below shows a common real-time feature calculation scenario for anti-fraud applications. Feature calculation over the original card-swipe record table generates new features (such as the maximum, minimum, and average swipe amount in the last 10 seconds), which are then fed into the downstream model for real-time inference.

Figure 1. Application of real-time feature calculation in anti-fraud applications
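To make the anti-fraud example concrete, the following is a minimal Python sketch of the window features mentioned above (maximum, minimum, and average swipe amount over the last 10 seconds). The class name, field names, and sample values are assumptions made for illustration; they do not come from the paper or from any particular feature platform.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class SlidingWindowFeatures:
    """Keeps the swipes of one card that fall inside a trailing time window."""

    def __init__(self, window_seconds: float = 10.0) -> None:
        self.window_seconds = window_seconds
        self.events: Deque[Tuple[float, float]] = deque()  # (timestamp, amount)

    def update(self, timestamp: float, amount: float) -> Dict[str, float]:
        # Add the new swipe, then evict swipes older than the window.
        self.events.append((timestamp, amount))
        cutoff = timestamp - self.window_seconds
        while self.events and self.events[0][0] < cutoff:
            self.events.popleft()
        amounts = [a for _, a in self.events]
        return {
            "max_amount": max(amounts),
            "min_amount": min(amounts),
            "avg_amount": sum(amounts) / len(amounts),
        }


# Hypothetical swipes for one card: (timestamp in seconds, amount).
window = SlidingWindowFeatures(window_seconds=10.0)
window.update(0.0, 20.0)
window.update(4.0, 100.0)
features = window.update(12.0, 40.0)  # the swipe at t=0 has left the window
```

The resulting `features` dictionary is what would be passed to the downstream model for the swipe at t=12. The sketch assumes swipes arrive in timestamp order.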

Generally speaking, a real-time feature calculation platform needs to meet the following two basic requirements:

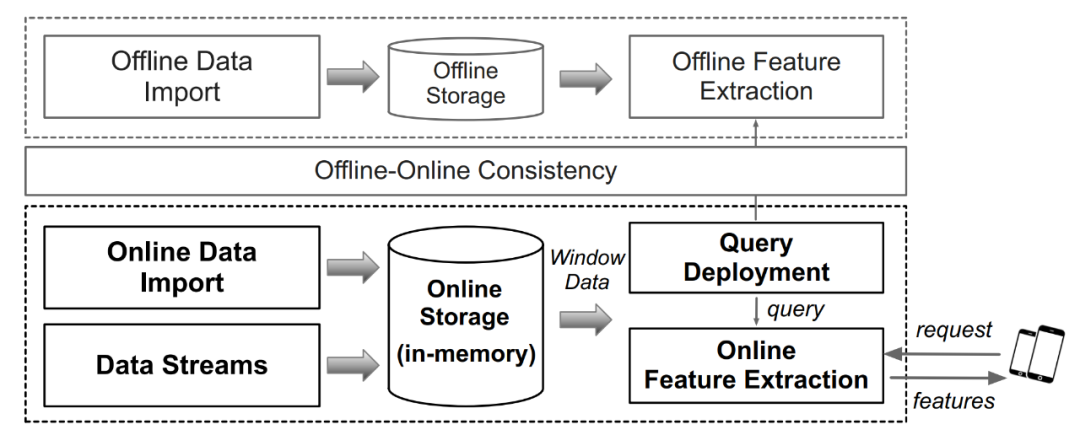

Online-offline consistency: Machine learning applications are generally divided into two processes, offline and online, namely training on historical data and inference on real-time data. Keeping the feature calculation logic consistent between the two is therefore crucial to keeping the final business results consistent online and offline.

Efficiency of online services: Online services operate on real-time data and calculations, and must meet the requirements of low latency, high concurrency, and high availability.
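One common way to reason about online-offline consistency is to have both paths call a single feature definition. The sketch below illustrates that idea under simplifying assumptions (one card, rows sorted by timestamp, an in-memory list standing in for both the historical table and the online store). All function names are hypothetical and are not taken from any of the platforms discussed here.

```python
from typing import List, Tuple

Row = Tuple[float, float]  # (timestamp in seconds, amount)


def window_avg(history: List[Row], now: float, window: float) -> float:
    """Single feature definition shared by the offline and online paths."""
    amounts = [a for t, a in history if now - window <= t <= now]
    return sum(amounts) / len(amounts)


def offline_features(table: List[Row], window: float) -> List[float]:
    # Batch path: each historical row only sees rows up to its own position.
    return [window_avg(table[: i + 1], t, window) for i, (t, _) in enumerate(table)]


def online_feature(store: List[Row], request: Row, window: float) -> float:
    # Online path: the incoming request is evaluated against the stored rows.
    return window_avg(store + [request], request[0], window)


table = [(0.0, 20.0), (4.0, 100.0), (12.0, 40.0)]
offline = offline_features(table, 10.0)
online = [online_feature(table[:i], row, 10.0) for i, row in enumerate(table)]
```

Replaying the historical rows through the online path yields the same values as the batch path, which is the property the consistency requirement asks for.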

Figure 2. Real-time feature calculation platform architecture and workflow

Figure 2 above shows the architecture of a common real-time feature calculation platform. Simply put, it consists mainly of an offline computing engine and an online computing engine, and the key point is to keep the computing logic consistent between the two. Many feature platforms on the market can meet the above requirements and form a complete real-time feature calculation platform, including general-purpose systems such as Flink and specialized systems such as OpenMLDB, Tecton, and Feast. However, the industry currently lacks a dedicated benchmark for real-time feature calculation that would allow rigorous, scientific evaluation of the performance of such systems. In response, the authors of the paper built FEBench, a real-time feature calculation benchmark used to evaluate the performance of feature calculation platforms and to analyze a system's overall latency, long-tail latency, and concurrency performance.

Technical principles

Constructing the FEBench benchmark mainly involves three pieces of work: dataset collection, query generation, and query template selection.

Dataset collection

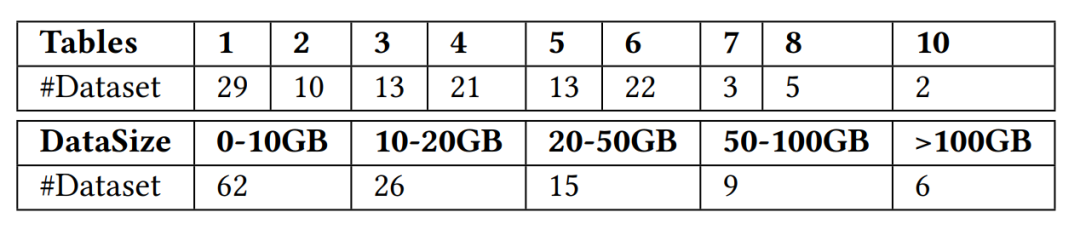

The research team collected a total of 118 datasets that can be used in real-time feature calculation scenarios. These datasets come from public data websites such as Kaggle, Tianchi, UCI ML, and KiltHub, as well as publicly available data from within 4Paradigm, and they cover typical industry usage scenarios such as finance, retail, healthcare, manufacturing, and transportation. The research team further classified the collected datasets by number of tables and dataset size, as shown in Figure 3 below.

Figure 3. Number of tables and dataset sizes in FEBench

Query generation

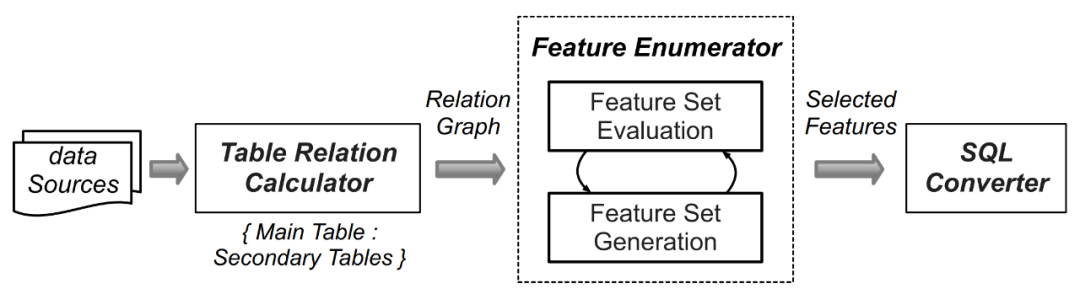

Because of the large number of datasets, manually writing feature extraction logic for each one would be an enormous amount of work, so the researchers used automated machine learning techniques such as AutoCross (reference paper: AutoCross: Automatic Feature Crossing for Tabular Data in Real-World Applications) to automatically generate queries for the collected datasets. FEBench's feature selection and query generation consist of the following four steps (as shown in Figure 4 below):

Initialize by identifying the main table in the dataset (which stores streaming data) and the auxiliary tables (such as static, appendable, or snapshot tables). Then analyze columns with similar names or key relationships across the main and auxiliary tables, and enumerate the one-to-one and one-to-many relationships between columns, which correspond to different feature operation modes.

Map column relationships to feature operators.

After extracting all candidate features, use the beam search algorithm to iteratively generate an effective feature set.

Convert the selected features into semantically equivalent SQL queries.

Figure 4. Query generation process in FEBench
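The last two steps above (beam search over candidate features, then conversion to SQL) can be sketched as follows. This is a simplified illustration, not the procedure used by FEBench or AutoCross: the scoring function is a made-up stand-in for model-based evaluation, the feature representation is an assumed `(aggregate, column)` pair, and the generated SQL uses a generic window clause that is not tied to any engine's dialect.

```python
from typing import Callable, Dict, FrozenSet, Sequence, Tuple

Feature = Tuple[str, str]  # (aggregate function, column), e.g. ("max", "amount")


def beam_search(
    candidates: Sequence[Feature],
    score: Callable[[FrozenSet[Feature]], float],
    beam_width: int = 2,
    max_features: int = 4,
) -> FrozenSet[Feature]:
    """Grow feature sets one feature at a time, keeping the best few per round."""
    beam = [frozenset()]
    best: FrozenSet[Feature] = frozenset()
    best_score = score(best)
    for _ in range(max_features):
        expanded = {fs | {c} for fs in beam for c in candidates if c not in fs}
        if not expanded:
            break
        ranked = sorted(expanded, key=lambda fs: (-score(fs), sorted(fs)))
        beam = ranked[:beam_width]
        top_score = score(beam[0])
        if top_score <= best_score:
            break  # adding another feature no longer helps
        best, best_score = beam[0], top_score
    return best


def to_sql(features: FrozenSet[Feature], table: str, key: str, ts: str, seconds: int) -> str:
    """Render the selected features as one windowed SQL query (illustrative syntax)."""
    cols = ",\n  ".join(f"{agg}({col}) OVER w AS {agg}_{col}" for agg, col in sorted(features))
    return (
        f"SELECT\n  {cols}\nFROM {table}\n"
        f"WINDOW w AS (PARTITION BY {key} ORDER BY {ts} "
        f"RANGE BETWEEN INTERVAL '{seconds}' SECOND PRECEDING AND CURRENT ROW)"
    )


# Hypothetical per-feature gains; a real system would evaluate a trained model.
GAINS: Dict[Feature, float] = {
    ("max", "amount"): 0.30,
    ("avg", "amount"): 0.25,
    ("count", "amount"): 0.20,
    ("min", "amount"): 0.05,
}


def toy_score(fs: FrozenSet[Feature]) -> float:
    # Reward useful features, charge a fixed cost per feature.
    return sum(GAINS[f] for f in fs) - 0.1 * len(fs)


selected = beam_search(list(GAINS), toy_score)
sql = to_sql(selected, table="swipes", key="card_id", ts="swipe_time", seconds=10)
```

With these toy gains, the search stops after three features because the fourth candidate costs more than it contributes. The table and column names (`swipes`, `card_id`, `swipe_time`) are placeholders.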

Query template selection

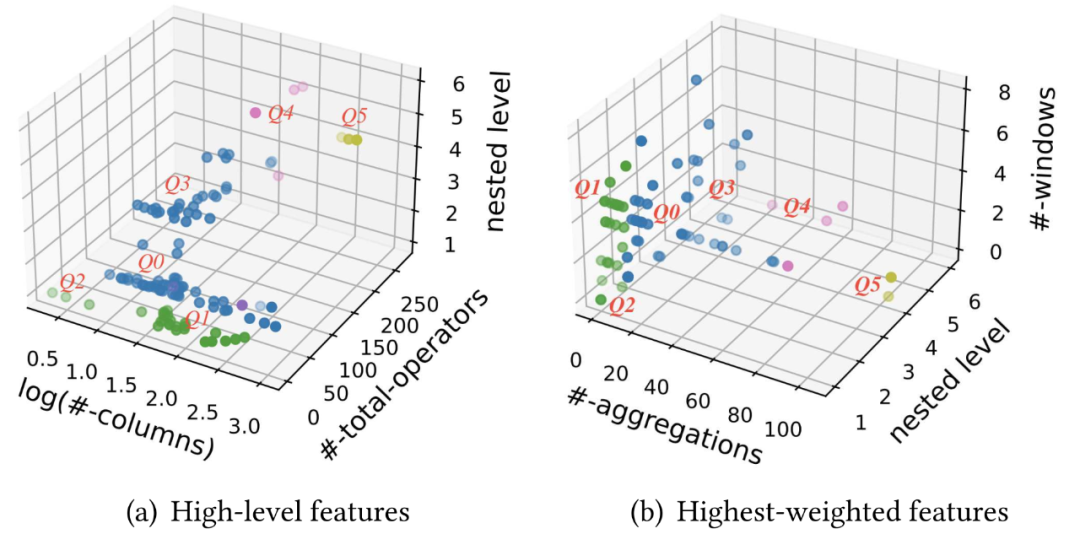

After generating queries for each dataset, the researchers used a clustering algorithm to select representative queries as query templates, reducing repeated testing of similar tasks. The DBSCAN algorithm was used to cluster the feature queries of the 118 collected datasets. The specific steps are as follows:

Describe each query with five features: the number of output columns, the total number of query operators, the frequency of complex operators, the number of nested subquery levels, and the maximum number of tuples in a time window. Since feature engineering queries usually involve time windows and query complexity is not affected by batch data size, dataset size is not included as a clustering feature.

Use a logistic regression model to evaluate the relationship between query features and query execution characteristics, with the features as the model input and the execution time of the feature query as the model output. The regression weight of each feature is then used as its clustering weight, so that the importance of different features is reflected in the clustering results.

Based on the weighted query features, use the DBSCAN algorithm to divide the feature queries into multiple clusters.
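The weighted clustering step can be illustrated with a small, dependency-free DBSCAN. This is a minimal sketch under stated assumptions: the five-element query vectors, the weights, `eps`, and `min_pts` are all invented for illustration (in the paper the weights come from the regression model described above), and a production setup would more likely use an existing library implementation.

```python
import math
from typing import List, Optional, Sequence

NOISE = -1


def dbscan(points: List[Sequence[float]], eps: float, min_pts: int) -> List[int]:
    """Plain DBSCAN with Euclidean distance; returns one cluster label per point."""
    labels: List[Optional[int]] = [None] * len(points)

    def region(i: int) -> List[int]:
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region(i)
        if len(neighbors) < min_pts:
            labels[i] = NOISE
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # former noise becomes a border point
                continue
            if labels[j] is not None:
                continue  # already assigned to a cluster
            labels[j] = cluster
            j_neighbors = region(j)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is a core point, keep expanding
    return [NOISE if label is None else label for label in labels]


# Hypothetical query vectors: output columns, operators, complex-operator
# frequency, nested subquery levels, max tuples in a time window.
queries = [
    [5, 10, 1, 0, 100], [6, 11, 1, 0, 110], [5, 12, 1, 0, 105],
    [40, 80, 9, 3, 5000], [42, 85, 9, 3, 5200], [41, 82, 8, 3, 5100],
    [200, 10, 0, 7, 10],
]
weights = [0.1, 0.2, 1.0, 1.0, 0.01]  # assumed values, standing in for regression weights
weighted = [[w * x for w, x in zip(weights, q)] for q in queries]
labels = dbscan(weighted, eps=2.5, min_pts=3)
```

Scaling each feature by its weight before clustering means that features with larger weights contribute more to the distance between two queries. The last query has no close neighbors and is labeled as noise.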

The following charts show the distribution of the 118 datasets across the metrics considered. Figure (a) shows statistical metrics, including the number of output columns, the total number of query operators, and the number of nested subquery levels; Figure (b) shows the metrics most strongly correlated with query execution time, including the number of aggregation operations, the number of nested subquery levels, and the number of time windows.

Figure 5. Cluster analysis groups the 118 feature queries into 6 clusters, from which the query templates (Q0-Q5) are generated

Based on the clustering results, the 118 feature queries were divided into 6 clusters. For each cluster, queries near the centroid were selected as candidate templates. In addition, since artificial intelligence applications in different scenarios may have different feature engineering requirements, the researchers tried to select queries from different scenarios around the centroid of each cluster in order to better cover different feature engineering scenarios. In the end, 6 query templates were selected from the 118 feature queries, covering scenarios including transportation, healthcare, energy, sales, and financial transactions. These six query templates constitute the core datasets and queries of FEBench and are used for performance testing of real-time feature calculation platforms.
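A rough sketch of the template selection described above: for each cluster, rank its queries by distance to the centroid, look only at the nearest few, and prefer one whose scenario has not been used yet. The function name, the `top_k` cutoff, and the sample data are assumptions made for illustration; the paper does not specify the selection at this level of detail.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


def pick_templates(
    vectors: List[Sequence[float]],
    labels: List[int],
    scenarios: List[str],
    top_k: int = 2,
) -> Dict[int, int]:
    """Return {cluster label: index of the query chosen as its template}."""
    clusters: Dict[int, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        if label != -1:  # skip noise points
            clusters[label].append(idx)

    used_scenarios = set()
    templates: Dict[int, int] = {}
    for label in sorted(clusters):
        members = clusters[label]
        dims = len(vectors[members[0]])
        centroid = [sum(vectors[i][d] for i in members) / len(members) for d in range(dims)]
        # Candidates are the top_k members closest to the centroid.
        nearest = sorted(members, key=lambda i: math.dist(vectors[i], centroid))[:top_k]
        # Prefer an unused scenario; otherwise fall back to the closest query.
        choice = next((i for i in nearest if scenarios[i] not in used_scenarios), nearest[0])
        used_scenarios.add(scenarios[choice])
        templates[label] = choice
    return templates


# Hypothetical weighted query vectors, cluster labels, and scenario tags.
vectors = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [10.0, 10.0], [11.0, 10.0], [12.0, 10.0]]
labels = [0, 0, 0, 1, 1, 1]
scenarios = ["retail", "finance", "retail", "energy", "finance", "finance"]
templates = pick_templates(vectors, labels, scenarios)
```

In this toy data the query closest to the second cluster's centroid is a finance query, but finance is already covered by the first cluster's template, so the next-nearest energy query is chosen instead.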

Benchmark evaluation (OpenMLDB and Flink)

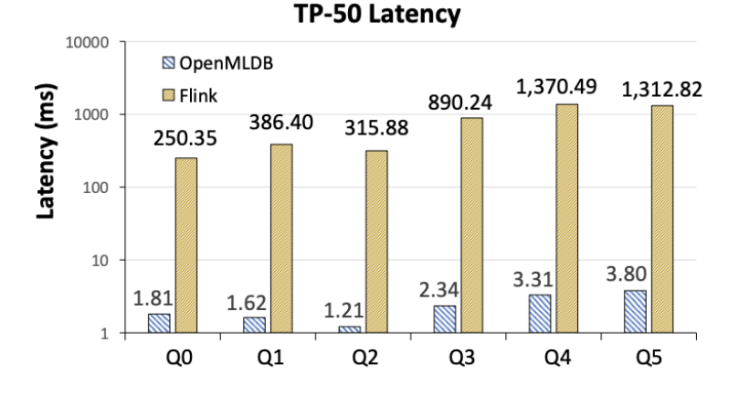

In the study, the researchers used FEBench to test two typical industrial systems, Flink and OpenMLDB. Flink is a general-purpose computing platform that unifies batch and stream processing, while OpenMLDB is a dedicated real-time feature calculation platform. Through testing and analysis, the researchers identified the strengths and weaknesses of each system and the reasons behind them. The experimental results show that Flink and OpenMLDB differ in performance because of their different architectural designs, which also illustrates the value of FEBench in analyzing the capabilities of a target system. The main conclusions of the study are as follows:

Flink's latency is two orders of magnitude higher than OpenMLDB's (Figure 6). The researchers attribute the gap mainly to the different architectural implementations of the two systems. OpenMLDB, as a dedicated system for real-time feature calculation, includes in-memory two-level skip lists and other data structures optimized for time series data, which gives it a clear performance advantage over Flink in feature calculation scenarios. Of course, as a general-purpose system, Flink applies to a wider range of scenarios than OpenMLDB.
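To give an intuition for why a two-level, time-ordered in-memory index helps window queries, here is a simplified stand-in. It is not OpenMLDB's implementation and it does not use skip lists: the first level is a plain dictionary keyed by the partition key, and the second level is a timestamp-sorted list searched with `bisect`. The class and method names are hypothetical.

```python
import bisect
from collections import defaultdict
from typing import Any, Dict, List


class TwoLevelTimeIndex:
    """Level 1: lookup by key. Level 2: rows of that key ordered by timestamp."""

    def __init__(self) -> None:
        self._timestamps: Dict[str, List[float]] = defaultdict(list)
        self._rows: Dict[str, List[Any]] = defaultdict(list)

    def insert(self, key: str, ts: float, row: Any) -> None:
        # Keep each key's rows sorted by timestamp, even for late arrivals.
        pos = bisect.bisect_right(self._timestamps[key], ts)
        self._timestamps[key].insert(pos, ts)
        self._rows[key].insert(pos, row)

    def window(self, key: str, end_ts: float, span: float) -> List[Any]:
        # Return rows of `key` with end_ts - span <= ts <= end_ts.
        ts_list = self._timestamps.get(key, [])
        lo = bisect.bisect_left(ts_list, end_ts - span)
        hi = bisect.bisect_right(ts_list, end_ts)
        return self._rows.get(key, [])[lo:hi]


index = TwoLevelTimeIndex()
index.insert("card_1", 12.0, 40.0)  # arrives out of order
index.insert("card_1", 0.0, 20.0)
index.insert("card_1", 4.0, 100.0)
index.insert("card_2", 5.0, 7.0)
recent = index.window("card_1", end_ts=12.0, span=10.0)
```

Because rows are already grouped by key and ordered by time, fetching a window only touches the rows that belong to it, rather than scanning or re-sorting the whole table on every request.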

Figure 6. TP-50 latency comparison between OpenMLDB and Flink

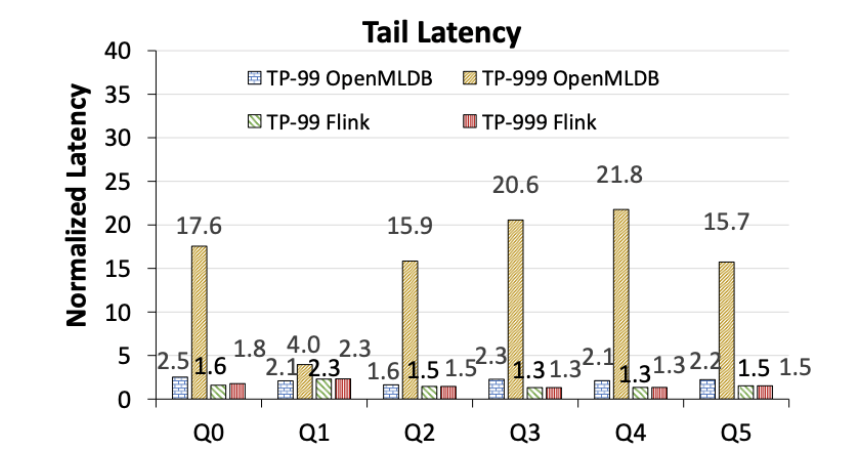

OpenMLDB exhibits obvious long-tail latency issues, while Flink's tail latency is more stable (Figure 7). Note that the numbers below show latency normalized to each system's own TP-50 latency and do not represent a comparison of absolute performance.

Figure 7. Tail latency comparison between OpenMLDB and Flink (normalized to their respective TP-50 latencies)
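The normalization used in Figure 7 can be sketched in a few lines: compute the latency percentiles for one system and divide each by that system's own TP-50. The nearest-rank percentile definition and the sample latencies below are assumptions chosen for illustration; the paper's exact percentile method is not stated here.

```python
from typing import Dict, Sequence


def percentile(samples: Sequence[float], p: int) -> float:
    """Nearest-rank percentile for an integer p in (0, 100]."""
    ordered = sorted(samples)
    rank = max(1, (p * len(ordered) + 99) // 100)  # ceil(p * n / 100)
    return ordered[rank - 1]


def normalized_tail(samples: Sequence[float], percentiles=(50, 90, 99)) -> Dict[str, float]:
    """Express each percentile as a multiple of the same system's TP-50."""
    tp50 = percentile(samples, 50)
    return {f"TP-{p}": percentile(samples, p) / tp50 for p in percentiles}


# Hypothetical latencies in milliseconds for one system.
latencies_ms = [1.0] * 80 + [2.0] * 15 + [20.0] * 5
tail = normalized_tail(latencies_ms)
```

A system whose TP-99 is 20 times its TP-50 has a pronounced long tail even if its absolute latencies are low, which is why the normalized figure says nothing about which system is faster in absolute terms.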

The researchers analyzed the above performance results in more depth:

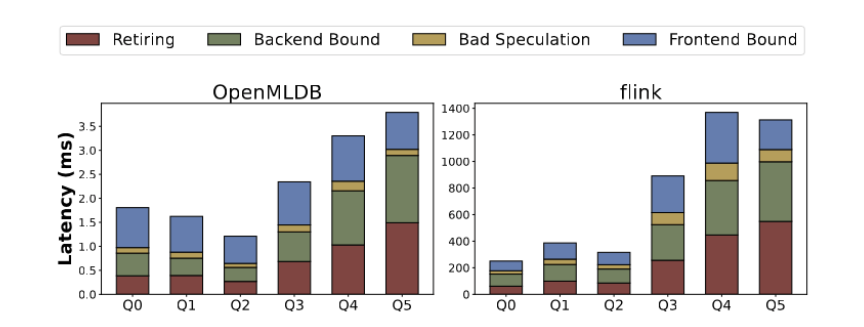

Execution time breakdown: The microarchitecture metrics include instruction retirement, branch misprediction, back-end bound, and front-end bound. Different query templates have different performance bottlenecks at the microarchitecture level. As shown in Figure 8, Q0-Q2 are mainly front-end bound, which accounts for more than 45% of the entire running time. In these cases the operations performed are relatively simple, and most of the time is spent processing user requests and switching between feature extraction instructions. For Q3-Q5, back-end stalls (such as cache misses) and instruction execution (including more complex instructions) become more important factors. OpenMLDB further improves its performance through targeted optimization.

Figure 8. Microarchitecture metric analysis of OpenMLDB and Flink

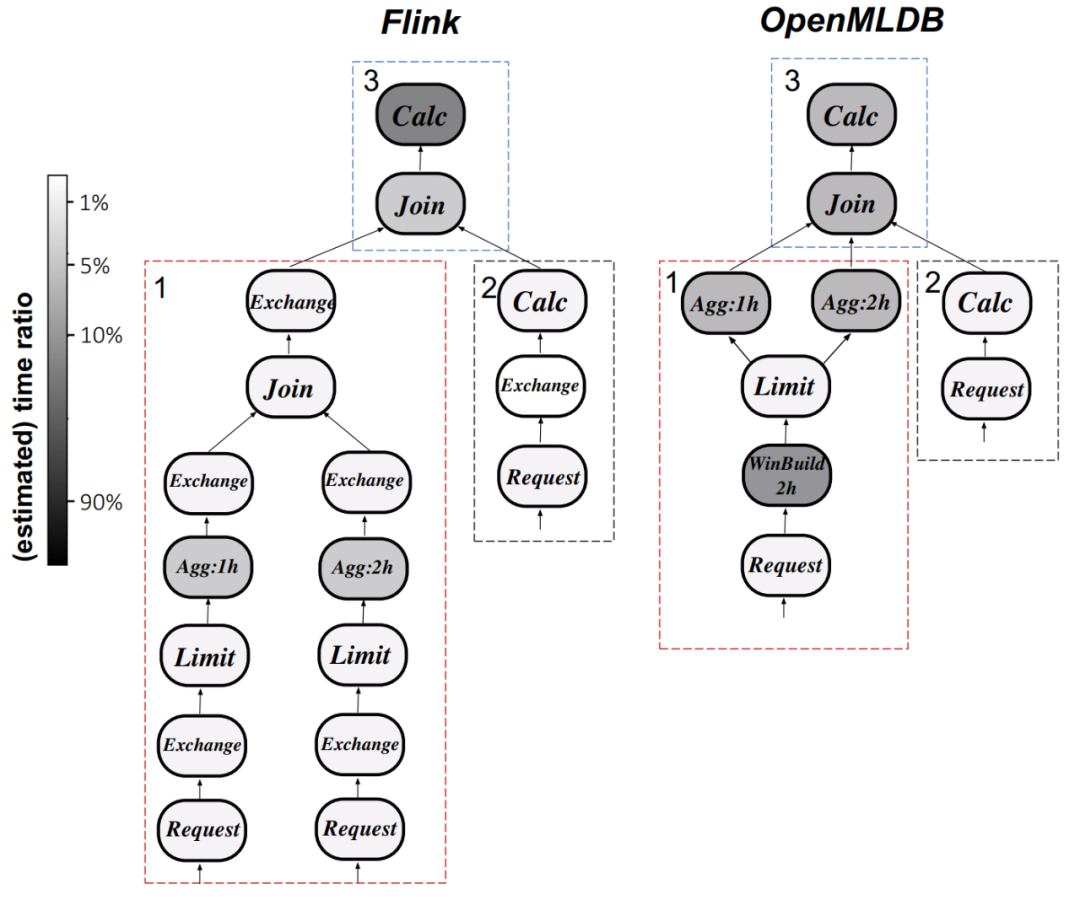

Execution plan analysis: Taking Q0 as an example, Figure 9 below compares the execution plans of Flink and OpenMLDB. In Flink, the computational operators take the most time, while OpenMLDB reduces execution latency by optimizing windowing and using techniques such as custom aggregate functions.

Figure 9. Execution plan comparison between OpenMLDB and Flink (Q0)

To reproduce the above experimental results or run the benchmark on a local system (the authors of the paper also encourage submitting and sharing test results with the community), visit the FEBench project homepage for more information.
