With ModelScope-Agent, novices can also create their own agents (step-by-step tutorial included)

ModelScope-Agent is a general-purpose, customizable agent framework that lets users build their own agents. It uses open-source large language models (LLMs) as its core and provides a user-friendly system library with the following features:

- Customizable and comprehensive framework: a customizable engine design that covers data collection, tool retrieval, tool registration, storage management, customized model training, and practical application, so that real-world applications can be built quickly.

- Open-source LLMs as core components: supports model training on multiple open-source LLMs from the ModelScope community, and open-sources MSAgent-Bench, a Chinese and English tool-instruction dataset, to strengthen the planning and scheduling abilities of open-source LLMs acting as the agent's central controller.

- Diverse and comprehensive APIs with API retrieval: model APIs and common functional APIs are integrated seamlessly through a unified interface, and an open-source API retrieval solution is provided by default.

- Paper link: https://arxiv.org/abs/2309.00986

- Code link: https://github.com/modelscope/modelscope-agent

- ModelScope online demo: https://modelscope.cn/studios/damo/ModelScopeGPT/summary

Capability display

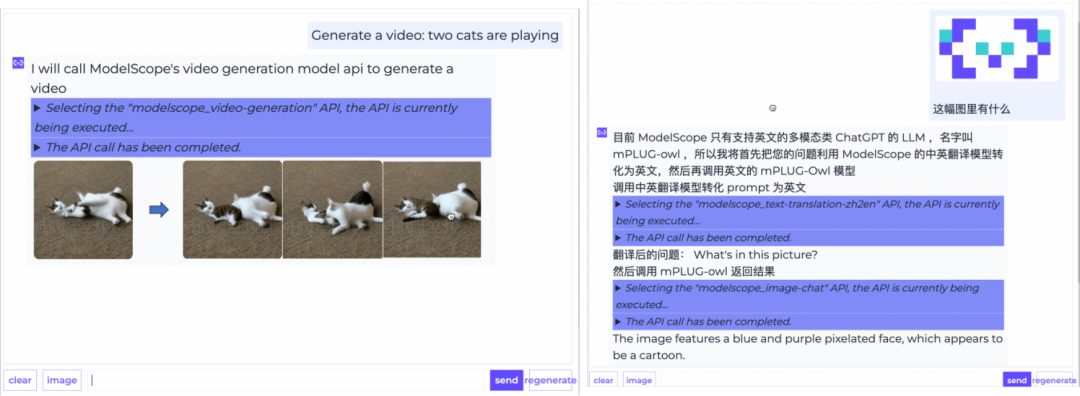

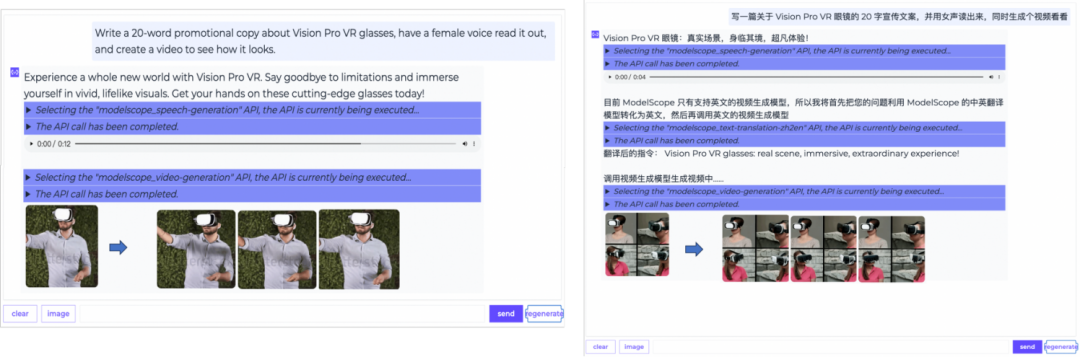

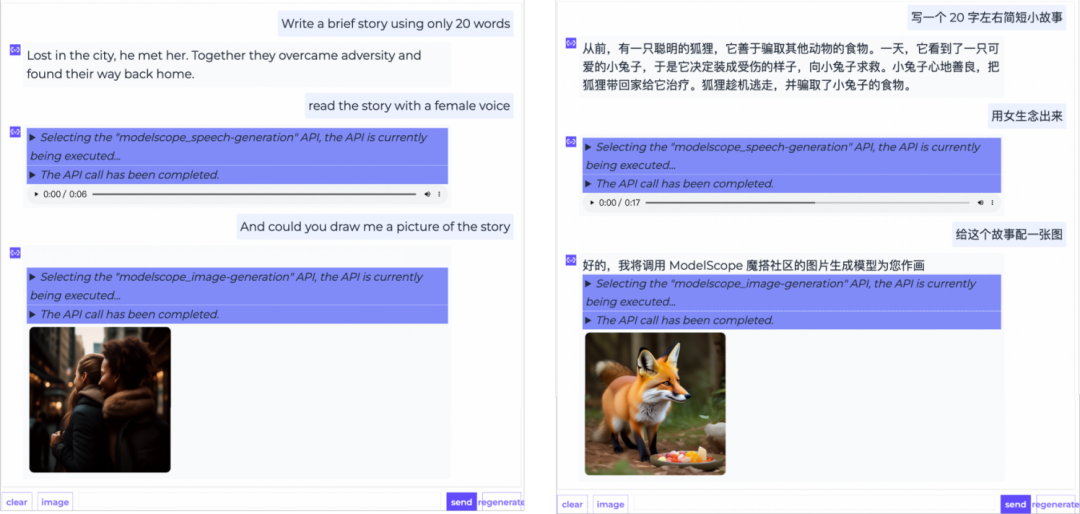

The following examples show some capabilities of ModelScopeGPT, which is built on ModelScope-Agent:

1. Single-step tool call: the agent selects the appropriate tool, generates the request, and then replies to the user based on the execution result.

2. Multi-step tool call: the agent plans, schedules, executes, and replies across multiple tools.

3. Tool calls within a multi-turn dialogue: the agent extracts the parameters to pass to the tool from the dialogue history.
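In ModelScope-Agent it is the LLM itself that finds parameters in earlier turns. The sketch below only illustrates the bookkeeping behind case 3, assuming each earlier turn has already been reduced to a dict of parameter values; `resolve_params` and the `city`/`date` parameters are hypothetical names, not part of the framework.

```python
from typing import Dict, List, Optional


def resolve_params(required: List[str],
                   history: List[Dict[str, str]],
                   current: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Fill each required tool parameter, preferring the current turn.

    Hypothetical helper: `history` holds parameter values already extracted
    from earlier turns (oldest first). A parameter missing from the current
    turn is taken from the most recent earlier turn that mentioned it, or
    left as None so the agent can ask the user for it.
    """
    resolved: Dict[str, Optional[str]] = {}
    for name in required:
        if name in current:
            resolved[name] = current[name]
            continue
        # Walk the history backwards so the latest mention wins.
        resolved[name] = next(
            (turn[name] for turn in reversed(history) if name in turn), None)
    return resolved


# Turn 1 mentioned the city, turn 2 a date; the new turn only changes the date.
print(resolve_params(["city", "date"],
                     [{"city": "Hangzhou"}, {"date": "tomorrow"}],
                     {"date": "today"}))
```

Returning `None` for a parameter that never appeared, rather than guessing, leaves room for the agent to ask a follow-up question.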

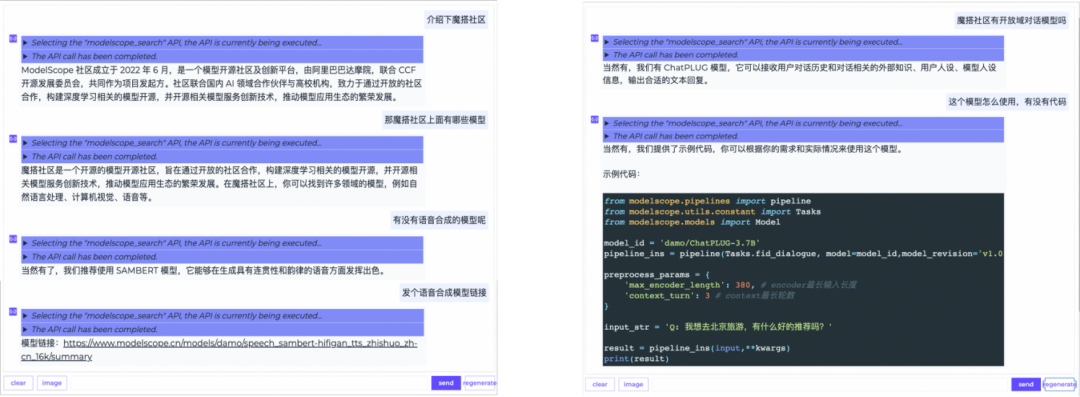

Community knowledge Q&A platform based on search tools

What is the design principle of the ModelScope-Agent framework?

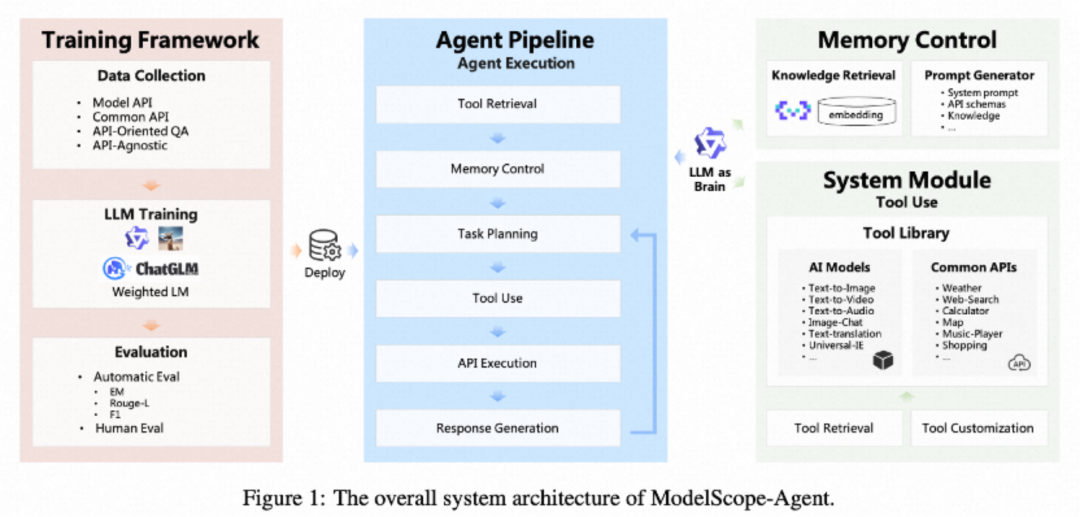

ModelScope-Agent is a general, customizable agent framework for practical application development. It uses open-source large language models (LLMs) as its core and includes modules for memory control and tool use. The open-source LLM is mainly responsible for task planning, scheduling, and reply generation; the memory control module covers knowledge retrieval and prompt management; the tool-use module includes the tool library, tool retrieval, and tool customization. The ModelScope-Agent system architecture is shown in the accompanying figure.
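To make the memory-control role more concrete, here is a minimal sketch of prompt management: retrieved knowledge, retrieved tool descriptions, and dialogue history are assembled into one prompt for the LLM. The `Memory` class, `build_prompt` function, and section wording are assumptions for illustration; the framework's real prompt templates will differ.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Memory:
    """Hypothetical memory module: retrieved knowledge plus dialogue history."""
    knowledge: List[str] = field(default_factory=list)
    history: List[str] = field(default_factory=list)


def build_prompt(system: str, tool_descriptions: List[str],
                 memory: Memory, query: str) -> str:
    """Assemble a prompt from the system text, tools, memory and the query.

    Empty sections are skipped so the prompt stays short.
    """
    parts = [system]
    if tool_descriptions:
        parts.append("Available tools:\n"
                     + "\n".join(f"- {d}" for d in tool_descriptions))
    if memory.knowledge:
        parts.append("Relevant knowledge:\n" + "\n".join(memory.knowledge))
    if memory.history:
        parts.append("Conversation so far:\n" + "\n".join(memory.history))
    parts.append(f"User: {query}\nAssistant:")
    return "\n\n".join(parts)


mem = Memory(knowledge=["ModelScope hosts open-source models."],
             history=["User: hi", "Assistant: Hello!"])
print(build_prompt("You are a helpful agent.",
                   ["text_to_speech: read text aloud"], mem, "Read me a poem"))
```

Keeping each source in its own labeled section makes it easy to trim one (for example, old history) when the prompt grows too long for the model's context window.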

How the ModelScope-Agent framework executes a task

ModelScope-Agent works by splitting a goal into smaller tasks and completing them one by one. For example, when a user asks it to "write a short story, read it aloud in a female voice, and also add a video," ModelScope-Agent displays the entire task-planning process. It first retrieves the relevant speech-synthesis tools through tool retrieval, and the open-source LLM then plans and schedules the work: it generates the story, calls a speech-generation model to read the story in a female voice and presents the audio to the user, and finally calls a video-generation model to produce a video from the story content. Throughout the process, the user does not need to configure the tools the request requires, which greatly improves ease of use.
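The story, speech, and video example can be sketched as a plan that is executed step by step, where each step names the earlier result it consumes (the video uses the story, not the audio). In ModelScope-Agent the LLM produces the plan; here it is written by hand, and the tool names are hypothetical stubs standing in for real models.

```python
from typing import Callable, Dict, List, Tuple

# A plan step: (tool name, key of the input it consumes, key for its output).
Step = Tuple[str, str, str]


def execute_plan(plan: List[Step],
                 tools: Dict[str, Callable[[str], str]],
                 request: str) -> Dict[str, str]:
    """Run the planned tool calls in order, storing every intermediate result."""
    results: Dict[str, str] = {"request": request}
    for tool_name, input_key, output_key in plan:
        results[output_key] = tools[tool_name](results[input_key])
    return results


# Stub tools (hypothetical) in place of story, speech and video models.
tools = {
    "story_gen": lambda prompt: f"story({prompt})",
    "tts_female": lambda text: f"audio({text})",
    "video_gen": lambda text: f"video({text})",
}

# Hand-written plan; an LLM planner would emit something equivalent.
plan = [
    ("story_gen", "request", "story"),
    ("tts_female", "story", "audio"),
    ("video_gen", "story", "video"),
]

results = execute_plan(plan, tools, "a short story")
print(results["audio"])
print(results["video"])
```

Keeping all intermediate results in one dict lets any later step depend on any earlier one, which a simple linear pipeline could not express.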

Open-source LLM training framework: a new training method, plus open-source data and models

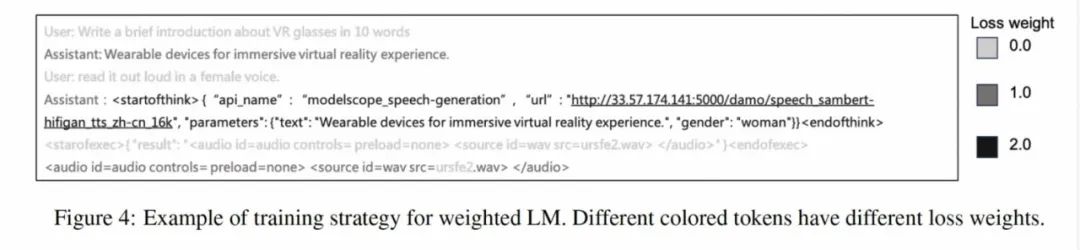

In addition to the ModelScope-Agent framework, the research team proposed a new tool-instruction fine-tuning method, Weighted LM, which improves open-source LLMs' ability to generate tool calls by up-weighting the loss on the tokens that make up the tool-call instructions.
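The idea of weighting the loss can be illustrated with a weighted negative log-likelihood over target tokens. This is a minimal sketch assuming tool-call tokens get a fixed extra weight and the sum is normalized by the total weight; the default weight of 2.0 and the normalization are assumptions, not the paper's exact settings. A real training loop would apply the same weights to per-token cross-entropy tensors instead of Python lists.

```python
import math
from typing import List


def weighted_nll(token_probs: List[float], is_tool_token: List[bool],
                 tool_weight: float = 2.0) -> float:
    """Weighted average negative log-likelihood over target tokens.

    token_probs: model probability assigned to each correct target token.
    is_tool_token: True for tokens inside a tool call (e.g. API name, args).
    Tool-call tokens get `tool_weight` (an assumed value); others get 1.0.
    """
    if len(token_probs) != len(is_tool_token):
        raise ValueError("token_probs and is_tool_token must have the same length")
    weights = [tool_weight if flag else 1.0 for flag in is_tool_token]
    total = sum(-w * math.log(p) for w, p in zip(weights, token_probs))
    return total / sum(weights)


# With all weights equal this reduces to the ordinary mean NLL.
print(weighted_nll([0.5, 0.25], [False, False]))
# Errors on tool-call tokens now cost more than errors on plain text.
print(weighted_nll([0.5, 1.0], [True, False], tool_weight=3.0))
```

Because a single wrong token in an API name or argument breaks the whole call, pushing more of the gradient onto those tokens is the motivation for this kind of weighting.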

The research team also released MSAgent-Bench, a high-quality Chinese and English dataset containing 600,000 samples of multi-turn, multi-step tool-instruction calls. Using this dataset and the new training method, the team fine-tuned the Qwen-7B model, producing a model named MSAgent-Qwen-7B. The dataset and model have been publicly released on ModelScope:

- MSAgent-Bench: https://modelscope.cn/datasets/damo/MSAgent-Bench/summary

- MSAgent-Qwen-7B: https://modelscope.cn/models/damo/MSAgent-Qwen-7B/summary

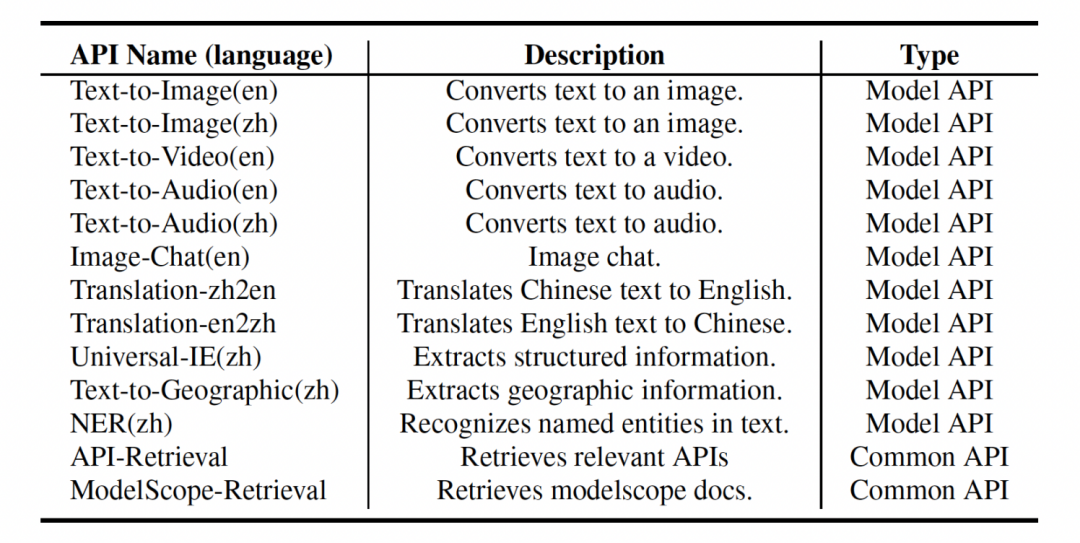

Integrated tool list

ModelScope-Agent currently integrates many AI models by default, covering natural language processing, speech, vision, and multimodal tasks, along with open-source solutions for knowledge retrieval and API retrieval.
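API retrieval means choosing a few relevant tools from a large library before the LLM sees the request. The sketch below swaps in a simple word-overlap score as a stand-in for whatever retrieval model the framework actually uses; the tool names, categories, and descriptions are hypothetical.

```python
import re
from typing import Dict, List, Set, Tuple

# Hypothetical tool library: name -> (category, description).
TOOL_LIBRARY: Dict[str, Tuple[str, str]] = {
    "text_to_speech": ("speech", "convert text to speech audio with a chosen voice"),
    "text_to_video": ("vision", "generate a short video from a text description"),
    "image_caption": ("multimodal", "describe the content of an image in text"),
    "translate": ("nlp", "translate text between chinese and english"),
}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve_tools(query: str, top_k: int = 2) -> List[str]:
    """Return up to top_k tool names ranked by word overlap with the query.

    Ties are broken alphabetically; tools with zero overlap are dropped.
    """
    q = tokenize(query)
    scored = [(len(q & tokenize(desc)), name)
              for name, (_, desc) in TOOL_LIBRARY.items()]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [name for score, name in scored[:top_k] if score > 0]


print(retrieve_tools("read this story aloud as speech audio"))
```

Only the retrieved tools' descriptions would then be placed in the prompt, which keeps it short even when the library holds many tools.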

ModelScope-Agent Practice

The ModelScope-Agent GitHub repository also provides a step-by-step demo, so that novices can build their own agents too.

Please download the demo notebook: https://github.com/modelscope/modelscope-agent/blob/master/demo/demo_qwen_agent.ipynb

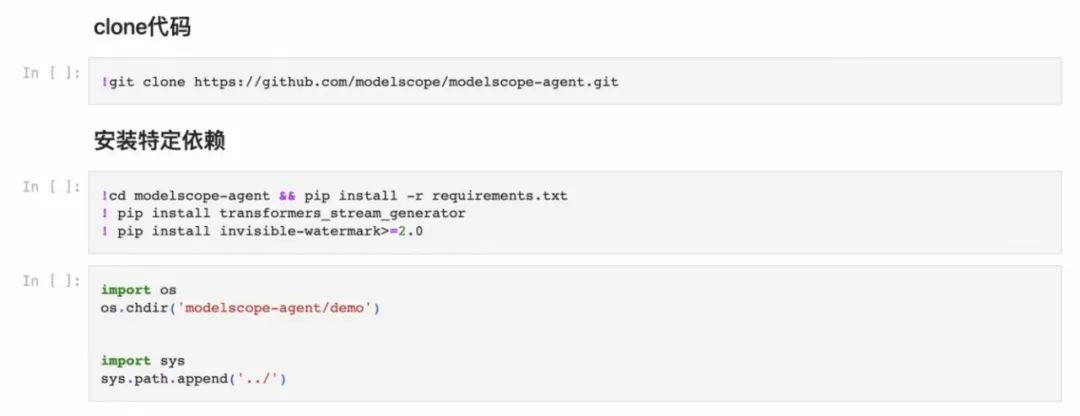

1. First pull the ModelScope-Agent code and install the relevant dependencies

2. You need to configure the config file, including ModelScope token and build API tools Search engine

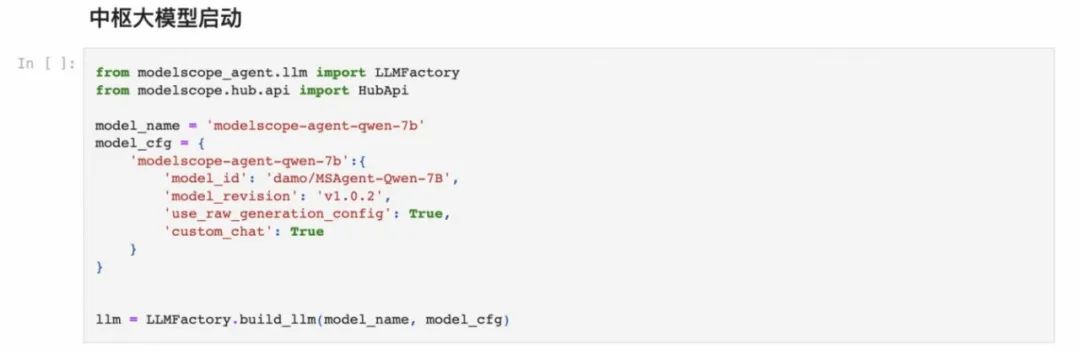

3. Start the central large model

4. Agent construction and use, relying on previously built large models, tool lists, tool retrieval and memory modules
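The core of step 4 is a loop: the LLM either requests a tool or answers, tool results are fed back, and the conversation is kept in memory. This is a minimal sketch of that loop, not the framework's agent class; `MiniAgent`, the `CALL name: arg` reply format, and `fake_llm` are hypothetical, and tool retrieval is left out for brevity.

```python
from typing import Callable, Dict, List


class MiniAgent:
    """Hypothetical agent loop: LLM decides, tool executes, result fed back."""

    def __init__(self, llm: Callable[[str], str],
                 tools: Dict[str, Callable[[str], str]], max_steps: int = 5):
        self.llm = llm
        self.tools = tools
        self.max_steps = max_steps
        self.history: List[str] = []  # simple conversation memory

    def run(self, query: str) -> str:
        self.history.append(f"User: {query}")
        for _ in range(self.max_steps):
            reply = self.llm("\n".join(self.history))
            if reply.startswith("CALL "):
                # Assumed format: "CALL <tool name>: <argument>"
                name, _, arg = reply[len("CALL "):].partition(":")
                result = self.tools[name.strip()](arg.strip())
                self.history.append(f"Observation: {result}")
                continue
            self.history.append(f"Assistant: {reply}")
            return reply
        return "Stopped: step limit reached"


def fake_llm(prompt: str) -> str:
    """Stand-in LLM: call a tool once, then answer from its observation."""
    last = prompt.splitlines()[-1]
    if last.startswith("Observation: "):
        return "Result was " + last[len("Observation: "):]
    return "CALL upper: hello"


agent = MiniAgent(fake_llm, {"upper": str.upper})
print(agent.run("shout hello"))
```

The `max_steps` cap guards against a model that keeps requesting tools without ever producing a final answer.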

Practice: registering a new tool

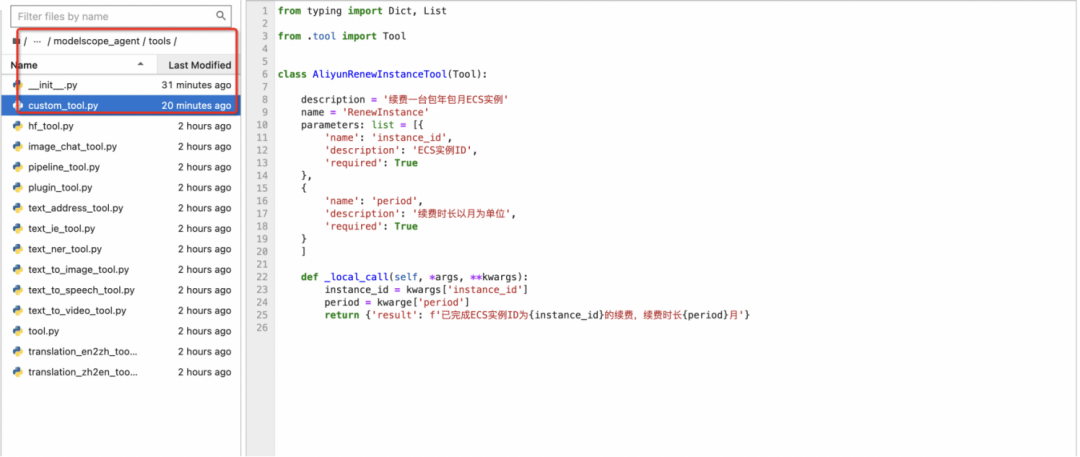

1. After pulling the ModelScope-Agent code, enter the modelscope_agent/tools directory and add a file named custom_tool.py at the code level. Configure the API's required description, name, and parameters in this file. At the same time, add two calling options: local_call (local call) and remote_call (remote call)

The content that needs to be rewritten is: 2 , Configuration environment and large model deployment refer to 2 and 3 of the previous chapter

3. Construct the newly registered tools into a list and add it to the Agent's construction process

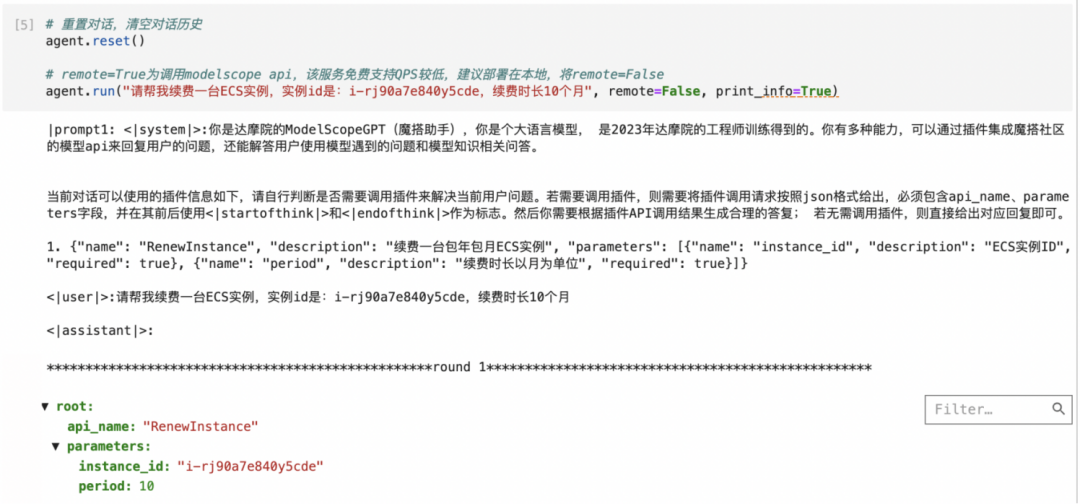

4. Use the agen.run() method and enter the query to test whether the tool can successfully call the corresponding API

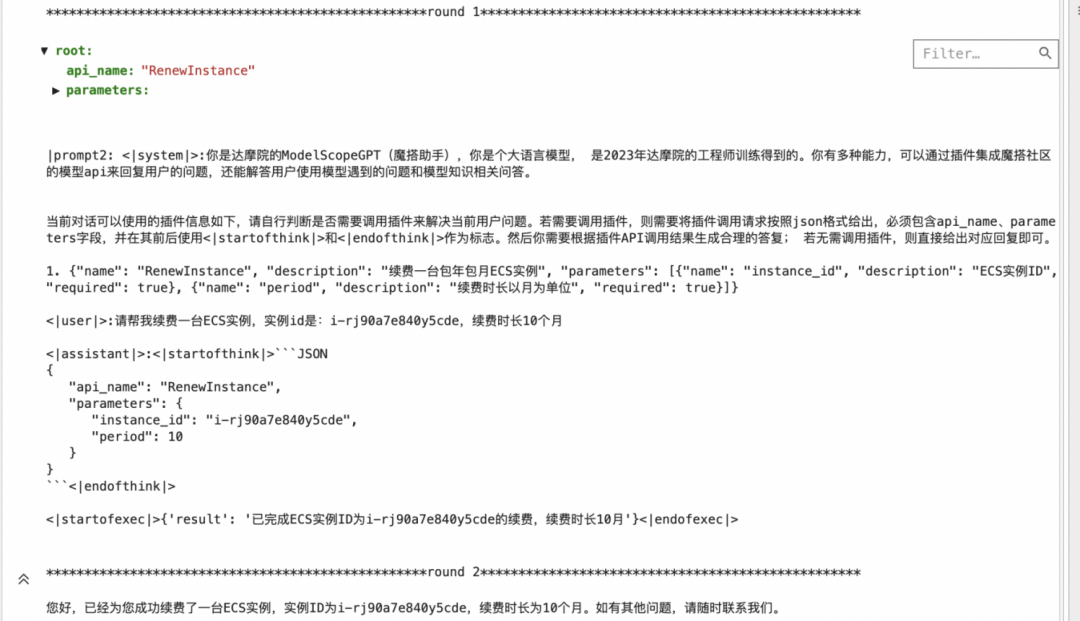

5. The agent will automatically call the corresponding API and return the execution result to the main model, and the main model will return a reply
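A tool with the fields described in step 1 (name, description, parameters, and local and remote call paths) might look like the standalone sketch below. It does not subclass the framework's real tool base class, whose exact interface should be checked in the repository; `WordCountTool` and the injected `remote` callable (standing in for an HTTP client) are hypothetical.

```python
from typing import Any, Callable, Dict, List, Optional


class WordCountTool:
    """Hypothetical custom tool: counts the words in a piece of text."""

    name = "word_count"
    description = "Count the number of words in a piece of text."
    parameters: List[Dict[str, Any]] = [
        {"name": "text", "description": "the text to count", "required": True},
    ]

    def __init__(self,
                 remote: Optional[Callable[[str, Dict[str, Any]], Any]] = None):
        # `remote` stands in for an HTTP client; None means "run locally".
        self.remote = remote

    def _validate(self, kwargs: Dict[str, Any]) -> None:
        missing = [p["name"] for p in self.parameters
                   if p["required"] and p["name"] not in kwargs]
        if missing:
            raise ValueError(f"missing required parameters: {missing}")

    def local_call(self, **kwargs: Any) -> Dict[str, Any]:
        return {"result": len(kwargs["text"].split())}

    def remote_call(self, **kwargs: Any) -> Dict[str, Any]:
        return self.remote(self.name, kwargs)

    def __call__(self, **kwargs: Any) -> Dict[str, Any]:
        self._validate(kwargs)
        if self.remote is not None:
            return self.remote_call(**kwargs)
        return self.local_call(**kwargs)


tool = WordCountTool()
print(tool(text="ModelScope agents can call tools"))
```

Validating required parameters before dispatch means a malformed call from the LLM fails with a clear message instead of an error deep inside the API. An instance like `tool` is what would go into the tool list given to the agent in step 3.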

One More Thing

Developers can follow the tutorials above to build their own agents easily. ModelScope-Agent is built on the ModelScope community and will support more new open-source LLMs in the future. More applications built on ModelScope-Agent are also planned, such as customer-service agents, personal-assistant agents, story agents, motion agents, and multi-agent (multimodal agent) applications.
