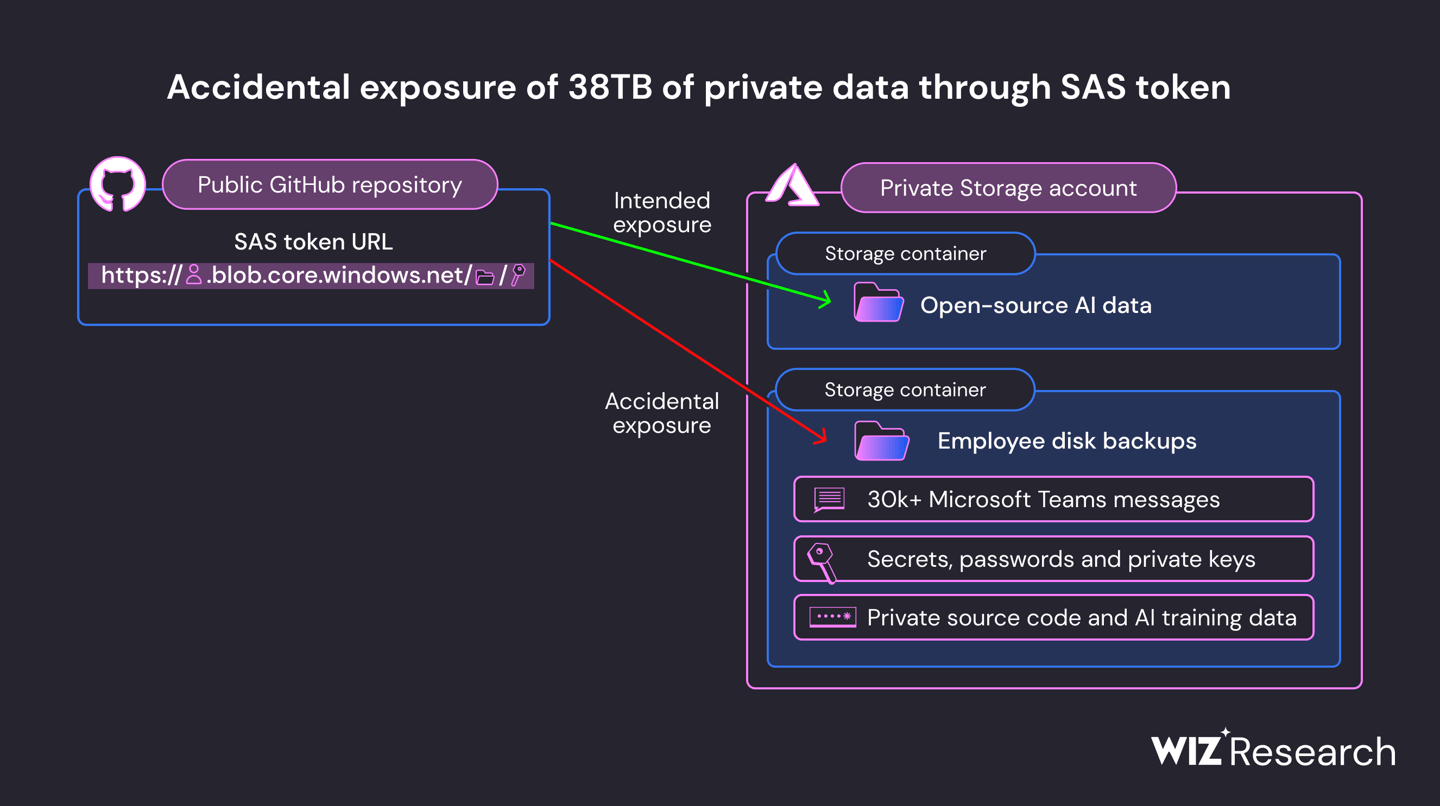

IT Home, September 18: Cloud security startup Wiz announced today that its research team had discovered a data leak in Microsoft AI's GitHub repository, caused by a misconfigured SAS (IT Home note: Shared Access Signature) token.

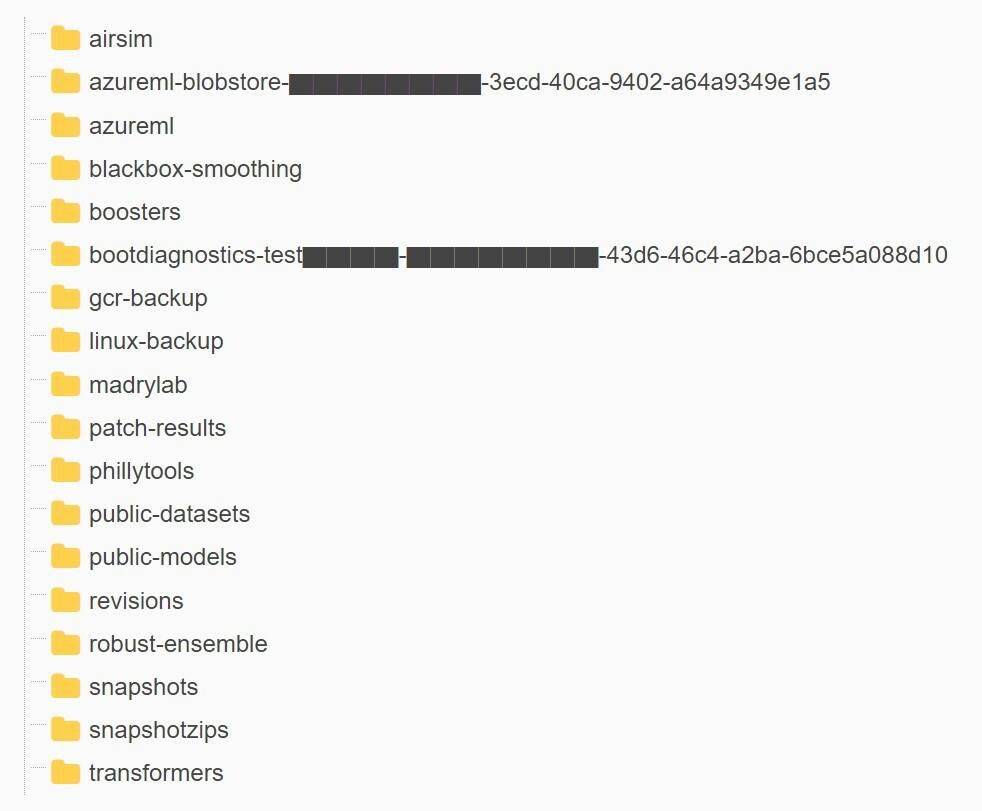

Specifically, Microsoft's AI research team published open source training data on GitHub but accidentally exposed 38TB of additional internal data, including disk backups of several Microsoft employees' personal PCs. The backups contained secrets, private keys, passwords, and more than 30,000 internal Microsoft Teams messages from hundreds of Microsoft employees.

This GitHub repository provides open source code and AI models for image recognition, and visitors are instructed to download the models from an Azure storage URL. However, Wiz discovered that the URL was configured to grant permissions on the entire storage account, exposing additional private data. The URL, which had reportedly exposed the data since 2020, was also misconfigured to allow "full control" rather than "read-only" permissions, meaning anyone who knew where to look could potentially delete or overwrite the data, or inject malicious content into it.
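To make the misconfiguration concrete, here is a minimal Python sketch of how one might inspect a SAS URL before publishing it. It relies on the standard SAS query parameters `sp` (signed permissions), `se` (signed expiry) and `srt` (present on account-level tokens). The function name `audit_sas_url`, the 7-day threshold, and the example URL are hypothetical and are not taken from Wiz's or Microsoft's reports; the sketch also assumes the expiry uses the full `YYYY-MM-DDThh:mm:ssZ` form.

```python
from datetime import datetime, timezone
from urllib.parse import urlparse, parse_qs

# Permission letters that only allow reading or listing data.
READ_ONLY = set("rl")


def audit_sas_url(url, now=None, max_days=7):
    """Return a list of warnings for a SAS URL (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    params = parse_qs(urlparse(url).query)
    warnings = []

    # 'sp' holds the signed permissions, e.g. "r" or "racwdl".
    perms = set(params.get("sp", [""])[0])
    extra = perms - READ_ONLY
    if extra:
        warnings.append("grants more than read/list: " + "".join(sorted(extra)))

    # 'srt' appears on account-level SAS tokens, which span the whole account.
    if "srt" in params:
        warnings.append("account-level SAS (covers the whole storage account)")

    # 'se' holds the signed expiry; this sketch assumes a full UTC timestamp.
    expiry_raw = params.get("se", [None])[0]
    if expiry_raw is None:
        warnings.append("no expiry found")
    else:
        expiry = datetime.strptime(expiry_raw, "%Y-%m-%dT%H:%M:%SZ")
        expiry = expiry.replace(tzinfo=timezone.utc)
        if (expiry - now).days > max_days:
            warnings.append(f"expires in more than {max_days} days: {expiry_raw}")
    return warnings


if __name__ == "__main__":
    # Made-up URL for illustration only; not the token from the incident.
    example = (
        "https://example.blob.core.windows.net/models"
        "?sv=2019-12-12&ss=b&srt=sco&sp=racwdl"
        "&se=2021-10-05T00:00:00Z&sig=REDACTED"
    )
    for warning in audit_sas_url(example):
        print("WARNING:", warning)
```

A check like this only reads the token's own parameters; it cannot tell whether the token has been revoked, so it is best treated as a pre-publication sanity check rather than a full audit.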

Wiz reported the issue to Microsoft on June 22. Microsoft revoked the SAS token two days later, on June 24, and said it completed its investigation into the potential organizational impact on August 16.

The specific timeline of the entire incident is as follows:

July 20, 2020 - SAS token first committed to GitHub; expiry date set for October 5, 2021
