Fudan NLP team released an 80-page overview of large-scale model agents, providing an overview of the current situation and future of AI agents in one article

Paper link: https://arxiv.org/pdf/2309.07864.pdf

LLM-based Agent paper list: https://github.com/WooooDyy/LLM-Agent-Paper-List

Designing agents has long been a focus of the artificial intelligence community. However, past work has mainly focused on enhancing specific abilities of agents, such as symbolic reasoning or mastery of specific tasks (chess, Go, etc.). These studies concentrate on algorithm design and training strategies while neglecting the model's inherent general capabilities, such as knowledge memory, long-term planning, effective generalization, and efficient interaction.

The emergence of large language models (LLMs) brings hope for the further development of intelligent agents. If the path from NLP to AGI is divided into five levels (corpus, Internet, perception, embodiment, and social attributes), then current LLMs have reached the second level, with Internet-scale text input and output. On this basis, giving LLM-based agents a perception space and an action space would bring them to the third and fourth levels. Furthermore, when multiple agents interact and cooperate to solve more complex tasks, or exhibit social behaviors found in the real world, they have the potential to reach the fifth level: an agent society.

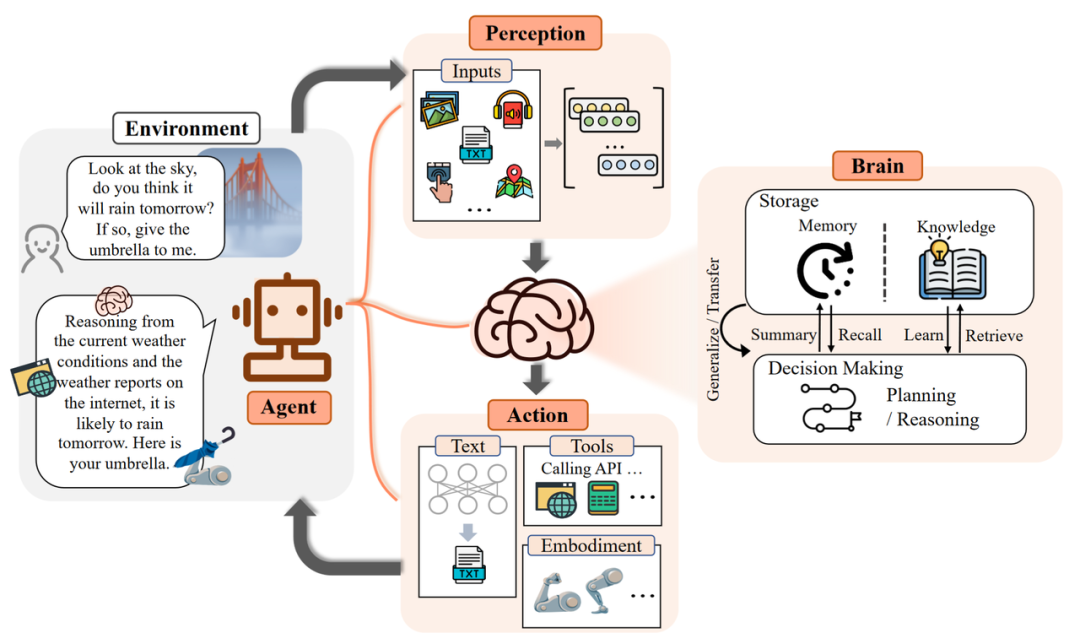

- Control end (brain): Usually built on an LLM, this is the core of the agent. It not only stores memory and knowledge but also performs indispensable functions such as information processing and decision-making. It can expose its reasoning and planning process and handle unseen tasks well, reflecting the agent's generalization and transferability.

- Perception end: Expands the agent's perception space from pure text to multimodal inputs such as text, vision, and hearing, so that the agent can more effectively obtain and use information from its surroundings.

- Action end: Beyond regular text output, the agent is given embodied capabilities and the ability to use tools, allowing it to better adapt to environmental changes, interact with the environment through feedback, and even shape it.
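To make the division of labor concrete, here is a minimal sketch of one perceive-decide-act step. It is not the survey's implementation: the `Agent` class, its method names, and `toy_brain` (a rule-based placeholder where a real agent would call an LLM) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Agent:
    # Hypothetical: stands in for an LLM call that maps (observation, memory) to a decision.
    brain: Callable[[str, List[str]], str]
    memory: List[str] = field(default_factory=list)

    def perceive(self, raw_observation: dict) -> str:
        # Perception end: flatten multimodal input (keyed by modality) into text.
        parts = [f"{modality}: {content}" for modality, content in sorted(raw_observation.items())]
        return "; ".join(parts)

    def act(self, decision: str) -> str:
        # Action end: in this sketch an action is just a normalized text command.
        return decision.strip().lower()

    def step(self, raw_observation: dict) -> str:
        observation = self.perceive(raw_observation)
        decision = self.brain(observation, self.memory)
        self.memory.append(observation)  # the brain keeps a record of what it saw
        return self.act(decision)


def toy_brain(observation: str, memory: List[str]) -> str:
    # Rule-based placeholder for an LLM decision.
    if "door is closed" in observation:
        return "OPEN DOOR"
    return "WAIT"


agent = Agent(brain=toy_brain)
print(agent.step({"vision": "the door is closed", "audio": "silence"}))  # open door
print(agent.step({"vision": "the door is open"}))  # wait
```

Keeping the brain as an injected callable means a real LLM client could replace `toy_brain` without touching the perception or action code.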

- High-quality text generation: Extensive evaluations show that LLMs can generate fluent, diverse, novel, and controllable text. Although performance is weaker in some individual languages, their overall multilingual ability is good.

- Understanding implied meaning: Beyond what is stated explicitly, language may also convey information such as the speaker's intentions and preferences. Understanding this implied meaning helps agents communicate and cooperate more efficiently, and large models have already shown potential in this regard.

- Extending the backbone's length limit: Modifying the architecture to address the inherent sequence-length limit of Transformers.

- Summarizing: Summarize the memory to enhance the agent's ability to extract key details from the memory.

- Compressing: Compressing memories into vectors or appropriate data structures can improve retrieval efficiency.
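As an illustration of compressing memories into vectors for retrieval, the sketch below stores each memory alongside a vector and returns the most similar entries by cosine similarity. The bag-of-words "embedding" is an assumption made to keep the example self-contained; practical agents typically use a learned text encoder. `VectorMemory`, `embed`, and `cosine` are hypothetical names.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Assumption: bag-of-words counts stand in for a learned embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    def __init__(self) -> None:
        self.entries: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Store the raw memory together with its vector form.
        self.entries.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 1) -> List[str]:
        # Rank stored memories by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


mem = VectorMemory()
mem.add("the key is under the red mat")
mem.add("the user prefers tea over coffee")
mem.add("the meeting moved to friday")
print(mem.retrieve("where is the key"))  # ['the key is under the red mat']
```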

- Plan formulation: The agent breaks a complex task down into more manageable subtasks, for example by decomposing once and then executing in sequence, planning and executing step by step, or planning multiple paths and selecting the best one. In scenarios that require professional knowledge, agents can be integrated with domain-specific planner modules to enhance their capabilities.

- Plan reflection: After formulating a plan, the agent can reflect on it and evaluate its strengths and weaknesses. This reflection generally draws on three sources: internal feedback mechanisms, feedback from interacting with humans, and feedback from the environment.
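The sketch below combines the simplest formulation strategy above (decompose once, then execute in sequence) with reflection driven by environment feedback. The decomposition table, the "(revised)" retry rule, and the `kitchen` environment are hypothetical placeholders for what an LLM planner and a real environment would supply.

```python
from typing import Callable, Dict, List


def decompose(task: str) -> List[str]:
    # Hypothetical one-shot decomposition; an LLM planner would generate this list.
    plans: Dict[str, List[str]] = {
        "make tea": ["boil water", "add tea leaves", "pour water", "serve"],
    }
    return plans.get(task, [task])


def execute(plan: List[str], env: Callable[[str], bool], max_retries: int = 1) -> List[str]:
    log: List[str] = []
    for step in plan:
        ok = env(step)
        retries = 0
        while not ok and retries < max_retries:
            # Reflection on environment feedback: revise the failed step and retry.
            step = f"{step} (revised)"
            ok = env(step)
            retries += 1
        log.append(f"{step}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # give up on this plan and hand control back to the planner
    return log


def kitchen(step: str) -> bool:
    # Toy environment: the first, unrevised attempt at boiling water fails.
    return step != "boil water"


for line in execute(decompose("make tea"), kitchen):
    print(line)
```

Feedback from humans or from an internal critic could be plugged in the same way, by swapping the `env` callable.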

- Generalization to unseen tasks: As model scale and training data grow, LLMs have shown remarkable ability to solve unseen tasks. Instruction-tuned large models perform well in zero-shot tests, matching expert models on many tasks.

- In-context learning: Large models can learn by analogy from a small number of examples given in the context, and this ability extends to multimodal scenarios beyond text, opening up more possibilities for agent applications in the real world.

- Continual learning: The main challenge of continual learning is catastrophic forgetting: when a model learns a new task, it easily loses knowledge of past tasks. Agents specialized in a particular domain should avoid losing general-domain knowledge.
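In-context learning needs no weight updates: the demonstrations are placed directly in the prompt. A minimal sketch of assembling such a few-shot prompt follows; the "Input:/Output:" layout and the function name are assumptions, and sending the prompt to a model is not shown.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    # Each demonstration shows the input-output pattern the model should continue.
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    # The query is left with an empty output for the model to complete.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


demos = [("cat", "chat"), ("dog", "chien")]
print(build_few_shot_prompt(demos, "bird"))
```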

- Converting visual input into a text description (image captioning): The resulting text can be understood directly by LLMs and is highly interpretable.

- Encoding visual information: A perception module is formed by combining a visual foundation model with an LLM, and alignment operations let the model understand content across modalities; this setup can be trained end-to-end.

- Observation helps an agent in its environment locate itself, perceive objects and items, and obtain other environmental information.

- Manipulation means completing specific operations such as grabbing and pushing.

- Navigation requires the agent to change its position according to the task goal and update its state based on environmental information.

Agents that can accept natural-language commands from humans and perform daily tasks are currently popular with users and have high practical value. The authors first describe the diverse application scenarios of a single agent and the capabilities each scenario requires.

In this article, the application of a single intelligent agent is divided into the following three levels:

- Task-oriented deployment: Agents help human users handle basic daily tasks. They need basic instruction understanding, task decomposition, and the ability to interact with the environment. Based on existing task types, practical applications fall into simulated web environments and simulated life scenarios.

- Innovation-oriented deployment: Agents can show potential for independent inquiry in cutting-edge scientific fields. Although the inherent complexity of specialized fields and the lack of training data hinder the construction of such agents, a good deal of work has already made progress in fields such as chemistry, materials science, and computer science.

- Lifecycle-oriented deployment: Agents can continuously explore, learn, and use new skills in an open world, and survive for a long time. The authors take the game Minecraft as an example: because survival in the game can be seen as a microcosm of the real world, many researchers use it as a platform to develop and test agents' comprehensive capabilities.

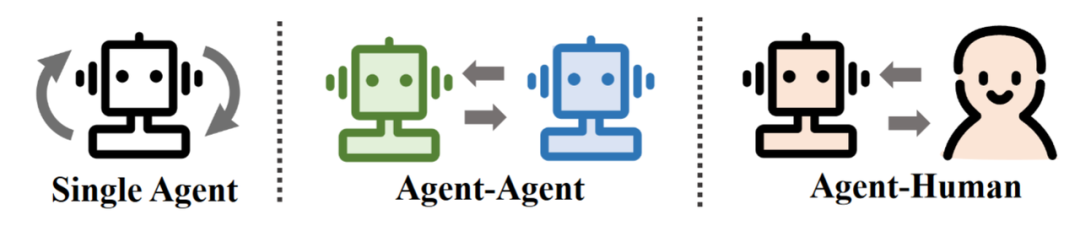

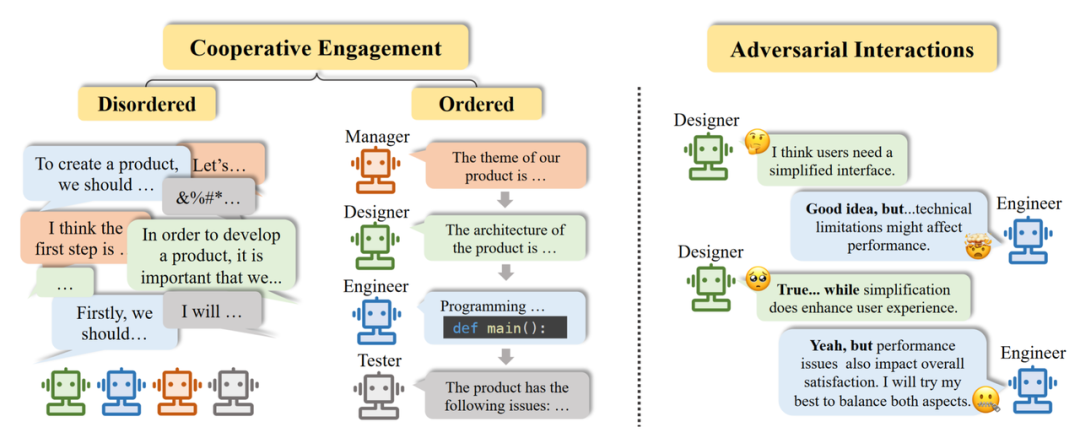

- When all agents freely express their views and opinions and cooperate in a non-sequential manner, it is called disordered cooperation.

- When all agents follow certain rules, such as expressing their opinions one by one in the form of an assembly line, the entire cooperation process is orderly, which is called orderly cooperation.
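The difference between the two cooperation styles can be sketched as two turn-taking policies. The agent names and their canned replies are hypothetical; in a real system each speaker would be an LLM-backed agent reading the shared transcript.

```python
import random
from typing import Callable, Dict, List

Speaker = Callable[[List[str]], str]


def ordered_round(agents: Dict[str, Speaker], transcript: List[str]) -> List[str]:
    # Orderly cooperation: agents speak one by one in a fixed, pipeline-like order.
    for name, speak in agents.items():
        transcript.append(f"{name}: {speak(transcript)}")
    return transcript


def disordered_round(agents: Dict[str, Speaker], transcript: List[str],
                     turns: int, rng: random.Random) -> List[str]:
    # Disordered cooperation: at every turn any agent may speak, in no fixed sequence.
    names = list(agents)
    for _ in range(turns):
        name = rng.choice(names)
        transcript.append(f"{name}: {agents[name](transcript)}")
    return transcript


agents: Dict[str, Speaker] = {
    "planner": lambda t: f"proposal {len(t)}",
    "coder": lambda t: f"implements {len(t)}",
    "reviewer": lambda t: f"reviews {len(t)}",
}
print(ordered_round(agents, []))
# ['planner: proposal 0', 'coder: implements 1', 'reviewer: reviews 2']
print(disordered_round(agents, [], turns=3, rng=random.Random(0)))
```

Passing an explicit `random.Random` keeps the disordered run reproducible for testing.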

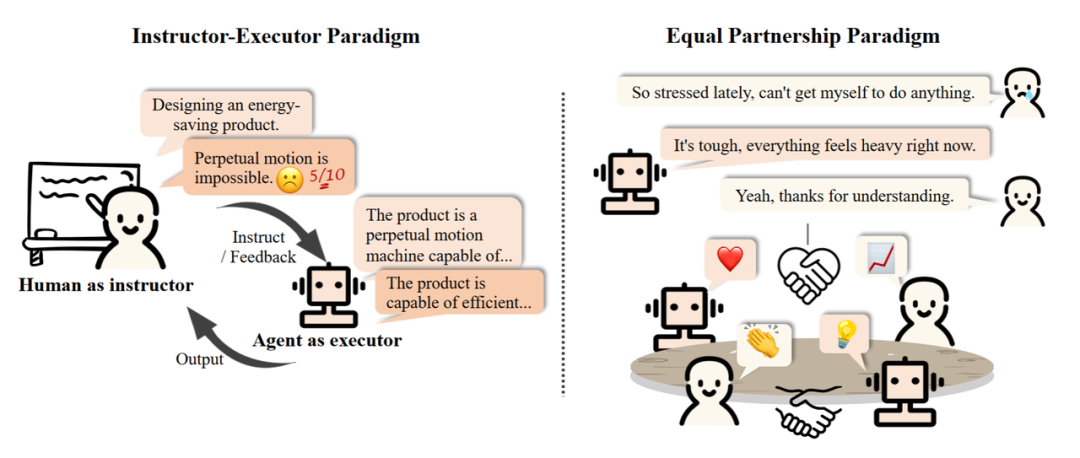

- Instructor-Executor mode: Humans act as instructors, giving instructions and feedback, while agents act as executors, adjusting and optimizing step by step according to the instructions. This mode has been widely used in education, medicine, business, and other fields.

- Equal Partnership mode: Some studies have observed that agents can show empathy when communicating with humans, or participate in task execution as equals. Agents show potential for everyday applications and may eventually be integrated into human society.

For a long time, researchers have dreamed of building an "interactive artificial society." From the sandbox game The Sims to the "Metaverse," people's definition of a simulated society can be summarized as individuals living and interacting in an environment.

In the article, the authors use a diagram to describe the conceptual framework of Agent society:

- Left: At the individual level, agents exhibit a variety of internalized behaviors, such as planning, reasoning, and reflection. They also exhibit intrinsic personality traits spanning cognition, emotion, and character.

- Middle: A single agent can form a group with other agents to jointly exhibit group behaviors such as cooperation.

- Right: The environment can be a virtual sandbox or the real physical world. Its elements include human actors and various available resources. For a single agent, other agents are also part of the environment.

- Overall interaction: Agents take an active part in the whole interaction process by perceiving the external environment and taking actions.
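A small sketch of the point that, from one agent's perspective, other agents are part of the environment, and that agents change the environment through their actions. The `Environment` class, its two actions, and the resource model are hypothetical simplifications, not a framework from the survey.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Environment:
    resources: Dict[str, int]
    positions: Dict[str, str] = field(default_factory=dict)

    def observe(self, agent: str) -> Dict[str, object]:
        # Other agents appear in an agent's observation just like resources do.
        others = {name: loc for name, loc in self.positions.items() if name != agent}
        return {"self": self.positions.get(agent), "others": others,
                "resources": dict(self.resources)}

    def apply(self, agent: str, action: str, target: str) -> None:
        # Actions feed back into the shared environment state.
        if action == "move":
            self.positions[agent] = target
        elif action == "take" and self.resources.get(target, 0) > 0:
            self.resources[target] -= 1


env = Environment(resources={"wood": 2})
env.apply("alice", "move", "forest")
env.apply("bob", "move", "village")
env.apply("alice", "take", "wood")
print(env.observe("alice"))
# {'self': 'forest', 'others': {'bob': 'village'}, 'resources': {'wood': 1}}
```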

The article examines the agent's performance in society from the perspective of external behavior and internal personality:

Social behavior:

- Group behavior refers to behavior that arises when two or more agents interact spontaneously. It includes positive behaviors such as collaboration, negative behaviors such as conflict, and neutral behaviors such as conformity and onlooking.

Personality:

- Emotional intelligence: Involves subjective feelings and emotional states such as joy, anger, and sorrow, as well as the ability to show sympathy and empathy.

- Character portrayal: To understand and analyze the personality traits of LLMs, researchers have used established assessment methods such as the Big Five personality test and the MBTI to explore the diversity and complexity of their personalities.

Simulated social operating environment

Agent society is not only composed of independent individuals, but also includes the environment in which they interact. The environment influences how agents perceive, act, and interact. In turn, agents also change the state of the environment through their actions and decisions. For an individual agent, the environment includes other autonomous agents, humans, and available resources.

Text-based environments:

Virtual sandbox environment:

- Scalability: Various different scenarios (Web, games, etc.) can be built and deployed to conduct various experiments, providing a broad space for agents to explore.

Real physical environment:
