Is AI the only future for graphics cards? An in-depth analysis of the "outrageous remarks" made by NVIDIA executives

In recent days, NVIDIA representatives have argued that "native resolution gaming is dead", which has once again caused concern in the player community. The remarks came from a roundtable discussion in which several NVIDIA executives and Jakub Knapik of game developer CD Projekt Red discussed the role of Deep Learning Super Sampling (DLSS) in improving game frame rates and optimizing rendering.

Game developers like CD Projekt Red, which pursue the best possible image quality but have a mediocre record on optimization, naturally welcome the widespread use of deep learning and AI super-resolution algorithms on graphics cards.

As Jakub Knapik put it, DLSS, as a representative frame-rate-boosting technology, "subtly adds more performance margin to modern GPUs". This lets games like "Cyberpunk 2077" confidently adopt the latest ray tracing techniques and makes their graphics "more realistic and detailed".

However, Bryan Catanzaro, NVIDIA's Vice President of Applied Deep Learning Research, claimed that "native resolution is no longer the best solution for maximum graphics fidelity in games" and that the game graphics industry is moving toward greater reliance on AI image reconstruction and AI-based rendering. That makes people feel a little uneasy.

The NVIDIA executive went further, explaining that improving graphics fidelity through brute force is no longer an ideal solution. He said that only smarter technology can work around the slowing pace of performance gains in today's graphics hardware.

At first glance, these statements seem to make sense, because everyone knows that DLSS, especially from DLSS 3 onward, is extremely "powerful". Once it is turned on, the game frame rate can multiply. But the question is: as someone who focuses on deep learning research, does Catanzaro really "understand" graphics cards and the way GPU raster performance has developed?

According to the actual data, the monitor is not to blame in the first place

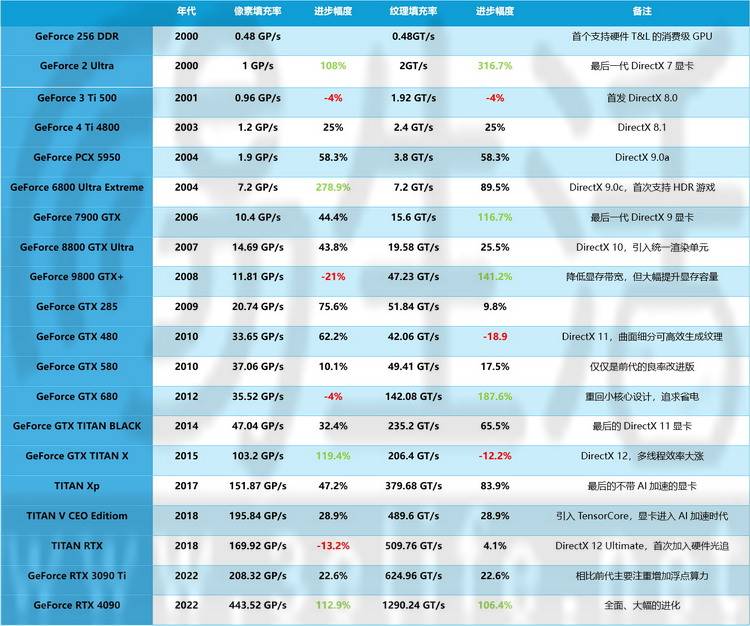

To study how the traditional raster performance of GPUs has developed over time, we at Sanyi Life collected public data and compiled a comparison table of pixel and texture fill rates for NVIDIA consumer-grade graphics cards from 2000 to the present. In the table, red text marks a performance regression, and green text marks a generation whose performance grew by more than 100%.

Two things are clear from this table. First, consumer-grade GPUs have regressed in raster performance more than once in their history. Second, looking at the overall trend, the raster performance of the latest flagship GPUs is thousands of times higher than that of products from 22 years ago.
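The red and green marking rule described for the table can be sketched as a small generation-over-generation calculation. The function name `classify_generations` and the sample numbers are hypothetical placeholders for illustration only; they are not the figures from the table, which is available here only as an image.

```python
def classify_generations(fill_rates):
    """Label each generation relative to the previous one.

    Returns a list of (percent_change, label) tuples, where label is
    "red" for a regression, "green" for growth above 100%, and
    "plain" otherwise. This mirrors the marking rule described in the
    article; it is a sketch, not the authors' actual method.
    """
    results = []
    for prev, curr in zip(fill_rates, fill_rates[1:]):
        change = (curr - prev) / prev * 100.0
        if change < 0:
            label = "red"      # performance went backwards
        elif change > 100:
            label = "green"    # more than doubled in one generation
        else:
            label = "plain"
        results.append((change, label))
    return results


# Made-up placeholder fill rates (arbitrary units), NOT real GPU data.
sample = [100, 80, 200, 250]
for change, label in classify_generations(sample):
    print(f"{change:+.1f}% -> {label}")
```

With these placeholder values, the second generation would be marked red and the third green, which is the pattern the article goes on to discuss.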

Some readers may object that although GPU raster performance has improved, game textures are now more complex than before, today's monitors are often 4K with high refresh rates, and users expect more performance. So isn't it normal for performance to fall short?

Actually, this is not normal at all. As early as around 2000, the top models among the bulky CRT monitors of the time could already reach a resolution of 2304*1440. That is not much lower than today's mainstream "2K" monitors, and it is roughly 40 percent of the pixel count of 4K.

As early as 2003, PC enthusiasts could buy the T221 4K LCD monitor (an IBM design that was also sold under the ViewSonic brand), which had a resolution of 3840*2400. That is higher than most 4K monitors 20 years later.

In other words, display resolution has not improved as dramatically as many people imagine. We can therefore rule out the idea that advances in monitor resolution are what make graphics card performance insufficient.
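The resolution comparison above is simple arithmetic and can be checked with a short script. This is a minimal sketch that assumes "2K" means 2560*1440 and "4K" means 3840*2160, since the article does not spell those values out; the dictionary keys and helper names are illustrative.

```python
# Resolutions mentioned in the article, plus two assumed modern baselines.
RESOLUTIONS = {
    "CRT (c. 2000)": (2304, 1440),
    "2K (assumed 2560x1440)": (2560, 1440),
    "4K (assumed 3840x2160)": (3840, 2160),
    "T221": (3840, 2400),
}


def pixel_count(width, height):
    """Total number of pixels the GPU must fill per frame."""
    return width * height


def ratio(name_a, name_b):
    """Pixel count of display A as a fraction of display B."""
    return pixel_count(*RESOLUTIONS[name_a]) / pixel_count(*RESOLUTIONS[name_b])


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {pixel_count(w, h):,} pixels")

print(f"CRT vs 2K: {ratio('CRT (c. 2000)', '2K (assumed 2560x1440)'):.0%}")
print(f"CRT vs 4K: {ratio('CRT (c. 2000)', '4K (assumed 3840x2160)'):.0%}")
print(f"T221 vs 4K: {ratio('T221', '4K (assumed 3840x2160)'):.0%}")
```

Under these assumptions, the per-frame pixel load has grown by a factor of about 2.5 from the best CRTs to 4K, which is small next to the growth in raster performance claimed for the same period.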

The real problem may lie in the graphics API

So why do some people feel that the raster performance of modern GPUs is "not enough"? Let's go back to the table above and look at the data more closely.

Some readers may have noticed that, historically, almost every regression in the raster (pixel and texture) performance of new GPUs "happened" to coincide with a DirectX API upgrade. More specifically, when the API changes and PC games gain new visual effects and image processing techniques, the hardware design of graphics cards may take a step backwards.

Why is this? On the one hand, a new API often requires a completely redesigned GPU architecture, and the manufacturer's first design for it may be immature, so raster performance declines. The table shows that a product whose performance regressed at such a transition is usually followed by new products with a substantial improvement, which indirectly confirms that the early design was indeed immature.

The upgrade of the graphics API has, to some extent, covered up the temporary decline in graphics card raster performance

On the other hand, when PC games move to a new API, consumers can only enjoy the visual advances of the new technology by buying a new graphics card. If you are still using an old model, at best the image quality will be compromised, and at worst you will not be able to run games built for the new API at all.

In this context, because the new visual effects tied to the new API (such as HDR, tessellation, and ray tracing) are "exclusive" to new hardware, there is no real way to compare the performance of new and old GPU generations in games. The old card either cannot run the new games at all or, if it can, cannot enable the new graphics effects. As a result, even if a new generation of GPUs regresses in raster performance, consumers often do not notice.

DX12.2, which focuses on ray tracing, has actually been around for four years

But the problem is that today's Windows graphics API has not been significantly updated for at least four years, or perhaps as long as eight. This means that a new GPU generation can no longer distinguish itself through new visual effects. It can only compete on resolution, frame rate, and anti-aliasing level, that is, on raw raster performance.

Are these remarks from NVIDIA just a smoke bomb, or do they mean something?

What is more interesting is that the table above makes it obvious that NVIDIA is not struggling nearly as much as it claims. The RTX 4090 is in fact the flagship with the largest raster performance improvement, and the most generous generational upgrade, in many years.

This creates a strange situation. On one hand, NVIDIA claims that "the next generation of products is becoming more and more difficult to develop, and we need to change our thinking". On the other, it has just launched a product that follows the traditional approach entirely and still makes huge progress.

With this in mind, if we look back at the intent behind these remarks, only a few possibilities remain.

The first possibility is that the "Vice President of Applied Deep Learning Research" does not really understand the graphics side of GPU design. He mistakenly believes that the technical development of his company's own products is about to hit a wall, when in fact it is not.

The second possibility is that his position obliges him to say these things and to emphasize the importance of AI and DLSS. Even though the RTX 4090 is already powerful enough in most games that AI upscaling is unnecessary, he still has to say it.

Of course, we cannot rule out the possibility that these remarks are simply a "smoke bomb", and that NVIDIA is deliberately spreading misleading information to confuse its competitors.

Going one step further, suppose the huge performance improvement of the RTX 4090 generation reflects NVIDIA having "fully mastered the characteristics of DX12.2". Do its ultra-high raster performance and the "raster is useless" argument from NVIDIA representatives, taken together, imply that a next-generation PC graphics API relying heavily on AI computing power is already in development and may not be far from release?

[Some pictures in this article are from the Internet]
