AI technology explodes exponentially: computing power increased 680 million times in 70 years, witnessed in 3 historical stages

Electronic computers appeared in the 1940s, and within 10 years the first AI application in human history had followed.

AI models have now been developed for more than 70 years. Today they can not only write poetry but also generate images from text prompts, and even help humans discover previously unknown protein structures.

AI technology has grown exponentially in this relatively short period. What explains this?

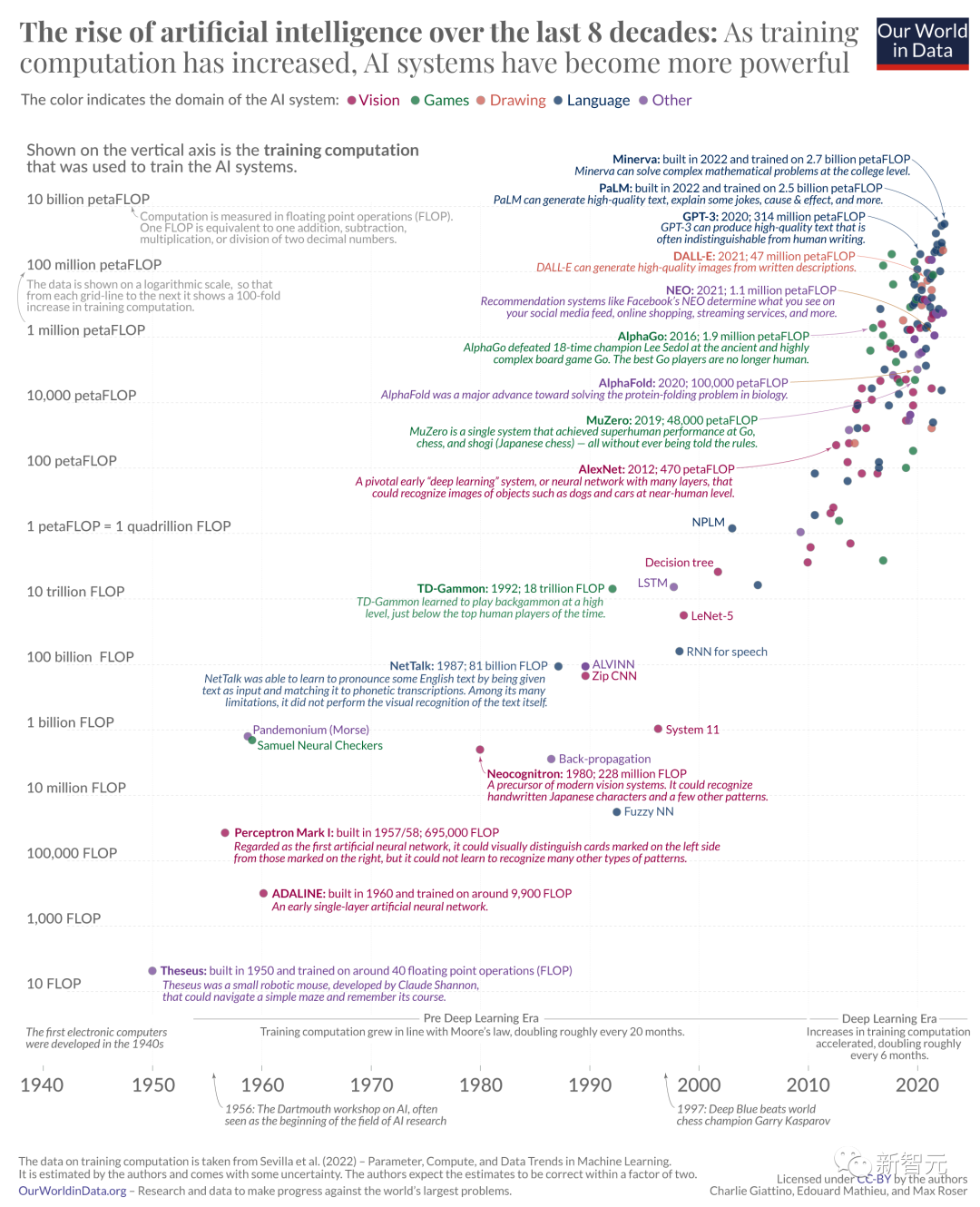

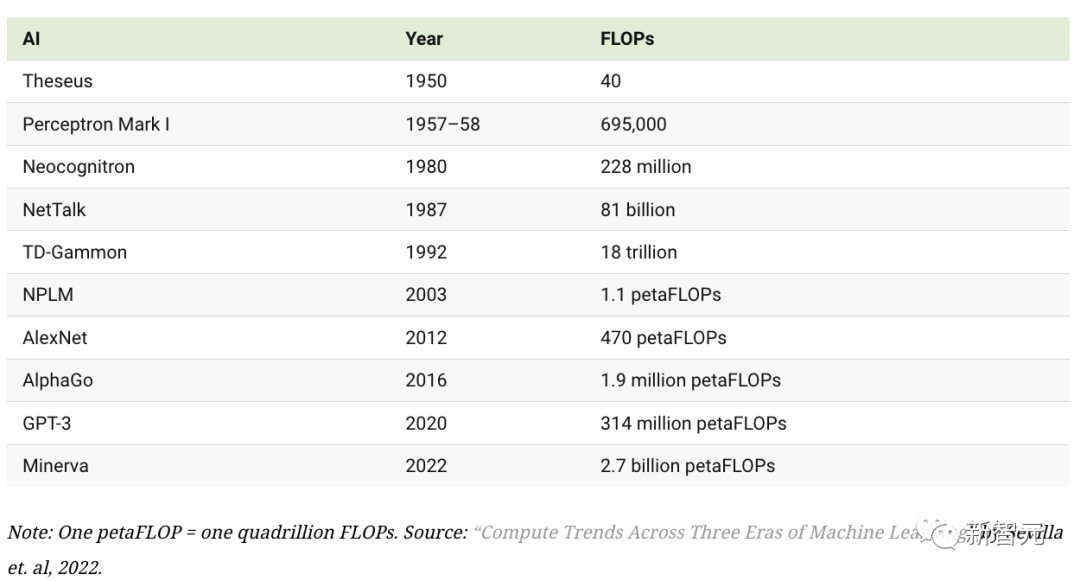

This long chart, drawing on data from Our World in Data, traces the history of AI development using the computing power used to train AI models as its yardstick.

High-resolution image: https://www.visualcapitalist.com/wp-content/uploads/2023/09/01.-CP_AI-Computation-History_Full-Sized.html

The data come from a paper by researchers at MIT and other universities.

Paper link: https://arxiv.org/pdf/2202.05924.pdf

Alongside the paper, a research team also built an interactive chart from its data. Users can zoom in and out of the chart freely to see more detailed data.

Interactive chart: https://epochai.org/blog/compute-trends#compute-trends-are-slower-than-previously-reported

The chart's authors estimate the training compute of each model mainly by counting the number of operations performed or from the GPU time used. To decide which models to include as milestone systems, they rely on three properties:

Significance: the system had a major historical impact, significantly improved the state of the art (SOTA), or has been cited more than 1,000 times.

Relevance: the authors include only papers that contain experimental results and a key machine learning component, and whose goal is to advance the existing SOTA.

Uniqueness: if a more influential paper describes the same system, the less influential paper is removed from the dataset.
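The two estimation approaches mentioned above, counting operations and using GPU time, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: the "6 × parameters × training examples" rule of thumb for dense networks and the utilization factor are common approximations assumed here, and every model size, GPU count and throughput value below is hypothetical.

```python
"""Two common ways to estimate a model's training compute, in FLOPs.

Assumptions (not taken from the article): the 6 * params * examples rule of
thumb for dense networks, and a hardware utilization factor supplied by the user.
"""


def compute_from_operations(n_params: float, n_training_examples: float) -> float:
    """Operation counting: ~2 FLOPs per parameter per example for the forward
    pass, and roughly twice that for the backward pass, ~6 FLOPs in total."""
    return 6 * n_params * n_training_examples


def compute_from_gpu_time(
    n_gpus: int,
    training_hours: float,
    peak_flop_per_second: float,
    utilization: float,
) -> float:
    """GPU-time method: total hardware time multiplied by the fraction of
    peak throughput that was actually achieved (utilization)."""
    seconds = training_hours * 3600
    return n_gpus * seconds * peak_flop_per_second * utilization


if __name__ == "__main__":
    # Hypothetical model: 1e9 parameters trained on 2e10 examples (tokens).
    print(f"operation counting: {compute_from_operations(1e9, 2e10):.2e} FLOPs")
    # Hypothetical run: 64 GPUs for 100 hours at 1e14 FLOP/s peak, 30% utilization.
    print(f"GPU time: {compute_from_gpu_time(64, 100, 1e14, 0.3):.2e} FLOPs")
```

The two methods rarely agree exactly, since the utilization factor is usually a guess; cross-checking one against the other is a reasonable sanity test when both kinds of information are published.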

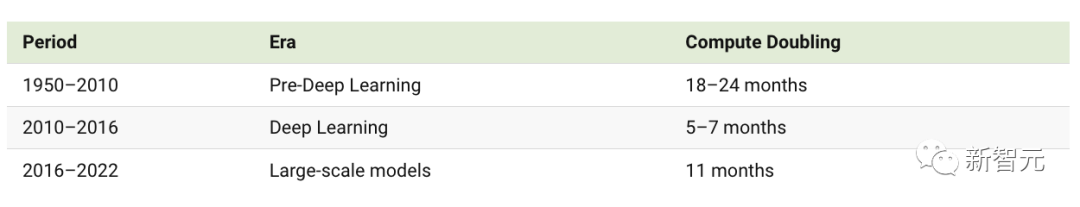

Three eras of AI development

In the 1950s, American mathematician Claude Shannon built a robotic mouse named Theseus that could navigate a maze and remember its path. This was the first instance of artificial learning.

Theseus was built on just 40 floating-point operations (FLOPs). Here FLOPs is a count of the total operations used in training; the related rate, floating-point operations per second, is a common measure of computer hardware performance. The higher the FLOP count, the more computation went into the system and the more powerful it is.

The advancement of AI relies on three key elements: computing power, available training data, and algorithms. In the early decades of AI development, the compute used to train AI systems grew roughly in line with Moore's Law, doubling approximately every 20 months.

However, after the arrival of AlexNet (an image recognition AI) in 2012 marked the beginning of the deep learning era, this doubling time shrank dramatically to about six months as researchers increased their investment in computing and processors.
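To see how much the shift from a 20-month to a six-month doubling time matters, note that if compute doubles every T months, after t months it has grown by a factor of 2^(t/T). The sketch below applies this to the two doubling times in the text; the ten-year window is an illustrative choice, and the helper name is hypothetical.

```python
"""Growth factor implied by a fixed compute doubling time.

If compute doubles every T months, after t months it has grown by 2 ** (t / T).
"""


def growth_factor(months: float, doubling_time_months: float) -> float:
    """Return how many times larger compute becomes after `months`."""
    return 2 ** (months / doubling_time_months)


if __name__ == "__main__":
    decade = 120  # months; an illustrative window
    for label, doubling in [("Moore's Law era", 20), ("deep learning era", 6)]:
        print(f"{label}: x{growth_factor(decade, doubling):,.0f} per decade")
```

Over a decade, a 20-month doubling time gives a 64-fold increase (2^6), while a six-month doubling time gives 2^20 = 1,048,576, roughly a million-fold.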

With the emergence of AlphaGo in 2015, a computer program that defeated professional human Go players, researchers identified a third era: the era of large-scale AI models, whose computational requirements exceed those of all previous AI systems.

The progress of AI technology in the future

Looking back over the past ten years, the growth in computing power has been remarkable.

For example, the computing power used to train Minerva, an AI that can solve complex mathematical problems, was almost 6 million times that used to train AlexNet a decade ago.
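The Minerva figure can be turned back into a doubling time: a growth factor G over t months implies a doubling time of t / log2(G). The sketch below uses the approximate figures from the text (about 6 million times, about 10 years apart); the function name is hypothetical.

```python
import math


def implied_doubling_time(growth: float, months: float) -> float:
    """Months per doubling needed to grow by `growth` over `months`."""
    return months / math.log2(growth)


if __name__ == "__main__":
    # Approximate figures from the text: ~6 million times more compute, ~10 years.
    t = implied_doubling_time(6e6, 120)
    print(f"implied doubling time: {t:.1f} months")
```

The result is roughly 5.3 months, which is consistent with the doubling time of about six months described for the deep learning era.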

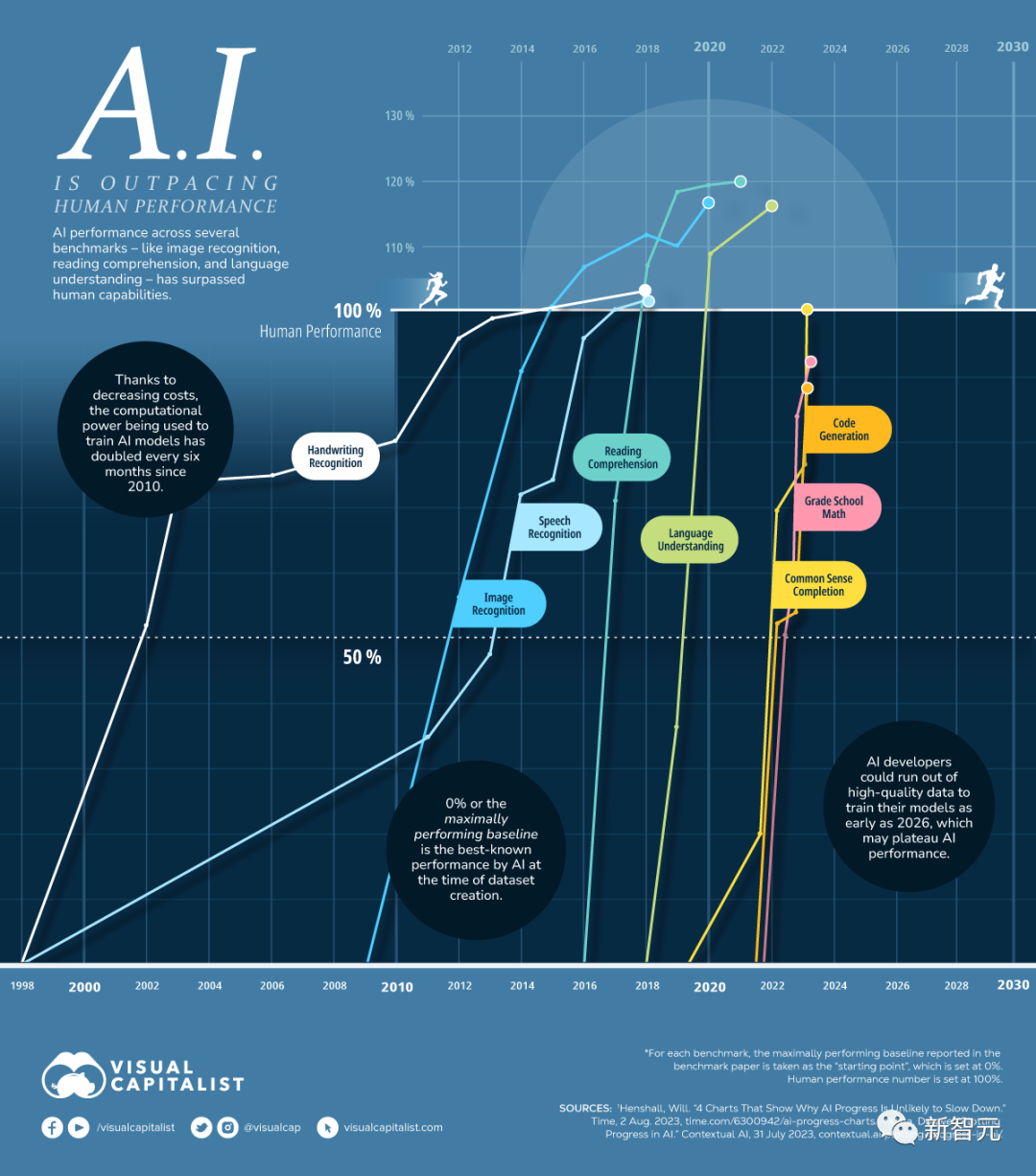

This growth in computing, combined with vast available datasets and better algorithms, has allowed AI to make enormous progress in an extremely short time. Today, AI can not only match human performance but even surpass humans in many fields.

AI capabilities will continue to surpass humans in all aspects

As the chart shows, artificial intelligence has already surpassed human performance in many areas and will soon surpass it in others.

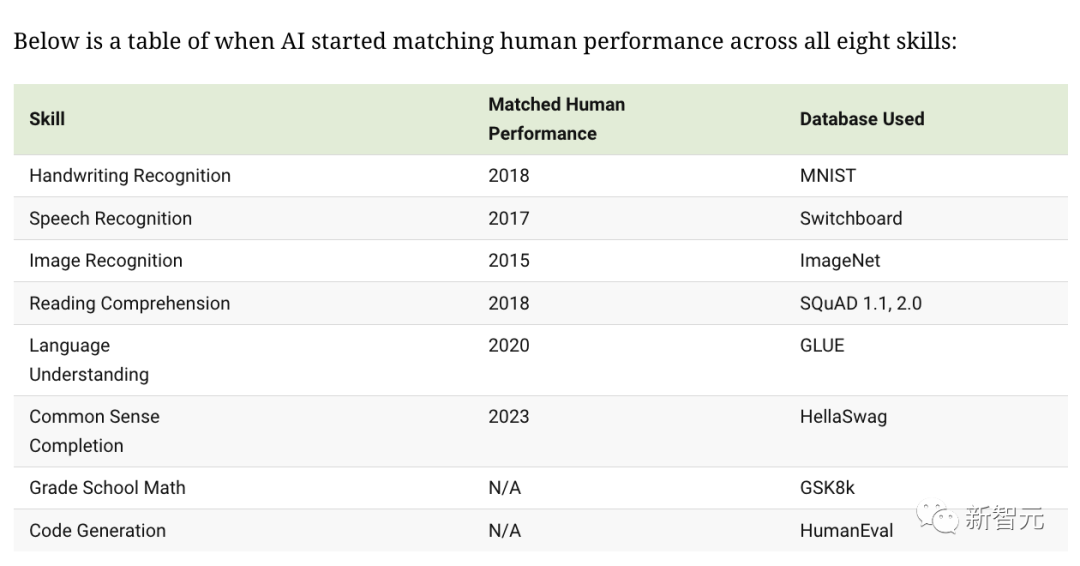

The chart shows the year in which AI reached or exceeded human-level performance in common abilities that people use in daily work and life.

AI technology still has ample room for development

It is difficult to say whether growth can continue at the same rate. Training large-scale models requires ever more computing power, and if the supply of computing power cannot keep growing, the development of AI technology may slow down.

Similarly, exhausting all the data currently available for training AI models could hinder the development and deployment of new models.

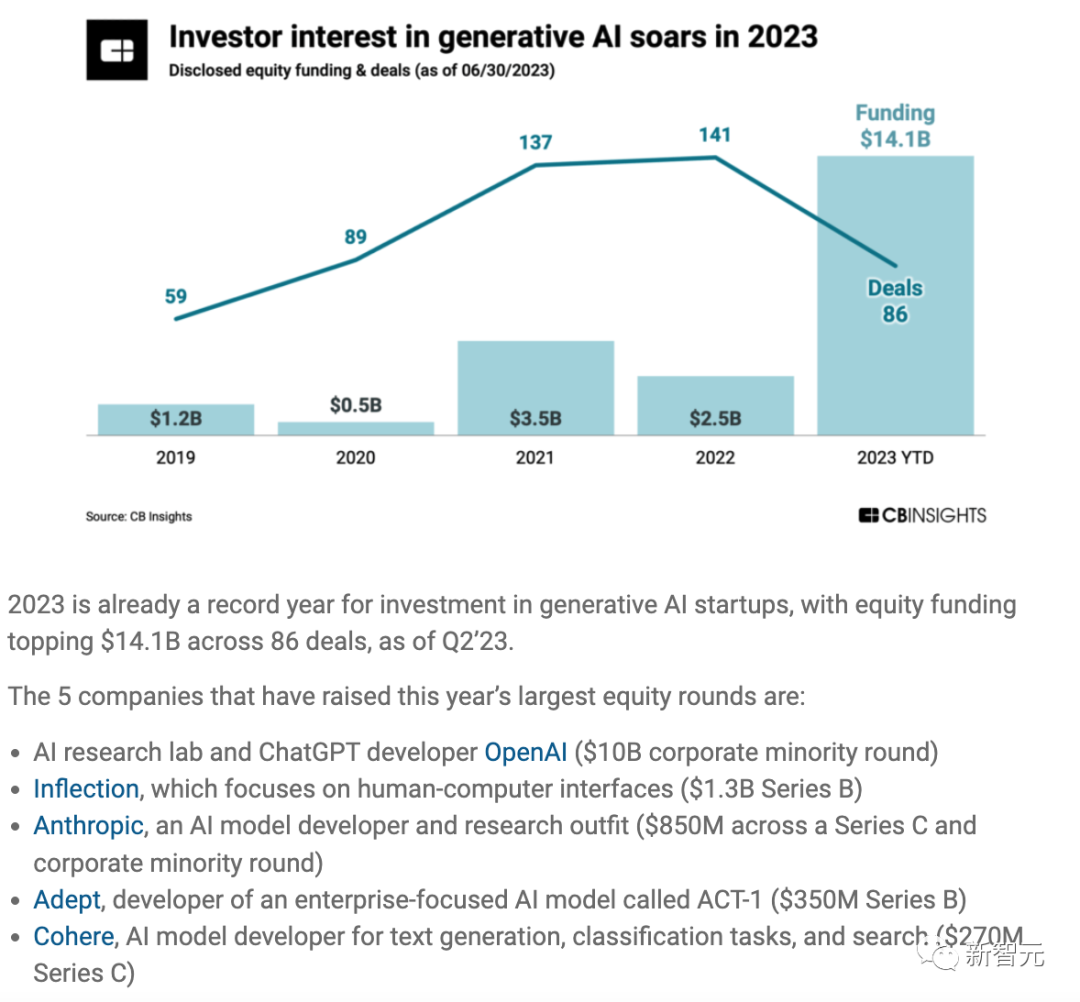

In 2023, the AI industry has seen an influx of capital, especially into generative AI represented by large language models. This may indicate that more breakthroughs are coming, and suggests that the three elements driving AI progress will be further optimized and developed.

In the first half of 2023, AI startups raised $14 billion in funding, more than the total they received over the previous four years.

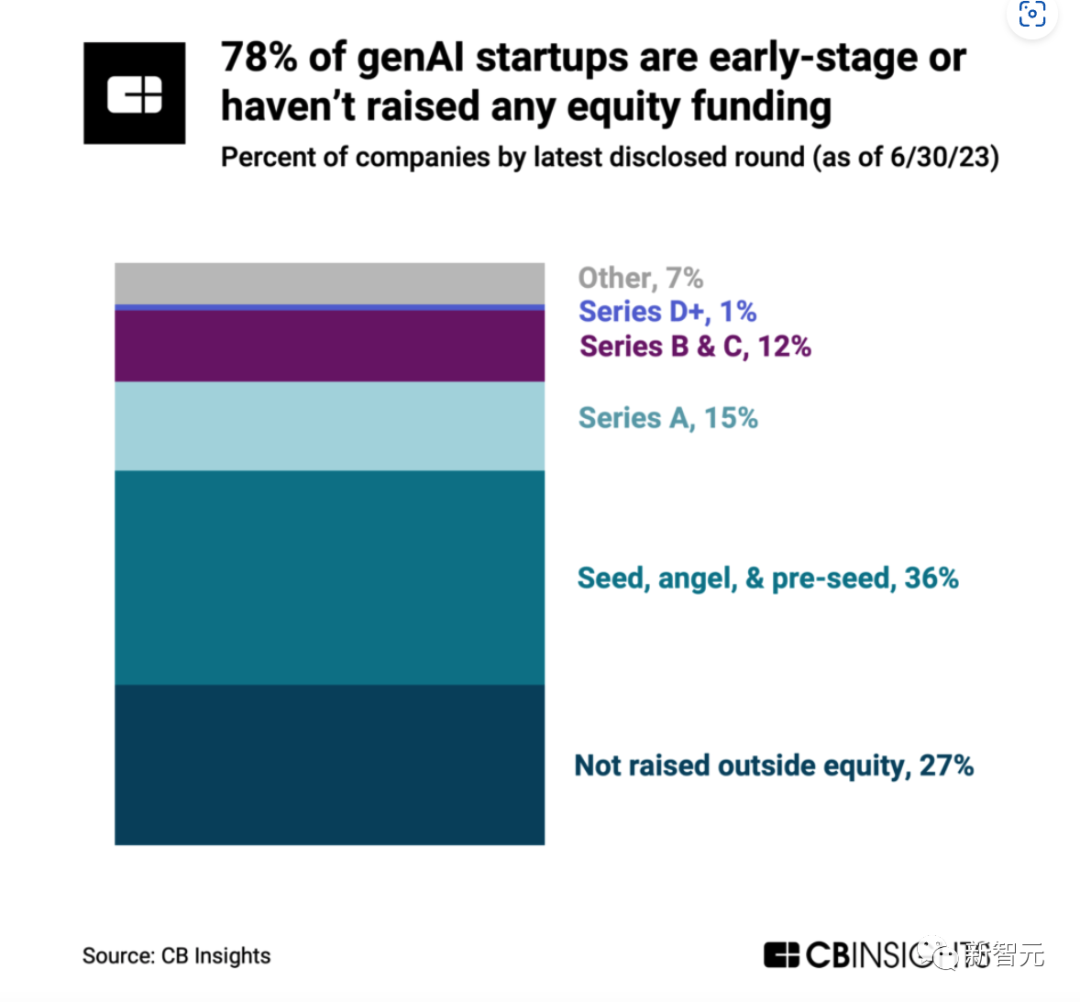

A large share (78%) of generative AI startups are still in the very early stages of development. Of more than 360 generative AI companies, 27% have not yet raised any capital, and more than half are at their first funding round or earlier, indicating that the generative AI industry as a whole is still at a very early stage.

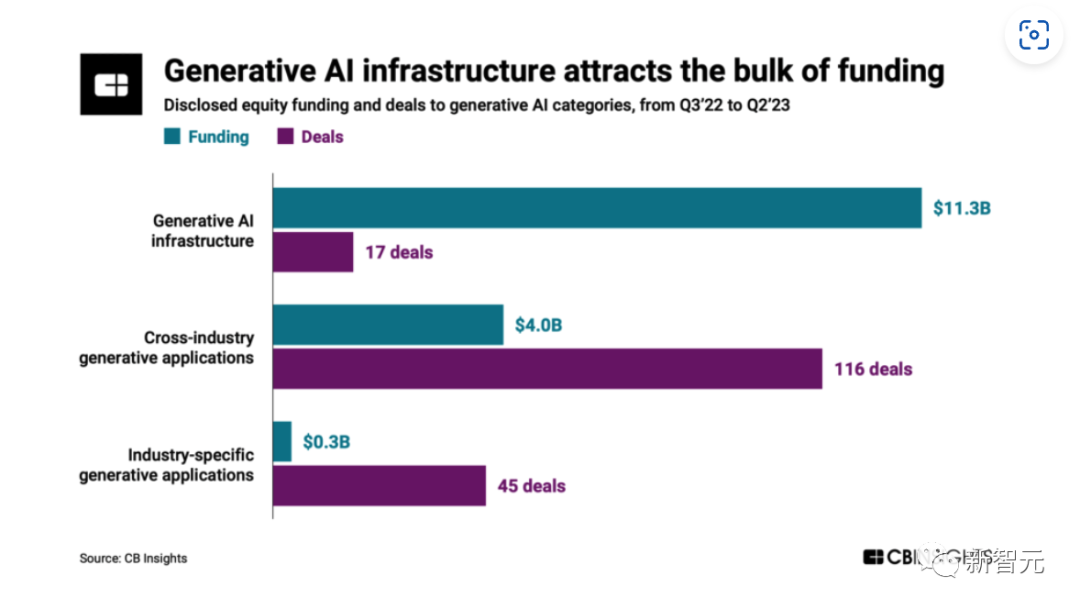

Because developing large language models is capital-intensive, the generative AI infrastructure category has taken over 70% of funding since Q3 2022 while accounting for only 10% of all generative AI deals. Much of this funding reflects investor interest in emerging infrastructure such as foundation models and APIs, MLOps (machine learning operations), and vector database technology.
