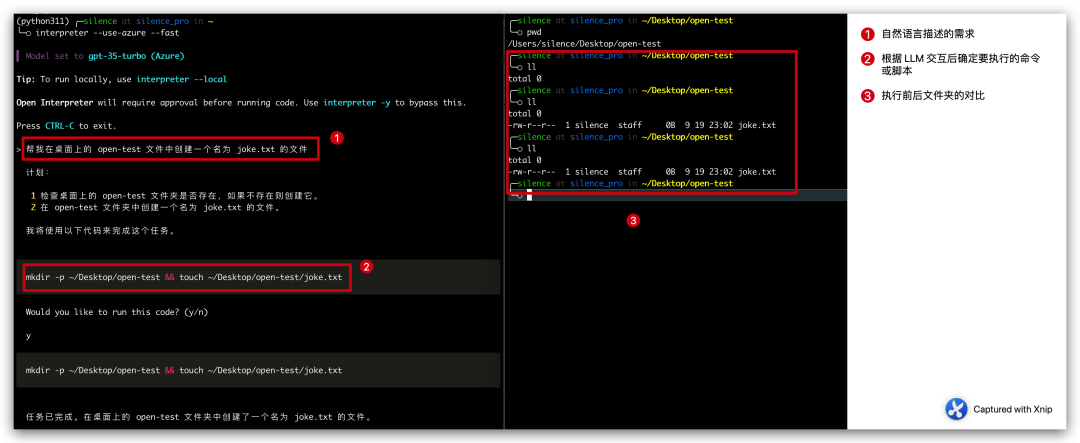

I recently came across a gem on GitHub called Open Interpreter, which lets you work with a large language model from your own machine. It has the model turn a natural-language request into script code and then runs that code locally to get the job done.

For example, to create a file named joke.txt on the desktop, you don't have to create it by hand. Instead, you describe the task to Open Interpreter in natural language; it generates a script that creates the file and then runs that script locally to produce joke.txt.

All we have to do is tell it what we need and allow it to run the code locally.
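The "allow it to run the code" step is essentially a confirm-then-execute loop: show the generated code, ask, and only run it on a yes. The bash sketch below illustrates that pattern; `confirm_and_run` is a hypothetical helper written for this article and is not Open Interpreter's actual implementation.

```shell
#!/usr/bin/env bash
# Illustration of the "show generated code, ask, then run" pattern.
# confirm_and_run is hypothetical; it is NOT Open Interpreter's own code.
confirm_and_run() {
  local cmd="$1" answer
  # Show the code on stderr so stdout stays free for the command's own output
  printf 'Generated code:\n  %s\nRun it? (y/n) ' "$cmd" >&2
  read -r answer
  case "$answer" in
    y|Y) bash -c "$cmd" ;;                      # run only after explicit approval
    *)   echo "skipped" >&2; return 1 ;;        # anything else (or EOF) means no
  esac
}
```

For example, `confirm_and_run 'mkdir -p ~/Desktop/open-test && touch ~/Desktop/open-test/joke.txt'` prints the command and waits for `y` before creating anything.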

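In the example above, the whole process is divided into three steps:

Plan:
1. Check whether the open-test folder exists on the desktop, and create it if it does not.
2. Create a file named joke.txt in the open-test folder.

I will use the following code to complete this task:

```shell
mkdir -p ~/Desktop/open-test && touch ~/Desktop/open-test/joke.txt
```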
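To make the plan concrete, here is a hand-written bash equivalent of its two steps with the existence check spelled out. `create_joke_file` is a hypothetical helper name, and the base directory is passed in rather than hard-coded to `~/Desktop` so it can be tried in any folder. This is a sketch for illustration, not the code Open Interpreter generated (that is the `mkdir -p ... && touch ...` one-liner).

```shell
#!/usr/bin/env bash
# Hand-written equivalent of the two-step plan.
# create_joke_file is hypothetical; pass the base directory (e.g. "$HOME/Desktop").
create_joke_file() {
  local base_dir="$1"
  local target_dir="$base_dir/open-test"

  # Step 1: create the open-test folder only if it does not exist yet
  if [ ! -d "$target_dir" ]; then
    mkdir -p "$target_dir" || return 1
  fi

  # Step 2: create joke.txt; touch leaves an existing file's contents untouched
  touch "$target_dir/joke.txt" || return 1
  echo "$target_dir/joke.txt"
}
```

For example, `create_joke_file "$HOME/Desktop"` prints the path of the new file. The explicit `if` mirrors step 1 of the plan; in practice `mkdir -p` alone already succeeds when the folder exists, which is why the generated one-liner can skip the check.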

The project has only just started and this example is very simple, but it seems to open a new door: in the future, anything that can be done with code could be done through natural language. Just thinking about it is exciting (and a little scary).

According to the official introduction, the tool can also edit videos and send emails. It certainly looks very promising.

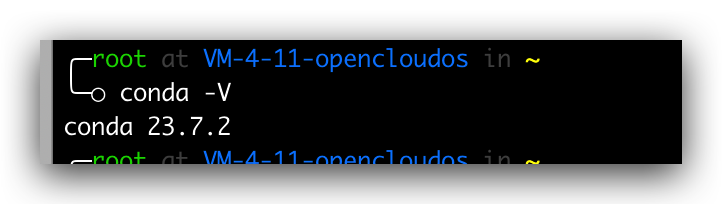

Installing the project is straightforward: normally you only need to run `pip install open-interpreter` on the command line. To keep the environment stable, however, we will use conda to isolate it, so first install conda by running the following commands in order:

```shell
# Download the installer script
wget https://repo.anaconda.com/archive/Anaconda3-2023.07-2-Linux-x86_64.sh
# Make it executable
chmod +x Anaconda3-2023.07-2-Linux-x86_64.sh
# Run the installer
./Anaconda3-2023.07-2-Linux-x86_64.sh
# Check the version
conda -V
```

If a version number is printed, the installation succeeded. If the shell reports that the command does not exist, add Anaconda to the PATH environment variable in `.bash_profile`:

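```shell
vim ~/.bash_profile
# Add the following line (Anaconda was installed under /root/anaconda3 here)
PATH=$PATH:$HOME/bin:$NODE_PATH/bin:/root/anaconda3/bin
# Reload the profile so the change takes effect, then check again
source ~/.bash_profile
conda -V
```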
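If you script this step instead of editing the file in vim, it helps to avoid appending the same line on every run. The bash sketch below adds a PATH entry to a profile file only once; `add_to_path_once` is a hypothetical helper, and the example directory assumes the installer's default location (`~/anaconda3`, which is `/root/anaconda3` when installing as root).

```shell
#!/usr/bin/env bash
# Append a directory to PATH in a profile file, but only if that exact line is absent.
# add_to_path_once is hypothetical; usage: add_to_path_once DIR PROFILE_FILE
add_to_path_once() {
  local dir="$1" profile="$2"
  # Literal line to write, e.g.: export PATH="$PATH:/root/anaconda3/bin"
  local line="export PATH=\"\$PATH:$dir\""
  # -q quiet, -x whole-line match, -F fixed string; a missing file counts as "not found"
  if ! grep -qxF -- "$line" "$profile" 2>/dev/null; then
    printf '%s\n' "$line" >> "$profile"
  fi
}
```

Usage: `add_to_path_once "$HOME/anaconda3/bin" "$HOME/.bash_profile"`, then `source ~/.bash_profile` and `conda -V`.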

After installing conda, create an isolated environment with a specific Python version using the following command:

```shell
conda create -n python311 python=3.11
```

This command uses `conda create` to create an isolated environment named python311 running Python 3.11. Once it has been created, you can list the available environments with:

```shell
conda env list
```
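To make the create step safe to re-run, you can check the `conda env list` output first. In this bash sketch, `env_in_list` and `ensure_env` are hypothetical helpers; the parser assumes the usual layout where `#` lines are comments and the environment name is the first column.

```shell
#!/usr/bin/env bash
# env_in_list (hypothetical): reads `conda env list` output on stdin and
# succeeds if NAME appears as an environment name (first column).
env_in_list() {
  awk -v name="$1" '!/^#/ && $1 == name { found = 1 } END { exit !found }'
}

# ensure_env (hypothetical): create NAME with the given Python version only if missing.
ensure_env() {
  local name="$1" version="$2"
  if conda env list | env_in_list "$name"; then
    echo "environment $name already exists"
  else
    conda create -y -n "$name" "python=$version"
  fi
}
```

Usage: `ensure_env python311 3.11`.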

Next, activate the environment and install Open Interpreter inside it:

```shell
conda activate python311
pip install open-interpreter
```
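A common mistake is running `pip install` before the environment is active, which installs the package into the wrong Python. The bash sketch below guards against that by checking `CONDA_DEFAULT_ENV`, which `conda activate` sets to the active environment's name; `in_env` and `install_in_env` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# in_env (hypothetical): succeed if the named conda env is the active one.
in_env() {
  [ "${CONDA_DEFAULT_ENV:-}" = "$1" ]
}

# install_in_env (hypothetical): refuse to install unless ENV_NAME is active.
install_in_env() {
  local env_name="$1" package="$2"
  if ! in_env "$env_name"; then
    echo "activate $env_name first: conda activate $env_name" >&2
    return 1
  fi
  # python -m pip uses the pip belonging to the active environment's Python
  python -m pip install "$package"
}
```

Usage: after `conda activate python311`, run `install_in_env python311 open-interpreter`.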

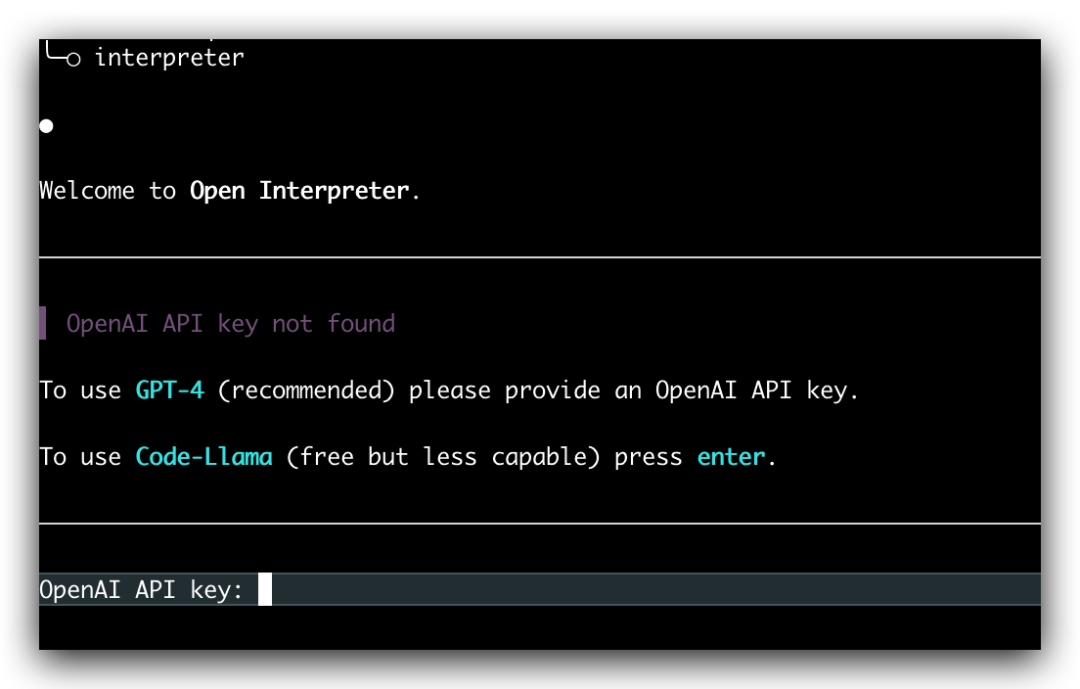

After a short wait the installation completes. Then run the following command to start an interactive session with the language model from your terminal:

```shell
interpreter
```

When you run `interpreter` directly, it asks for your OpenAI API key. GPT-4 is used by default, but you can switch to GPT-3.5 by adding the `--fast` flag:

```shell
interpreter --fast
```

Entering the OpenAI API key by hand on every run is tedious, so set it as an environment variable instead:

```shell
export OPENAI_API_KEY=sk-xxxx
```
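A small pre-flight check can catch a missing key before `interpreter` prompts for it. In this bash sketch, `require_openai_key` is a hypothetical helper and `sk-xxxx` is only a placeholder; the `sk-` prefix test reflects how OpenAI keys normally begin and only produces a warning.

```shell
#!/usr/bin/env bash
# require_openai_key (hypothetical): fail if OPENAI_API_KEY is unset or empty,
# and warn (without failing) if it lacks the usual "sk-" prefix.
require_openai_key() {
  if [ -z "${OPENAI_API_KEY:-}" ]; then
    echo "OPENAI_API_KEY is not set; run: export OPENAI_API_KEY=sk-..." >&2
    return 1
  fi
  case "$OPENAI_API_KEY" in
    sk-*) return 0 ;;
    *)    echo "warning: OPENAI_API_KEY does not start with sk-" >&2; return 0 ;;
  esac
}
```

Usage: `require_openai_key && interpreter`.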

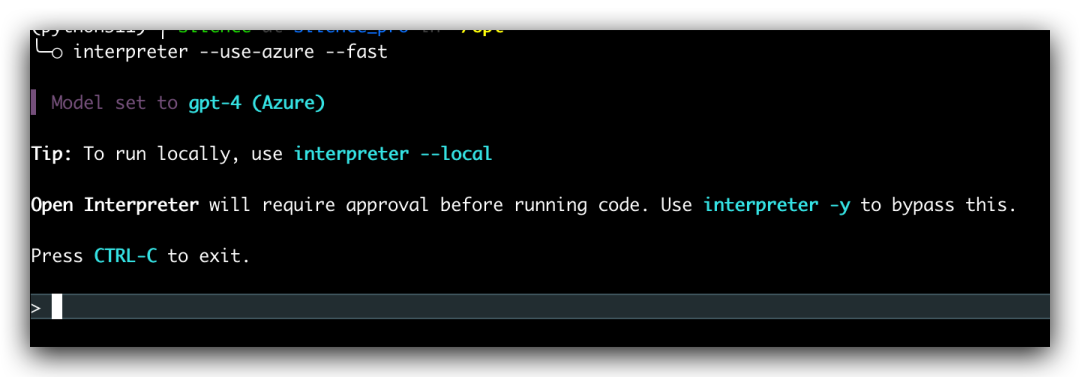

From then on, runs in the same shell session no longer prompt for the key (add the `export` line to `~/.bash_profile` to make it permanent). Microsoft's Azure OpenAI is also supported: set the following environment variables, then add the `--use-azure` flag at startup:

```shell
export AZURE_API_KEY=
export AZURE_API_BASE=
export AZURE_API_VERSION=
export AZURE_DEPLOYMENT_NAME=

interpreter --use-azure
```
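Since all four Azure variables must be filled in, a quick check that reports which ones are still empty can save a failed start. In this bash sketch, `missing_azure_vars` is a hypothetical helper; it uses bash indirect expansion (`${!var}`), so it needs bash rather than plain `sh`.

```shell
#!/usr/bin/env bash
# missing_azure_vars (hypothetical): print the names of the Azure variables
# that are unset or empty, separated by spaces (empty output means all set).
missing_azure_vars() {
  local var missing=""
  for var in AZURE_API_KEY AZURE_API_BASE AZURE_API_VERSION AZURE_DEPLOYMENT_NAME; do
    # ${!var:-} expands to the value of the variable whose name is stored in $var
    if [ -z "${!var:-}" ]; then
      missing="$missing $var"
    fi
  done
  echo "${missing# }"
}
```

Usage: `missing="$(missing_azure_vars)"; if [ -n "$missing" ]; then echo "Set first: $missing"; else interpreter --use-azure; fi`.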

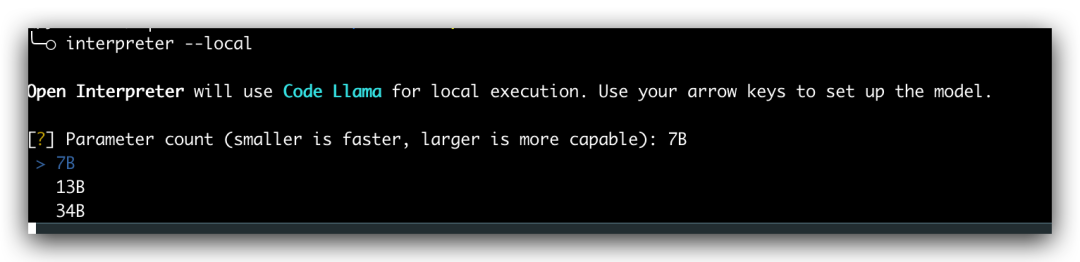

All of the above requires an OpenAI API key or an Azure OpenAI endpoint. Open Interpreter can also run entirely locally: add the `--local` flag at startup and then choose a model. Running locally, however, needs a sufficiently powerful machine. Interested readers can follow the official documentation to try it; my own machine is not powerful enough.
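To tie the startup modes together, here is a bash sketch of a launcher that picks flags based on which credentials are configured. `pick_interpreter_args` is a hypothetical helper, the priority order (OpenAI key, then Azure settings, then local model) is an assumption, and it only uses the `--fast`, `--use-azure` and `--local` flags described in this article. It prints the flags rather than running anything.

```shell
#!/usr/bin/env bash
# pick_interpreter_args (hypothetical): print the interpreter flags to use.
# Assumed priority: OpenAI key > complete Azure settings > local model.
# Pass "fast" as the first argument to request --fast (OpenAI mode only).
pick_interpreter_args() {
  local fast="${1:-no}"
  if [ -n "${OPENAI_API_KEY:-}" ]; then
    if [ "$fast" = "fast" ]; then echo "--fast"; else echo ""; fi
  elif [ -n "${AZURE_API_KEY:-}" ] && [ -n "${AZURE_API_BASE:-}" ] \
       && [ -n "${AZURE_API_VERSION:-}" ] && [ -n "${AZURE_DEPLOYMENT_NAME:-}" ]; then
    echo "--use-azure"
  else
    echo "--local"
  fi
}
```

Usage: `interpreter $(pick_interpreter_args fast)`. The substitution is deliberately unquoted so that an empty result passes no argument at all.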

That covers how to install Open Interpreter and some basic ways to use it. The project is iterating rapidly, and I believe it will bring big changes in the near future. The era of artificial intelligence has truly arrived, and the next few years will see AI products flourish. As programmers, we need to keep pace with the times rather than fall behind.

The above is the detailed content of Open Interpreter: An open source tool that enables large language models to execute code locally. For more information, please follow other related articles on the PHP Chinese website!
