Galaxy AI Network, the answer to network transport capacity in the era of large models


As the value of large AI pre-trained models continues to emerge, the scale of the models is becoming larger and larger. Industry and academia have reached a consensus: In the AI era, computing power is productivity.

This understanding is correct, but it is incomplete. Digital systems rest on three pillars: storage, computing, and networking, and AI is no exception. If we talk only about computing power and set aside storage and networking, large models are left standing on a single leg. In particular, network infrastructure adapted to large models has not received the attention it deserves.

Large AI models routinely involve "tens of thousands of cards for training", "deployment across tens of thousands of miles", and "trillions of parameters". For workloads like these, network transport capacity is a link in the intelligent system that cannot be ignored. The challenges it faces are prominent, and the industry is waiting for an answer that breaks the deadlock.

Wang Lei, President of Huawei Data Communication Product Line

On September 20, during Huawei Connect 2023, a data communication summit was held under the theme "Galaxy AI Network, Accelerating Industry Intelligence". Representatives from many industries discussed the transformation and development trends of AI network technology. At the summit, Wang Lei, President of Huawei's Data Communication Product Line, officially launched the Galaxy AI Network solution. He said that large models make AI smarter, but training a large model is very expensive, and the cost of AI talent must also be considered. Therefore, as industries become intelligent, AI can truly reach thousands of industries only if resources are concentrated on building large computing clusters that offer intelligent computing cloud services to society. The new-generation Galaxy AI Network solution targets the intelligent era with network infrastructure that offers ultra-high throughput, long-term stability and reliability, and elastic high concurrency, to help make AI inclusive and accelerate industry intelligence.

Take this opportunity to learn about the network challenges brought by the rise of large models to intelligent computing data centers, and why Huawei Galaxy AI Network is the optimal solution to these problems.

In the AI era, a model, a piece of data, or a computing unit can each be seen as a point of starlight. Only by connecting them efficiently and stably can they form a brilliant intelligent world.

The explosion of large models triggered a hidden network torrent

The life cycle of an AI model has two stages: training and inference deployment. With the rise of pre-trained large models, major network challenges have emerged in both stages.

The first is the training phase. As model scale and data volume keep growing, large model training now requires computing clusters of a thousand or even ten thousand cards. This also means that large model training must take place in data centers equipped with AI computing power.

At the current stage, intelligent computing data centers are very expensive. According to industry data, building a cluster with 100P of computing power costs 400 million yuan. Taking a well-known international large model as an example, its daily computing power expenditure during training reaches 700,000 US dollars.

If the data center network cannot connect the cluster smoothly, and a large share of computing resources is wasted waiting on network transmission, the losses to the data center and to the AI models are immeasurable. Conversely, if cluster training becomes more efficient at the same computing scale, data centers gain a huge business opportunity. The network load rate and other network factors directly determine the training efficiency of the AI model. At the same time, as AI computing clusters keep growing, their complexity rises with them, and so does the probability of failure. Building a cluster network that stays stable and reliable over the long term is an important lever for data centers to improve their input-output ratio.

Outside the data center, the value of the AI network also shows in the inference deployment of AI models. Inference deployment of large models relies mainly on cloud services, and cloud service providers must serve as many customers as possible with limited computing resources to maximize the commercial value of large models. As a result, the more users there are, the more complex the entire cloud network structure becomes. Providing stable network services over the long term has become a new challenge for cloud service providers.

In addition, in the last mile of AI inference deployment, government and enterprise users need better network quality. In real scenarios, 1% link packet loss causes TCP performance to drop by a factor of 50, which means that a 100 Mbps broadband link delivers less than 2 Mbps in practice. Therefore, only by improving the network capabilities of the application scenario itself can we keep AI computing power flowing smoothly and realize truly inclusive AI.
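The arithmetic behind the 1% loss figure can be sketched with the widely used Mathis approximation for loss-limited TCP throughput, roughly (MSS / RTT) × (C / √p). The article does not say which model or link parameters produced its number, so the MSS of 1460 bytes, the round-trip times, and the constant C ≈ 1.22 (classic Reno-style TCP) below are all assumptions chosen for illustration.

```python
import math


def mathis_throughput_mbps(mss_bytes: int, rtt_s: float, loss_rate: float,
                           c: float = 1.22) -> float:
    """Approximate loss-limited TCP throughput in Mbps (Mathis model).

    Assumes a single long-lived Reno-style flow; c ~= sqrt(3/2).
    """
    if not 0.0 < loss_rate < 1.0:
        raise ValueError("loss_rate must be between 0 and 1 (exclusive)")
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    bytes_per_second = (mss_bytes / rtt_s) * (c / math.sqrt(loss_rate))
    return bytes_per_second * 8 / 1e6


LINK_MBPS = 100.0   # broadband capacity quoted in the article
LOSS_RATE = 0.01    # 1% packet loss quoted in the article
MSS_BYTES = 1460    # assumed: typical Ethernet MSS

for rtt_ms in (10, 50, 100):  # assumed round-trip times
    ceiling = mathis_throughput_mbps(MSS_BYTES, rtt_ms / 1000, LOSS_RATE)
    usable = min(LINK_MBPS, ceiling)
    print(f"RTT {rtt_ms:>3} ms: about {usable:.2f} Mbps usable "
          f"({LINK_MBPS / usable:.0f}x below link capacity)")
```

Under these assumptions, a wide-area path with a round-trip time near 100 ms lands below 2 Mbps on a 100 Mbps link, which is in line with the article's figure; shorter paths degrade less.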

It is not difficult to see that across the entire process of creating, transmitting, and applying large AI models, every link faces the challenge of network upgrades. The transport capacity problem of the large model era needs to be solved urgently.

Breaking through on the network in the intelligent era: from starlight to galaxy

The rise of large models has created a network problem that spans multiple links and the full process. It therefore has to be addressed systematically.

Huawei has proposed a new network infrastructure for intelligent computing cloud services. This infrastructure needs to support three capabilities: "high-efficiency training", "non-stop computing power", and "inclusive AI services". Together they cover every scenario for large AI models, from training to inference deployment. Rather than focusing on a single need or upgrading a single technology, Huawei promotes the iteration of AI networks as a whole, bringing a distinctive approach to the industry.

Specifically, the network infrastructure in the AI era needs to include the following capabilities:

First of all, the network needs to maximize the value of the AI computing cluster in the training scenario. By building a network with ultra-large-scale connection capabilities, we can achieve high efficiency in training large AI models.

Secondly, to keep AI tasks stable and sustainable, the network must be reliable over the long term so that month-long training runs are not interrupted. It also needs fault demarcation, localization, and recovery within seconds, so that any interruption to training is as short as possible. This is the "non-stop computing power" capability.

Thirdly, during AI inference deployment, the network must be elastic and highly concurrent, so that it can intelligently orchestrate massive user flows and deliver the best experience when AI is put into use. At the same time, it must withstand network degradation and keep AI computing power flowing smoothly between regions. This is the "inclusive AI services" capability.

Following this approach, Huawei launched the Galaxy AI Network solution. The solution connects dispersed AI technologies and forms a galaxy-like network through powerful computing capabilities.

Galaxy AI Network gives a transport capacity answer for the large model era

During Huawei Connect 2023, Huawei shared its vision of accelerating the creation of large AI models with large computing power, large storage capacity, and large transport capacity. The new-generation Galaxy AI Network solution can be seen as Huawei's answer to large transport capacity in the intelligent era.

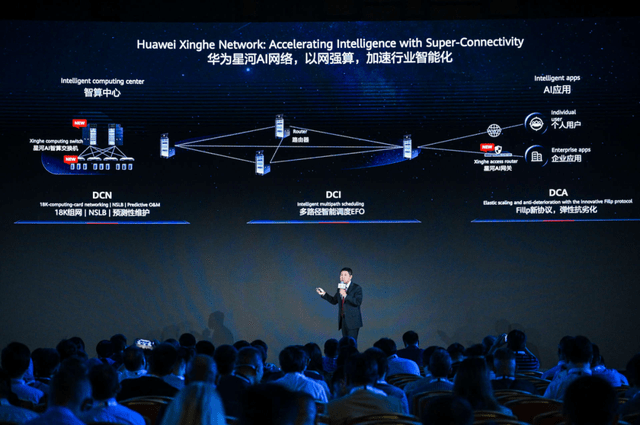

For intelligent data centers, Huawei Galaxy AI Network is the optimal solution based on network power.

Its ultra-high-throughput network improves the network load rate and the training efficiency of AI clusters in intelligent computing centers. Specifically, Galaxy AI Network intelligent computing switches offer the industry's highest-density 400GE and 800GE ports. A switching network of only two layers can build a cluster network of 18,000 cards with no convergence (that is, no oversubscription), which supports training large models with more than one trillion parameters. Fewer network layers mean that the data center saves substantially on optical modules, while network risks become more predictable and large model training becomes more stable.
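To see why port density decides how many cards a two-layer network can hold, here is a minimal sizing sketch for a generic non-blocking leaf-spine (folded Clos) fabric. It is not a description of Huawei's actual switch models or topology: the switch radix values are hypothetical, and the model assumes every switch has the same port count, every leaf splits its ports half down and half up, and every inter-switch link uses an optical module at both ends.

```python
def two_tier_nonblocking_endpoints(radix: int) -> int:
    """Max endpoints of a two-layer leaf-spine fabric with 1:1 subscription.

    Each leaf uses radix/2 ports for cards and radix/2 uplinks; each spine
    port connects one leaf, so at most `radix` leaves fit.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be a positive even number")
    max_leaves = radix
    return max_leaves * (radix // 2)


def min_radix_for(endpoints: int) -> int:
    """Smallest even switch radix whose two-layer fabric fits `endpoints`."""
    radix = 2
    while two_tier_nonblocking_endpoints(radix) < endpoints:
        radix += 2
    return radix


def inter_switch_optics(endpoints: int, layers: int) -> int:
    """Optical modules on switch-to-switch links in a non-blocking fabric.

    Each layer boundary carries one link per endpoint; two modules per link.
    """
    return endpoints * (layers - 1) * 2


CARDS = 18_000  # cluster size quoted in the article
print("smallest radix for two layers:", min_radix_for(CARDS))
print("cards supported at radix 192 (hypothetical):",
      two_tier_nonblocking_endpoints(192))
for layers in (2, 3):
    print(f"{layers} layers: {inter_switch_optics(CARDS, layers):,} "
          "inter-switch optical modules")
```

In this simplified model, a cluster of 18,000 cards needs switches with roughly 190 or more ports to stay within two layers, and adding a third layer would double the number of optical modules on switch-to-switch links.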

The Galaxy AI Network supports network-scale load balancing (NSLB), which raises the network load rate from 50% to 98%. This is comparable to overclocking the AI cluster: training efficiency improves by 20%, which meets the goal of efficient training.
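One way to relate the load-rate figures to the 20% efficiency figure is a simple two-phase model of a training iteration. The article does not explain how the 20% was measured, so everything here is an assumption: each iteration is split into a compute phase and a non-overlapping communication phase, and communication time is taken to be inversely proportional to the network load rate.

```python
def training_speedup(comm_fraction: float, old_load_rate: float,
                     new_load_rate: float) -> float:
    """Iteration speedup when only the communication phase gets faster.

    comm_fraction: share of iteration time spent on communication before
    the change (assumed not to overlap with compute).
    """
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be within [0, 1]")
    if old_load_rate <= 0 or new_load_rate <= 0:
        raise ValueError("load rates must be positive")
    compute_time = 1.0 - comm_fraction
    new_comm_time = comm_fraction * (old_load_rate / new_load_rate)
    return 1.0 / (compute_time + new_comm_time)


OLD_RATE, NEW_RATE = 0.50, 0.98  # load rates quoted in the article

for comm_fraction in (0.10, 0.20, 0.34, 0.50):  # assumed workload mixes
    gain = training_speedup(comm_fraction, OLD_RATE, NEW_RATE) - 1.0
    print(f"communication share {comm_fraction:.0%}: "
          f"training about {gain:.1%} faster")
```

Under this model, a gain of about 20% corresponds to a workload that previously spent roughly a third of each iteration on communication. Workloads that overlap communication with compute would see a smaller benefit.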

For cloud service providers, Galaxy AI Network offers a stable and reliable guarantee of computing power.

In DCI (data center interconnect) scenarios, the solution provides functions such as multi-path intelligent scheduling, and it automatically identifies and proactively adapts to peaks in business traffic. It can tell large flows from small flows among millions of data flows and allocate them sensibly across 100,000 paths to achieve zero network congestion, which gives high-concurrency intelligent computing cloud services an elastic guarantee.
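The effect of distinguishing large flows from small ones can be illustrated with a toy comparison. This is not Huawei's scheduling algorithm, which the article does not describe. The sketch contrasts size-blind placement (random choice standing in for per-flow hashing) with a generic greedy heuristic that places the largest flows first on the least-loaded path. The flow sizes and path count are made up.

```python
import heapq
import random


def hashed_assignment(flow_sizes, n_paths, seed=0):
    """Size-blind placement: each flow lands on a pseudo-random path."""
    rng = random.Random(seed)
    loads = [0.0] * n_paths
    for size in flow_sizes:
        loads[rng.randrange(n_paths)] += size
    return loads


def size_aware_assignment(flow_sizes, n_paths):
    """Greedy placement: largest flows first, always on the lightest path."""
    heap = [(0.0, path) for path in range(n_paths)]
    heapq.heapify(heap)
    for size in sorted(flow_sizes, reverse=True):
        load, path = heapq.heappop(heap)
        heapq.heappush(heap, (load + size, path))
    return [load for load, _ in sorted(heap, key=lambda item: item[1])]


def imbalance(loads):
    """Busiest path load divided by the mean load (1.0 is perfectly even)."""
    return max(loads) / (sum(loads) / len(loads))


# Hypothetical traffic mix: a few large flows and many small ones.
flows = [100.0] * 8 + [1.0] * 800
PATHS = 8

print("size-blind imbalance:", round(imbalance(hashed_assignment(flows, PATHS)), 2))
print("size-aware imbalance:", round(imbalance(size_aware_assignment(flows, PATHS)), 2))
```

When two large flows collide on one path, that path becomes the bottleneck while others sit idle; placing large flows deliberately and filling the gaps with small flows keeps every path evenly loaded in this toy case.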

For government and enterprise users, Galaxy AI Network copes with network degradation and keeps AI computing power inclusive.

In DCA scenarios, it supports elastic anti-degradation. It uses Fillp technology to optimize the TCP protocol, which raises the bandwidth load rate from 10% to 60% at a 1% packet loss rate. This keeps computing power flowing smoothly from metropolitan areas to remote areas and accelerates the inclusive application of AI services.

In this way, the network requirements of every stage of large models, from training to deployment, are addressed. From intelligent computing centers to thousands of industries, each gains a foothold for using the network to strengthen computing.

The new era of science and technology opened by large models has only just begun, and Galaxy AI Network provides an answer to transport capacity in the intelligent era.

The above is the detailed content of Galaxy AI Network, the answer to transportation capacity in the era of large models. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

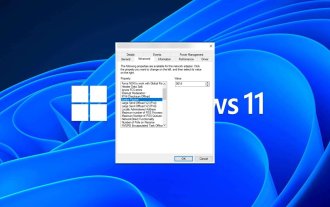

How to adjust MTU size on Windows 11

Aug 25, 2023 am 11:21 AM

How to adjust MTU size on Windows 11

Aug 25, 2023 am 11:21 AM

If you're suddenly experiencing a slow internet connection on Windows 11 and you've tried every trick in the book, it might have nothing to do with your network and everything to do with your maximum transmission unit (MTU). Problems may occur if your system sends or receives data with the wrong MTU size. In this post, we will learn how to change MTU size on Windows 11 for smooth and uninterrupted internet connection. What is the default MTU size in Windows 11? The default MTU size in Windows 11 is 1500, which is the maximum allowed. MTU stands for maximum transmission unit. This is the maximum packet size that can be sent or received on the network. every support network

![WLAN expansion module has stopped [fix]](https://img.php.cn/upload/article/000/465/014/170832352052603.gif?x-oss-process=image/resize,m_fill,h_207,w_330) WLAN expansion module has stopped [fix]

Feb 19, 2024 pm 02:18 PM

WLAN expansion module has stopped [fix]

Feb 19, 2024 pm 02:18 PM

If there is a problem with the WLAN expansion module on your Windows computer, it may cause you to be disconnected from the Internet. This situation is often frustrating, but fortunately, this article provides some simple suggestions that can help you solve this problem and get your wireless connection working properly again. Fix WLAN Extensibility Module Has Stopped If the WLAN Extensibility Module has stopped working on your Windows computer, follow these suggestions to fix it: Run the Network and Internet Troubleshooter to disable and re-enable wireless network connections Restart the WLAN Autoconfiguration Service Modify Power Options Modify Advanced Power Settings Reinstall Network Adapter Driver Run Some Network Commands Now, let’s look at it in detail

How to solve win11 DNS server error

Jan 10, 2024 pm 09:02 PM

How to solve win11 DNS server error

Jan 10, 2024 pm 09:02 PM

We need to use the correct DNS when connecting to the Internet to access the Internet. In the same way, if we use the wrong dns settings, it will prompt a dns server error. At this time, we can try to solve the problem by selecting to automatically obtain dns in the network settings. Let’s take a look at the specific solutions. How to solve win11 network dns server error. Method 1: Reset DNS 1. First, click Start in the taskbar to enter, find and click the "Settings" icon button. 2. Then click the "Network & Internet" option command in the left column. 3. Then find the "Ethernet" option on the right and click to enter. 4. After that, click "Edit" in the DNS server assignment, and finally set DNS to "Automatic (D

Fix 'Failed Network Error' downloads on Chrome, Google Drive and Photos!

Oct 27, 2023 pm 11:13 PM

Fix 'Failed Network Error' downloads on Chrome, Google Drive and Photos!

Oct 27, 2023 pm 11:13 PM

What is the "Network error download failed" issue? Before we delve into the solutions, let’s first understand what the “Network Error Download Failed” issue means. This error usually occurs when the network connection is interrupted during downloading. It can happen due to various reasons such as weak internet connection, network congestion or server issues. When this error occurs, the download will stop and an error message will be displayed. How to fix failed download with network error? Facing “Network Error Download Failed” can become a hindrance while accessing or downloading necessary files. Whether you are using browsers like Chrome or platforms like Google Drive and Google Photos, this error will pop up causing inconvenience. Below are points to help you navigate and resolve this issue

Fix: WD My Cloud doesn't show up on the network in Windows 11

Oct 02, 2023 pm 11:21 PM

Fix: WD My Cloud doesn't show up on the network in Windows 11

Oct 02, 2023 pm 11:21 PM

If WDMyCloud is not showing up on the network in Windows 11, this can be a big problem, especially if you store backups or other important files in it. This can be a big problem for users who frequently need to access network storage, so in today's guide, we'll show you how to fix this problem permanently. Why doesn't WDMyCloud show up on Windows 11 network? Your MyCloud device, network adapter, or internet connection is not configured correctly. The SMB function is not installed on the computer. A temporary glitch in Winsock can sometimes cause this problem. What should I do if my cloud doesn't show up on the network? Before we start fixing the problem, you can perform some preliminary checks:

What should I do if the earth is displayed in the lower right corner of Windows 10 when I cannot access the Internet? Various solutions to the problem that the Earth cannot access the Internet in Win10

Feb 29, 2024 am 09:52 AM

What should I do if the earth is displayed in the lower right corner of Windows 10 when I cannot access the Internet? Various solutions to the problem that the Earth cannot access the Internet in Win10

Feb 29, 2024 am 09:52 AM

This article will introduce the solution to the problem that the globe symbol is displayed on the Win10 system network but cannot access the Internet. The article will provide detailed steps to help readers solve the problem of Win10 network showing that the earth cannot access the Internet. Method 1: Restart directly. First check whether the network cable is not plugged in properly and whether the broadband is in arrears. The router or optical modem may be stuck. In this case, you need to restart the router or optical modem. If there are no important things being done on the computer, you can restart the computer directly. Most minor problems can be quickly solved by restarting the computer. If it is determined that the broadband is not in arrears and the network is normal, that is another matter. Method 2: 1. Press the [Win] key, or click [Start Menu] in the lower left corner. In the menu item that opens, click the gear icon above the power button. This is [Settings].

How to enable/disable Wake on LAN in Windows 11

Sep 06, 2023 pm 02:49 PM

How to enable/disable Wake on LAN in Windows 11

Sep 06, 2023 pm 02:49 PM

Wake on LAN is a network feature on Windows 11 that allows you to remotely wake your computer from hibernation or sleep mode. While casual users don't use it often, this feature is useful for network administrators and power users using wired networks, and today we'll show you how to set it up. How do I know if my computer supports Wake on LAN? To use this feature, your computer needs the following: The PC needs to be connected to an ATX power supply so that you can wake it from sleep mode remotely. Access control lists need to be created and added to all routers in the network. The network card needs to support the wake-up-on-LAN function. For this feature to work, both computers need to be on the same network. Although most Ethernet adapters use

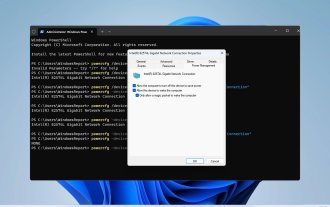

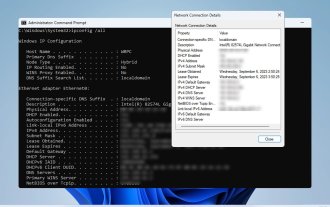

How to check network connection details and status on Windows 11

Sep 11, 2023 pm 02:17 PM

How to check network connection details and status on Windows 11

Sep 11, 2023 pm 02:17 PM

In order to make sure your network connection is working properly or to fix the problem, sometimes you need to check the network connection details on Windows 11. By doing this, you can view a variety of information including your IP address, MAC address, link speed, driver version, and more, and in this guide, we'll show you how to do that. How to find network connection details on Windows 11? 1. Use the "Settings" app and press the + key to open Windows Settings. WindowsI Next, navigate to Network & Internet in the left pane and select your network type. In our case, this is Ethernet. If you are using a wireless network, select a Wi-Fi network instead. At the bottom of the screen you should see