Cross-dataset experimental study of radar-camera fusion in BEV

Original title: Cross-Dataset Experimental Study of Radar-Camera Fusion in Bird's-Eye View

Paper link: https://arxiv.org/pdf/2309.15465.pdf

Author affiliations: Opel Automobile GmbH; Rheinland-Pfälzische Technische Universität Kaiserslautern-Landau; German Research Center for Artificial Intelligence

Paper idea:

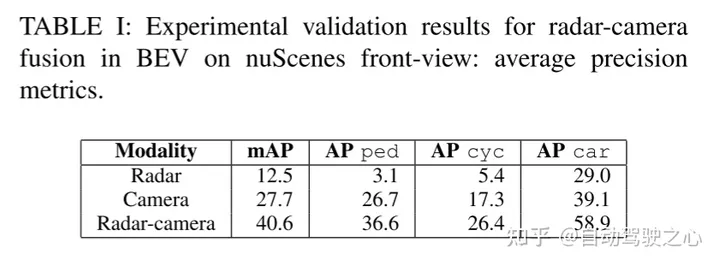

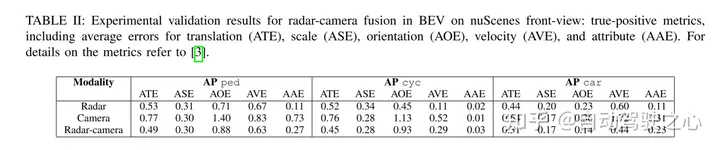

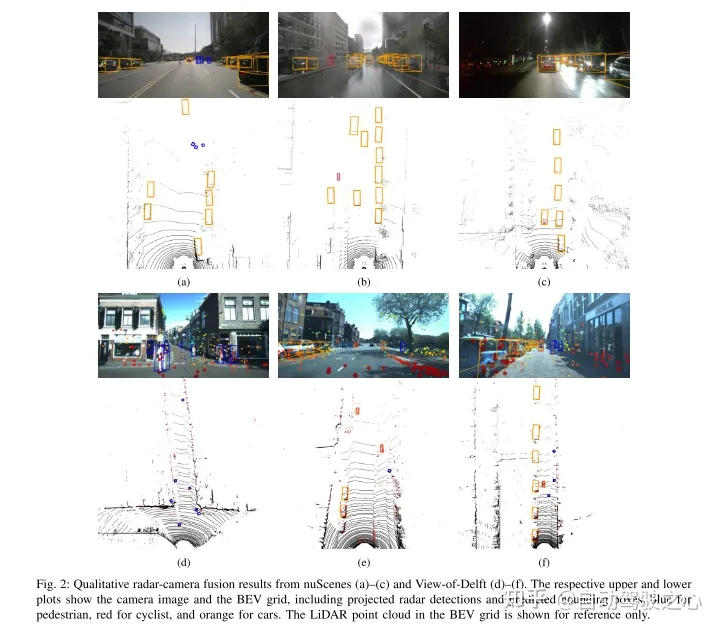

By exploiting complementary sensor information, millimeter-wave radar and camera fusion systems have the potential to provide highly robust and reliable perception for advanced driver assistance systems and autonomous driving functions. Recent advances in camera-based object detection open new possibilities for radar-camera fusion, which can now be performed on bird's-eye-view (BEV) feature maps. This study proposes a novel and flexible fusion network and evaluates it on two datasets, nuScenes and View-of-Delft. The experiments show that the camera branch requires large and diverse training data, whereas the millimeter-wave radar branch benefits more from a high-performance radar sensor. Transfer learning improves camera performance on the smaller dataset. The results further show that radar-camera fusion significantly outperforms the camera-only and radar-only baselines.

Network Design:

A recent trend in 3D object detection is to transform image features into a common bird's-eye-view (BEV) representation. This representation provides a flexible fusion architecture that can fuse multiple cameras, or cameras with ranging sensors. This paper extends the BEVFusion method, originally designed for lidar-camera fusion, to millimeter-wave radar-camera fusion. The proposed fusion method is trained and evaluated on a selection of millimeter-wave radar datasets, and several experiments examine the advantages and disadvantages of each dataset. Finally, transfer learning is applied to achieve further improvements.

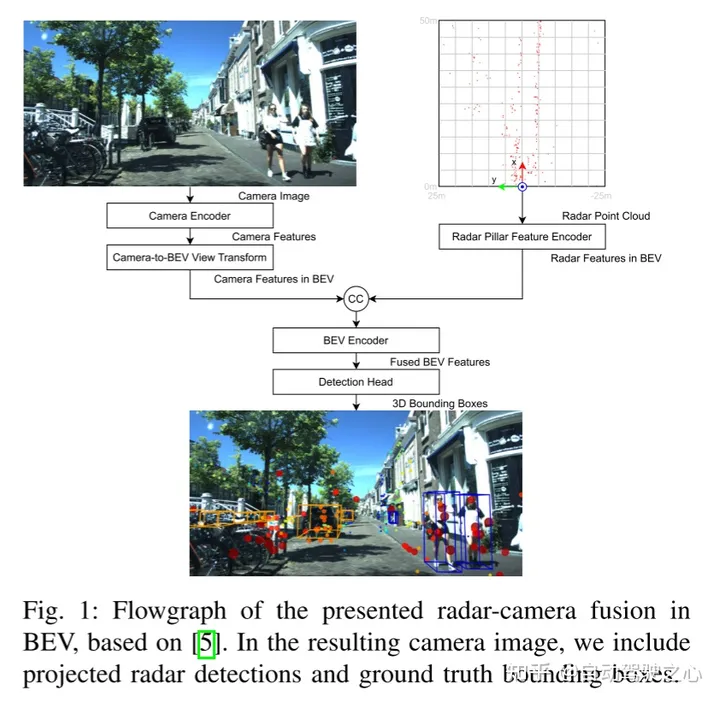

Figure 1: Flowchart of BEV millimeter-wave radar-camera fusion based on BEVFusion. The output camera image shows the projected millimeter-wave radar detections and the ground-truth bounding boxes.

This paper follows the fusion architecture of BEVFusion. Figure 1 gives an overview of the network for millimeter-wave radar-camera fusion in BEV. Note that fusion takes place when the camera and millimeter-wave radar features are concatenated in BEV. The following subsections give further details for each block.

A. Camera Encoder and Camera-to-BEV View Transformation

The camera encoder and view transformation follow [15], a flexible framework that can extract image BEV features for arbitrary camera extrinsic and intrinsic parameters. First, features are extracted from each image with a tiny Swin Transformer network. Next, the Lift and Splat steps of [14] transform the image features to the BEV plane: a dense depth prediction is followed by a rule-based block in which the features are converted into a pseudo point cloud, rasterized, and accumulated into a BEV grid.
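The Lift and Splat steps can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `lift_splat`, the argument layout, and the simplification that the camera frame is aligned with the ego frame (no extrinsic transform) are all assumptions made for illustration.

```python
import numpy as np

def lift_splat(feats, depth_logits, depth_bins, K, grid_range, cell):
    """Lift image features to a pseudo point cloud and splat them into BEV.

    feats:        (C, H, W) image features
    depth_logits: (D, H, W) per-pixel scores over D depth bins
    depth_bins:   (D,) candidate depths in metres
    K:            (3, 3) camera intrinsics at feature-map resolution
    grid_range:   (x_min, x_max, y_min, y_max) in metres, x forward, y left
    cell:         BEV cell size in metres
    """
    C, H, W = feats.shape
    # Dense depth prediction: softmax over the depth bins for every pixel.
    e = np.exp(depth_logits - depth_logits.max(axis=0, keepdims=True))
    depth_prob = e / e.sum(axis=0, keepdims=True)
    # Lift: weight each pixel feature by its depth distribution -> (D, C, H, W).
    frustum = depth_prob[:, None] * feats[None]

    # Back-project every (pixel, depth) pair into camera coordinates.
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u, v, np.ones_like(u)]).reshape(3, -1).astype(float)
    rays = np.linalg.inv(K) @ pix                      # (3, H*W), z == 1
    pts = rays[None] * depth_bins[:, None, None]       # (D, 3, H*W)
    # Assumption: camera (x right, z forward) is aligned with the ego frame.
    x_fwd = pts[:, 2].reshape(-1)
    y_left = -pts[:, 0].reshape(-1)

    # Splat: rasterize the pseudo points and accumulate features per cell.
    x_min, x_max, y_min, y_max = grid_range
    nx = int(round((x_max - x_min) / cell))
    ny = int(round((y_max - y_min) / cell))
    ix = np.floor((x_fwd - x_min) / cell).astype(int)
    iy = np.floor((y_left - y_min) / cell).astype(int)
    keep = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    flat = frustum.transpose(0, 2, 3, 1).reshape(-1, C)  # (D*H*W, C)
    bev = np.zeros((nx * ny, C))
    np.add.at(bev, ix[keep] * ny + iy[keep], flat[keep])  # sum pooling
    return bev.reshape(nx, ny, C).transpose(2, 0, 1)      # (C, nx, ny)
```

Because the depth distribution sums to one per pixel, the total feature mass is preserved as long as all lifted points land inside the grid. A real system would also apply the camera extrinsics before rasterizing.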

B. Radar Pillar Feature Encoder

The purpose of this block is to encode the millimeter-wave radar point cloud into BEV features on the same grid as the image BEV features. To this end, this paper uses the pillar feature encoding technique of [16], which rasterizes the point cloud into voxels of unlimited height, the so-called pillars.
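The pillar idea can be sketched as follows: each radar point is assigned to a BEV cell, per-point features are computed, and the features in each pillar are max-pooled. This is a simplified illustration, not the encoder of [16]: the function name `pillar_encode`, the single linear layer `weight`, and the point layout (x, y, then further radar channels) are assumptions.

```python
import numpy as np

def pillar_encode(points, weight, grid_range, cell):
    """Encode a radar point cloud into BEV features via pillars.

    points:     (N, F) array, columns 0 and 1 are x (forward) and y (left) in
                metres; remaining columns are other radar channels (assumed)
    weight:     (F, C) hypothetical learned linear layer
    grid_range: (x_min, x_max, y_min, y_max) in metres
    cell:       pillar footprint size in metres
    """
    x_min, x_max, y_min, y_max = grid_range
    nx = int(round((x_max - x_min) / cell))
    ny = int(round((y_max - y_min) / cell))
    # Rasterize: height is ignored, so each cell is a pillar of unlimited height.
    ix = np.floor((points[:, 0] - x_min) / cell).astype(int)
    iy = np.floor((points[:, 1] - y_min) / cell).astype(int)
    keep = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    pillar_id = ix[keep] * ny + iy[keep]
    # Per-point features: linear layer followed by ReLU (simplified PointNet).
    point_feats = np.maximum(points[keep] @ weight, 0.0)
    # Max-pool the points of each pillar; zeros are a valid starting value
    # because the ReLU output is non-negative.
    bev = np.zeros((nx * ny, weight.shape[1]))
    np.maximum.at(bev, pillar_id, point_feats)
    return bev.reshape(nx, ny, -1).transpose(2, 0, 1)  # (C, nx, ny)
```

Using the same `grid_range` and `cell` as the camera branch places both feature maps on one grid, which is what makes the later channel-wise fusion possible.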

C. BEV Encoder

Similar to [5], the BEV features of the millimeter-wave radar and the camera are fused by concatenation. The fused features are processed by a joint convolutional BEV encoder so that the network can account for spatial misalignment and exploit the synergy between the modalities.
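A minimal sketch of concatenation fusion followed by one convolutional layer is given below. The helper names `conv3x3` and `fuse_bev` and the single-layer encoder are assumptions for illustration; the actual BEV encoder is a deeper network.

```python
import numpy as np

def conv3x3(x, kernel):
    """Zero-padded, stride-1 3x3 convolution (cross-correlation).

    x:      (C_in, H, W) feature map
    kernel: (C_out, C_in, 3, 3) weights
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((kernel.shape[0], h, w))
    for dy in range(3):
        for dx in range(3):
            patch = padded[:, dy:dy + h, dx:dx + w]
            out += np.einsum("oc,chw->ohw", kernel[:, :, dy, dx], patch)
    return out

def fuse_bev(camera_bev, radar_bev, kernel):
    """Concatenate camera and radar BEV features, then apply conv + ReLU."""
    # Both maps must share the same BEV grid; fusion is along the channel axis.
    fused = np.concatenate([camera_bev, radar_bev], axis=0)
    return np.maximum(conv3x3(fused, kernel), 0.0)
```

The 3x3 receptive field is what lets the encoder combine a radar feature with camera features from neighbouring cells, which is one way a convolutional encoder can compensate for small spatial misalignment between the two branches.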

D. Detection Head

This paper uses a CenterPoint detection head to predict a heatmap of object centers for each class. Additional regression heads predict the size, rotation, and height of objects, as well as the velocity and class attributes for nuScenes. The heatmap is trained with a Gaussian focal loss, and the remaining detection heads are trained with an L1 loss.
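The heatmap target and its loss can be sketched as below, assuming the commonly used CenterNet-style penalty-reduced focal loss. The function names, the fixed `sigma`, and the exponents `alpha = 2` and `beta = 4` are assumptions; the text above does not state these values.

```python
import numpy as np

def gaussian_heatmap(shape, centers, sigma):
    """Build a target heatmap with a Gaussian peak at each object center.

    shape:   (H, W) of the BEV heatmap
    centers: iterable of (row, col) integer cell indices
    sigma:   Gaussian standard deviation in cells (assumed fixed here)
    """
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    heat = np.zeros(shape)
    for cy, cx in centers:
        g = np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2 * sigma ** 2))
        heat = np.maximum(heat, g)  # overlapping objects keep the larger value
    return heat

def gaussian_focal_loss(pred, target, alpha=2.0, beta=4.0, eps=1e-12):
    """Penalty-reduced focal loss between predicted and target heatmaps."""
    pos = target == 1.0
    # Positive cells (exact centers): standard focal term.
    pos_loss = -np.log(pred + eps) * (1 - pred) ** alpha * pos
    # Other cells: penalty is reduced near centers via (1 - target) ** beta,
    # which is zero at the centers themselves.
    neg_loss = -np.log(1 - pred + eps) * pred ** alpha * (1 - target) ** beta
    num_pos = max(int(pos.sum()), 1)
    return (pos_loss.sum() + neg_loss.sum()) / num_pos

def l1_loss(pred, target):
    """Mean absolute error, as used for the regression heads."""
    return np.abs(pred - target).mean()
```

The Gaussian target means that predictions slightly off-center are penalized less than predictions far from any object, which suits the coarse BEV grid.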

Experimental results:

Citation:

Stäcker, L., Heidenreich, P., Rambach, J., & Stricker, D. (2023). Cross-Dataset Experimental Study of Radar-Camera Fusion in Bird's-Eye View. arXiv:2309.15465.

Original link: https://mp.weixin.qq.com/s/5mA5up5a4KJO2PBwUcuIdQ
