
Improving trajectory planning methods for autonomous driving in uncertain environments


Paper title: "Trajectory planning method for autonomous vehicles in uncertain environments based on improved model predictive control"

Journal: IEEE Transactions on Intelligent Transportation Systems

Publication date: April 2023

The following are my own reading notes on the paper, mainly the parts that I consider key points, not a full translation. This article follows on from the previous one and covers the experimental verification part of the paper. The previous article is as follows:

fhwim: A trajectory planning method based on improved model predictive control for autonomous vehicles in uncertain environments

https://zhuanlan.zhihu.com/p/658708080

1. Simulation verification

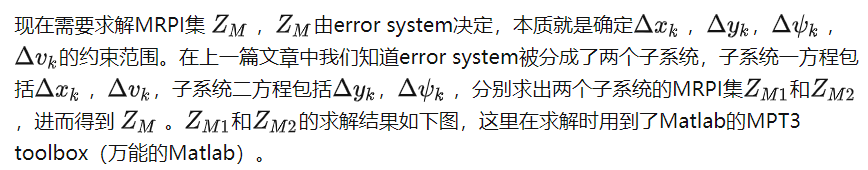

(1) Simulation environment

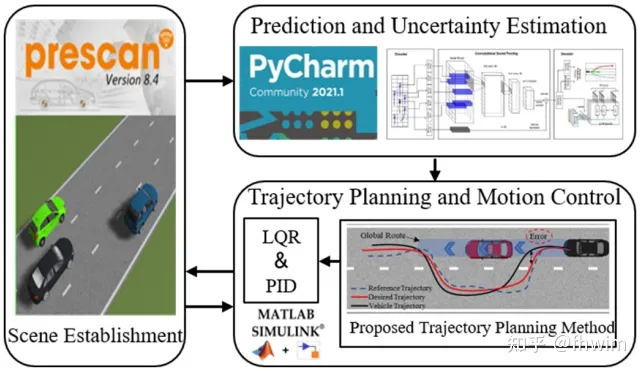

The co-simulation tools are Prescan, PyCharm, and Matlab/Simulink. Prescan is used to build the simulated traffic scene, PyCharm (where neural networks are easy to write with PyTorch) is used to write the fusion prediction module, and Matlab/Simulink (with the MPC toolbox) is used to build the trajectory planning module and implement vehicle control. The overall choice of tools is simple, natural, and reasonable. On the control side, lateral control uses LQR and longitudinal control uses PID, both of which are common choices. The LSTM encoder-decoder in the fusion prediction module uses open source code. The author says it comes from reference [31], "Comprehensive Review of Neural Network-Based Prediction Intervals and New Advances", but that article was published in 2011, which is a bit old (where would an LSTM encoder-decoder have come from in 2011?). I don't know whether the author modified the code on that basis.

Figure 1 Simulation environment construction
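As a side note on the longitudinal control mentioned above, here is a minimal sketch of a discrete PID speed controller. The gains, time step, acceleration limits, and the toy point-mass vehicle model are all my own illustrative assumptions; the paper implements its control in Matlab/Simulink and does not give this code.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Discrete PID controller. Gains are illustrative, not from the paper."""
    kp: float
    ki: float
    kd: float
    dt: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, target: float, measured: float) -> float:
        """Return the control output for one time step."""
        error = target - measured
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_speed_tracking(target_speed: float = 10.0, steps: int = 800) -> float:
    """Track a target speed with a hypothetical point-mass model v += a * dt."""
    dt = 0.05
    pid = PID(kp=1.0, ki=0.2, kd=0.0, dt=dt)
    speed = 0.0
    for _ in range(steps):
        accel = pid.step(target_speed, speed)
        accel = max(-3.0, min(3.0, accel))  # assumed actuator limits in m/s^2
        speed += accel * dt
    return speed


final_speed = simulate_speed_tracking()
print(f"final speed: {final_speed:.3f} m/s")
```

This sketch has no anti-windup, so the speed overshoots the target before settling; a real longitudinal controller would normally handle actuator saturation explicitly.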

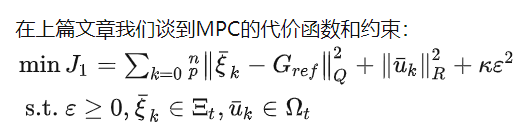

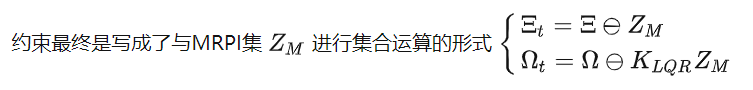

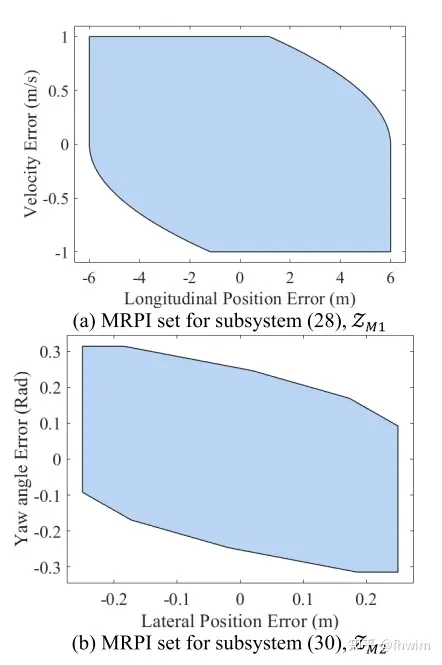

(2) Obtaining MRPI set

Figure 2 MRPI set of subsystem

(3) case1: static obstacle avoidance scenario

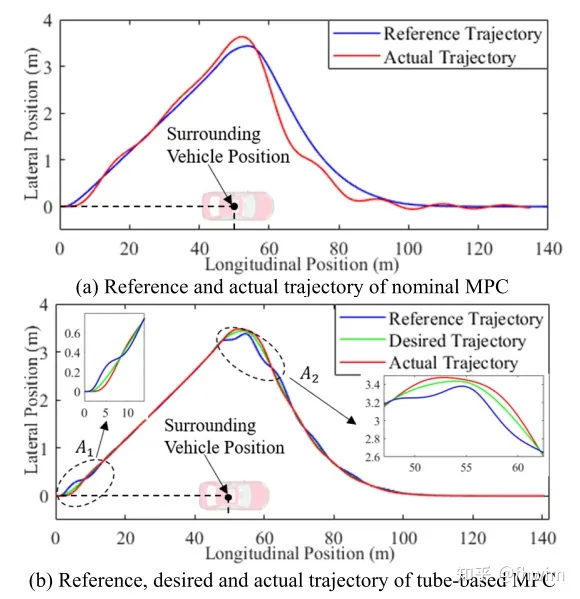

In the static obstacle avoidance scenario, the obstacle vehicle is stationary. The trajectory planning results are as follows:

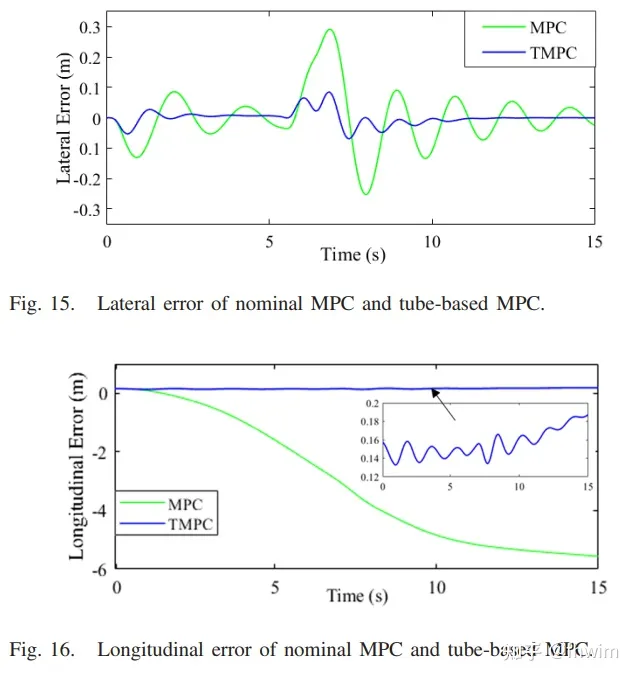

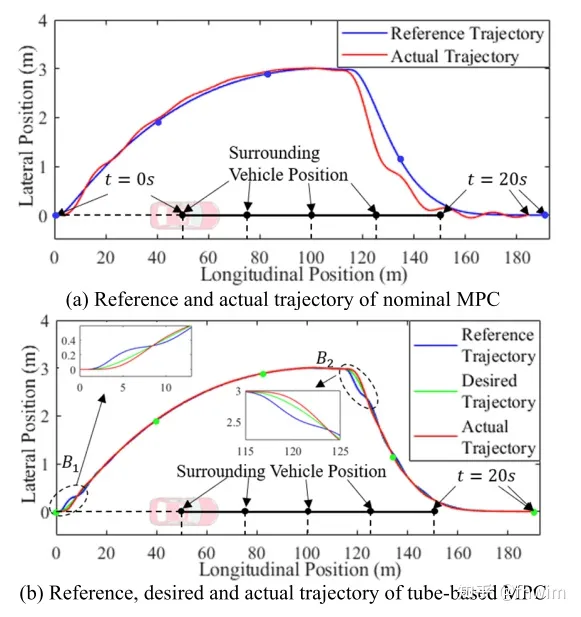

Figure 3 Comparison of the general MPC method and the tube-based MPC method

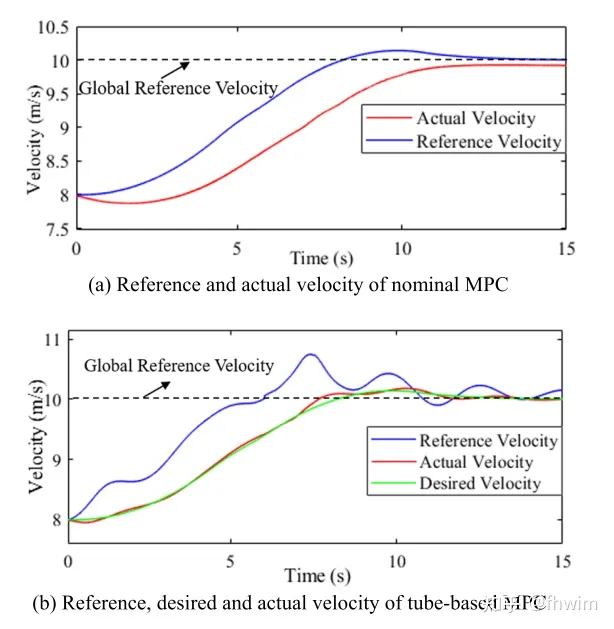

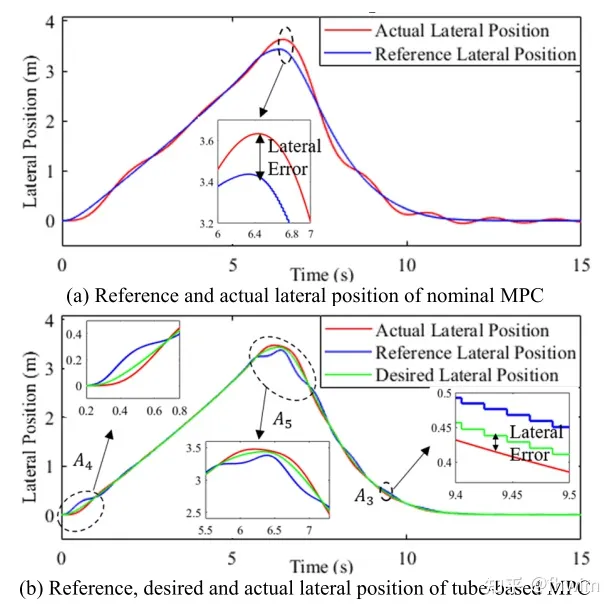

Here I suspect that something is wrong with the legend. According to the previous article, the final reference trajectory is obtained by adding the desired trajectory and the adjustment trajectory. Yet when analyzing the results, the author says that tube-based MPC has larger errors in some areas of Figure 3(b). Since the adjustment trajectory is supposed to reduce the error, the legends of the desired trajectory and the reference trajectory in Figure 3(b) should be swapped to match the logic of the paper: the green line would be the final reference trajectory and the blue line the intermediate desired trajectory. The same applies to the speed curves and the lateral and longitudinal error curves below. Still, you can probably work out what the author means: for tube-based MPC, the green curve is the final result and the blue curve is the result without the adjustment trajectory.

Figure 4 Comparison of speed changes between the general MPC method and the tube-based MPC method

Figure 5 Lateral position comparison

Figure 6 Comparison of lateral and longitudinal errors

The author also compared the smoothness of the steering wheel angle changes, which I will not detail here; in short, it improved. The author also gives a theoretical reason why the adjustment trajectory works well: once it is added, the trajectory error always stays within the MRPI set. In other words, the tracking deviation of tube-based model predictive control (tube-based MPC) is always bounded by the MRPI set, whereas general model predictive control (MPC) has no such bound in an uncertain environment, so its deviation may become very large.
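To make the boundedness argument above concrete, here is a toy scalar illustration of a robust positively invariant interval. I assume a one-dimensional closed-loop error system e_next = a * e + w with a bounded disturbance |w| <= w_max; the values of `a` and `w_max` are made up. The paper's error system is multi-dimensional and its MRPI sets are computed for the subsystems, so this only shows the idea, not the author's computation.

```python
import random
from typing import List


def rpi_half_width(a: float, w_max: float) -> float:
    """Half-width r of the smallest interval [-r, r] that stays invariant
    under e_next = a * e + w with |w| <= w_max (scalar toy model, |a| < 1)."""
    if not abs(a) < 1.0:
        raise ValueError("closed-loop error dynamics must be stable: |a| < 1")
    return w_max / (1.0 - abs(a))


def simulate_error(a: float, w_max: float, steps: int, seed: int = 0) -> List[float]:
    """Simulate the tracking error under random bounded disturbances."""
    rng = random.Random(seed)
    e = 0.0
    history = [e]
    for _ in range(steps):
        w = rng.uniform(-w_max, w_max)  # disturbance from the uncertain environment
        e = a * e + w
        history.append(e)
    return history


A_CLOSED_LOOP = 0.8  # assumed closed-loop error gain
W_MAX = 0.05         # assumed disturbance bound in metres

radius = rpi_half_width(A_CLOSED_LOOP, W_MAX)
errors = simulate_error(A_CLOSED_LOOP, W_MAX, steps=10_000)
inside = all(abs(e) <= radius + 1e-12 for e in errors)
print(f"invariant half-width: {radius:.3f} m, error stayed inside: {inside}")
```

The interval is invariant because |e| <= r implies |a * e + w| <= |a| * r + w_max, which equals r exactly when r = w_max / (1 - |a|). If the closed-loop error dynamics are not stable (|a| >= 1), no such bound exists, which mirrors the unbounded deviation described for general MPC.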

(4) case2: dynamic obstacle avoidance scenario

Compared with the previous scenario, the obstacle vehicle is now moving. I will not go into detail about the overall trajectory, speed changes, lateral and longitudinal errors, or the smoothness of steering wheel changes. Only an illustration of the overall trajectory is shown here.

Figure 7 Comparison of the overall trajectory between the general MPC method and the tube-based MPC method

(5) case3: Real driving scenario

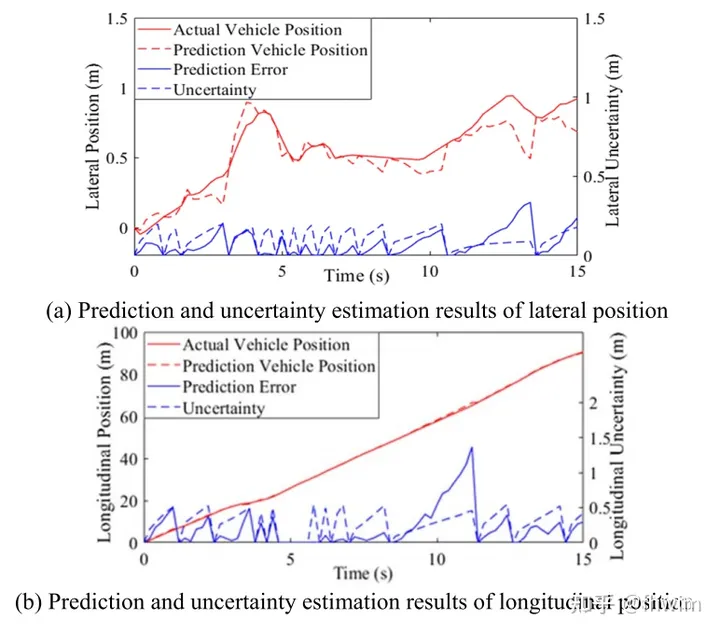

Here the author uses the NGSIM data set to verify the method. First, the author validated the fusion prediction method. The NGSIM data set contains vehicle trajectory data, which the author split into historical trajectories and future trajectories to construct a training set for the LSTM encoder-decoder. The author selected 10,000 trajectories, of which 7,500 were used as the training set and 2,500 as the validation set. The optimizer is Adam with a learning rate of 0.01. The prediction results are shown in the figure below.

Figure 8 Lateral and longitudinal trajectory prediction and uncertainty results
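The data preparation described above can be sketched roughly as follows. The history length of 30 points, the trajectory length of 50 points, and the synthetic straight-line trajectories standing in for NGSIM tracks are all my assumptions; the notes only give the 10,000 / 7,500 / 2,500 counts.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]
Trajectory = List[Point]
Sample = Tuple[Trajectory, Trajectory]  # (history, future)


def split_history_future(traj: Trajectory, history_len: int) -> Sample:
    """Cut one trajectory into an observed history and the future to predict."""
    if not 0 < history_len < len(traj):
        raise ValueError("history_len must leave at least one future point")
    return traj[:history_len], traj[history_len:]


def train_val_split(samples: List[Sample], train_fraction: float = 0.75,
                    seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Shuffle the samples and split them into training and validation sets."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def make_synthetic_trajectory(index: int, length: int = 50) -> Trajectory:
    """Placeholder for one NGSIM track: straight-line, constant-speed motion."""
    speed = 5.0 + (index % 10)  # m/s, arbitrary
    return [(speed * 0.1 * t, 0.0) for t in range(length)]


samples = [split_history_future(make_synthetic_trajectory(i), history_len=30)
           for i in range(10_000)]
train_set, val_set = train_val_split(samples)
print(len(train_set), len(val_set))
```

Each training sample is a (history, future) pair, which is the input/target layout an encoder-decoder model would consume.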

The author did not use metrics that are common in the trajectory prediction field, such as ADE and FDE. I find this unconvincing, but it is understandable given that the focus of the paper is trajectory planning based on tube-based MPC.
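For reference, the two metrics mentioned above are simple to compute: ADE (average displacement error) is the mean Euclidean distance between predicted and true positions over all predicted steps, and FDE (final displacement error) is that distance at the last step. Below is a minimal sketch; the sample trajectories are made-up numbers, not results from the paper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def ade(pred: Sequence[Point], truth: Sequence[Point]) -> float:
    """Average displacement error: mean distance over all predicted steps."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("trajectories must be non-empty and of equal length")
    return sum(math.dist(p, q) for p, q in zip(pred, truth)) / len(pred)


def fde(pred: Sequence[Point], truth: Sequence[Point]) -> float:
    """Final displacement error: distance at the last predicted step."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("trajectories must be non-empty and of equal length")
    return math.dist(pred[-1], truth[-1])


# Made-up example: the prediction drifts sideways from a straight true path.
true_future = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
predicted_future = [(0.0, 0.0), (1.0, 0.3), (2.0, 0.6)]

example_ade = ade(predicted_future, true_future)
example_fde = fde(predicted_future, true_future)
print(f"ADE = {example_ade:.2f} m, FDE = {example_fde:.2f} m")
```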

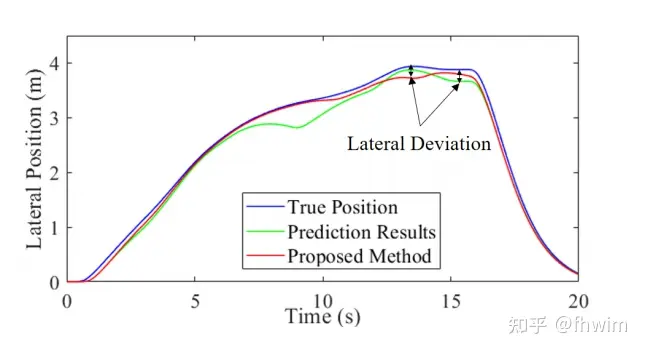

After verifying the trajectory prediction, the author performed trajectory planning to further verify the role of the trajectory prediction module. Three situations are compared:

(a) The true future trajectory of the obstacle vehicle is already known, and trajectory planning is performed directly. This serves as the control group.

(b) The future trajectory of the obstacle vehicle is unknown, so trajectory prediction is performed first (without calculating the uncertainty), followed by trajectory planning.

(c) The future trajectory of the obstacle vehicle is unknown, so trajectory prediction is performed first (with the uncertainty calculated), followed by trajectory planning.

Figure 9 shows the results of (a), (b) and (c), corresponding to True Position, Prediction Results and Proposed Method

Proposed Method is the result of the method in this article. It can be seen that the Proposed Method is closer to the True Position, indicating that this fusion prediction method (especially the calculation of uncertainty) is effective.

Figure 9 Comparison of three methods to verify the trajectory prediction module

Note that case1 and case2 verify the trajectory planning part: the trajectory prediction stage in front of general model predictive control (MPC) and tube-based MPC is the same, so the comparison isolates the role of tube-based MPC. Case3 verifies the trajectory prediction module, and two kinds of verification are performed. The first directly compares the predicted trajectory with the real trajectory. The second performs trajectory planning under three conditions: the future trajectory is known, the future trajectory is predicted without calculating uncertainty, and the future trajectory is predicted with uncertainty calculated. Using the result for the real position as the standard, the prediction method with uncertainty calculation is compared against the one without. The verification ideas for these two modules are very clear.

2. Real vehicle experimental verification

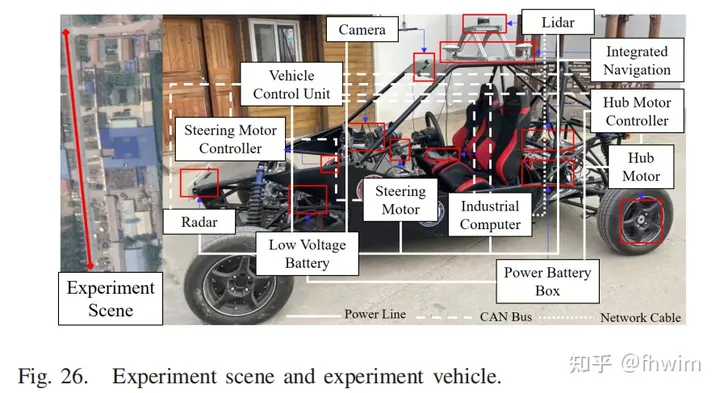

The vehicle used in the experiment is as shown in the figure below:

Figure 10 The vehicle used in the experiment

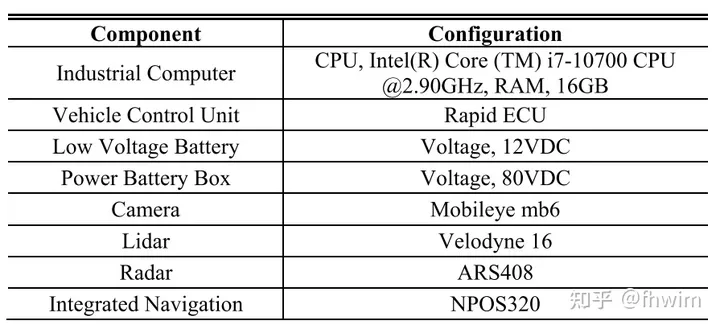

The author also provided the parameters of the experimental vehicle and the parameters of the computer and sensor used in the experiment:

Figure 11 Computer and sensor parameters

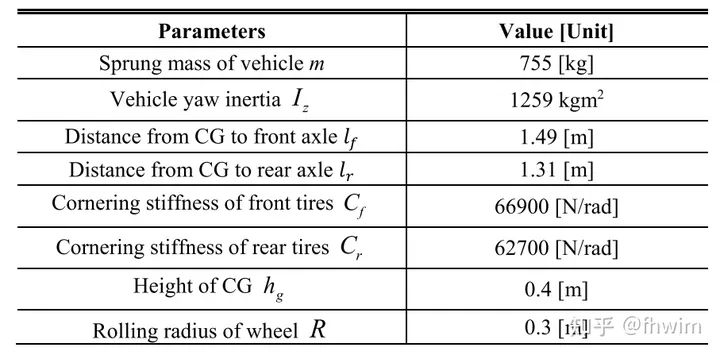

Figure 12 Parameters of the experimental vehicle

For safety reasons, the experimental scenario set up by the author is the same as simulation case1: a static obstacle avoidance scenario. The comparison again covers the overall trajectory, speed changes, lateral and longitudinal errors, and the smoothness of steering wheel changes, which I will not describe again.

3. Reading summary

First, the paper consists of a trajectory prediction module with uncertainty calculation and a trajectory planning module based on tube-based MPC, of which the planning module is the main content. I am very satisfied with this modular design because it truly applies trajectory prediction to trajectory planning: the output of prediction is the input of planning, the planning module only sets a safety threshold for the prediction module, and the coupling between the two modules is weak. In other words, the prediction module can be replaced by other methods as long as it provides the predicted trajectory of the obstacle vehicle and its uncertainty. In the future, more advanced neural networks could be considered to predict trajectories and uncertainties directly. Overall, the fusion prediction algorithm is a bit complicated, but I think the idea of the paper is very good. The design and workload of the simulation and real vehicle tests are also satisfactory.
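The weak coupling described above can be sketched as a small interface: any predictor that returns a trajectory plus an uncertainty can be plugged into the planner's safety check. Everything named here (`Prediction`, `ObstaclePredictor`, `ConstantVelocityPredictor`, `is_plan_safe`, the per-step uncertainty radius, and the way the threshold is inflated) is a hypothetical illustration of the idea, not the paper's formulation.

```python
import math
from dataclasses import dataclass
from typing import List, Protocol, Tuple

Point = Tuple[float, float]


@dataclass
class Prediction:
    positions: List[Point]    # predicted obstacle position per future step
    uncertainty: List[float]  # assumed: one uncertainty radius (m) per step


class ObstaclePredictor(Protocol):
    """Anything with this method can feed the planner."""
    def predict(self, history: List[Point], horizon: int) -> Prediction: ...


class ConstantVelocityPredictor:
    """Stand-in predictor; the paper uses a fusion prediction module instead."""

    def __init__(self, base_radius: float = 0.5, growth: float = 0.1) -> None:
        self.base_radius = base_radius
        self.growth = growth

    def predict(self, history: List[Point], horizon: int) -> Prediction:
        (x0, y0), (x1, y1) = history[-2], history[-1]
        vx, vy = x1 - x0, y1 - y0  # displacement per step
        steps = range(1, horizon + 1)
        positions = [(x1 + vx * k, y1 + vy * k) for k in steps]
        uncertainty = [self.base_radius + self.growth * k for k in steps]
        return Prediction(positions, uncertainty)


def is_plan_safe(plan: List[Point], prediction: Prediction,
                 safety_threshold: float) -> bool:
    """Reject a plan that comes closer to the obstacle than the safety
    threshold inflated by the predicted uncertainty at that step."""
    for ego, obstacle, radius in zip(plan, prediction.positions,
                                     prediction.uncertainty):
        if math.dist(ego, obstacle) < safety_threshold + radius:
            return False
    return True


predictor: ObstaclePredictor = ConstantVelocityPredictor()
prediction = predictor.predict(history=[(0.0, 0.0), (1.0, 0.0)], horizon=3)
adjacent_lane_plan = [(0.0, 3.5), (1.0, 3.5), (2.0, 3.5)]
same_lane_plan = [(1.5, 0.0), (2.5, 0.0), (3.5, 0.0)]
print(is_plan_safe(adjacent_lane_plan, prediction, safety_threshold=2.0))
print(is_plan_safe(same_lane_plan, prediction, safety_threshold=2.0))
```

Swapping in a learned predictor only requires implementing `predict` with the same return type, which is the kind of replaceability the notes praise.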

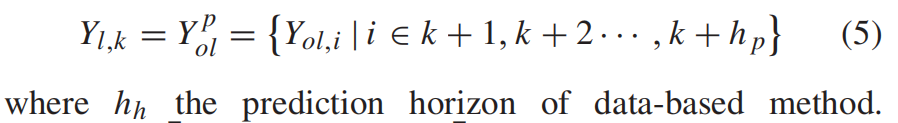

Second, there are some low-level errors that I noticed while reading the paper. For example, in the LSTM encoder-decoder part, the LSTM outputs the trajectory points of a number of future steps, and the formula is written that way too, but the text states it differently.

Figure 13 Some errors in the LSTM encoder-decoder part

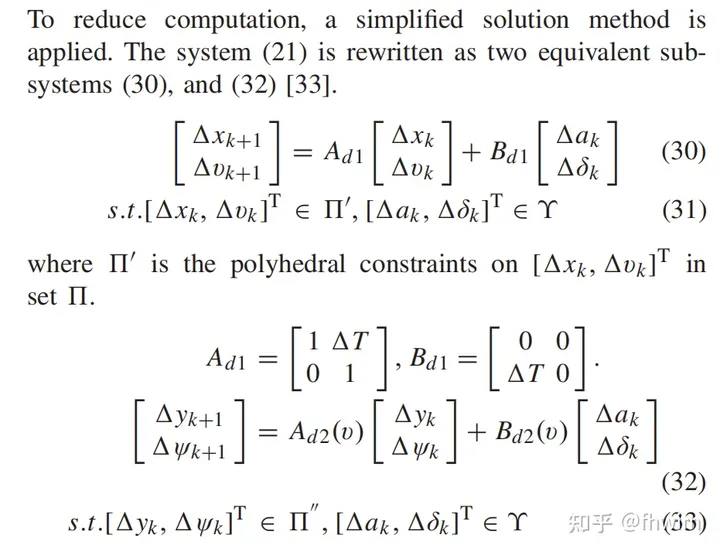

Then, in the simulation experiment part, when calculating the MRPI set, the paper says that system (21), the error system, is divided into subsystems (32) and (34), but it is actually divided into subsystems (30) and (32). These small errors do not affect the overall method, but they do affect the reading experience.

Figure 14 Simulation experiment part MRPI Set original text

Figure 15 The error system is divided into subsystems (30) and (32)

Original link: https://mp.weixin.qq.com/s/0DymvaPmiCc_tf3pUArRiA
