Human pose estimation problem in computer vision

Human pose estimation is an important research direction in computer vision. Its goal is to accurately extract the pose of the human body from images or videos, including joint positions and joint angles. It has wide applications in fields such as motion capture, human-computer interaction, and virtual reality. This article introduces the basic principles of human pose estimation and provides a specific code example.

The basic principle of human pose estimation is to infer the pose of the body by locating its key points in the image (such as the head, shoulders, hands, and feet). To achieve this, we can use deep learning models such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs).
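To make the idea of locating key points concrete, here is a minimal sketch of one common decoding step. It assumes, beyond what this article states, that the network outputs one heatmap per joint and that the joint sits at the peak of its heatmap. The function name `decode_heatmaps` and the array layout `(joints, height, width)` are hypothetical choices for illustration.

```python
import numpy as np

def decode_heatmaps(heatmaps):
    """Return one (x, y, score) row per joint from a (J, H, W) heatmap stack."""
    num_joints, height, width = heatmaps.shape
    flat = heatmaps.reshape(num_joints, -1)
    idx = flat.argmax(axis=1)    # position of the peak in each flattened map
    scores = flat.max(axis=1)    # peak value, used here as a confidence score
    ys, xs = np.unravel_index(idx, (height, width))
    return np.stack([xs, ys, scores], axis=1)

# Synthetic input (assumed data): two joints on a 4x5 grid
heatmaps = np.zeros((2, 4, 5), dtype=np.float32)
heatmaps[0, 1, 3] = 0.9   # joint 0 peaks at x=3, y=1
heatmaps[1, 2, 0] = 0.7   # joint 1 peaks at x=0, y=2

keypoints = decode_heatmaps(heatmaps)
print(keypoints)
```

Real systems usually refine the peak to sub-pixel precision and, for images with several people, group the key points by person; both steps are omitted here.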

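The following sample code sketches human pose estimation with the open source library OpenPose. The OpenPose class and its detect, draw_skeleton, and draw_keypoints methods form a simplified wrapper interface used for illustration; the official Python bindings (pyopenpose) expose a different API.

    import cv2
    from openpose import OpenPose  # illustrative wrapper, not the official pyopenpose module

    # Load the OpenPose model
    openpose = OpenPose("path/to/openpose/models")

    # Load the image and stop early if it cannot be read
    image = cv2.imread("path/to/image.jpg")
    if image is None:
        raise FileNotFoundError("could not read path/to/image.jpg")

    # Run the OpenPose model; the result is a list of poses
    poses = openpose.detect(image)

    # Draw the pose estimation results
    for pose in poses:
        # Draw the skeleton connections
        image = openpose.draw_skeleton(image, pose)
        # Draw the joint key points
        image = openpose.draw_keypoints(image, pose)

    # Display the image
    cv2.imshow("Pose Estimation", image)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

In the sample code above, we first import the necessary libraries, then load the OpenPose model and the image. Next, we run the model to detect poses; the result is a list containing one or more poses. Finally, we use the drawing functions of the wrapper to draw the pose estimation results, and display the image.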
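Once key points are available, joint angles can be derived from them. The following is a minimal sketch, assuming each key point is an (x, y) pair in pixel coordinates. The function name `joint_angle` and the sample coordinates are hypothetical and do not come from OpenPose.

```python
import math

def joint_angle(a, b, c):
    """Angle at b, in degrees, between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("coincident key points: angle is undefined")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] to guard against floating-point rounding
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))

# Assumed sample coordinates for shoulder, elbow, and wrist
shoulder, elbow, wrist = (100, 100), (150, 100), (150, 50)
elbow_angle = joint_angle(shoulder, elbow, wrist)
print(elbow_angle)
```

The angle is measured at the middle point, so passing shoulder, elbow, and wrist gives the elbow angle; a fully extended arm yields a value close to 180 degrees.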

Note that the sample code above is for demonstration purposes only. In practice, human pose estimation requires more elaborate pre-processing, post-processing, and parameter tuning. In addition, OpenPose, as an open source library, offers further features and options beyond those shown here.
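As one example of the post-processing mentioned above, low-confidence key points are often discarded before drawing or further analysis. The sketch below assumes each key point is an (x, y, score) tuple; the function names, the 0.3 threshold, and the sample values are hypothetical and would need tuning for a real model.

```python
def filter_keypoints(pose, min_score=0.3):
    """Replace low-confidence key points with None so later steps can skip them."""
    return [kp if kp[2] >= min_score else None for kp in pose]

def is_valid_pose(pose, min_score=0.3, min_visible=2):
    """Keep a pose only if enough of its key points pass the threshold."""
    visible = [kp for kp in filter_keypoints(pose, min_score) if kp is not None]
    return len(visible) >= min_visible

# Assumed sample pose: three key points as (x, y, score)
pose = [(120.0, 80.0, 0.92), (118.0, 140.0, 0.15), (90.0, 150.0, 0.64)]
filtered = filter_keypoints(pose)
print(filtered)
print(is_valid_pose(pose))
```

Keeping None placeholders, rather than dropping entries, preserves the index of each joint so that skeleton connections can still be looked up by position.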

In short, human pose estimation is an important problem in computer vision: it infers the pose of the human body by analyzing key points in the image. This article provided sample code for human pose estimation using the open source library OpenPose. Readers can pursue more in-depth research and development according to their own needs.

The above is the detailed content of Human pose estimation problem in computer vision. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1376

1376

52

52

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

What is NeRF? Is NeRF-based 3D reconstruction voxel-based?

Oct 16, 2023 am 11:33 AM

What is NeRF? Is NeRF-based 3D reconstruction voxel-based?

Oct 16, 2023 am 11:33 AM

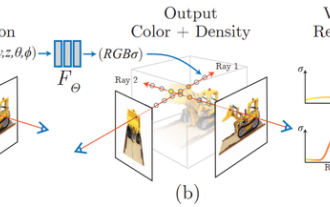

1 Introduction Neural Radiation Fields (NeRF) are a fairly new paradigm in the field of deep learning and computer vision. This technology was introduced in the ECCV2020 paper "NeRF: Representing Scenes as Neural Radiation Fields for View Synthesis" (which won the Best Paper Award) and has since become extremely popular, with nearly 800 citations to date [1 ]. The approach marks a sea change in the traditional way machine learning processes 3D data. Neural radiation field scene representation and differentiable rendering process: composite images by sampling 5D coordinates (position and viewing direction) along camera rays; feed these positions into an MLP to produce color and volumetric densities; and composite these values using volumetric rendering techniques image; the rendering function is differentiable, so it can be passed

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

A purely visual annotation solution mainly uses vision plus some data from GPS, IMU and wheel speed sensors for dynamic annotation. Of course, for mass production scenarios, it doesn’t have to be pure vision. Some mass-produced vehicles will have sensors like solid-state radar (AT128). If we create a data closed loop from the perspective of mass production and use all these sensors, we can effectively solve the problem of labeling dynamic objects. But there is no solid-state radar in our plan. Therefore, we will introduce this most common mass production labeling solution. The core of a purely visual annotation solution lies in high-precision pose reconstruction. We use the pose reconstruction scheme of Structure from Motion (SFM) to ensure reconstruction accuracy. But pass

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

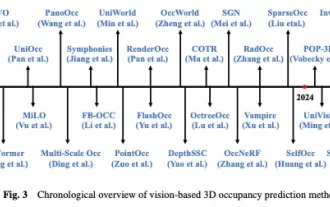

Written above & The author’s personal understanding In recent years, autonomous driving has received increasing attention due to its potential in reducing driver burden and improving driving safety. Vision-based three-dimensional occupancy prediction is an emerging perception task suitable for cost-effective and comprehensive investigation of autonomous driving safety. Although many studies have demonstrated the superiority of 3D occupancy prediction tools compared to object-centered perception tasks, there are still reviews dedicated to this rapidly developing field. This paper first introduces the background of vision-based 3D occupancy prediction and discusses the challenges encountered in this task. Next, we comprehensively discuss the current status and development trends of current 3D occupancy prediction methods from three aspects: feature enhancement, deployment friendliness, and labeling efficiency. at last

Point cloud registration is inescapable for 3D vision! Understand all mainstream solutions and challenges in one article

Apr 02, 2024 am 11:31 AM

Point cloud registration is inescapable for 3D vision! Understand all mainstream solutions and challenges in one article

Apr 02, 2024 am 11:31 AM

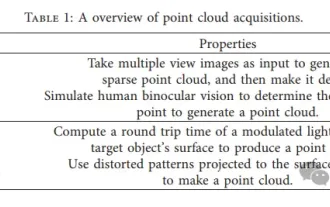

Point cloud, as a collection of points, is expected to bring about a change in acquiring and generating three-dimensional (3D) surface information of objects through 3D reconstruction, industrial inspection and robot operation. The most challenging but essential process is point cloud registration, i.e. obtaining a spatial transformation that aligns and matches two point clouds obtained in two different coordinates. This review introduces the overview and basic principles of point cloud registration, systematically classifies and compares various methods, and solves the technical problems existing in point cloud registration, trying to provide academic researchers outside the field and Engineers provide guidance and facilitate discussions on a unified vision for point cloud registration. The general method of point cloud acquisition is divided into active and passive methods. The point cloud actively acquired by the sensor is the active method, and the point cloud is reconstructed later.

You can play Genshin Impact just by moving your mouth! Use AI to switch characters and attack enemies. Netizen: 'Ayaka, use Kamiri-ryu Frost Destruction'

May 13, 2023 pm 07:52 PM

You can play Genshin Impact just by moving your mouth! Use AI to switch characters and attack enemies. Netizen: 'Ayaka, use Kamiri-ryu Frost Destruction'

May 13, 2023 pm 07:52 PM

When it comes to domestic games that have become popular all over the world in the past two years, Genshin Impact definitely takes the cake. According to this year’s Q1 quarter mobile game revenue survey report released in May, “Genshin Impact” firmly won the first place among card-drawing mobile games with an absolute advantage of 567 million U.S. dollars. This also announced that “Genshin Impact” has been online in just 18 years. A few months later, total revenue from the mobile platform alone exceeded US$3 billion (approximately RM13 billion). Now, the last 2.8 island version before the opening of Xumi is long overdue. After a long draft period, there are finally new plots and areas to play. But I don’t know how many “Liver Emperors” there are. Now that the island has been fully explored, grass has begun to grow again. There are a total of 182 treasure chests + 1 Mora box (not included). There is no need to worry about the long grass period. The Genshin Impact area is never short of work. No, during the long grass

AAAI2024: Far3D - Innovative idea of directly reaching 150m visual 3D target detection

Dec 15, 2023 pm 01:54 PM

AAAI2024: Far3D - Innovative idea of directly reaching 150m visual 3D target detection

Dec 15, 2023 pm 01:54 PM

Recently, I read a latest research on pure visual surround perception on Arxiv. This research is based on the PETR series of methods and focuses on solving the pure visual perception problem of long-distance target detection, extending the perception range to 150 meters. The methods and results of this paper have great reference value for us, so I tried to interpret it. Original title: Far3D: Expanding the Horizon for Surround-view3DObject Detection Paper link: https://arxiv.org/abs/2308.09616 Author affiliation :Beijing Institute of Technology & Megvii Technology Task Background 3D Object Detection in Understanding Autonomous Driving

Yan Shuicheng took charge and established the ultimate form of the 'universal visual multi-modal large model'! Unified understanding/generation/segmentation/editing

Apr 25, 2024 pm 08:04 PM

Yan Shuicheng took charge and established the ultimate form of the 'universal visual multi-modal large model'! Unified understanding/generation/segmentation/editing

Apr 25, 2024 pm 08:04 PM

Recently, Professor Yan Shuicheng's team jointly released and open sourced Vitron's universal pixel-level visual multi-modal large language model. Project homepage & Demo: https://vitron-llm.github.io/ Paper link: https://is.gd/aGu0VV Open source code: https://github.com/SkyworkAI/Vitron This is a heavy-duty general vision The multi-modal large model supports a series of visual tasks from visual understanding to visual generation, from low level to high level. It solves the image/video model separation problem that has plagued the large language model industry for a long time, and provides a comprehensive unified static image. Understanding, generating, segmenting, and editing dynamic video content