Multi-target tracking problem in target detection technology

Abstract:

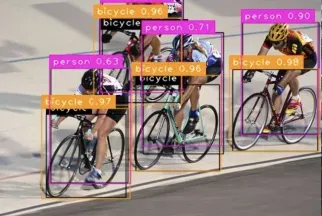

Object detection is one of the most active research directions in computer vision. It aims to identify and locate objects of interest in images or videos. However, target detection alone cannot meet practical needs, because in real scenes targets change continuously in time and space. Multi-target tracking technology aims to solve this problem: it tracks the positions of multiple targets throughout a video and continuously updates their states.

Introduction:

With the continuous development of computer hardware and algorithms, target detection algorithms have made significant progress. From the earliest feature-based algorithms to today's deep learning-based ones, both the accuracy and the speed of target detection have improved greatly. However, target detection alone cannot meet the needs of practical applications: in many scenarios, such as traffic monitoring and pedestrian tracking, multiple targets in a video need to be tracked. This article introduces the multi-target tracking problem in target detection and provides concrete code examples to help readers understand and practice it.

1. Definition and challenges of multi-target tracking

Multi-target tracking refers to using a target detection algorithm to identify the targets in each frame of a continuous video sequence and then tracking their locations and states over time. Because targets in video sequences often change in scale, deform, or become occluded, and may appear and disappear, multi-target tracking is a challenging problem. Its main challenges are the following:

- Target re-identification: Multi-target tracking must tell different targets apart and keep track of each one's state. Because a target may deform or be occluded during tracking, the algorithm must cope with recognition errors caused by changes in the target's appearance.

- Occlusion handling: In real scenes, targets frequently occlude one another. When a target is hidden by other objects, additional techniques are needed to keep tracking it continuously.

- Target appearance and disappearance: Targets may suddenly enter or leave a video sequence. The tracking algorithm must automatically detect when targets appear and disappear and handle them accordingly.
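The challenges above mostly surface in data association, the step that decides which detection in the current frame belongs to which existing track. The following minimal Python sketch illustrates that step under stated assumptions; it is not taken from a specific tracker. Boxes are assumed to be `[x, y, w, h]` lists, overlap is scored with intersection-over-union (IoU), and pairs are matched greedily; the function names `iou` and `associate` and the 0.3 threshold are illustrative. Unmatched detections are treated as newly appearing targets, and unmatched tracks as targets that may be occluded or have left the scene.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as [x, y, w, h]."""
    x1 = max(a[0], b[0])
    y1 = max(a[1], b[1])
    x2 = min(a[0] + a[2], b[0] + b[2])
    y2 = min(a[1] + a[3], b[1] + b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, iou_threshold=0.3):
    """Greedily match tracks to detections by descending IoU (illustrative).

    Returns (matches, unmatched_tracks, unmatched_detections), where
    matches is a list of (track_index, detection_index) pairs.
    """
    pairs = [
        (iou(t, d), ti, di)
        for ti, t in enumerate(tracks)
        for di, d in enumerate(detections)
    ]
    pairs.sort(reverse=True)  # best overlaps first

    matches, used_t, used_d = [], set(), set()
    for score, ti, di in pairs:
        if score < iou_threshold:
            break  # remaining pairs overlap too little to be the same target
        if ti in used_t or di in used_d:
            continue
        matches.append((ti, di))
        used_t.add(ti)
        used_d.add(di)

    # Unmatched detections: candidate new targets (appearance).
    # Unmatched tracks: occluded or departed targets (disappearance).
    unmatched_t = [i for i in range(len(tracks)) if i not in used_t]
    unmatched_d = [i for i in range(len(detections)) if i not in used_d]
    return matches, unmatched_t, unmatched_d
```

For example, with tracks `[[10, 10, 20, 20], [100, 100, 30, 30]]` and detections `[[12, 11, 20, 20], [300, 50, 25, 25]]`, `associate` returns `([(0, 0)], [1], [1])`: the first track continues, the second goes unmatched, and the second detection would start a new track. Trackers such as SORT solve this assignment optimally with the Hungarian algorithm (for example via `scipy.optimize.linear_sum_assignment`); the greedy loop simply keeps the sketch dependency-free.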

2. Multi-target tracking algorithm

Currently, multi-target tracking algorithms fall into two main categories: those based on traditional image processing methods and those based on deep learning.

Multi-target tracking algorithms based on traditional image processing methods mainly include the Kalman filter, the particle filter, and maximum a posteriori (MAP) estimation. Among them, the Kalman filter is one of the most common: it tracks a target by repeatedly predicting its state and then updating that prediction with new measurements.
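To make the predict-update cycle concrete, here is a minimal constant-velocity Kalman filter for a single target's centre point, written with NumPy. It is a sketch for illustration rather than a tuned tracker: the class name, the state layout `[x, y, vx, vy]`, the time step `dt`, and the noise levels `q` and `r` are assumptions chosen for readability.

```python
import numpy as np


class ConstantVelocityKalman:
    """Kalman filter with state [x, y, vx, vy] and measurement [x, y]."""

    def __init__(self, x, y, dt=1.0, q=1e-2, r=1.0):
        self.x = np.array([x, y, 0.0, 0.0])            # state estimate
        self.P = np.eye(4) * 10.0                       # state covariance (velocity unknown)
        self.F = np.array([[1, 0, dt, 0],               # state transition:
                           [0, 1, 0, dt],               # position += velocity * dt
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0],                # only position is measured
                           [0, 1, 0, 0]], dtype=float)
        self.Q = np.eye(4) * q                          # process noise covariance
        self.R = np.eye(2) * r                          # measurement noise covariance

    def predict(self):
        """Project the state one time step ahead and return the predicted position."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, zx, zy):
        """Correct the prediction with a measured position (e.g. a detection centre)."""
        z = np.array([zx, zy])
        y = z - self.H @ self.x                         # innovation
        S = self.H @ self.P @ self.H.T + self.R         # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)        # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2]
```

In use, `predict()` is called once per frame and `update()` whenever a detection is associated with the target; if the target is occluded, `update()` can be skipped and the filter keeps extrapolating from its velocity estimate. For a target moving 2 pixels per frame along x, the velocity estimate `kf.x[2]` approaches 2 after a few frames.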

Deep learning-based multi-target tracking algorithms build on a target detector and add tracking modules to follow targets continuously. For example, combining a detection model with temporal information and a tracking model makes it possible to track dynamic targets.
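As one example of such a tracking module, DeepSORT-style trackers compare appearance embeddings produced by a re-identification network, so a target can be recovered after a pose change or a short occlusion. The sketch below covers only the matching step and makes several assumptions: the embeddings come from some CNN that is not shown (plain vectors stand in for them), similarity is measured with cosine distance, and the function names and the `max_distance=0.5` gate are hypothetical.

```python
import numpy as np


def cosine_distance_matrix(track_feats, det_feats):
    """Pairwise cosine distance (1 - cosine similarity) between two sets of embeddings."""
    a = np.asarray(track_feats, dtype=float)
    b = np.asarray(det_feats, dtype=float)
    a = a / np.linalg.norm(a, axis=1, keepdims=True)  # L2-normalise each row
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return 1.0 - a @ b.T


def match_by_appearance(track_feats, det_feats, max_distance=0.5):
    """Greedily pair tracks and detections whose embeddings are most similar.

    Returns a dict {track_index: detection_index}; pairs farther apart than
    max_distance are rejected as different identities.
    """
    dist = cosine_distance_matrix(track_feats, det_feats)
    matches = {}
    used_dets = set()
    # Visit candidate pairs from most to least similar.
    for flat in np.argsort(dist, axis=None):
        ti, di = np.unravel_index(flat, dist.shape)
        ti, di = int(ti), int(di)
        if dist[ti, di] > max_distance:
            break  # all remaining pairs are even less similar
        if ti in matches or di in used_dets:
            continue
        matches[ti] = di
        used_dets.add(di)
    return matches
```

For instance, `match_by_appearance([[1, 0, 0], [0, 1, 0]], [[0, 0.9, 0.1], [0.95, 0.05, 0], [0, 0, 1]])` returns `{0: 1, 1: 0}`, and the third detection, which resembles neither track, stays unmatched. Real trackers usually combine this appearance cost with a motion cost from a Kalman filter before solving the assignment.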

3. Code example of multi-target tracking

In this article, we use Python and the OpenCV library to give a multi-target tracking example based on the Kalman filter. First, import the necessary libraries:

```python
import cv2
import numpy as np
```

Next, we need to define a class to implement target tracking:

```python
class MultiObjectTracker:
    def __init__(self):
        self.kalman_filters = []  # one Kalman filter per tracked target
        self.tracks = []          # current box [x, y, w, h] of each target

    def update(self, detections):
        # Match detections to tracks and update the Kalman filters (left to the reader)
        pass

    def draw_tracks(self, frame):
        pass
```

In the update function, we obtain the detection results for the current frame and then use the Kalman filter to track the targets. The specific implementation is omitted here; readers can write it according to their own needs.
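Since the article leaves `update` unimplemented, here is one possible way to fill it in, offered as a sketch rather than the author's code. Assumptions: detections arrive as `[x, y, w, h]` boxes (the format `draw_tracks` reads); each track keeps a small NumPy Kalman filter over its box centre (the hypothetical helper `_CentreKalman`, used instead of `cv2.KalmanFilter` so the sketch runs without OpenCV); detections are matched to the nearest predicted centre within a hypothetical `max_dist` gate; and tracks missed for more than a hypothetical `max_missed` frames are dropped.

```python
import numpy as np


class _CentreKalman:
    """Constant-velocity Kalman filter over a box centre: state [cx, cy, vx, vy]."""

    F = np.array([[1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0], [0, 0, 0, 1]], dtype=float)
    H = np.array([[1, 0, 0, 0], [0, 1, 0, 0]], dtype=float)

    def __init__(self, cx, cy, q=1e-2, r=1.0):
        self.x = np.array([cx, cy, 0.0, 0.0])
        self.P = np.eye(4) * 10.0
        self.Q = np.eye(4) * q
        self.R = np.eye(2) * r

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def correct(self, cx, cy):
        y = np.array([cx, cy]) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P


class MultiObjectTracker:
    def __init__(self, max_dist=50.0, max_missed=5):
        self.kalman_filters = []   # one filter per track
        self.tracks = []           # boxes [x, y, w, h], as read by draw_tracks
        self.missed = []           # consecutive frames without a matching detection
        self.max_dist = max_dist
        self.max_missed = max_missed

    def update(self, detections):
        # 1. Predict where every existing track's centre should be now.
        predicted = [kf.predict() for kf in self.kalman_filters]

        # 2. Greedy nearest-centre matching, closest pairs first.
        centres = [(x + w / 2, y + h / 2) for x, y, w, h in detections]
        pairs = sorted(
            (np.hypot(p[0] - c[0], p[1] - c[1]), ti, di)
            for ti, p in enumerate(predicted)
            for di, c in enumerate(centres)
        )
        used_t, used_d = set(), set()
        for dist, ti, di in pairs:
            if dist > self.max_dist:
                break
            if ti in used_t or di in used_d:
                continue
            used_t.add(ti)
            used_d.add(di)
            # 3. Correct the matched track's filter; display the detected box.
            self.kalman_filters[ti].correct(*centres[di])
            self.tracks[ti] = list(detections[di])
            self.missed[ti] = 0

        # 4. Unmatched tracks coast on their prediction (e.g. during occlusion).
        for ti in range(len(self.tracks)):
            if ti not in used_t:
                self.missed[ti] += 1
                cx, cy = self.kalman_filters[ti].x[:2]
                _, _, w, h = self.tracks[ti]
                self.tracks[ti] = [cx - w / 2, cy - h / 2, w, h]

        # 5. Drop tracks that have been missing too long (disappearance).
        keep = [i for i, m in enumerate(self.missed) if m <= self.max_missed]
        self.kalman_filters = [self.kalman_filters[i] for i in keep]
        self.tracks = [self.tracks[i] for i in keep]
        self.missed = [self.missed[i] for i in keep]

        # 6. Start new tracks for unmatched detections (appearance).
        for di, (cx, cy) in enumerate(centres):
            if di not in used_d:
                self.kalman_filters.append(_CentreKalman(cx, cy))
                self.tracks.append(list(detections[di]))
                self.missed.append(0)
```

To use it, add the article's `draw_tracks` method to this class unchanged; `main` can then call `update` and `draw_tracks` on every frame as before. Matching on centre distance is the simplest possible cost; an overlap (IoU) or appearance-based cost is a common replacement.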

In the draw_tracks function, we need to draw the tracking results on the image:

```python
def draw_tracks(self, frame):
    # Each track is a box [x, y, w, h]; draw it as a green (BGR) rectangle
    for track in self.tracks:
        start_point = (int(track[0]), int(track[1]))                      # top-left corner
        end_point = (int(track[0] + track[2]), int(track[1] + track[3]))  # bottom-right corner
        cv2.rectangle(frame, start_point, end_point, (0, 255, 0), 2)
```

Finally, we can write a main function that calls the tracker and processes the video sequence:

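```python
def detect_objects(frame):
    # Placeholder: plug in any target detector here.
    # It should return a list of boxes [x, y, w, h] for the current frame.
    return []


def main():
    tracker = MultiObjectTracker()
    video = cv2.VideoCapture("input.mp4")

    while True:
        ret, frame = video.read()
        if not ret:
            break

        # Target detection: get the detection results for the current frame
        detections = detect_objects(frame)

        # Track the targets
        tracker.update(detections)

        # Draw the tracking results
        tracker.draw_tracks(frame)

        # Display the result
        cv2.imshow("Multi-Object Tracking", frame)
        if cv2.waitKey(1) & 0xFF == ord("q"):
            break

    video.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
```

In this code, we first create a MultiObjectTracker object and open the video file to be processed. We then read the video frame by frame, run detection and tracking on each frame, and display the tracking results in a window. Pressing the 'q' key exits the program. Note that `detect_objects` is not defined in the original example; the version above is only a placeholder that returns no detections and should be replaced with a real detector.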

Conclusion:

Multi-target tracking builds on target detection and follows multiple targets through a video sequence by continuously tracking how they change in time and space. This article briefly introduced the definition of multi-target tracking and its main algorithms, and provided a code framework for Kalman filter-based tracking. Readers can modify and extend it according to their own needs to further explore the research and application of multi-target tracking.

The above is the detailed content of Multi-target tracking problem in target detection technology. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

The first pilot and key article mainly introduces several commonly used coordinate systems in autonomous driving technology, and how to complete the correlation and conversion between them, and finally build a unified environment model. The focus here is to understand the conversion from vehicle to camera rigid body (external parameters), camera to image conversion (internal parameters), and image to pixel unit conversion. The conversion from 3D to 2D will have corresponding distortion, translation, etc. Key points: The vehicle coordinate system and the camera body coordinate system need to be rewritten: the plane coordinate system and the pixel coordinate system. Difficulty: image distortion must be considered. Both de-distortion and distortion addition are compensated on the image plane. 2. Introduction There are four vision systems in total. Coordinate system: pixel plane coordinate system (u, v), image coordinate system (x, y), camera coordinate system () and world coordinate system (). There is a relationship between each coordinate system,

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

Multi-grid redundant bounding box annotation for accurate object detection

Jun 01, 2024 pm 09:46 PM

Multi-grid redundant bounding box annotation for accurate object detection

Jun 01, 2024 pm 09:46 PM

1. Introduction Currently, the leading object detectors are two-stage or single-stage networks based on the repurposed backbone classifier network of deep CNN. YOLOv3 is one such well-known state-of-the-art single-stage detector that receives an input image and divides it into an equal-sized grid matrix. Grid cells with target centers are responsible for detecting specific targets. What I’m sharing today is a new mathematical method that allocates multiple grids to each target to achieve accurate tight-fit bounding box prediction. The researchers also proposed an effective offline copy-paste data enhancement for target detection. The newly proposed method significantly outperforms some current state-of-the-art object detectors and promises better performance. 2. The background target detection network is designed to use

New SOTA for target detection: YOLOv9 comes out, and the new architecture brings traditional convolution back to life

Feb 23, 2024 pm 12:49 PM

New SOTA for target detection: YOLOv9 comes out, and the new architecture brings traditional convolution back to life

Feb 23, 2024 pm 12:49 PM

In the field of target detection, YOLOv9 continues to make progress in the implementation process. By adopting new architecture and methods, it effectively improves the parameter utilization of traditional convolution, which makes its performance far superior to previous generation products. More than a year after YOLOv8 was officially released in January 2023, YOLOv9 is finally here! Since Joseph Redmon, Ali Farhadi and others proposed the first-generation YOLO model in 2015, researchers in the field of target detection have updated and iterated it many times. YOLO is a prediction system based on global information of images, and its model performance is continuously enhanced. By continuously improving algorithms and technologies, researchers have achieved remarkable results, making YOLO increasingly powerful in target detection tasks.

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

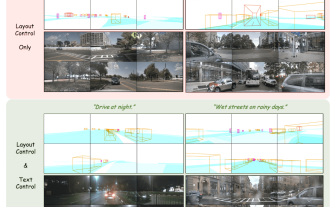

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

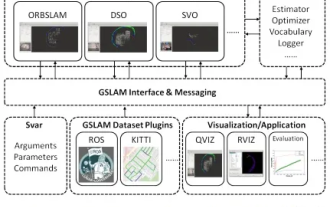

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

Suddenly discovered a 19-year-old paper GSLAM: A General SLAM Framework and Benchmark open source code: https://github.com/zdzhaoyong/GSLAM Go directly to the full text and feel the quality of this work ~ 1 Abstract SLAM technology has achieved many successes recently and attracted many attracted the attention of high-tech companies. However, how to effectively perform benchmarks on speed, robustness, and portability with interfaces to existing or emerging algorithms remains a problem. In this paper, a new SLAM platform called GSLAM is proposed, which not only provides evaluation capabilities but also provides researchers with a useful way to quickly develop their own SLAM systems.