Reward design issues in reinforcement learning

This article discusses reward design issues in reinforcement learning, with specific code examples.

Reinforcement learning is a machine learning method in which an agent learns, through interaction with the environment, how to take actions that maximize cumulative reward. The reward plays a crucial role: it is the signal that guides the agent's behavior during learning. However, reward design is a challenging problem, and how well the reward is designed can greatly affect the performance of reinforcement learning algorithms.
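To make "cumulative reward" concrete, here is a minimal sketch that folds a sequence of rewards into a single discounted return. The discount factor `gamma` and the function name `discounted_return` are assumptions for illustration; the article does not specify either.

```python
def discounted_return(rewards, gamma=0.99):
    """Return sum over t of gamma**t * rewards[t], accumulated from the last step backwards."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total

# Hypothetical episode: no reward until the final step.
episode_rewards = [0.0, 0.0, 0.0, 1.0]
print(discounted_return(episode_rewards, gamma=0.9))
```

With `gamma` below 1, a reward that arrives later contributes less to the return, so the agent is nudged toward reaching rewarding states sooner.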

In reinforcement learning, rewards can be seen as a channel of communication between the environment and the agent: they tell the agent how good or bad its current action is. Broadly speaking, rewards fall into two types: sparse rewards and dense rewards. Sparse rewards are given at only a few specific time steps in the task, while dense rewards provide a signal at every time step. Dense rewards usually make it easier for the agent to learn a good action strategy because they provide more feedback. However, sparse rewards are more common in real-world tasks, which makes reward design challenging.
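The difference between the two reward types can be sketched on a toy task. The example below assumes a hypothetical agent moving along a 1-D line toward a goal position; `GOAL`, `sparse_reward`, and `dense_reward` are illustrative names, not part of any library.

```python
GOAL = 10  # hypothetical goal position on a 1-D line

def sparse_reward(position):
    """Reward only when the goal is reached; zero everywhere else."""
    return 1.0 if position == GOAL else 0.0

def dense_reward(position):
    """Feedback at every step: the closer to the goal, the higher the reward."""
    return float(-abs(GOAL - position))

for position in (0, 5, 9, 10):
    print(position, sparse_reward(position), dense_reward(position))
```

Under the sparse version, positions 0, 5, and 9 all look identical to the agent, whereas the dense version tells it at every step whether it is getting closer.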

The goal of reward design is to provide the agent with the most accurate feedback signal possible so that it can learn the best strategy quickly and effectively. In most cases, we want a reward function that gives a high reward when the agent reaches a predetermined goal, and a low reward or penalty when the agent makes a wrong decision. However, designing a reasonable reward function is not an easy task.
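A reward function of this shape might look like the following minimal sketch for a hypothetical grid world. The cell coordinates, the reward magnitudes, and the small per-step cost are all assumptions chosen for illustration.

```python
GOAL_CELL = (3, 3)       # hypothetical target cell
HAZARD_CELLS = {(1, 2)}  # hypothetical cells the agent should avoid

def grid_reward(next_cell):
    """Reward for entering next_cell in a hypothetical grid world."""
    if next_cell == GOAL_CELL:
        return 1.0    # high reward for reaching the predetermined goal
    if next_cell in HAZARD_CELLS:
        return -1.0   # penalty for a wrong decision
    return -0.01      # assumed small step cost that discourages wandering

# Total reward along one hypothetical path that passes through the hazard.
path = [(0, 1), (1, 1), (1, 2), (2, 2), (3, 2), (3, 3)]
print(sum(grid_reward(cell) for cell in path))
```

Even in a sketch this small, the relative magnitudes matter: changing the step cost or the hazard penalty changes which paths the agent ends up preferring, which is part of why reward design is hard.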

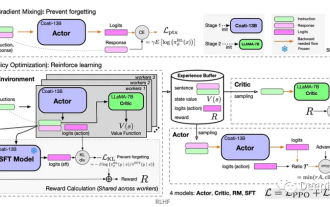

To address the reward design problem, a common approach is to use demonstrations from human experts to guide the agent's learning. In this case, the human expert provides the agent with a series of sample action sequences and their rewards. The agent learns from these samples to become familiar with the task, then gradually improves its strategy in subsequent interactions. This method can reduce the burden of hand-designing a reward, but it also increases labor costs, and the expert's samples may not be completely correct.
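One simple way to learn from demonstrations is behavior cloning, sketched below in tabular form. This is an assumption about how the demonstrations might be used, not the article's own method: it uses only (state, action) pairs, ignores the rewards, and the state and action names are hypothetical.

```python
from collections import Counter, defaultdict

def clone_policy(demonstrations):
    """Map each state to the action the expert chose most often in it."""
    action_counts = defaultdict(Counter)
    for state, action in demonstrations:
        action_counts[state][action] += 1
    return {state: counts.most_common(1)[0][0]
            for state, counts in action_counts.items()}

# Hypothetical expert data; the ("start", "up") pair plays the role of an expert mistake.
demonstrations = [
    ("start", "right"), ("start", "right"), ("start", "up"),
    ("middle", "up"), ("middle", "up"),
]
policy = clone_policy(demonstrations)
print(policy)
```

The majority vote tolerates an occasional expert mistake, but an expert who is consistently wrong in some state would simply be copied, which illustrates the risk of imperfect samples.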

Another approach is to use inverse reinforcement learning (IRL). Inverse reinforcement learning is a method of deriving a reward function from observed behavior. It assumes that the observed agent is trying to maximize some underlying reward function. By working backwards from the observed behavior to this underlying reward function, the learning agent can be given a more accurate reward signal. The core idea of inverse reinforcement learning is to interpret the observed behavior as an optimal strategy and to guide the agent's learning by deducing the reward function that this strategy optimizes.
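As a rough illustration of this idea, the sketch below assumes the reward is linear in state features and looks for a weight direction under which the expert's behavior scores higher than some baseline behavior. This corresponds only to the first step of feature-matching style methods, not a complete inverse reinforcement learning algorithm; the data, `gamma`, and all names are hypothetical.

```python
import numpy as np

def feature_expectations(trajectories, gamma=0.9):
    """Average discounted sum of state feature vectors over a set of trajectories."""
    totals = []
    for trajectory in trajectories:
        features = np.asarray(trajectory, dtype=float)
        discounts = gamma ** np.arange(len(features))
        totals.append(discounts @ features)
    return np.mean(totals, axis=0)

# Hypothetical data: each trajectory is a list of 2-D state feature vectors.
expert_demos = [[[1, 0], [1, 0], [1, 1]], [[1, 0], [1, 1], [1, 1]]]
baseline_demos = [[[0, 1], [0, 1], [0, 0]], [[0, 0], [0, 1], [0, 0]]]

# Unit-length reward direction under which the expert outscores the baseline.
direction = feature_expectations(expert_demos) - feature_expectations(baseline_demos)
direction /= np.linalg.norm(direction)
print(direction)
```

A full method would typically alternate between updating such weights and retraining a policy against them until the learned policy's feature expectations approach the expert's.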

The following is a simple code example of inverse reinforcement learning, demonstrating how to infer the reward function from the observed behavior:

    import numpy as np

    def inverse_reinforcement_learning(expert_trajectories):
        # Treat each row as the feature vector of a state the expert visited
        feature_matrix = np.asarray(expert_trajectories, dtype=float)

        # Target: every expert-visited state should receive a reward close to 1
        targets = np.ones(len(feature_matrix))

        # Solve for the weight vector of the reward function with least squares
        weights = np.linalg.lstsq(feature_matrix, targets, rcond=None)[0]
        return weights

    # Example trajectory data: one state feature vector per row
    expert_trajectories = np.array([[1, 1], [1, 2], [2, 1], [2, 2]])

    # Estimate the weight vector of the reward function from the expert data
    weights = inverse_reinforcement_learning(expert_trajectories)
    print("Reward function weight vector:", weights)

The code above uses the least squares method to solve for the weight vector of a linear reward function, chosen so that the states the expert visited receive a reward close to 1. The weight vector can then be used to calculate the reward of any state feature vector. This is a heavily simplified illustration; complete inverse reinforcement learning methods typically also compare the expert's behavior against alternative behavior. Through inverse reinforcement learning, a reasonable reward function can be learned from sample data to guide the agent's learning process.
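Once a weight vector is available, applying it is a single dot product. The sketch below assumes a linear reward model; the weights shown are hypothetical placeholders rather than the output of any particular fit.

```python
import numpy as np

def linear_reward(weights, state_features):
    """Reward of a state under a linear model: the dot product of weights and features."""
    return float(np.dot(weights, state_features))

# Hypothetical weights; in practice they would come from the fitting step.
example_weights = np.array([0.3, 0.3])
for state_features in ([1, 1], [2, 2], [0, 0]):
    print(state_features, linear_reward(example_weights, state_features))
```

Because the model is linear, states whose features resemble those in the expert data receive similar rewards, while states far from the expert data may receive rewards that mean little.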

In summary, reward design is an important and challenging issue in reinforcement learning, and how well the reward is designed can greatly affect the performance of reinforcement learning algorithms. Methods such as learning from human expert demonstrations or inverse reinforcement learning can ease the reward design problem and provide the agent with more accurate reward signals to guide its learning process.
