Impressive benchmark scores revealed, outperforming the H100! What is NVIDIA's most powerful AI chip, the GH200?

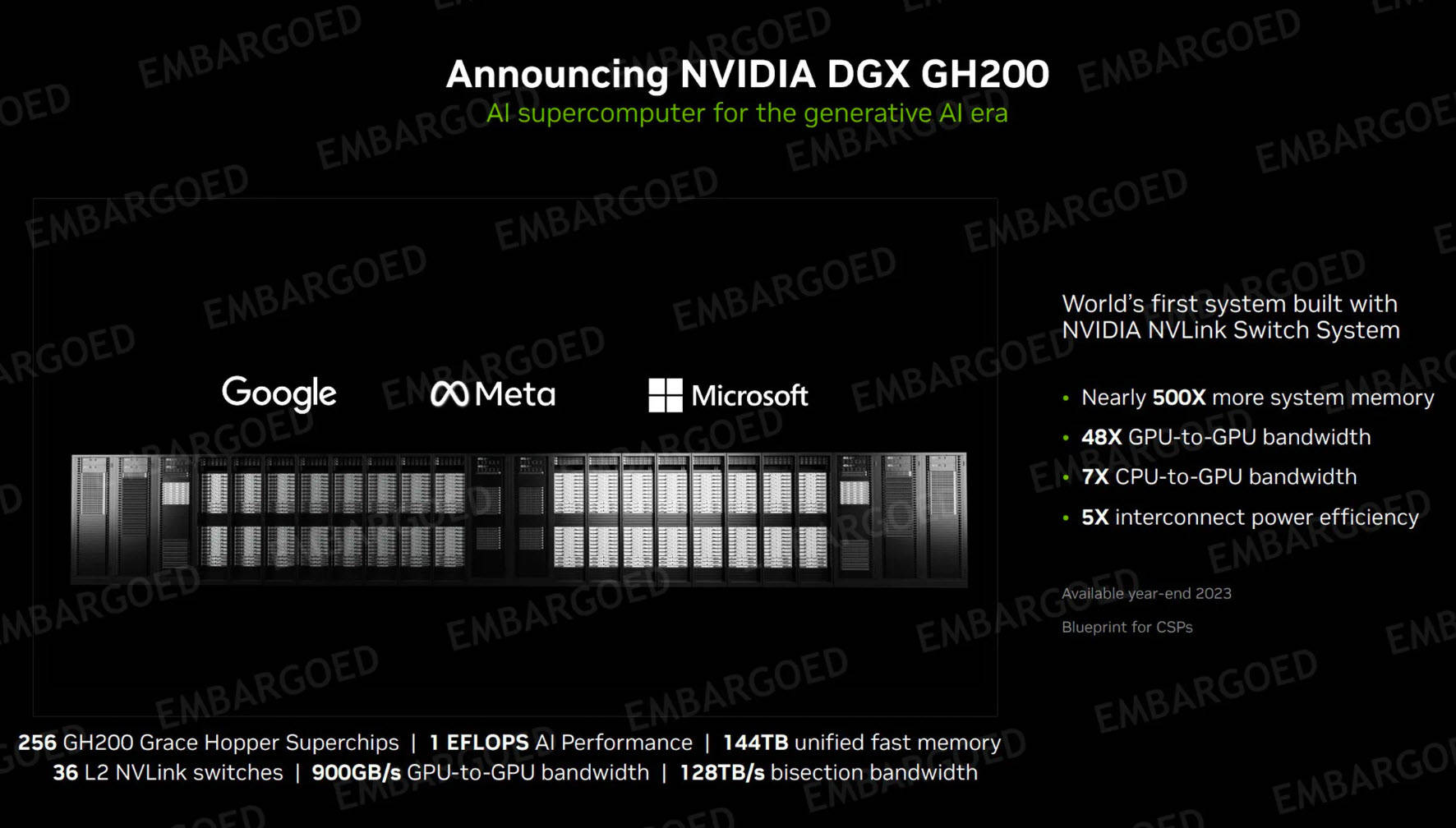

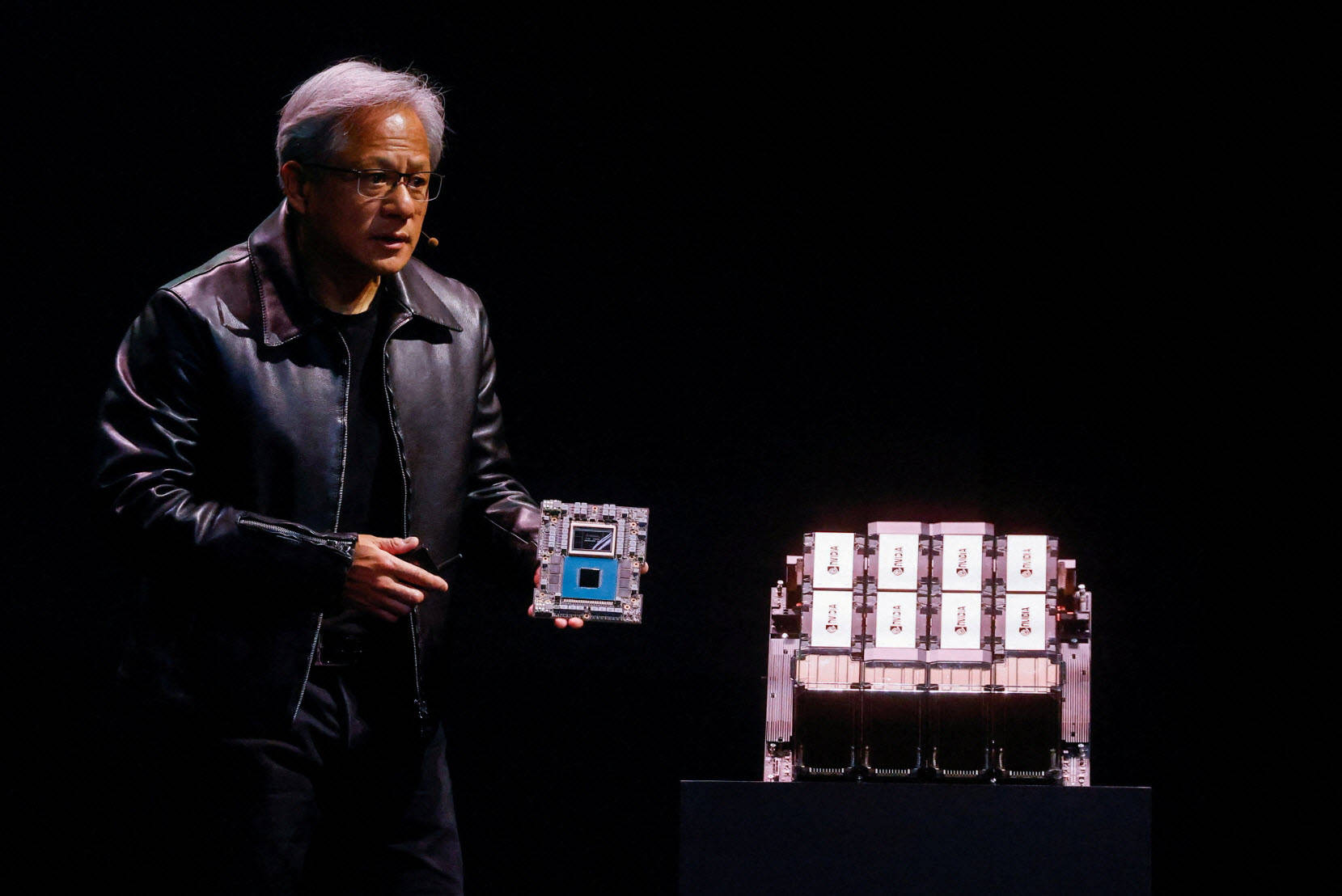

The growing demand for generative AI computing has sent the stock price of NVIDIA, a major GPU manufacturer, to a new high. In mid-August, NVIDIA CEO Jensen Huang once again promoted the DGX GH200 at an exhibition. It is a new generation of generative AI supercomputer built with Grace Hopper accelerated computing chips, and it is NVIDIA's first exaflop-class DGX supercomputer.

Outperforming the H100 by 17%

According to benchmark results released on September 11, NVIDIA announced that its GH200, H100 and L4 GPUs, along with its Jetson Orin products, achieved leading performance.

This was the GH200's first submission as a single chip. Compared with a single H100 paired with an Intel CPU, the GH200 combination improved performance by at least 15% in every test. Dave Salvator, director of artificial intelligence at NVIDIA, said at a press conference: "Grace Hopper performed very strongly in its first appearance, improving performance by 17% compared to our submitted H100 GPU, and we are already ahead across the board."
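Percentages such as "at least 15% in every test" and "17%" are relative gains in measured throughput. The sketch below shows that arithmetic under the assumption that higher throughput is better; the benchmark names and throughput figures are hypothetical placeholders, not actual MLPerf results.

```python
def relative_improvement(new_throughput: float, baseline_throughput: float) -> float:
    """Percentage gain of new over baseline, where higher throughput is better."""
    if baseline_throughput <= 0:
        raise ValueError("baseline throughput must be positive")
    return (new_throughput / baseline_throughput - 1.0) * 100.0


# Hypothetical (baseline, new) throughputs in samples/s; NOT real MLPerf numbers.
results = {
    "benchmark_a": (1000.0, 1170.0),
    "benchmark_b": (500.0, 580.0),
}

# Per-benchmark gain, then check that every test clears a 15% threshold.
gains = {name: relative_improvement(new, base) for name, (base, new) in results.items()}
for name, gain in gains.items():
    print(f"{name}: {gain:.1f}%")
print("at least 15% in every test:", min(gains.values()) >= 15.0)
```

A claim of "at least X% in every test" is a statement about the minimum of the per-test gains, which is why the check uses `min` rather than an average.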

What is the GH200?

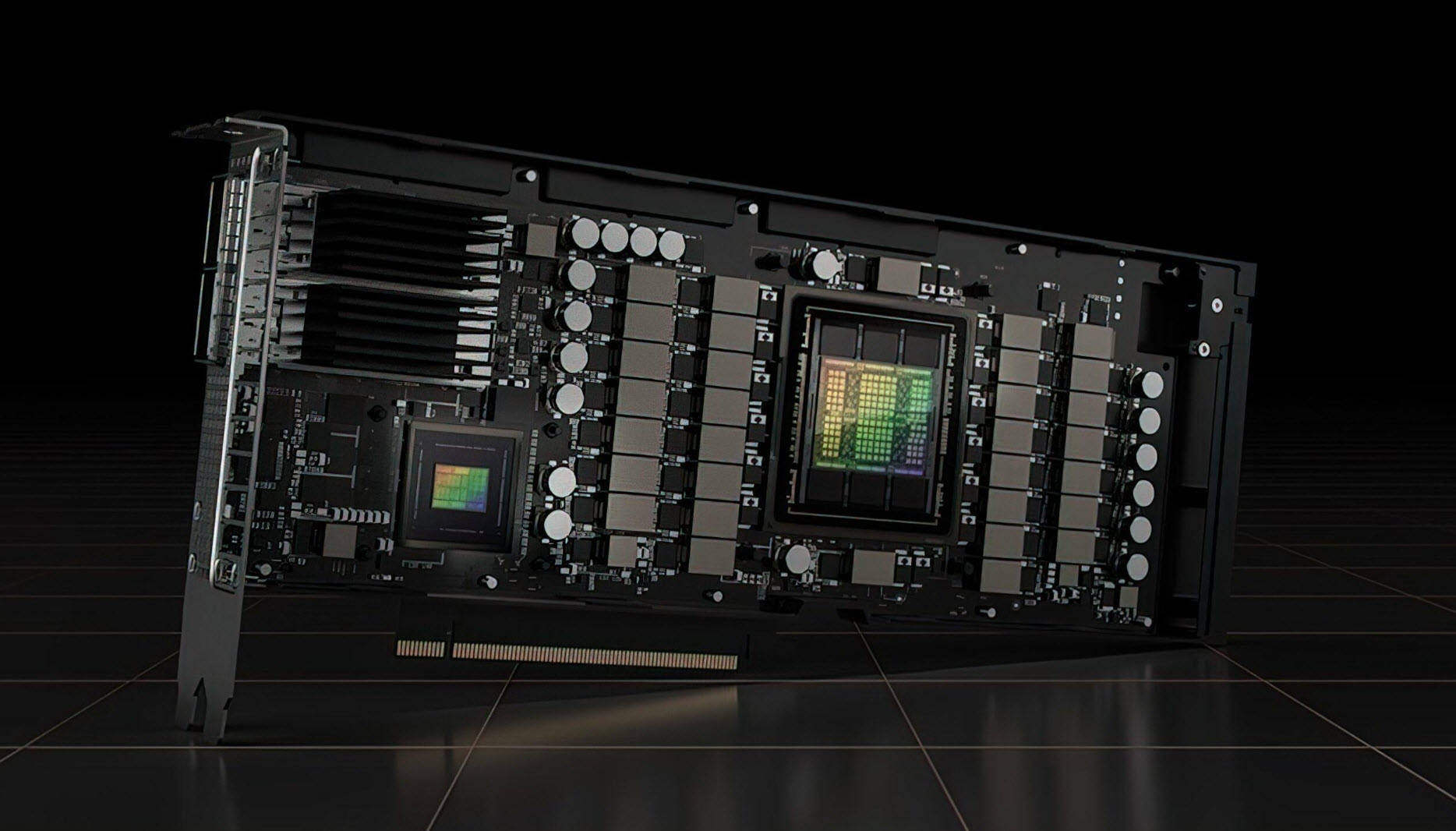

Official description: The NVIDIA GH200 is the first superchip to integrate an H100 GPU and a Grace CPU. The two are interconnected through NVLink-C2C with a bandwidth of up to 900 GB/s, which improves scalability and enables deep learning training at very high speed.
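To give the 900 GB/s figure a sense of scale, the sketch below computes idealized transfer times for a hypothetical 80 GB payload. The comparison link is an assumption: roughly 128 GB/s is used as an approximate bidirectional bandwidth for a PCIe Gen5 x16 connection, and the model ignores latency and protocol overhead, so treat the results as rough orders of magnitude only.

```python
NVLINK_C2C_GBPS = 900.0     # GB/s, total bandwidth stated for the GH200's NVLink-C2C
PCIE_GEN5_X16_GBPS = 128.0  # GB/s, ASSUMED approximate bidirectional PCIe Gen5 x16 bandwidth


def transfer_seconds(size_gb: float, bandwidth_gbps: float) -> float:
    """Idealized transfer time: size divided by bandwidth (no latency or overhead)."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gbps


payload_gb = 80.0  # hypothetical payload, e.g. a large model's weights
nvlink_s = transfer_seconds(payload_gb, NVLINK_C2C_GBPS)
pcie_s = transfer_seconds(payload_gb, PCIE_GEN5_X16_GBPS)

print(f"NVLink-C2C:    {nvlink_s:.3f} s")
print(f"PCIe Gen5 x16: {pcie_s:.3f} s")
print(f"bandwidth ratio: {NVLINK_C2C_GBPS / PCIE_GEN5_X16_GBPS:.1f}x")
```

Under this assumed baseline the ratio comes out at about 7x, which is one plausible reading of the "bandwidth increased by 7 times" feature; the article itself does not name the baseline.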

NVIDIA stated that GH200 has three major features:

- Huge memory for large models: provides developers with more than 500 times the memory for building large models.

- Super energy-efficient computing: bandwidth increased by 7 times, and interconnect power consumption reduced by more than 5 times.

- Integrated and ready to run: large models can be built in a few weeks.

Many submitters improved performance by more than 20% over the previous round

During COMPUTEX in May, NVIDIA CEO Jensen Huang said that the GH200 chip is scheduled for mass production in the second quarter of 2024. The MLPerf v3.1 benchmark round recorded more than 13,500 results, and many submitters improved performance by more than 20% compared to the v3.0 round.

MLPerf is a benchmark suite that customers commonly use to evaluate the performance of AI chips.

Submitters include ASUS, Azure, Connect Tech, Dell, Fujitsu, Giga Computing, Google, H3C, HPE, IEI, Intel, Intel Habana Labs, Nutanix, Oracle, Qualcomm, Quanta Cloud Technology, SiMa, Supermicro, TTA and xFusion, among others.

The above is the detailed content of Amazing running scores revealed, better than H100! What is NVIDIA's most powerful AI chip GH200?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

How to solve the problem that the NVIDIA graphics card screen recording shortcut key cannot be used?

Mar 13, 2024 pm 03:52 PM

How to solve the problem that the NVIDIA graphics card screen recording shortcut key cannot be used?

Mar 13, 2024 pm 03:52 PM

NVIDIA graphics cards have their own screen recording function. Users can directly use shortcut keys to record the desktop or game screen. However, some users reported that the shortcut keys cannot be used. So what is going on? Now, let this site give users a detailed introduction to the problem of the N-card screen recording shortcut key not responding. Analysis of the problem of NVIDIA screen recording shortcut key not responding Method 1, automatic recording 1. Automatic recording and instant replay mode. Players can regard it as automatic recording mode. First, open NVIDIA GeForce Experience. 2. After calling out the software menu with the Alt+Z key, click the Open button under Instant Replay to start recording, or use the Alt+Shift+F10 shortcut key to start recording.

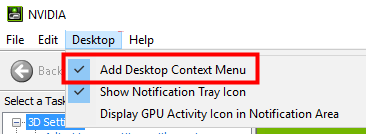

How to solve the problem of nvidia control panel when right-clicking in Win11?

Feb 20, 2024 am 10:20 AM

How to solve the problem of nvidia control panel when right-clicking in Win11?

Feb 20, 2024 am 10:20 AM

How to solve the problem of nvidia control panel when right-clicking in Win11? Many users often need to open the nvidia control panel when using their computers, but many users find that they cannot find the nvidia control panel. So what should they do? Let this site carefully introduce to users the solution to the problem that there is no nvidia control panel in Win11 right-click. Solution to Win11 right-click not having nvidia control panel 1. Make sure it is not hidden. Press Windows+R on the keyboard to open a new run box and enter control. In the upper right corner, under View by: select Large icons. Open the NVIDIA Control Panel and hover over the desktop options to view

Cyberpunk 2077 sees up to a 40% performance boost with new optimized path tracing mod

Aug 10, 2024 pm 09:45 PM

Cyberpunk 2077 sees up to a 40% performance boost with new optimized path tracing mod

Aug 10, 2024 pm 09:45 PM

One of the standout features ofCyberpunk 2077is path tracing, but it can put a heavy toll on performance. Even systems with reasonably capable graphics cards, such as the RTX 4080 (Gigabyte AERO OC curr. $949.99 on Amazon), struggle to offer a stable

New title: NVIDIA H200 released: HBM capacity increased by 76%, the most powerful AI chip that significantly improves large model performance by 90%

Nov 14, 2023 pm 03:21 PM

New title: NVIDIA H200 released: HBM capacity increased by 76%, the most powerful AI chip that significantly improves large model performance by 90%

Nov 14, 2023 pm 03:21 PM

According to news on November 14, Nvidia officially released the new H200 GPU at the "Supercomputing23" conference on the morning of the 13th local time, and updated the GH200 product line. Among them, the H200 is still built on the existing Hopper H100 architecture. However, more high-bandwidth memory (HBM3e) has been added to better handle the large data sets required to develop and implement artificial intelligence, making the overall performance of running large models improved by 60% to 90% compared to the previous generation H100. The updated GH200 will also power the next generation of AI supercomputers. In 2024, more than 200 exaflops of AI computing power will be online. H200

Exclusive version for mainland China, Hong Kong and Macau markets: NVIDIA will soon release RTX 4090D graphics card

Dec 01, 2023 am 11:34 AM

Exclusive version for mainland China, Hong Kong and Macau markets: NVIDIA will soon release RTX 4090D graphics card

Dec 01, 2023 am 11:34 AM

On November 16, NVIDIA is actively developing a new version of the graphics card RTX4090D designed specifically for mainland China, Hong Kong and Macao to cope with local production and sales bans. This special edition graphics card will bring a range of unique features and design tweaks to suit the specific needs and regulations of local markets. This graphics card means 2024, the Chinese Year of the Dragon, so "D" is added to the name, which stands for "Dragon." According to industry sources, this RTX4090D will use a different GPU core from the original RTX4090, numbered AD102-250. This number appears numerically lower compared to the AD102-300/301 on the RTX4090, indicating possible performance degradation. According to N.V.

AMD Radeon RX 7800M in OneXGPU 2 outperforms Nvidia RTX 4070 Laptop GPU

Sep 09, 2024 am 06:35 AM

AMD Radeon RX 7800M in OneXGPU 2 outperforms Nvidia RTX 4070 Laptop GPU

Sep 09, 2024 am 06:35 AM

OneXGPU 2 is the first eGPUto feature the Radeon RX 7800M, a GPU that even AMD hasn't announced yet. As revealed by One-Netbook, the manufacturer of the external graphics card solution, the new AMD GPU is based on RDNA 3 architecture and has the Navi

Detailed explanation of what to do if NVIDIA graphics card driver installation fails

Mar 14, 2024 am 08:43 AM

Detailed explanation of what to do if NVIDIA graphics card driver installation fails

Mar 14, 2024 am 08:43 AM

NVIDIA is currently the most popular graphics card manufacturer, and many users prefer to install NVIDIA graphics cards on their computers. However, you will inevitably encounter some problems during use, such as NVIDIA driver installation failure. How to solve this? There are many reasons for this situation. Let’s take a look at the specific solutions. Step 1: Download the latest graphics card driver You need to go to the NVIDIA official website to download the latest driver for your graphics card. Once on the driver page, select your product type, product series, product family, operating system, download type and language. After clicking search, the website will automatically query the driver version suitable for you. With GeForceRTX4090

How to solve unable to connect to nvidia

Dec 06, 2023 pm 03:18 PM

How to solve unable to connect to nvidia

Dec 06, 2023 pm 03:18 PM

Solutions for being unable to connect to nvidia: 1. Check the network connection; 2. Check the firewall settings; 3. Check the proxy settings; 4. Use other network connections; 5. Check the NVIDIA server status; 6. Update the driver; 7. Restart Start NVIDIA's network service. Detailed introduction: 1. Check the network connection to ensure that the computer is connected to the Internet normally. You can try to restart the router or adjust the network settings to ensure that you can connect to the NVIDIA service; 2. Check the firewall settings, the firewall may block the computer, etc.