Template Technology in PHP

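Templates are very useful when many people develop a large PHP project together. They separate the work of designers from the work of programmers, and they make the interface easy to change and refine. Templates also give us a simple, effective way to customize or modify a site. Below we use the PHPLIB template class as an example to show how templates are used in PHP.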

How do you use a PHPLIB template?

Suppose we have a template named UserTemp (saved as UserTemp.ihtml) in the directory /home/user_dir/user_temp/, with the following content:

You ordered: {Product}

The braces mark Product as a template variable.

Then we write the following program:

<?php
include "template.inc";
$user_product = "Walkman";
$tmp = new Template("/home/user_dir/user_temp/"); // create a template object named $tmp
$tmp->set_file("FileHandle","UserTemp.ihtml"); // set the handle FileHandle = the template file
$tmp->set_var("Product",$user_product); // set the template variable Product = $user_product
$tmp->parse("Output","FileHandle"); // parse FileHandle and store the result in the template variable Output
$tmp->p("Output"); // print the value of Output (our parsed data)

?>

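template.inc is the PHPLIB file that defines the template class; we include it so that we can use PHPLIB's template features. PHPLIB templates have an object-oriented design, so we create a template object with $tmp = new Template("/home/user_dir/user_temp/"). The argument is a path ("/home/user_dir/user_temp/") that tells the object where the template files are located; by default, the directory containing the PHP script is used.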

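set_file() defines the handle "FileHandle", which points to UserTemp.ihtml (PHPLIB template files conventionally use the .ihtml extension). set_var() sets the template variable Product to the value of $user_product (that is, "Walkman"). parse() loads FileHandle (that is, UserTemp.ihtml), replaces every "{Product}" in the template with the value of $user_product ("Walkman"), and stores the result in the template variable "Output", which p() then prints.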
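To make these steps concrete without needing template.inc, here is a small self-contained sketch of the same flow: a root directory, a handle that points at a file, a variable, parse() and p(). The class MiniTemplate and the temporary directory are hypothetical; the method names mirror PHPLIB's, but the bodies are simplified assumptions, not PHPLIB's actual implementation.

```php
<?php
// Hypothetical sketch of the basic flow: root directory, handles, variables, parse, print.
// It only imitates the idea of PHPLIB's Template class; it is not PHPLIB's code.
class MiniTemplate
{
    private string $root;
    private array $files = [];  // handle => path of the template file
    private array $vars = [];   // variable name => value

    public function __construct(string $root = ".")
    {
        // Drop a trailing slash so the root and file name are joined with exactly one "/".
        $this->root = rtrim($root, "/");
    }

    // Remember which file a handle refers to; the file is only read later, in parse().
    public function set_file(string $handle, string $filename): void
    {
        $this->files[$handle] = $this->root . "/" . $filename;
    }

    public function set_var(string $name, string $value): void
    {
        $this->vars[$name] = $value;
    }

    // Load the file behind $handle, replace each {Name}, and store the result in $target.
    public function parse(string $target, string $handle): string
    {
        $text = file_get_contents($this->files[$handle]);
        $pairs = [];
        foreach ($this->vars as $name => $value) {
            $pairs["{" . $name . "}"] = $value;
        }
        return $this->vars[$target] = strtr($text, $pairs);
    }

    // Print the current value of a variable.
    public function p(string $name): void
    {
        echo $this->vars[$name];
    }
}

// Usage: write a throwaway UserTemp.ihtml into the temp directory (an assumption for the demo).
$dir = sys_get_temp_dir() . "/user_temp_demo";
if (!is_dir($dir)) {
    mkdir($dir);
}
file_put_contents("$dir/UserTemp.ihtml", "You ordered: {Product}");

$tmp = new MiniTemplate($dir . "/");
$tmp->set_file("FileHandle", "UserTemp.ihtml");
$tmp->set_var("Product", "Walkman");
$tmp->parse("Output", "FileHandle");
$tmp->p("Output"); // prints: You ordered: Walkman
```

Using strtr() with an array replaces all placeholders in a single pass, so text that has already been substituted is not scanned again.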

How do you nest templates?

In the example above, "Output", the target that parse() writes to, is itself a template variable. We can use this to nest templates.

For example, suppose we have another template (call it UserTemp2) with the following content:

Welcome, dear friend! {Output}

After parsing, the output will be:

Welcome, dear friend! You ordered: Walkman
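Nesting works because a parse result is stored under a variable name just like any other value. The sketch below models this with a plain array; parse_into() is a hypothetical helper, not a PHPLIB function.

```php
<?php
// Hypothetical helper: render $template with $vars and store the result in $vars[$target].
function parse_into(array &$vars, string $target, string $template): void
{
    $pairs = [];
    foreach ($vars as $name => $value) {
        $pairs["{" . $name . "}"] = (string) $value;
    }
    $vars[$target] = strtr($template, $pairs);
}

$vars = ["Product" => "Walkman"];
parse_into($vars, "Output", "You ordered: {Product}");
// The second template contains {Output}, which at this point holds the first result.
parse_into($vars, "Output", "Welcome, dear friend! {Output}");
echo $vars["Output"], "\n"; // Welcome, dear friend! You ordered: Walkman
```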

Here is the updated program:

<?php
include "template.inc";
$user_product = "Walkman";
$tmp = new Template("/home/user_dir/user_temp/");
$tmp->set_file("FileHandle","UserTemp.ihtml");
$tmp->set_var("Product",$user_product);
$tmp->parse("Output","FileHandle");
$tmp->set_file("FileHandle2","UserTemp2.ihtml"); // set up a handle for the second template
$tmp->parse("Output","FileHandle2"); // parse the second template; its {Output} receives the first result
$tmp->p("Output");

?>

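This is simple enough that we won't explain it in detail. One tip: a call to parse() followed by p() can be combined into a single call to pparse(), for example $tmp->pparse("Output","FileHandle2").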
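The relationship between pparse(), parse() and p() can be sketched with a minimal class. MiniTemplate and its set_template() method (which keeps template text in memory instead of reading a file) are hypothetical; only the method names parse(), p() and pparse() follow PHPLIB.

```php
<?php
// Hypothetical minimal class showing that pparse() is parse() followed by p().
// Templates are kept in memory via the made-up set_template() to stay self-contained.
class MiniTemplate
{
    private array $templates = [];  // handle => template text
    private array $vars = [];       // variable name => value

    public function set_template(string $handle, string $text): void
    {
        $this->templates[$handle] = $text;
    }

    public function set_var(string $name, string $value): void
    {
        $this->vars[$name] = $value;
    }

    // Replace each {Name} in the template and store the result in $target.
    public function parse(string $target, string $handle): string
    {
        $pairs = [];
        foreach ($this->vars as $name => $value) {
            $pairs["{" . $name . "}"] = $value;
        }
        return $this->vars[$target] = strtr($this->templates[$handle], $pairs);
    }

    public function p(string $name): void
    {
        echo $this->vars[$name];
    }

    // Parse and print in one call.
    public function pparse(string $target, string $handle): void
    {
        $this->parse($target, $handle);
        $this->p($target);
    }
}

$tmp = new MiniTemplate();
$tmp->set_template("FileHandle", "You ordered: {Product}");
$tmp->set_var("Product", "Walkman");
$tmp->pparse("Output", "FileHandle"); // prints: You ordered: Walkman
```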

How can a PHPLIB template accept several values at once?

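The arguments to set_file() and set_var() can also be associative arrays, so several values can be set in one call. For set_file(), the handles are the array keys and the template files are the values; for set_var(), the variable names are the keys and their contents are the values. For example: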

……

$tmp->set_file(array("FileHandle"=>"UserTemp.ihtml","FileHandle2"=>"UserTemp2.ihtml"));
$tmp->set_var(array("Product"=>"Walkman","Product2"=>"TV"));

……

?>
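A setter that accepts either a single name/value pair or an associative array only needs to check the type of its first argument. mini_set_var() below is a hypothetical illustration of that calling convention, not PHPLIB's source.

```php
<?php
// Hypothetical setter accepting either mini_set_var($vars, "Name", "value")
// or mini_set_var($vars, ["Name" => "value", ...]), in the spirit of set_var().
function mini_set_var(array &$vars, $nameOrPairs, string $value = ""): void
{
    if (is_array($nameOrPairs)) {
        // Associative array: each key is a variable name, each value its content.
        foreach ($nameOrPairs as $name => $v) {
            $vars[$name] = (string) $v;
        }
        return;
    }
    // Single pair: the first argument is the name, the second the value.
    $vars[(string) $nameOrPairs] = $value;
}

$vars = [];
mini_set_var($vars, ["Product" => "Walkman", "Product2" => "TV"]);
mini_set_var($vars, "Product3", "Radio");
print_r($vars);
```

The same type check works for a set_file()-style setter, with handles as keys and file names as values.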

How do you append data to a template variable?

Pass a third argument (a boolean) to parse() or pparse() to append data to the template variable instead of overwriting it:

……

$tmp->pparse("Output","FileHandle",true);

……

?>

With true as the third argument, the parsed content of FileHandle is appended to the current value of Output instead of replacing it.
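The third argument only changes how the result is stored: without it the target variable is overwritten, and with true the new text is added to the end of whatever the target already holds. The sketch below models this with a hypothetical parse_into() whose $append parameter plays that role; its output also shows the repeated prefix that the block mechanism is meant to avoid.

```php
<?php
// Hypothetical parse with an append flag, modelled on the third argument of parse()/pparse().
function parse_into(array &$vars, string $target, string $template, bool $append = false): void
{
    $pairs = [];
    foreach ($vars as $name => $value) {
        $pairs["{" . $name . "}"] = (string) $value;
    }
    $result = strtr($template, $pairs);
    // Overwrite by default; concatenate onto the existing value when $append is true.
    $vars[$target] = ($append && isset($vars[$target])) ? $vars[$target] . $result : $result;
}

$vars = ["Product" => "Walkman"];
parse_into($vars, "Output", "You ordered: {Product}\n");
$vars["Product"] = "TV";
parse_into($vars, "Output", "You ordered: {Product}\n", true);
echo $vars["Output"];
// You ordered: Walkman
// You ordered: TV
```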

Why use the block mechanism?

Suppose we want to display:

You ordered: Walkman TV ...

If we simply append with the method above, the output may look like this instead:

You ordered: Walkman You ordered: TV You ordered: ...

That is clearly not what we want. How can we solve this cleanly? This is where the block mechanism comes in.

Modify the template file UserTemp.ihtml as follows:

You ordered:
<!-- BEGIN Product_List -->
{Product}
<!-- END Product_List -->

This defines a block named "Product_List".
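Conceptually, set_block() cuts the text between the BEGIN and END comments out of the parent template, keeps it as a separate template under the block name, and leaves a placeholder such as {Product_Lists} in its place. extract_block() below is a hypothetical helper that reproduces this idea with a regular expression; the exact pattern PHPLIB uses may differ.

```php
<?php
// Hypothetical helper imitating the idea of set_block($parent, $block, $placeholder).
// Returns [parent text with the block replaced by {placeholder}, block contents].
function extract_block(string $parent, string $block, string $placeholder): array
{
    $name = preg_quote($block, "/");
    $pattern = '/<!--\s+BEGIN ' . $name . '\s+-->(.*)<!--\s+END ' . $name . '\s+-->/s';
    if (!preg_match($pattern, $parent, $m)) {
        throw new InvalidArgumentException("Block $block not found");
    }
    // $m[0] is the whole block including the comments; $m[1] is only its contents.
    return [str_replace($m[0], "{" . $placeholder . "}", $parent), $m[1]];
}

$template = "You ordered:\n<!-- BEGIN Product_List -->\n{Product}\n<!-- END Product_List -->\n";
[$parent, $body] = extract_block($template, "Product_List", "Product_Lists");
echo $parent; // prints "You ordered:" and then "{Product_Lists}" on the next line
```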

The corresponding program is:

<?php
include "template.inc";
$tmp = new Template("/home/user_dir/user_temp/");
$tmp->set_file("FileHandle","UserTemp.ihtml");
$tmp->set_block("FileHandle","Product_List","Product_Lists");
// replace the block in the file with {Product_Lists}; the block's contents are kept under the name Product_List
$tmp->set_var("Product","Walkman");
$tmp->parse("Product_Lists","Product_List",true);
$tmp->set_var("Product","TV");
$tmp->parse("Product_Lists","Product_List",true);
// in real code, do this with an array and a loop
$tmp->parse("Output","FileHandle");
$tmp->p("Output");

?>

Now the output is exactly what we wanted.
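As the comment in the program suggests, in practice the block is filled from an array in a loop. The self-contained sketch below puts the pieces together: it cuts out the block, renders it once per product while appending, and then renders the parent. render() and the inline template are hypothetical (the template is written on one line to keep the output compact); with PHPLIB the same sequence would be set_block(), then set_var() and parse(..., true) inside the loop, then parse() and p().

```php
<?php
// Hypothetical end-to-end sketch of the block mechanism driven by an array.
function render(string $template, array $vars): string
{
    $pairs = [];
    foreach ($vars as $name => $value) {
        $pairs["{" . $name . "}"] = (string) $value;
    }
    return strtr($template, $pairs);
}

$template = "You ordered:<!-- BEGIN Product_List --> {Product}<!-- END Product_List -->";

// Step 1 (like set_block): cut the block out and leave {Product_Lists} in its place.
$pattern = '/<!--\s+BEGIN Product_List\s+-->(.*)<!--\s+END Product_List\s+-->/s';
preg_match($pattern, $template, $m);
$block = $m[1];
$parent = str_replace($m[0], "{Product_Lists}", $template);

// Step 2 (like set_var + parse(..., true)): render the block once per item and append.
$vars = ["Product_Lists" => ""];
foreach (["Walkman", "TV"] as $product) {
    $vars["Product"] = $product;
    $vars["Product_Lists"] .= render($block, $vars);
}

// Step 3 (like parse("Output", "FileHandle")): render the parent with the collected list.
$output = render($parent, $vars);
echo $output, "\n"; // You ordered: Walkman TV
```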

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

How to add PPT mask

Mar 20, 2024 pm 12:28 PM

How to add PPT mask

Mar 20, 2024 pm 12:28 PM

Regarding PPT masking, many people must be unfamiliar with it. Most people do not understand it thoroughly when making PPT, but just make it up to make what they like. Therefore, many people do not know what PPT masking means, nor do they understand it. I know what this mask does, and I don’t even know that it can make the picture less monotonous. Friends who want to learn, come and learn, and add some PPT masks to your PPT pictures. Make it less monotonous. So, how to add a PPT mask? Please read below. 1. First we open PPT, select a blank picture, then right-click [Set Background Format] and select a solid color. 2. Click [Insert], word art, enter the word 3. Click [Insert], click [Shape]

Effects of C++ template specialization on function overloading and overriding

Apr 20, 2024 am 09:09 AM

Effects of C++ template specialization on function overloading and overriding

Apr 20, 2024 am 09:09 AM

C++ template specializations affect function overloading and rewriting: Function overloading: Specialized versions can provide different implementations of a specific type, thus affecting the functions the compiler chooses to call. Function overriding: The specialized version in the derived class will override the template function in the base class, affecting the behavior of the derived class object when calling the function.

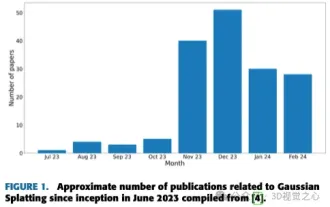

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

Written above & The author’s personal understanding is that image-based 3D reconstruction is a challenging task that involves inferring the 3D shape of an object or scene from a set of input images. Learning-based methods have attracted attention for their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art 3D reconstruction techniques, including generating novel, unseen views. An overview of recent developments in Gaussian splash methods is provided, including input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this field and the numerous opportunities to enhance 3D reconstruction methods, a thorough examination of the algorithm seems crucial. Therefore, this study provides a comprehensive overview of recent advances in Gaussian scattering. (Swipe your thumb up

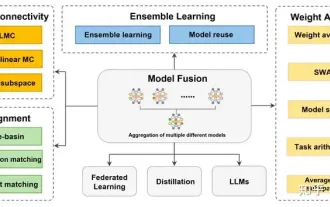

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion

Revolutionary GPT-4o: Reshaping the human-computer interaction experience

Jun 07, 2024 pm 09:02 PM

Revolutionary GPT-4o: Reshaping the human-computer interaction experience

Jun 07, 2024 pm 09:02 PM

The GPT-4o model released by OpenAI is undoubtedly a huge breakthrough, especially in its ability to process multiple input media (text, audio, images) and generate corresponding output. This ability makes human-computer interaction more natural and intuitive, greatly improving the practicality and usability of AI. Several key highlights of GPT-4o include: high scalability, multimedia input and output, further improvements in natural language understanding capabilities, etc. 1. Cross-media input/output: GPT-4o+ can accept any combination of text, audio, and images as input and directly generate output from these media. This breaks the limitation of traditional AI models that only process a single input type, making human-computer interaction more flexible and diverse. This innovation helps power smart assistants