Noise suppression issues in image enhancement technology

Image enhancement is an important technology in digital image processing, which aims to improve the quality and details of images. However, in practical applications, images may be contaminated by various types of noise, such as Gaussian noise, salt-and-pepper noise, and speckle noise. These noises can reduce the visual effect and readability of images, so noise suppression is a key task in image enhancement.

The noise suppression problem in image enhancement technology can be solved through some effective methods. This article will introduce some common noise suppression techniques and provide corresponding code examples.

- Mean filter

Mean filter is a simple and commonly used noise suppression method. It is based on a fixed-size sliding window, calculates the average gray value of pixels within the sliding window, and uses this value as the filtered pixel value. The following is an example of a mean filter function based on Python:

import numpy as np

import cv2

def mean_filter(img, kernel_size):

width, height = img.shape[:2]

output = np.zeros_like(img)

pad = kernel_size // 2

img_pad = cv2.copyMakeBorder(img, pad, pad, pad, pad, cv2.BORDER_REFLECT)

for i in range(pad, width + pad):

for j in range(pad, height + pad):

output[i - pad, j - pad] = np.mean(img_pad[i - pad:i + pad + 1, j - pad:j + pad + 1])

return output

# 调用示例

image = cv2.imread('input.jpg', 0)

output = mean_filter(image, 3)

cv2.imwrite('output.jpg', output)- Median filter

Median filter is a nonlinear noise suppression method based on a fixed-size sliding Window, calculate the median value of pixels within the sliding window, and use this value as the filtered pixel value. The following is an example of a median filter function based on Python:

import numpy as np

import cv2

def median_filter(img, kernel_size):

width, height = img.shape[:2]

output = np.zeros_like(img)

pad = kernel_size // 2

img_pad = cv2.copyMakeBorder(img, pad, pad, pad, pad, cv2.BORDER_REFLECT)

for i in range(pad, width + pad):

for j in range(pad, height + pad):

output[i - pad, j - pad] = np.median(img_pad[i - pad:i + pad + 1, j - pad:j + pad + 1])

return output

# 调用示例

image = cv2.imread('input.jpg', 0)

output = median_filter(image, 3)

cv2.imwrite('output.jpg', output)- Bilateral filtering

Bilateral filtering is a filtering method that suppresses noise while maintaining image edge details. It calculates filter coefficients based on the spatial distance and gray value similarity of pixels, thereby suppressing noise while maintaining edge sharpness. The following is an example of a bilateral filtering function based on Python:

import numpy as np

import cv2

def bilateral_filter(img, sigma_spatial, sigma_range):

output = cv2.bilateralFilter(img, -1, sigma_spatial, sigma_range)

return output

# 调用示例

image = cv2.imread('input.jpg', 0)

output = bilateral_filter(image, 5, 50)

cv2.imwrite('output.jpg', output)Through the above example code, it can be seen that mean filtering, median filtering and bilateral filtering are all commonly used for noise suppression in image enhancement techniques. method. According to the actual situation and needs of the image, choosing the appropriate technology and parameters can effectively improve the quality and details of the image.

However, it should be noted that the selection and parameter settings of noise suppression methods are not static. Different types of noise and different images may require different processing methods. Therefore, in practical applications, it is very important to select appropriate noise suppression methods and parameters according to the characteristics and needs of the image.

The above is the detailed content of Noise suppression issues in image enhancement technology. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

The first pilot and key article mainly introduces several commonly used coordinate systems in autonomous driving technology, and how to complete the correlation and conversion between them, and finally build a unified environment model. The focus here is to understand the conversion from vehicle to camera rigid body (external parameters), camera to image conversion (internal parameters), and image to pixel unit conversion. The conversion from 3D to 2D will have corresponding distortion, translation, etc. Key points: The vehicle coordinate system and the camera body coordinate system need to be rewritten: the plane coordinate system and the pixel coordinate system. Difficulty: image distortion must be considered. Both de-distortion and distortion addition are compensated on the image plane. 2. Introduction There are four vision systems in total. Coordinate system: pixel plane coordinate system (u, v), image coordinate system (x, y), camera coordinate system () and world coordinate system (). There is a relationship between each coordinate system,

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

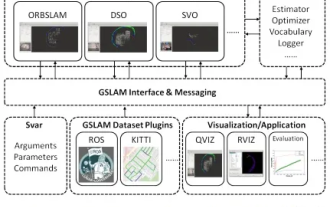

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

Suddenly discovered a 19-year-old paper GSLAM: A General SLAM Framework and Benchmark open source code: https://github.com/zdzhaoyong/GSLAM Go directly to the full text and feel the quality of this work ~ 1 Abstract SLAM technology has achieved many successes recently and attracted many attracted the attention of high-tech companies. However, how to effectively perform benchmarks on speed, robustness, and portability with interfaces to existing or emerging algorithms remains a problem. In this paper, a new SLAM platform called GSLAM is proposed, which not only provides evaluation capabilities but also provides researchers with a useful way to quickly develop their own SLAM systems.

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,

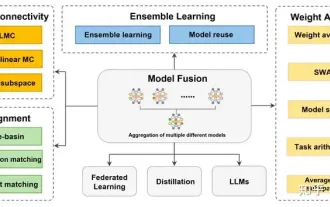

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion