The world model shines! The realism of these 20+ simulated autonomous driving scenarios is incredible...

Do you think this is an ordinary boring self-driving video?

Not a single frame is "real".

Different road conditions, varied weather, more than 20 scenarios in all can be simulated, and the result looks just like real footage.

The world model once again shows its power! This time, LeCun excitedly retweeted it after seeing it.

These results come from the latest version of GAIA-1.

It has 9 billion parameters and was trained on 4,700 hours of driving video. Given video, text or action inputs, it generates autonomous driving videos.

The most direct benefit is that it can better predict future events. It can simulate more than 20 scenarios, which further improves the safety of autonomous driving and reduces costs.

Its creators say this will change the rules of the autonomous driving game!

How is GAIA-1 implemented? We previously covered the GAIA-1 developed by the Wayve team in detail in Autonomous Driving Daily, in the article "A generative world model for autonomous driving". If you are interested, you can read the related content on our official account.

The bigger the scale, the better the effect

GAIA-1 is a multi-modal generative world model: it understands and generates representations of the world by integrating several input modalities. The model uses deep learning to learn and reason about the structure and regularities of the world from a large amount of data. With continued training and optimization, it is expected to keep improving and become a more capable and comprehensive world model.

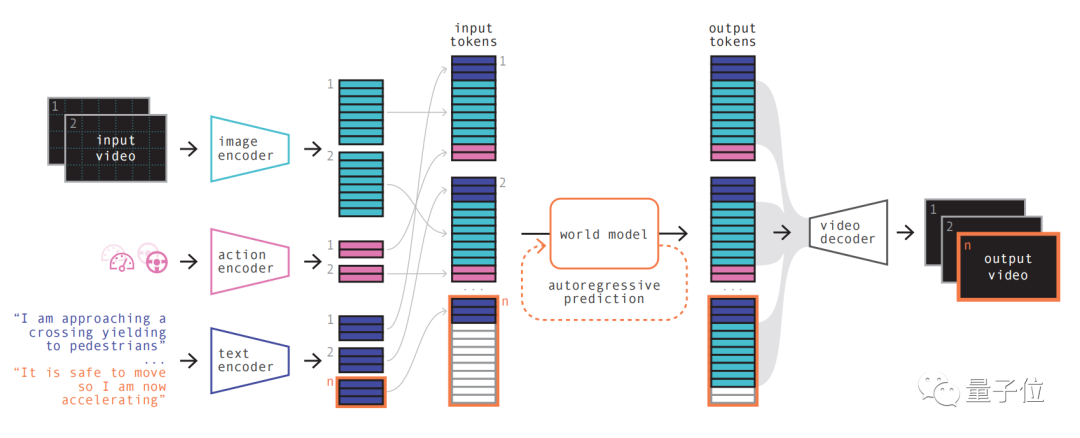

It takes video, text and actions as input and generates realistic driving-scene videos, while allowing fine-grained control over both the behavior of the self-driving vehicle and the features of the scene.

Videos can also be generated from text prompts alone.

Its principle is similar to that of large language models: predicting the next token.

The model discretizes video frames using vector quantization, which turns predicting future scenes into predicting the next token in a sequence. A diffusion model is then used to generate high-quality video from the world model's token space.
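To make the discretization step concrete, here is a minimal sketch of vector quantization in plain Python. It is only an illustration: the two-dimensional `CODEBOOK`, the `frame_patches` values and the function names are made up for this example and are not taken from GAIA-1, whose tokenizer is a learned neural network.

```python
# Toy vector quantization: each patch embedding is replaced by the index of
# its nearest codebook vector. All values here are hypothetical.

def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def quantize(embeddings, codebook):
    """Return one discrete token id per embedding (nearest codebook entry)."""
    tokens = []
    for emb in embeddings:
        distances = [squared_distance(emb, code) for code in codebook]
        tokens.append(distances.index(min(distances)))
    return tokens

def dequantize(tokens, codebook):
    """Map token ids back to their codebook vectors (a lossy reconstruction)."""
    return [codebook[t] for t in tokens]

# Hypothetical 4-entry codebook and four patch embeddings from one frame.
CODEBOOK = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
frame_patches = [[0.1, -0.1], [0.9, 0.2], [0.2, 0.8], [0.95, 1.1]]

frame_tokens = quantize(frame_patches, CODEBOOK)
print(frame_tokens)
```

Once every frame is a short list of integer ids like this, a video becomes a token sequence, and forecasting the future can be framed as next-token prediction.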

The specific steps are as follows:

The first step is easy to understand: encode the various inputs, then arrange and combine them.

Specialized encoders encode each type of input and project it into a shared representation. The text and video encoders discretize and embed their inputs, while the action representations are each projected individually into the shared representation.

These encoded representations are temporally aligned.
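The arrangement step can be sketched as interleaving the per-modality tokens by time step. This is a simplified illustration, not Wayve's code: the `interleave` helper, the text-image-action ordering within a step, and the token values are assumptions made for the example, and the learned projection into the shared representation is left out entirely.

```python
# Toy temporal alignment: build one flat sequence in which the text, image and
# action entries of time step t all come before anything from step t + 1.
# The per-step ordering (text, image, action) is an assumption of this sketch.

def interleave(text_tokens, image_tokens, action_tokens):
    """Each argument holds one list of tokens per time step."""
    if not (len(text_tokens) == len(image_tokens) == len(action_tokens)):
        raise ValueError("every modality needs one entry per time step")
    sequence = []
    steps = zip(text_tokens, image_tokens, action_tokens)
    for t, (text, image, action) in enumerate(steps):
        sequence += [("text", t, tok) for tok in text]
        sequence += [("image", t, tok) for tok in image]
        sequence += [("action", t, tok) for tok in action]
    return sequence

# Two time steps with hypothetical values: one text token, two image tokens
# and one scalar action (for example a speed) per step.
sequence = interleave(
    text_tokens=[[7], [7]],
    image_tokens=[[0, 1], [2, 3]],
    action_tokens=[[0.5], [0.4]],
)
for item in sequence:
    print(item)
```

Tagging each entry with its time step keeps the modalities aligned, so a sequence model can tell which text and action belong to which frame.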

Once the inputs are arranged, the key component comes in: the world model.

As an autoregressive Transformer, it predicts the next set of image tokens in the sequence. In doing so it considers not only the previous image tokens but also the context provided by the text and actions.

The content generated by the model therefore stays consistent not only with the preceding images, but also with the text and actions.
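The autoregressive loop itself is simple to sketch. In the example below, `predict_next` is a deterministic toy rule standing in for the Transformer; it is purely hypothetical and learns nothing. The point is the control flow: each predicted image token is appended to the context and conditions the next prediction, and the text and action entries in the context influence the result.

```python
# Toy autoregressive rollout. `predict_next` is a made-up stand-in for the
# world model, chosen only so that the example is deterministic.

def predict_next(context, vocab_size):
    """Pick the next image token from the last image token plus conditioning."""
    image_history = [tok for kind, tok in context if kind == "image"]
    conditioning = sum(tok for kind, tok in context if kind != "image")
    last = image_history[-1] if image_history else 0
    return (last + 1 + conditioning) % vocab_size

def rollout(context, steps, vocab_size=8):
    """Generate `steps` image tokens, feeding each prediction back in."""
    context = list(context)
    generated = []
    for _ in range(steps):
        token = predict_next(context, vocab_size)
        generated.append(token)
        context.append(("image", token))  # the prediction becomes context
    return generated

# Same image history, two different (hypothetical) action values.
steer_a = [("text", 2), ("image", 0), ("image", 1), ("action", 1)]
steer_b = [("text", 2), ("image", 0), ("image", 1), ("action", 0)]

print(rollout(steer_a, steps=3))
print(rollout(steer_b, steps=3))
```

Changing only the action entry produces a different continuation, which is the toy analogue of steering the generated video through text or action inputs.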

According to the team, the world model in GAIA-1 has 6.5 billion parameters and was trained on 64 A100s for 15 days.

Finally, a video decoder and a video diffusion model convert these tokens back into video.

This step determines the semantic quality, image accuracy and temporal consistency of the video.

GAIA-1's video decoder has a scale of 2.6 billion parameters and was trained for 15 days using 32 A100s.
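As a back-of-the-envelope check on the figures quoted above, the snippet below adds up the two component sizes and converts the training runs into GPU-days. It assumes every GPU was in use for the full 15 days, which the article does not state, so the GPU-day numbers are rough upper-level estimates rather than reported values.

```python
# Figures quoted in the article.
WORLD_MODEL_PARAMS = 6.5e9
VIDEO_DECODER_PARAMS = 2.6e9

# Assumption: all GPUs were busy for the whole training period.
world_model_gpu_days = 64 * 15
video_decoder_gpu_days = 32 * 15

total_params = WORLD_MODEL_PARAMS + VIDEO_DECODER_PARAMS
total_gpu_days = world_model_gpu_days + video_decoder_gpu_days

print(f"combined parameters: {total_params / 1e9:.1f} billion")
print(f"world model:   {world_model_gpu_days} A100-days")
print(f"video decoder: {video_decoder_gpu_days} A100-days")
print(f"total:         {total_gpu_days} A100-days")
```

The two components together come to about 9.1 billion parameters, in line with the roughly 9 billion quoted for the model as a whole.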

It is worth mentioning that GAIA-1 is not only similar in principle to large language models, but also shares their property that generation quality improves as the model scales up.

The team compared the early version released in June with the latest one. The latter is 480 times larger than the former.

You can see at a glance that the video has improved significantly in detail, resolution and more.

GAIA-1 also matters for practical applications. Its creators say it will change the rules of autonomous driving.

The reasons come from three aspects:

- Safety

- Comprehensive training data

- Long-tail scenarios

First of all, in terms of safety, the world model can simulate the future and give AI the ability to be aware of its own decisions, which is critical to the safety of autonomous driving.

Secondly, training data is critical for autonomous driving. Generated data is safer, cheaper, and infinitely scalable.

Finally, generative AI can address a major challenge facing autonomous driving: long-tail scenarios. It can cover more edge cases, such as a pedestrian crossing the road in foggy weather. This will further improve the performance of autonomous driving.

Who is Wayve?

GAIA-1 comes from British autonomous driving startup Wayve.

Wayve was founded in 2017. Its investors include Microsoft, and it has reached unicorn valuation.

The founders are current CEO Alex Kendall and Amar Shah (who no longer appears on the leadership page of the company's official website). Both graduated from Cambridge University and hold doctorates in machine learning.

On the technical roadmap, Wayve, like Tesla, advocates a vision-only approach based on cameras. It abandoned high-precision maps very early and has firmly followed the "instant perception" route.

Not long ago, another large model LINGO-1 released by the team also caused a sensation.

This self-driving model can generate explanations in real time while driving, which further improves the interpretability of the model.

In March this year, Bill Gates also took a test ride in one of Wayve's self-driving cars.

Paper address: https://arxiv.org/abs/2309.17080
